[2509.01322] LongCat-Flash Technical Report

View a PDF of the paper titled LongCat-Flash Technical Report, by Meituan LongCat Team and 181 other authors


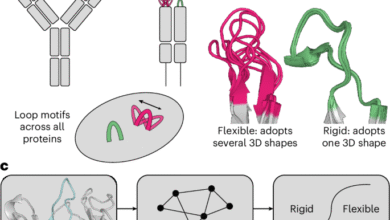

Abstract: We introduce LongCat-Flash, a 560-billion-parameter Mixture-of-Experts (MoE) language model designed for both computational efficiency and advanced agentic capabilities. Stemming from the need for scalable efficiency, LongCat-Flash adopts two novel designs: (a) Zero-computation Experts, which enable dynamic allocation of the computational budget and activate 18.6B-31.3B parameters (27B on average) per token depending on contextual demands, optimizing resource usage. (b) Shortcut-connected MoE, which enlarges the computation-communication overlap window, demonstrating notable gains in inference efficiency and throughput compared to models of a comparable scale. We develop a comprehensive scaling framework for large models that combines hyperparameter transfer, model-growth initialization, a multi-pronged stability suite, and deterministic computation to achieve stable and reproducible training. Notably, leveraging the synergy between scalable architectural design and infrastructure efforts, we complete model training on more than 20 trillion tokens within 30 days, while achieving over 100 tokens per second (TPS) for inference at a cost of $0.70 per million output tokens. To advance LongCat-Flash towards agentic intelligence, we conduct large-scale pre-training on optimized mixtures, followed by targeted mid- and post-training on reasoning, code, and instructions, with further augmentation from synthetic data and tool-use tasks. Comprehensive evaluations demonstrate that, as a non-thinking foundation model, LongCat-Flash delivers highly competitive performance among other leading models, with exceptional strengths in agentic tasks. The model checkpoint of LongCat-Flash is open-sourced to foster community research.
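The idea behind design (a) can be illustrated with a toy router. The following is a minimal sketch, not the report's implementation: it assumes a zero-computation expert simply returns its input unchanged, and that the router picks the top-k experts from a pool containing both ordinary feed-forward experts and zero-computation experts. All names and sizes (`NUM_FFN_EXPERTS`, `NUM_ZERO_EXPERTS`, `TOP_K`, `DIM`, `route`) are hypothetical and chosen only for illustration.

```python
import math
import random

NUM_FFN_EXPERTS = 4   # hypothetical number of ordinary (computing) experts
NUM_ZERO_EXPERTS = 2  # hypothetical number of zero-computation experts
TOP_K = 2             # hypothetical number of experts selected per token
DIM = 4               # toy hidden size


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_ffn_expert(rng):
    """Toy computing expert: one DIM x DIM linear map standing in for an FFN."""
    weight = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weight]

    return expert


def zero_expert(x):
    """Assumed zero-computation expert: pass the input through untouched."""
    return list(x)


def route(token, router_logits, experts):
    """Top-k routing over a mixed pool of experts.

    Returns the gated output and how many computing experts were run,
    which is the part of the per-token budget that varies.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:TOP_K]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * DIM
    ffn_used = 0
    for i in chosen:
        gate = probs[i] / norm  # renormalize gates over the selected experts
        if i < NUM_FFN_EXPERTS:
            ffn_used += 1       # only these experts cost matrix multiplies
        y = experts[i](token)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, ffn_used


rng = random.Random(0)
experts = [make_ffn_expert(rng) for _ in range(NUM_FFN_EXPERTS)]
experts += [zero_expert] * NUM_ZERO_EXPERTS

token = [1.0, 2.0, 3.0, 4.0]
# Router favors the two zero-computation experts: no FFN work for this token.
easy_out, easy_ffn = route(token, [0, 0, 0, 0, 5, 5], experts)
# Router favors two computing experts: full FFN work for this token.
hard_out, hard_ffn = route(token, [5, 5, 0, 0, 0, 0], experts)
print(easy_ffn, hard_ffn)  # prints: 0 2
```

In this sketch the number of computing experts that run varies per token, which is one way a model could end up with a range of activated parameters rather than a fixed count. How LongCat-Flash actually balances that budget is described in the report itself, not here.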

LongCat Chat: this https URL

Hugging Face: this https URL

GitHub: this https URL

Submission history

From: Jiaqi Zhang

[v1] Monday, 1 Sep 2025 10:05:45 UTC (7,133 KB)

[v2] Friday, 19 Sep 2025 13:34:47 UTC (7,127 KB)


2025-09-22 04:00:00