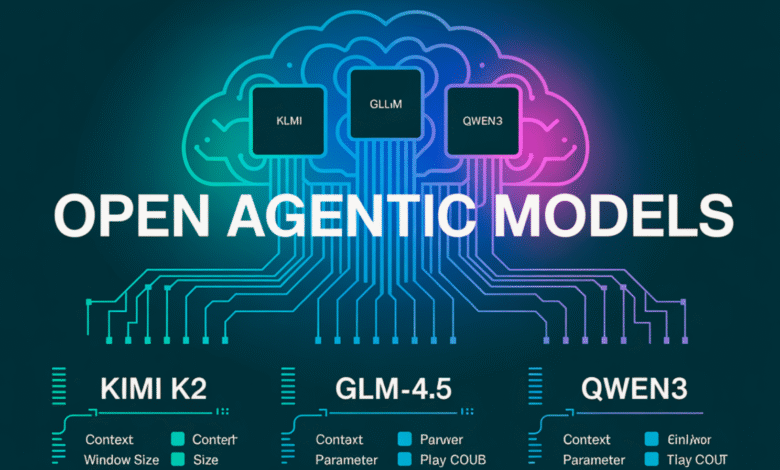

The Best Chinese Open Agentic/Reasoning Models (2025): Expanded Review, Comparative Insights & Use Cases

China continues to set the pace in open-source large language model innovation, especially for agentic architectures and deep reasoning. Below is a comprehensive, up-to-date guide to the best Chinese open agentic/reasoning models, expanded with the newest and most influential entrants.

1. Kimi K2 (Moonshot AI)

- Profile: Mixture-of-experts architecture, up to 128K context, strong agentic ability, and bilingual (Chinese/English) fluency.

- Strengths:

  - High benchmark performance in reasoning, coding, mathematics, and long-document workflows.

  - Well-rounded agentic skills: tool use, multi-step automation, protocol adherence.

- Use cases: General-purpose agentic workflows, document intelligence, code generation, multilingual enterprise deployments.

- Why choose: The most balanced all-rounder for open agentic systems.

2. GLM-4.5 (Zhipu AI)

- Profile: 355B total parameters, native agentic design, long-context support.

- Strengths:

  - Purpose-built for complex agent execution, workflow automation, and tool orchestration.

  - MIT-licensed, with an established ecosystem (700,000+ developers) and rapid community adoption.

- Use cases: Multi-agent applications, cost-effective autonomous agents, research that requires agent-native logic.

- Why choose: For building deeply agentic, tool-integrated open LLM applications at scale.

3. Qwen3 / Qwen3-Coder (Alibaba)

- Profile: Next-generation mixture-of-experts, control over reasoning depth/modes, dominant multilingual model (119+ languages), repository-scale coding specialist.

- Strengths:

  - Dynamic “thinking/non-thinking” switching, advanced function calling, and top scores on math, code, and tool-use tasks.

  - Qwen3-Coder: Handles up to 1M tokens of code and excels at step-by-step repository analysis and complex dev workflows.

- Use cases: Multilingual tools, global SaaS, multimodal logic/coding applications, Chinese-centric dev teams.

- Why choose: Fine-grained control, the best multilingual support, a world-class code agent.
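
The “thinking/non-thinking” switch can be illustrated with a small prompt-building helper. This is a minimal sketch, not Qwen's own client code: it assumes the `/think` and `/no_think` soft-switch tags described in Qwen3's usage notes (verify them against the model card for the checkpoint you use), and the `needs_deep_reasoning` heuristic and its keyword list are purely hypothetical.

```python
from typing import Dict, List

# Soft-switch tags as described in Qwen3's usage notes (assumption: verify
# against the model card of the exact checkpoint you deploy).
THINK_TAG = "/think"
NO_THINK_TAG = "/no_think"


def build_messages(prompt: str, deep_reasoning: bool) -> List[Dict[str, str]]:
    """Return a chat message list with a thinking-mode tag appended to the prompt."""
    tag = THINK_TAG if deep_reasoning else NO_THINK_TAG
    return [{"role": "user", "content": f"{prompt} {tag}"}]


def needs_deep_reasoning(prompt: str) -> bool:
    """Hypothetical heuristic: long or proof/debug-style prompts get thinking mode."""
    keywords = ("prove", "debug", "derive", "step by step")
    lowered = prompt.lower()
    return len(prompt) > 200 or any(k in lowered for k in keywords)


if __name__ == "__main__":
    for p in ("What is the capital of France?", "Prove that sqrt(2) is irrational."):
        print(build_messages(p, needs_deep_reasoning(p)))
```

Routing cheap questions to the non-thinking mode and reserving the thinking mode for harder prompts is one way to trade latency against reasoning depth; the threshold and keywords would need tuning for a real workload.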

4. DeepSeek-R1 / V3

- Profile: Reasoning-first, multi-stage RLHF training, 37B activated parameters per query (R1); V3 scales to 671B for world-class math/code.

- Strengths:

  - State-of-the-art logic and chain-of-thought reasoning that surpasses most Western competitors on scientific tasks.

  - “Deep research” protocols for fully autonomous searching and synthesis of information.

- Use cases: Technical/scientific research, factual analytics, environments that value interpretability.

- Why choose: Maximum reasoning accuracy, with agentic extensions for research and planning.
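
To put the parameter figures in perspective, the short calculation below shows what share of a mixture-of-experts model is active per query. It is only a sketch: it assumes the 37B and 671B figures quoted above describe the activated and total parameters of the same sparse design, and the function name is illustrative.

```python
def active_fraction(active_params_b: float, total_params_b: float) -> float:
    """Share of parameters used per query in a sparse mixture-of-experts model."""
    if total_params_b <= 0:
        raise ValueError("total_params_b must be positive")
    return active_params_b / total_params_b


if __name__ == "__main__":
    # Figures quoted in the profile above, in billions of parameters.
    frac = active_fraction(37, 671)
    print(f"{frac:.1%} of parameters active per query")  # prints "5.5% of ..."
```

Under that assumption, only about one parameter in eighteen participates in any single forward pass, which is why per-query compute tracks the activated count rather than the total.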

5. Wu Dao 3.0 (BAAI)

- Profile: Modular family (AquilaChat, EVA, AquilaCode), open-source, with strong long-context and multimodal capabilities.

- Strengths:

  - Handles both text and images and supports multilingual workflows, making it well suited to startups and low-compute users.

- Use cases: Agentic deployment, small and medium-sized enterprises, flexible application development.

- Why choose: The most practical and modular option for multimodal and smaller-scale agentic tasks.

6. ChatGLM (Zhipu AI)

- Profile: Edge-ready, bilingual, context windows up to 1M tokens, quantized for low-memory devices.

- Strengths:

  - Best for on-device agentic applications, long-document reasoning, and mobile deployments.

- Use cases: Local/government deployments, privacy-sensitive scenarios, resource-constrained environments.

- Why choose: Flexible scaling from the cloud to the edge/mobile, strong bilingual proficiency.
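
Why quantization matters for low-memory devices comes down to simple arithmetic on weight storage. The sketch below is a rough estimate only: the 6-billion-parameter count is a hypothetical stand-in (the profile above does not state a size), and the figure covers weights alone, ignoring the KV cache, activations, and runtime overhead.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold model weights, in GiB."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / (1024 ** 3)


if __name__ == "__main__":
    PARAMS = 6e9  # hypothetical parameter count, not taken from the article
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_memory_gib(PARAMS, bits):.1f} GiB")
    # 16-bit weights: 11.2 GiB
    #  8-bit weights: 5.6 GiB
    #  4-bit weights: 2.8 GiB
```

Dropping from 16-bit to 4-bit weights cuts storage to a quarter, which is roughly the difference between needing a workstation GPU and fitting on a laptop or high-end phone.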

7. Manus & OpenManus (Monica AI / Community)

- Profile: China's new benchmark for general AI agents: independent reasoning, real-world tool use, and agentic orchestration. OpenManus provides agentic workflows built on many underlying models (Llama, GLM, DeepSeek variants).

- Strengths:

  - Natural autonomous behavior: web search, travel planning, research writing, voice commands.

  - OpenManus is highly modular, combining Chinese open or proprietary LLMs for tailored agentic tasks.

- Use cases: Agents that complete real-world tasks, multi-agent orchestration, open-source agentic frameworks.

- Why choose: The first major step toward AGI-like agentic applications in China.

8. Doubao 1.5 Pro

- Profile: Known for factual consistency and well-structured reasoning, with a large context window (expected 1M+ tokens).

- Strengths:

  - Real-world problem solving and a superior logical structure that scales to multi-enterprise deployments.

- Use cases: Scenarios that emphasize logical rigor, enterprise-level automation.

- Why choose: Enhanced reasoning and logic, strong in scalable business environments.

9. Baichuan, StepFun, MiniMax, 01.AI

- Profile: Among the “Six Tigers” of Chinese open AI (per MIT Technology Review), each offering strong reasoning/agentic capabilities in its own domain (StepFun/AIGC, MiniMax/memory, Baichuan/multilingual legal).

- Strengths:

  - Diverse applications: from conversational agents to domain-specific logic in law, finance, and science.

- Why choose: Pick according to sector-specific requirements, especially for high-value business applications.

Comparative table

| Model | Best for | Agentic? | Multilingual? | Context window | Coding | Reasoning | Unique features |
|---|---|---|---|---|---|---|---|
| Kimi K2 | All-purpose agentic | Yes | Yes | 128K | High | High | Mixture-of-experts, fast, open |
| GLM-4.5 | Agent-native applications | Yes | Yes | 128K+ | High | High | Native task/planning API |
| Qwen3 | Control, multilingual, SaaS | Yes | Yes (119+) | 32K–1M | Top | Top | Fast mode switching |
| Qwen3-Coder | Repo-scale coding | Yes | Yes | Up to 1M | Top | High | Step-by-step repo analysis |
| DeepSeek-R1/V3 | Reasoning/math/science | Some | Yes | Large | Top | Highest | RLHF, agentic science, V3: 671B |
| Wu Dao 3.0 | Modular, multimodal, SMEs | Yes | Yes | Large | Mid | High | Text/image, code, modular builds |
| ChatGLM | Edge/mobile agentic use | Yes | Yes | 1M | Mid | High | Quantized, resource-efficient |
| Manus | Autonomous/voice agents | Yes | Yes | Large | Task-specific | Top | Voice/smartphone, real-world AGI |
| Doubao 1.5 Pro | Logic-heavy enterprise | Yes | Yes | 1M+ | Mid | Top | 1M+ tokens, logic structure |
| Baichuan/etc. | Industry-specific logic | Yes | Yes | Varies | Varies | High | Sector specialization |
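
One way to put the table to work is to encode it as data and filter by requirements. The snippet below is an illustrative sketch, not a benchmark: the ratings are copied from the table, “K” and “M” are read as thousands and millions of tokens, ranges use their upper bound, rows listed as “Large” or “Varies” are stored as `None`, and the `RANK` ordering and `shortlist` helper are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional

# Assumed ordering of the table's qualitative ratings (unranked labels map to 0).
RANK = {"Varies": 0, "Task": 0, "Mid": 1, "High": 2, "Top": 3, "Highest": 4}


@dataclass(frozen=True)
class ModelRow:
    name: str
    max_context_tokens: Optional[int]  # None where the table gives no number
    coding: str
    reasoning: str


TABLE = [
    ModelRow("Kimi K2", 128_000, "High", "High"),
    ModelRow("GLM-4.5", 128_000, "High", "High"),
    ModelRow("Qwen3", 1_000_000, "Top", "Top"),  # upper bound of 32K-1M
    ModelRow("Qwen3-Coder", 1_000_000, "Top", "High"),
    ModelRow("DeepSeek-R1/V3", None, "Top", "Highest"),
    ModelRow("Wu Dao 3.0", None, "Mid", "High"),
    ModelRow("ChatGLM", 1_000_000, "Mid", "High"),
    ModelRow("Manus", None, "Task", "Top"),
    ModelRow("Doubao 1.5 Pro", 1_000_000, "Mid", "Top"),
    ModelRow("Baichuan/etc.", None, "Varies", "High"),
]


def shortlist(min_context: int, min_coding: str = "Mid",
              min_reasoning: str = "Mid") -> List[str]:
    """Names of models with a stated context window that meet every threshold."""
    return [
        row.name
        for row in TABLE
        if row.max_context_tokens is not None
        and row.max_context_tokens >= min_context
        and RANK[row.coding] >= RANK[min_coding]
        and RANK[row.reasoning] >= RANK[min_reasoning]
    ]


if __name__ == "__main__":
    print(shortlist(500_000, min_coding="Top"))  # ['Qwen3', 'Qwen3-Coder']
```

Models without a numeric context window are excluded rather than guessed at, so a shortlist like this is a starting point for evaluation, not a final ranking.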

Key takeaways and when to use which model

- Kimi K2: The best all-rounder if you want balanced agentic power and reasoning, long context, and broad language support.

- GLM-4.5: Agent-native, great for autonomous task applications or tool orchestration; an open-source ecosystem leader.

- Qwen3/Qwen3-Coder: Superior for agile control, multilingual/enterprise tasks, and high-level code agents.

- DeepSeek-R1/V3: The gold standard for chain-of-thought reasoning, math/science, and research-grade logic.

- Wu Dao 3.0: The most practical for small and medium-sized enterprises and startups, especially for multimodal (text/image/code) solutions.

- ChatGLM/Manus/OpenManus: Field deployment, privacy, and truly autonomous agents; recommended for real-world use or collaborative multi-agent tasks.

- Doubao 1.5 Pro/Baichuan/Six Tigers: Consider for sector-specific deployments, or when factual consistency and specialized logic are critical.

Michal Sutter is a data science professional with a master's degree in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at turning complex datasets into actionable insights.

2025-08-11 08:02:00