A Coding Guide to Design and Orchestrate Advanced ReAct-Based Multi-Agent Workflows with AgentScope and OpenAI

In this tutorial, we build an advanced multi-agent incident response system using AgentScope. We coordinate multiple ReAct agents, each with a clearly defined role (routing, triage, analysis, report writing, and review), and connect them through structured routing and a shared message hub. By combining OpenAI models, lightweight tool calling, and a simple internal runbook, we show how to compose realistic agent workflows in pure Python, without heavy infrastructure or fragile glue code. Check out the full code here.

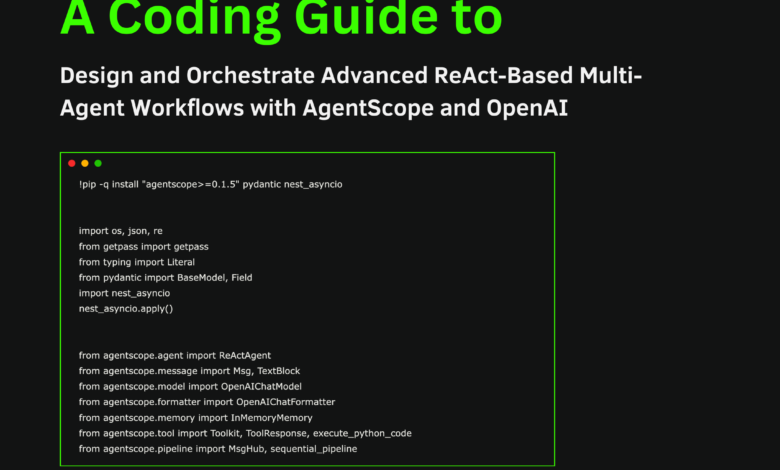

!pip -q install "agentscope>=1.0.0" pydantic nest_asyncio

import os, json, re
from getpass import getpass
from typing import Literal

from pydantic import BaseModel, Field

import nest_asyncio
nest_asyncio.apply()  # allow nested event-loop use inside Colab/Jupyter

from agentscope.agent import ReActAgent
from agentscope.message import Msg, TextBlock
from agentscope.model import OpenAIChatModel
from agentscope.formatter import OpenAIChatFormatter
from agentscope.memory import InMemoryMemory
from agentscope.tool import Toolkit, ToolResponse, execute_python_code
from agentscope.pipeline import MsgHub, sequential_pipeline

# Prompt for the key only if it is not already set in the environment.
if not os.environ.get("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass("Enter OPENAI_API_KEY (hidden): ")

# The model name can be overridden through the OPENAI_MODEL environment variable.
OPENAI_MODEL = os.environ.get("OPENAI_MODEL", "gpt-4o-mini")

We set up the execution environment and install the required dependencies so the tutorial runs reliably on Google Colab. We read the OpenAI API key through a hidden prompt (skipped if the key is already set) and import the core AgentScope components shared by all agents: the ReAct agent class, message types, the OpenAI chat model and formatter, in-memory storage, the toolkit, and the pipeline utilities.
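The final cell of this tutorial uses top-level `await`, which works in Colab and Jupyter because the notebook already runs an event loop; `nest_asyncio.apply()` additionally lets code call `asyncio.run` or `run_until_complete` while that loop is running. A plain `.py` script has no running loop, so a minimal sketch, assuming we wrap the workflow in an async entry point, drives it with `asyncio.run`. Here `fake_workflow` is a hypothetical stand-in for `run_demo`, so the snippet runs without AgentScope or an API key.

```python
import asyncio


async def fake_workflow(request: str) -> dict:
    """Hypothetical stand-in for run_demo: awaits once, then returns a result dict."""
    await asyncio.sleep(0)  # simulate an awaited model call
    return {"route": {"lane": "triage"}, "request": request}


async def main() -> dict:
    # The async entry point that awaits the workflow.
    return await fake_workflow("Checkout is returning 5xx errors.")


if __name__ == "__main__":
    # In a script there is no running event loop, so asyncio.run creates one.
    print(asyncio.run(main()))
```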

RUNBOOK = [
    {"id": "P0", "title": "Severity policy", "text": "P0 critical outage, P1 major degradation, P2 minor issue"},
    {"id": "IR1", "title": "Incident Triage Checklist", "text": "Assess blast radius, timeline, deployments, errors, mitigation"},
    {"id": "SEC7", "title": "Phishing Escalation", "text": "Disable account, reset sessions, block sender, preserve evidence"},
]

def _score(q, d):
    # Fraction of the entry's tokens that also appear in the query.
    q = set(re.findall(r"[a-z0-9]+", q.lower()))
    d = re.findall(r"[a-z0-9]+", d.lower())
    return sum(1 for w in d if w in q) / max(1, len(d))

async def search_runbook(query: str, top_k: int = 2) -> ToolResponse:
    """Search the internal incident runbook for entries relevant to a query.

    Args:
        query (str): Free-text description of the incident or question.
        top_k (int): Number of runbook entries to return.
    """
    # Join title and text with a space so the boundary words are not fused into one token.
    ranked = sorted(RUNBOOK, key=lambda r: _score(query, r["title"] + " " + r["text"]), reverse=True)[: max(1, int(top_k))]
    text = "\n\n".join(f"[{r['id']}] {r['title']}\n{r['text']}" for r in ranked)
    return ToolResponse(content=[TextBlock(type="text", text=text)])

toolkit = Toolkit()
toolkit.register_tool_function(search_runbook)
toolkit.register_tool_function(execute_python_code)

We define a lightweight internal runbook and implement a simple keyword-overlap search tool on top of it; the docstring gives the model a description of the tool and its arguments. We register this tool together with AgentScope's built-in Python execution tool, so agents can retrieve policy knowledge or compute results on the fly. This shows how we give agents capabilities that go beyond purely linguistic reasoning.
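To see how the `_score` heuristic ranks entries, here is a standalone sketch that reproduces it with only the standard library. The score is the fraction of an entry's tokens that also occur in the query, so short entries with several matching words rank highest. The `rank` helper and the sample query are illustrative additions, not part of the original tool.

```python
import re

RUNBOOK = [
    {"id": "P0", "title": "Severity policy", "text": "P0 critical outage, P1 major degradation, P2 minor issue"},
    {"id": "IR1", "title": "Incident Triage Checklist", "text": "Assess blast radius, timeline, deployments, errors, mitigation"},
    {"id": "SEC7", "title": "Phishing Escalation", "text": "Disable account, reset sessions, block sender, preserve evidence"},
]

TOKEN = re.compile(r"[a-z0-9]+")


def score(query: str, doc: str) -> float:
    """Fraction of the document's tokens that also occur in the query."""
    q_tokens = set(TOKEN.findall(query.lower()))
    d_tokens = TOKEN.findall(doc.lower())
    return sum(1 for w in d_tokens if w in q_tokens) / max(1, len(d_tokens))


def rank(query: str, top_k: int = 2) -> list:
    """Return the ids of the top_k best-matching runbook entries (ties keep runbook order)."""
    ranked = sorted(
        RUNBOOK,
        key=lambda r: score(query, r["title"] + " " + r["text"]),
        reverse=True,
    )
    return [r["id"] for r in ranked[: max(1, top_k)]]


print(rank("phishing email: should we reset sessions and block the sender?"))
```

Because `sorted` stays stable even with `reverse=True`, entries that tie (here, both with a score of zero) keep their runbook order, so the phishing entry comes first and the severity policy fills the second slot.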

def make_model():
    # Each agent gets its own model client; a low temperature keeps outputs more consistent.
    return OpenAIChatModel(
        model_name=OPENAI_MODEL,
        api_key=os.environ["OPENAI_API_KEY"],
        generate_kwargs={"temperature": 0.2},
    )

class Route(BaseModel):
    lane: Literal["triage", "analysis", "report", "unknown"] = Field(..., description="Which specialist lane should handle the request.")
    goal: str = Field(..., description="One-sentence summary of what the user wants.")

router = ReActAgent(
    name="Router",
    sys_prompt="Route the request to triage, analysis, or report and output structured JSON only.",
    model=make_model(),
    formatter=OpenAIChatFormatter(),
    memory=InMemoryMemory(),
)

triager = ReActAgent(
    name="Triager",
    sys_prompt="Classify severity and immediate actions using runbook search when useful.",
    model=make_model(),
    formatter=OpenAIChatFormatter(),
    memory=InMemoryMemory(),
    toolkit=toolkit,
)

analyst = ReActAgent(
    name="Analyst",
    sys_prompt="Analyze logs and compute summaries using python tool when helpful.",
    model=make_model(),
    formatter=OpenAIChatFormatter(),
    memory=InMemoryMemory(),
    toolkit=toolkit,
)

writer = ReActAgent(
    name="Writer",
    sys_prompt="Write a concise incident report with clear structure.",
    model=make_model(),
    formatter=OpenAIChatFormatter(),
    memory=InMemoryMemory(),
)

reviewer = ReActAgent(
    name="Reviewer",
    sys_prompt="Critique and improve the report with concrete fixes.",
    model=make_model(),
    formatter=OpenAIChatFormatter(),
    memory=InMemoryMemory(),
)

We build several specialized ReAct agents plus a router agent that decides how to handle each user request. The Triager and Analyst share the toolkit (runbook search and Python execution), while the Writer and Reviewer work from text alone, which keeps each agent's responsibilities clearly separated.
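The `if`/`elif` chain in `run_demo` works, but a dispatch table keyed by lane is easier to extend and forces unexpected labels to a safe fallback. The sketch below assumes the router's structured output arrives as a plain dict, as `route_msg.metadata` does in this tutorial, and uses hypothetical handler functions in place of the real agents so it runs without AgentScope or an API key.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Literal, Optional, get_args

Lane = Literal["triage", "analysis", "report", "unknown"]
VALID_LANES = set(get_args(Lane))


# Hypothetical handlers standing in for the Triager, Analyst, and Writer + Reviewer paths.
async def handle_triage(request: str) -> str:
    return f"triage: {request}"


async def handle_analysis(request: str) -> str:
    return f"analysis: {request}"


async def handle_report(request: str) -> str:
    return f"report: {request}"


async def handle_unknown(request: str) -> str:
    return "Could not route request."


HANDLERS: Dict[str, Callable[[str], Awaitable[str]]] = {
    "triage": handle_triage,
    "analysis": handle_analysis,
    "report": handle_report,
    "unknown": handle_unknown,
}


def pick_lane(metadata: Optional[dict]) -> str:
    """Read the lane from structured output; fall back to 'unknown' if missing or invalid."""
    lane = (metadata or {}).get("lane", "unknown")
    return lane if isinstance(lane, str) and lane in VALID_LANES else "unknown"


async def dispatch(metadata: Optional[dict], request: str) -> str:
    """Look up the handler for the chosen lane and await it."""
    return await HANDLERS[pick_lane(metadata)](request)


print(asyncio.run(dispatch({"lane": "analysis", "goal": "explain 5xx"}, "checkout 5xx")))
```

Adding a new lane then means adding one `Literal` value and one table entry, rather than another branch.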

LOGS = """timestamp,service,status,latency_ms,error
2025-12-18T12:00:00Z,checkout,200,180,false
2025-12-18T12:00:05Z,checkout,500,900,true
2025-12-18T12:00:10Z,auth,200,120,false
2025-12-18T12:00:12Z,checkout,502,1100,true
2025-12-18T12:00:20Z,search,200,140,false
2025-12-18T12:00:25Z,checkout,500,950,true
"""

def msg_text(m: Msg) -> str:
    # Normalize a message's text content into a single plain string.
    blocks = m.get_content_blocks("text")
    if blocks is None:
        return ""
    if isinstance(blocks, str):
        return blocks
    if isinstance(blocks, list):
        # Text blocks are dicts like {"type": "text", "text": ...}; keep only the text.
        return "\n".join(b.get("text", "") if isinstance(b, dict) else str(b) for b in blocks)
    return str(blocks)

We define a small sample log dataset and a utility function that normalizes agent output into clean text. This lets downstream agents consume and refine earlier responses reliably and keeps communication between agents robust and predictable.
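The Analyst is prompted to compute summaries from `LOGS` with the Python execution tool. As a rough illustration of the kind of code it might run (a hand-written sketch, not output captured from the agent), the standard library alone can parse the CSV and report, per service, the request count, error rate, and mean latency.

```python
import csv
import io
from collections import defaultdict

LOGS = """timestamp,service,status,latency_ms,error
2025-12-18T12:00:00Z,checkout,200,180,false
2025-12-18T12:00:05Z,checkout,500,900,true
2025-12-18T12:00:10Z,auth,200,120,false
2025-12-18T12:00:12Z,checkout,502,1100,true
2025-12-18T12:00:20Z,search,200,140,false
2025-12-18T12:00:25Z,checkout,500,950,true
"""


def summarize(logs: str) -> dict:
    """Per-service request count, error rate, and mean latency in milliseconds."""
    stats = defaultdict(lambda: {"requests": 0, "errors": 0, "latency_total": 0})
    for row in csv.DictReader(io.StringIO(logs)):
        s = stats[row["service"]]
        s["requests"] += 1
        s["errors"] += row["error"].strip().lower() == "true"  # bool counts as 0 or 1
        s["latency_total"] += int(row["latency_ms"])
    return {
        service: {
            "requests": s["requests"],
            "error_rate": round(s["errors"] / s["requests"], 2),
            "mean_latency_ms": round(s["latency_total"] / s["requests"], 1),
        }
        for service, s in stats.items()
    }


for service, summary in summarize(LOGS).items():
    print(service, summary)
```

On this sample, checkout stands out with three failing requests out of four and a mean latency several times higher than the other services, which is the signal the triage and report steps build on.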

async def run_demo(user_request: str):
    # 1) Ask the router for a structured routing decision (stored in the reply's metadata).
    route_msg = await router(Msg("user", user_request, "user"), structured_model=Route)
    lane = (route_msg.metadata or {}).get("lane", "unknown")

    # 2) Hand the request to the matching specialist lane.
    if lane == "triage":
        first = await triager(Msg("user", user_request, "user"))
    elif lane == "analysis":
        first = await analyst(Msg("user", user_request + "\n\nLogs:\n" + LOGS, "user"))
    elif lane == "report":
        draft = await writer(Msg("user", user_request, "user"))
        first = await reviewer(Msg("user", "Review and improve:\n\n" + msg_text(draft), "user"))
    else:
        first = Msg("system", "Could not route request.", "system")

    # 3) Collaborative refinement: inside MsgHub, each agent's reply is broadcast to the others.
    #    The announcement carries the request, logs, and first output so every participant has context.
    async with MsgHub(
        participants=[triager, analyst, writer, reviewer],
        announcement=Msg(
            "Host",
            "Refine the final answer collaboratively.\n\n"
            f"Request:\n{user_request}\n\nLogs:\n{LOGS}\n\nInitial output:\n{msg_text(first)}",
            "assistant",
        ),
    ):
        final = await sequential_pipeline([triager, analyst, writer, reviewer])

    # Return the refined answer as well, instead of discarding the pipeline's result.
    return {
        "route": route_msg.metadata,
        "initial_output": msg_text(first),
        "final_output": msg_text(final),
    }

# Top-level await works in notebooks such as Colab and Jupyter.
result = await run_demo(
    "We see repeated 5xx errors in checkout. Classify severity, analyze logs, and produce an incident report."
)

print(json.dumps(result, indent=2))

We orchestrate the entire workflow: we route the request, run the matching specialist agent, and then run a collaborative refinement pass inside the message hub, where the Triager, Analyst, Writer, and Reviewer take turns improving the answer before it is returned to the user. This step ties all the previous components together into a single, cohesive agent pipeline.
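Inside `MsgHub`, as we understand AgentScope's semantics, each participant's reply is broadcast to the other participants, while `sequential_pipeline` passes each agent's output as the input of the next. The toy model below is not AgentScope code: it is a minimal sketch of that pattern with hypothetical `ToyMsg` and `ToyAgent` classes and canned replies, useful for reasoning about who sees what without calling a model. As in the tutorial, the pipeline starts with no message, so the first agent relies on the announcement for context.

```python
import asyncio
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyMsg:
    name: str
    content: str


@dataclass(eq=False)
class ToyAgent:
    """Hypothetical agent: records every message it sees and replies with a canned line."""
    name: str
    memory: List[ToyMsg] = field(default_factory=list)
    peers: List["ToyAgent"] = field(default_factory=list)

    def observe(self, msg: ToyMsg) -> None:
        # Store a message once, even if it arrives both directly and by broadcast.
        if all(m is not msg for m in self.memory):
            self.memory.append(msg)

    async def __call__(self, msg: Optional[ToyMsg] = None) -> ToyMsg:
        if msg is not None:
            self.observe(msg)
        reply = ToyMsg(self.name, f"{self.name} saw {len(self.memory)} message(s)")
        self.observe(reply)
        for peer in self.peers:  # broadcast the reply, as a hub would
            peer.observe(reply)
        return reply


async def toy_hub_pipeline(agents: List[ToyAgent], announcement: ToyMsg) -> ToyMsg:
    """Broadcast an announcement, then run agents in order, chaining each reply to the next."""
    for agent in agents:
        agent.peers = [a for a in agents if a is not agent]
        agent.observe(announcement)
    msg: Optional[ToyMsg] = None  # like sequential_pipeline called without an initial message
    for agent in agents:
        msg = await agent(msg)
    return msg


agents = [ToyAgent(n) for n in ("Triager", "Analyst", "Writer", "Reviewer")]
final = asyncio.run(toy_hub_pipeline(agents, ToyMsg("Host", "Refine the final answer collaboratively.")))
print(final.content)
```

By the end, every agent holds the announcement plus all four replies, which is why later agents in the sequence can build on earlier ones.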

In conclusion, we have shown how AgentScope lets us design robust, modular, and collaborative agent systems that go beyond single-turn prompting. We routed tasks dynamically, called tools only when they were needed, and refined deliverables through coordination across multiple agents, all within a clean, reproducible Colab setup. This pattern shows how we can move from simple agent experiments to production-style workflows while keeping our agentic AI applications clear, controllable, and scalable.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of AI for social good. His most recent endeavor is the launch of the AI media platform Marktechpost, which offers in-depth coverage of machine learning and deep learning news that is technically sound and easy for a broad audience to understand. The platform has more than 2 million monthly views.


2026-01-05 07:54:00