StepFun AI Introduces Step-DeepResearch: A Cost-Effective Deep Research Agent Model Built Around Atomic Capabilities

StepFun has introduced Step-DeepResearch, a 32B-parameter deep research agent that aims to turn web research into an actual research workflow with long-horizon reasoning, tool use, and structured reporting. The model is built on Qwen2.5-32B-Base and is trained to act as a single agent that plans, explores sources, verifies evidence, and writes reports with citations, while keeping inference costs low.

From search to deep research

Most current web agents are tuned for multi-hop question-answering benchmarks. They try to match ground-truth answers for short questions, which is closer to targeted retrieval than to real research. Deep research tasks are different: they involve recognizing latent intent, making long-horizon decisions, using tools over many turns, reasoning in a structured way, and verifying across sources under uncertainty.

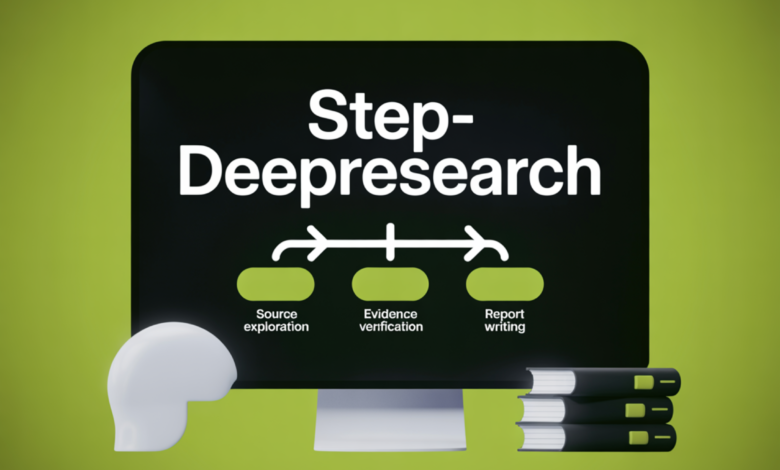

Step-DeepResearch reformulates this as sequential decision making over a small set of atomic capabilities. The research team identifies four atomic capabilities: planning and task decomposition, deep information seeking, reflection and verification, and professional report generation. Instead of orchestrating many external agents, the system internalizes this loop in a single model that decides the next action at each step.
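To make "a single model decides the next action" concrete, here is a minimal Python sketch that treats the four atomic capabilities as an action space. The `Action` enum, `run_agent`, and the scripted policy are hypothetical names used only for illustration. In Step-DeepResearch the policy is the trained 32B model itself, not a fixed script.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Tuple


class Action(Enum):
    """The four atomic capabilities, treated as the agent's action space."""
    PLAN = "plan"
    SEARCH = "search"
    VERIFY = "verify"
    REPORT = "report"


@dataclass
class State:
    question: str
    history: List[Tuple[Action, str]] = field(default_factory=list)
    done: bool = False


Policy = Callable[[State], Action]
Tool = Callable[[State], str]


def run_agent(state: State, policy: Policy, tools: Dict[Action, Tool], max_steps: int = 20) -> State:
    """One model (the policy) chooses the next atomic action at every step."""
    for _ in range(max_steps):
        action = policy(state)
        observation = tools[action](state)
        state.history.append((action, observation))
        if action is Action.REPORT:  # writing the report ends the episode
            state.done = True
            break
    return state


# Hypothetical scripted policy standing in for the trained model.
def scripted_policy(state: State) -> Action:
    order = [Action.PLAN, Action.SEARCH, Action.VERIFY, Action.REPORT]
    return order[min(len(state.history), len(order) - 1)]


toy_tools = {a: (lambda s, a=a: f"{a.value} output") for a in Action}
final = run_agent(State("What drives battery costs?"), scripted_policy, toy_tools)
print([a.value for a, _ in final.history])  # ['plan', 'search', 'verify', 'report']
```

A learned policy would usually revisit actions, for example searching again after a failed verification. The loop above already allows that, because it only stops when `REPORT` is chosen or the step budget runs out.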

Collecting data for atomic capabilities

To teach these atomic capabilities, the research team builds a separate data pipeline for each skill. For planning, they start from high-quality technical reports, survey papers, and financial analysis documents. They reverse-engineer realistic research plans and task trees from titles, summaries, and document structure, and then generate trajectories that follow these plans. This exposes the model to long-horizon project structures, not just short question templates.
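Here is a rough sketch of the reverse-engineering idea. It rests on a simplifying assumption: that a report's heading hierarchy alone is enough to recover a task tree. The function names and the "Investigate:" plan format are hypothetical. The actual pipeline also draws on titles and summaries and is not described at this level of detail.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskNode:
    title: str
    children: List["TaskNode"] = field(default_factory=list)


def headings_to_task_tree(report_md: str) -> TaskNode:
    """Rebuild a task tree from a report's '#'-style heading hierarchy."""
    root = TaskNode("ROOT")
    stack = [(0, root)]  # (heading level, node); the root sits at level 0
    for line in report_md.splitlines():
        stripped = line.strip()
        if not stripped.startswith("#"):
            continue  # body text is ignored in this sketch
        level = len(stripped) - len(stripped.lstrip("#"))
        node = TaskNode(stripped[level:].strip())
        while stack[-1][0] >= level:  # climb back up to this heading's parent
            stack.pop()
        stack[-1][1].children.append(node)
        stack.append((level, node))
    return root


def to_plan(node: TaskNode, depth: int = 0) -> List[str]:
    """Render the tree as an indented research plan."""
    lines: List[str] = []
    for child in node.children:
        lines.append("  " * depth + f"- Investigate: {child.title}")
        lines.extend(to_plan(child, depth + 1))
    return lines


report = "# EV Battery Market 2025\n## Supply chain\n### Lithium prices\n## Demand outlook\nBody text..."
print("\n".join(to_plan(headings_to_task_tree(report))))
```

A synthesized trajectory would then walk this plan node by node, which is how a single report yields a long-horizon training example.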

For deep information seeking, they build graph-based queries over knowledge graphs such as Wikidata5m and CN-DBpedia. They sample subgraphs, expand them with search, and synthesize questions that require multi-hop reasoning across entities and documents. A separate pipeline uses a Wiki-style hyperlink index to force cross-document retrieval and evidence aggregation. Questions that a strong model can already solve with a simple ReAct-style strategy are filtered out, so training focuses on hard research problems.
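The sketch below shows the general shape of graph-based question synthesis with difficulty filtering. It is a toy built on several assumptions:

- The six triples are invented stand-ins for Wikidata5m or CN-DBpedia.
- A random walk stands in for subgraph sampling.
- `one_hop_baseline` is a hypothetical weak solver used in place of the ReAct baseline.
- The search-based expansion step is omitted.

```python
import random
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]

# Toy triples standing in for a knowledge graph such as Wikidata5m or CN-DBpedia.
TRIPLES: List[Triple] = [
    ("Marie Curie", "born in", "Warsaw"),
    ("Warsaw", "capital of", "Poland"),
    ("Poland", "currency", "zloty"),
    ("Alan Turing", "born in", "London"),
    ("London", "capital of", "United Kingdom"),
    ("United Kingdom", "currency", "pound sterling"),
]


def build_adjacency(triples: List[Triple]) -> Dict[str, List[Tuple[str, str]]]:
    adj: Dict[str, List[Tuple[str, str]]] = {}
    for head, rel, tail in triples:
        adj.setdefault(head, []).append((rel, tail))
    return adj


def sample_path(adj: Dict[str, List[Tuple[str, str]]], start: str, hops: int, rng: random.Random) -> List[Triple]:
    """Random walk of up to `hops` edges, i.e. a linear subgraph."""
    path, node = [], start
    for _ in range(hops):
        edges = adj.get(node)
        if not edges:
            break
        rel, nxt = rng.choice(edges)
        path.append((node, rel, nxt))
        node = nxt
    return path


def compose_question(path: List[Triple]) -> Tuple[str, str]:
    """Chain the relations so the answer is only reachable by following every hop."""
    chain = " -> ".join(rel for _, rel, _ in path)
    return f"Starting from {path[0][0]}, follow: {chain}. Which entity do you reach?", path[-1][2]


rng = random.Random(0)
adj = build_adjacency(TRIPLES)


def one_hop_baseline(question: str) -> str:
    """Hypothetical weak solver: follows only the first edge from the start entity."""
    start = question.split("Starting from ", 1)[1].split(",", 1)[0]
    return adj[start][0][1]


def keep_hard(qa_pairs: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Drop questions that the cheap baseline already answers correctly."""
    return [(q, a) for q, a in qa_pairs if one_hop_baseline(q) != a]


easy = compose_question(sample_path(adj, "Marie Curie", 1, rng))  # answer: Warsaw
hard = compose_question(sample_path(adj, "Alan Turing", 3, rng))  # answer: pound sterling
print([a for _, a in keep_hard([easy, hard])])  # ['pound sterling']
```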

Reflection and verification data are generated through self-correction loops and multi-agent teacher traces. Teacher agents extract claims, plan verifications, check facts, re-plan when discrepancies arise, and only then write reports. The resulting trajectories are cleaned and used as supervision for a single student agent. Report generation is trained in two stages: mid-training on query-report pairs to learn domain style and depth, followed by supervised fine-tuning under strict formatting and plan-consistency constraints.
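A simplified version of the teacher's extract-verify-replan loop might look like the sketch below. It assumes claims are numeric key/value facts. It also assumes that "replanning" means correcting a value from the evidence or dropping a claim no source supports. `Claim`, `supported`, and `teacher_trace` are hypothetical names, and the real teacher agents work over free text.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Claim:
    key: str
    value: float


Source = Dict[str, float]  # hypothetical: each retrieved source reports some numeric facts


def supported(claim: Claim, sources: List[Source], tol: float = 0.01) -> bool:
    """A claim passes if some source reports it and all reporting sources agree within tol."""
    reported = [s[claim.key] for s in sources if claim.key in s]
    return bool(reported) and all(abs(v - claim.value) <= tol * abs(v) for v in reported)


def teacher_trace(claims: List[Claim], sources: List[Source], max_replans: int = 2) -> List[Tuple[str, List[str]]]:
    """Verify, re-plan on discrepancies, and write the report only once every claim checks out."""
    trace: List[Tuple[str, List[str]]] = []
    for _ in range(max_replans + 1):
        failed = [c for c in claims if not supported(c, sources)]
        trace.append(("verify", [c.key for c in failed]))
        if not failed:
            trace.append(("write_report", [c.key for c in claims]))
            return trace
        revised = []
        for c in claims:
            if c not in failed:
                revised.append(c)
                continue
            reported = [s[c.key] for s in sources if c.key in s]
            if reported:  # correct the value from evidence; unsupported claims are dropped
                revised.append(Claim(c.key, reported[0]))
        trace.append(("replan", [c.key for c in revised]))
        claims = revised
    return trace  # no report if verification never converged


claims = [Claim("gdp_growth", 3.0), Claim("inflation", 9.9), Claim("unemployment", 4.0)]
sources = [{"gdp_growth": 3.0, "inflation": 2.1}, {"inflation": 2.1}]
trace = teacher_trace(claims, sources)
print([step for step, _ in trace])  # ['verify', 'replan', 'verify', 'write_report']
```

The resulting `trace` is the kind of trajectory that, after cleaning, would supervise a single student agent.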

Progressive training on Qwen2.5-32B-Base

The training pipeline has three phases: agentic mid-training, supervised fine-tuning, and reinforcement learning. In mid-training stage 1, the team injects atomic capabilities without tools, using a 32K-token context. The data covers active reading, synthetic reasoning traces, summarization, and reflection. The research team reports consistent gains on SimpleQA, TriviaQA, and FRAMES as training scales to about 150 billion tokens, with the largest gains on FRAMES, which emphasizes structured reasoning.

In the second mid-training stage, the context is extended to 128K tokens and explicit tool calls are introduced. The model learns tasks such as URL-based question answering, deep web search, long-document summarization, and long-dialogue reasoning. This stage aligns the model with real research scenarios in which search, browsing, and analysis must be interleaved within a single trajectory.

During supervised fine-tuning, the four atomic capabilities are composed into full deep search and deep research trajectories. Data cleaning keeps trajectories that are correct and short in terms of steps and tool calls. The pipeline injects controlled tool errors followed by corrections to improve robustness, and enforces citation formats so that reports stay grounded in retrieved sources.
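Two of these data-cleaning ideas can be sketched together: keeping correct, short trajectories and injecting a controlled tool error that is then corrected. The trajectory representation and the tie-break (steps first, then tool calls) are assumptions for illustration, not the team's published procedure.

```python
import random
from dataclasses import dataclass, replace
from typing import List, Tuple

Step = Tuple[str, str]  # (tool_name, status); "think" marks a reasoning step with no tool call


@dataclass(frozen=True)
class Trajectory:
    task_id: str
    steps: Tuple[Step, ...]
    correct: bool

    @property
    def tool_calls(self) -> int:
        return sum(1 for tool, _ in self.steps if tool != "think")


def clean(trajs: List[Trajectory]) -> List[Trajectory]:
    """Keep correct trajectories only; per task, keep the shortest (steps first, then tool calls)."""
    best = {}
    for t in trajs:
        if not t.correct:
            continue
        cur = best.get(t.task_id)
        if cur is None or (len(t.steps), t.tool_calls) < (len(cur.steps), cur.tool_calls):
            best[t.task_id] = t
    return list(best.values())


def inject_tool_error(t: Trajectory, rng: random.Random) -> Trajectory:
    """Insert a failed call right before an existing tool call, which then acts as the corrected retry."""
    positions = [i for i, (tool, _) in enumerate(t.steps) if tool != "think"]
    if not positions:
        return t
    i = rng.choice(positions)
    failed = (t.steps[i][0], "error")
    return replace(t, steps=t.steps[:i] + (failed,) + t.steps[i:])


trajs = [
    Trajectory("t1", (("think", "ok"), ("search", "ok"), ("search", "ok"), ("write", "ok")), True),
    Trajectory("t1", (("think", "ok"), ("search", "ok"), ("write", "ok")), True),
    Trajectory("t1", (("search", "ok"),), False),  # short but wrong: discarded
]
kept = clean(trajs)
augmented = inject_tool_error(kept[0], random.Random(0))
print(len(kept[0].steps), len(augmented.steps))  # 3 4
```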

Reinforcement learning then refines the agent in a real tool environment. The research team constructs tasks and checklists through reverse synthesis and trains a checklist-style rubric judge to score reports along fine-grained dimensions. The reward design converts ternary rubric labels into asymmetric binary rewards that capture both positive targets and violations. The policy is trained with PPO and a learned critic, using generalized advantage estimation with almost no discounting, so that long trajectories are not effectively truncated.
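The sketch below illustrates both reward-side ideas under explicit assumptions. The label names (`met`, `partial`, `present`, `absent`) and the exact asymmetric mapping are hypothetical. The source says only that the labels are ternary and the rewards binary. The `gae` function is the standard generalized advantage estimation recursion. With `gamma=1.0` and `lam=1.0`, each step's advantage is simply the final reward minus the critic's value at that step.

```python
from typing import List, Tuple

GOAL, VIOLATION = "goal", "violation"


def item_reward(kind: str, label: str) -> int:
    """Map a ternary judge label to an asymmetric binary reward (assumed mapping).

    A goal earns 1 only when fully met ('partial' counts as 0);
    a violation costs -1 when present or partially present, else 0.
    """
    if kind == GOAL:
        return 1 if label == "met" else 0
    return -1 if label in ("present", "partial") else 0


def report_reward(judgments: List[Tuple[str, str]]) -> float:
    return sum(item_reward(kind, lbl) for kind, lbl in judgments) / len(judgments)


def gae(rewards: List[float], values: List[float], gamma: float = 1.0, lam: float = 1.0) -> List[float]:
    """Generalized advantage estimation; `values` has one extra entry for the state after the last step."""
    assert len(values) == len(rewards) + 1
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


judgments = [(GOAL, "met"), (GOAL, "met"), (GOAL, "partial"), (VIOLATION, "absent")]
r = report_reward(judgments)  # (1 + 1 + 0 + 0) / 4 = 0.5
# Sparse reward: only the final step of the trajectory receives the report score.
adv = gae([0.0, 0.0, r], values=[0.2, 0.3, 0.5, 0.0])
print(r, [round(a, 3) for a in adv])  # 0.5 [0.3, 0.2, 0.0]
```

With `gamma` below 1, the final reward that reaches step t is scaled by gamma to the power (T - t). On trajectories with hundreds of tool calls, the early steps would then see almost no signal. This is the intuition behind keeping the discount close to 1.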

Single-agent ReAct architecture and search stack

At inference time, Step-DeepResearch runs as a single ReAct-style agent that interleaves reasoning, tool calls, and observations until it decides to output a report. The toolset includes batch web search, a task manager, shell commands, and file operations. Execution happens in a sandbox, with terminal sessions persisted through tmux. A perception-oriented browser reduces redundant page captures using perceptual hash distance. Document analysis, transcription, and image analysis tools support multimodal inputs.
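Perceptual-hash deduplication can be illustrated with the simple average hash (aHash). The source does not say which perceptual hash or distance threshold the browser uses, so aHash and `max_distance=5` are assumptions. Real captures would first be downscaled to a small grayscale thumbnail; this sketch takes that thumbnail as given.

```python
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[float]]  # a small grayscale thumbnail, e.g. 8x8


def average_hash(pixels: Image) -> int:
    """aHash: one bit per pixel, set when the pixel is at least the mean brightness."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p >= mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


def dedupe_captures(captures: List[Tuple[str, Image]], max_distance: int = 5) -> List[str]:
    """Keep a capture only if its hash is far from every capture already kept."""
    kept, hashes = [], []
    for name, pixels in captures:
        h = average_hash(pixels)
        if all(hamming(h, k) > max_distance for k in hashes):
            kept.append(name)
            hashes.append(h)
    return kept


page_a = [[r * 8 + c for c in range(8)] for r in range(8)]
page_a_brighter = [[p + 3 for p in row] for row in page_a]  # same layout, slight rendering change
page_b = [[63 - p for p in row] for row in page_a]  # visually different page
print(dedupe_captures([("a", page_a), ("a2", page_a_brighter), ("b", page_b)]))  # ['a', 'b']
```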

Information retrieval draws on two complementary sources. The StepFun team states that its search API is grounded in more than 20 million high-quality research papers and 600 distinct indices. The research team also describes a curated authority indexing strategy that isolates more than 600 trusted domains, including government, academic, and institutional sites. Retrieval operates at the paragraph level and uses authority-aware ranking, so that high-confidence domains are preferred when relevance is similar.
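One simple way to realize "prefer trusted domains when relevance is similar" is to add a small authority bonus to each paragraph's relevance score. This scoring rule is an assumption, not StepFun's published formula. The `.gov`/`.edu` suffixes and the hostnames below are hypothetical stand-ins for the curated list of 600+ domains.

```python
from typing import FrozenSet, List, Tuple
from urllib.parse import urlparse

Paragraph = Tuple[str, str, float]  # (url, text, relevance score in [0, 1])

# Hypothetical stand-ins for the curated list of trusted domains.
TRUSTED_SUFFIXES = (".gov", ".edu")


def is_trusted(url: str, trusted_hosts: FrozenSet[str]) -> bool:
    host = urlparse(url).hostname or ""
    return host.endswith(TRUSTED_SUFFIXES) or host in trusted_hosts


def rank_paragraphs(
    paragraphs: List[Paragraph], trusted_hosts: FrozenSet[str] = frozenset(), bonus: float = 0.05
) -> List[Paragraph]:
    """Sort by relevance plus a small authority bonus.

    Because the bonus is small, a trusted paragraph only overtakes an untrusted one
    when their relevance scores are within `bonus` of each other.
    """

    def score(p: Paragraph) -> float:
        url, _, relevance = p
        return relevance + (bonus if is_trusted(url, trusted_hosts) else 0.0)

    return sorted(paragraphs, key=score, reverse=True)


paragraphs = [
    ("https://blog.example.com/post", "EV sales rose...", 0.82),
    ("https://www.census.gov/data", "EV registrations...", 0.80),
    ("https://forum.example.net/t/1", "Exact match...", 0.95),
]
print([urlparse(u).hostname for u, _, _ in rank_paragraphs(paragraphs)])
# ['forum.example.net', 'www.census.gov', 'blog.example.com']
```

Here the trusted census paragraph overtakes the blog (0.80 vs 0.82) but not the much more relevant forum post (0.95).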

The file tools support patch-based editing, so the agent can update only the modified sections of a report. A summary-aware storage scheme writes full tool outputs to local files and injects only compressed summaries into the context. This acts as external memory and prevents context overflow on long projects.
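Here is a minimal sketch of the two external-memory mechanisms. It assumes a markdown report with `## ` section headings and uses a naive truncation-based summary. `SummaryStore` and `patch_section` are hypothetical names; the source does not describe the actual summarizer or patch format.

```python
import hashlib
import tempfile
from pathlib import Path


class SummaryStore:
    """Write full tool output to disk and hand back only a short note for the context window."""

    def __init__(self, root: str, max_summary_chars: int = 200):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)
        self.max_summary_chars = max_summary_chars

    def save(self, tool_name: str, output: str) -> str:
        digest = hashlib.sha256(output.encode("utf-8")).hexdigest()[:12]
        path = self.root / f"{tool_name}-{digest}.txt"
        path.write_text(output, encoding="utf-8")
        # Naive "summary": a truncated head plus a file pointer. A real system would
        # more likely ask the model to summarize; this is only a placeholder.
        head = output[: self.max_summary_chars].replace("\n", " ")
        return f"[{tool_name}] {head}... (full output: {path.name}, {len(output)} chars)"

    def load(self, filename: str) -> str:
        return (self.root / filename).read_text(encoding="utf-8")


def patch_section(report: str, heading: str, new_body: str) -> str:
    """Replace only the body under '## heading', leaving every other section untouched."""
    lines = report.splitlines()
    start = lines.index(f"## {heading}")  # raises ValueError if the section is missing
    end = next((i for i in range(start + 1, len(lines)) if lines[i].startswith("## ")), len(lines))
    return "\n".join(lines[: start + 1] + new_body.splitlines() + lines[end:])


with tempfile.TemporaryDirectory() as tmp:
    store = SummaryStore(tmp, max_summary_chars=40)
    page = "Lithium carbonate prices fell sharply in 2024.\n" * 500
    note = store.save("web_fetch", page)  # only this short note would enter the context
    filename = note.split("full output: ")[1].split(",")[0]
    restored = store.load(filename)  # the agent can re-read the full output on demand

report = "## Intro\nold intro\n## Findings\nold findings\n## Sources\n[1] example"
patched = patch_section(report, "Findings", "updated findings")
print(note)
print(patched)
```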

Evaluation, cost and access

To measure deep research behavior, the team introduces ADR-Bench, a Chinese benchmark of 110 open-ended tasks across 9 domains. Seventy tasks cover general domains such as education, science and engineering, and social life, and are evaluated through side-by-side expert comparison. The remaining 40 tasks, in finance and law, are scored with explicit rubrics that follow atomicity and verifiability constraints.

On Scale AI Research Rubrics, Step-DeepResearch reaches 61.42 percent rubric compliance. This is comparable to OpenAI-DeepResearch and Gemini-DeepResearch and clearly outperforms multiple open and proprietary baselines. On ADR-Bench, expert-based Elo ratings show that the 32B model outperforms larger open models such as MiniMax-M2, GLM-4.6, and DeepSeek-V3.2. It is also competitive with systems such as Kimi-Researcher and MiniMax-Agent-Pro.
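For readers unfamiliar with Elo ratings over side-by-side judgments, here is the standard sequential Elo update, together with a win-or-tie rate. The K-factor of 32, the initial rating of 1000, and the match data are illustrative assumptions. The source does not give ADR-Bench's exact rating procedure.

```python
from typing import Dict, List, Tuple

Match = Tuple[str, str, float]  # (model_a, model_b, score for model_a: 1 win, 0.5 tie, 0 loss)


def expected_score(r_a: float, r_b: float) -> float:
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_ratings(matches: List[Match], k: float = 32.0, initial: float = 1000.0) -> Dict[str, float]:
    """Sequential Elo updates over side-by-side expert judgments."""
    ratings: Dict[str, float] = {}
    for a, b, s in matches:
        r_a = ratings.setdefault(a, initial)
        r_b = ratings.setdefault(b, initial)
        e_a = expected_score(r_a, r_b)
        ratings[a] = r_a + k * (s - e_a)
        ratings[b] = r_b + k * ((1 - s) - (1 - e_a))  # zero-sum: b gains what a loses
    return ratings


def win_or_tie_rate(matches: List[Match], model: str) -> float:
    outcomes = [s if a == model else 1 - s for a, b, s in matches if model in (a, b)]
    return sum(1 for o in outcomes if o >= 0.5) / len(outcomes)


# Hypothetical judgments, not ADR-Bench data.
matches = [("ours", "baseline_x", 1.0), ("ours", "baseline_y", 0.5), ("baseline_z", "ours", 1.0)]
ratings = elo_ratings(matches)
print({m: round(r, 1) for m, r in ratings.items()})
print(win_or_tie_rate(matches, "ours"))  # 0.6666666666666666
```

Sequential Elo depends on match order. Leaderboards therefore often average over shuffled orders or fit a Bradley-Terry model instead; which variant ADR-Bench uses is not stated here.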

Key takeaways

- Single agent, atomic capability design: Step-DeepResearch is a 32B-parameter single agent built on Qwen2.5-32B-Base. It internalizes four atomic capabilities (planning, deep information seeking, reflection and verification, and professional report generation) instead of relying on many external agents.

- Targeted data for each skill: The research team builds separate data pipelines for planning, deep information seeking, reflection and verification, and report writing. These pipelines use plans reverse-engineered from real reports, graph-based queries over Wikidata5m and CN-DBpedia, multi-agent teacher traces, and strictly formatted report data.

- Three-stage training with long context and RL: Training proceeds through agentic mid-training, supervised fine-tuning, and reinforcement learning. Mid-training runs on up to about 150B tokens at 32K context and then extends to 128K context. SFT composes full deep search trajectories, and PPO-based RL with a rubric judge optimizes reports against fine-grained checklists.

- ReAct architecture with structured search and external memory: At inference time, the model runs a ReAct loop that calls tools for batch web search, task management, shell commands, and file operations. It uses a search API grounded in over 20 million papers and 600 distinct indices, together with more than 600 trusted domains. Patch-based editing and summary-aware storage act as external memory.

- Competitive quality at lower cost: On Scale AI Research Rubrics, the model achieves 61.42 percent rubric compliance and is competitive with OpenAI-DeepResearch and Gemini-DeepResearch. On ADR-Bench, it achieves a 67.1 percent win-or-tie rate against strong baselines.

Check out the paper and repo.


2026-01-25 21:08:00