Scaling AI inference with open-source efficiency

Nvidia Dynamo, an open source inference program designed to accelerate and expand the scope of thinking models within artificial intelligence factories.

Managing and coordinating requests for inferring from artificial intelligence efficiently across a fleet of graphics processing units is a decisive endeavor to ensure that artificial intelligence factories can work effectively in terms of optimal cost and increase the generation of symbolic revenues.

With the increasingly spread of the logic of artificial intelligence, each Amnesty International model is expected to generate tens of thousands of symbols with each router, and mainly represents the “thinking” process. Consequently, enhancing the performance of reasoning while reducing its cost simultaneously is very important to accelerate growth and increase revenue opportunities for service providers.

A new generation of artificial intelligence inference programs

NVIDIA Dynamo, which succeeds in the NVIDIA TRIONTON, is a new generation of artificial intelligence inference programs specifically designed to increase the generation of symbolic revenues of artificial intelligence factories that publish artificial intelligence models.

Dynamo coordinates deductive communication and rushes across thousands of graphics processing units. It uses the dismantled service, a technology that separates the processes of processing and obstetrics from the large LLMS models on the distinctive graphics processing units. This approach allows to improve each stage independently, meet its specific mathematical needs and ensure the maximum use of GPU resources.

“Industries around the world train Amnesty International models to think and learn in different ways, making them more sophisticated over time,” said Jensen Huang, founder and director of NVIDIA. “To enable the future of the logic allocated to Amnesty International, NVIDIA Dynamo helps to serve these models on a large scale, which leads to savings in costs and competencies through artificial intelligence factories.”

Using the same number of graphics processing units, Dynamo has shown the ability to double the performance and revenue of artificial intelligence factories that serve Llama models on the current fold platform in NVIDIA. Moreover, when playing the Deepseek-R1 model on a wide range of GB200 NVL72 shelves, the smart inferring improvements for NVIDIA Dynamo have increased the number of symbols created by more than 30 times per unit of graphics processing.

To achieve these improvements in the performance performance, NVIDIA Dynamo includes many major features designed to increase productivity and reduce operating costs.

Dynamo can add dynamic graphics processing units and re -customize them in an actual time to adapt to volatile sizes and types of requests. The program can also determine the graphics processing units specified in large groups suitable for reducing response accounts to minimum efficiently. Dynamo can also cancel the infinite data download to memory devices and more cost -effective storage devices while quickly recovered when needed, thus reducing the total inference costs.

Nvidia Dynamo is fully released as an open source project, and provides wide compatibility with famous frameworks such as Pytorch, Sglang, Nvidia Tensorrt-LLM and VLLM. This open approach supports emerging institutions and companies and researchers in developing and improving new methods to serve artificial intelligence models through various infrastructure.

NVIDIA expects Dynamo to accelerate the adoption of artificial intelligence inference through a wide range of organizations, including major cloud service providers and artificial intelligence creators such as AWS, Cohere, Coreweave, Dell, Fireworks, Google Cloud, Lambda, Meta, Microsoft Azure and Nebius, NetApp, OCI, OCI, OCI, OCI, and OCI,

Nvidia Dynamo: Exclusive inference and AI

The main innovation of NVIDIA Dynamo is its ability to set the knowledge of memory inference systems from submit previous requests, known as KV cache, through thousands of graphics processing units.

The program then intelligently directs new inference requests to graphics processing units that have the best identification of knowledge, avoiding effective expensive accounts and editing other graphics processing units to deal with new incoming requests. This smart guidance mechanism significantly enhances efficiency and reduces transition time.

“To deal with hundreds of millions of requests monthly, we rely on NVIDIA GPU and inference programs to provide performance and reliability and expand our request from our business and users,” said Dennis Yarat, CTO of artificial intelligence.

“We look forward to taking advantage of Dynamo, through the enhanced distributive application capabilities, to pay more efficiency that serves inference and meet the account requirements for new logical thinking models.”

The AI COHERE platform is already planning to take advantage of Nvidia Dynamo to enhance AI Agentic capabilities within a series of models orders.

“The expansion of advanced artificial intelligence models requires multiple sophisticated GPU, smooth coordination and total low -communication libraries that transmit contexts of thinking smoothly through memory and storage,” explained by Sorb Baji, SVP Engineering in COHERE.

“We expect Nvidia Dynamo to help us provide a major user experience for our institutions.”

Support for the dismantled service

The NVIDIA Dynamo Integration platform also features strong support for non -renewable service. This advanced technology sets the various mathematical stages of LLMS – including the decisive steps to understand the user’s inquiry and then create the most appropriate response – to the various graphics processing units within the infrastructure.

The uniform application is especially suitable for thinking forms, such as the new Nvidia Llama Nemotron model, which uses advanced inference techniques to improve contextual understanding and generating response. By allowing for each stage to be seized and resources independently, the uncontrolled application improves productivity in general and provides faster response times for users.

Together, of artificial intelligence, a prominent player in the AI Access Cloud cloud, also looks forward to integrating his inference engine with Nvidia Dynamo. This integration aims to enable smooth scaling of the inference burdens through the multiple GPU. Moreover, together AI will be allowed to treat traffic jams that may arise in different stages of the model pipeline.

“Expanding the scope of thinking models effectively requires new advanced inference techniques, including disjointed guidance and recognition of context,” CE Zhang, CTO from AI together.

“Openness and Nvidia Dynamo will allow us to smoothly connect its components in our engine to serve more requests while improving resource use-which leads to our accelerated computer investments. We are excited to take advantage of the statute’s capabilities to bring in cost-cost models to cost to cost cost.”

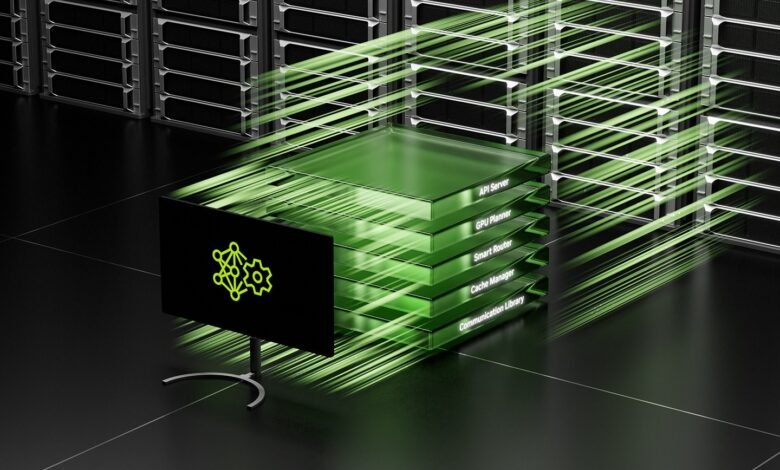

Four major innovations for Nvidia Dynamo

NVIDIA highlighted four main innovations within Dynamo that contribute to reducing the costs of the inference service and enhancing the total user experience:

- GPU: The advanced planning engine that adds dynamically and removes graphics processing units at the request of the volatile user. This ensures the allocation of optimal resources, which prevents both excessive provision of graphics processing unit.

- Smart router: LLM is a smart router that directs inference requests across large fleets of graphics processing units. Its basic function is to reduce the costly or overlapping GPU accounts, thus editing the valuable GPU to deal with new incoming requests more efficiently.

- Low Communications Library to assign: Mohtna Inference Library designed to support modern GPU-To-GPU Etisalat. It tries the complications of data exchange via heterogeneous devices, which accelerates the data transmission speeds.

- Memory Director: A smart motor manages emptying and re -downloading data and re -downloading it to and from memory devices and low -cost storage devices. This process is designed to be smooth, ensuring that there is no negative effect on the user experience.

NVIDIA Dynamo will be provided at NIM Microservices and will be supported in a future version of the company’s AI Enterprise program platform.

See also: LG Exaone Deep is an orange for mathematics, science and coding

Do you want to learn more about artificial intelligence and large data from industry leaders? Check AI and Big Data Expo, which is held in Amsterdam, California, and London. The comprehensive event was identified with other leading events including the smart automation conference, Blockx, the digital transformation week, and the Cyber Security & Cloud.

Explore the upcoming web events and seminars with which Techforge works here.

2025-03-19 16:49:00