Sensor-Invariant Tactile Representation for Zero-Shot Transfer Across Vision-Based Tactile Sensors

Tactile sensing is a crucial modality for intelligent systems to perceive and interact with the physical world. The GelSight sensor and its variants have emerged as influential tactile technologies, providing detailed information about contact surfaces by converting tactile data into visual images. However, vision-based tactile sensing lacks transferability between sensors because of differences in design and manufacturing, which lead to significant differences in tactile signals. Even minor differences in optical design or manufacturing processes can create substantial discrepancies in sensor output, so machine learning models trained on one sensor perform poorly when applied to others.

Computer vision models are widely applied to vision-based tactile images because the images are inherently visual. Researchers have adapted representation learning methods from the vision community, with contrastive learning being popular for developing tactile and visual-tactile representations for specific tasks. Auto-encoding approaches have also been explored, with some researchers using masked autoencoders (MAE) to learn tactile representations. Multimodal methods combine several tactile datasets in LLM frameworks and encode sensor types as tokens. Despite these efforts, current methods often require large datasets, treat sensor types as fixed categories, and lack the flexibility to generalize to unseen sensors.

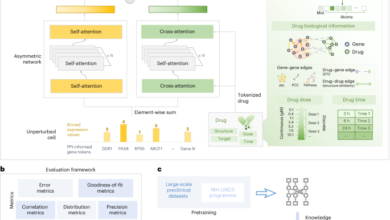

Researchers from the University of Illinois Urbana-Champaign proposed Sensor-Invariant Tactile Representations (SITR), a tactile representation that transfers across various vision-based tactile sensors in a zero-shot manner. It rests on the premise that achieving sensor transferability requires learning effective sensor-invariant representations through exposure to diverse sensor variations. It relies on three core innovations: using easy-to-acquire calibration images to characterize individual sensors with a transformer encoder, using supervised contrastive learning to emphasize the geometric aspects of tactile data across multiple sensors, and developing a large-scale synthetic dataset that contains 1M examples across 100 sensor configurations.

The researchers used a tactile image and a set of calibration images for the sensor as network inputs. The sensor background is subtracted from all input images to isolate pixel-wise color changes. Following the Vision Transformer (ViT), these images are linearly projected into tokens, with the calibration images requiring tokenization only once per sensor. Two supervision signals guide the training process: a pixel-wise normal map reconstruction loss on the output patch tokens and a contrastive loss on the class token. During pre-training, a lightweight decoder reconstructs the contact surface as a normal map from the encoder's output. In addition, SITR employs Supervised Contrastive Learning (SCL), which extends traditional contrastive approaches by using label information to define similarity.
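To make the idea of using labels to define similarity concrete, here is a minimal sketch of a supervised contrastive loss in plain Python. It is not the authors' implementation: the function names, the temperature value, and the choice of label (assumed here to be an identifier for the contact, so that the same contact seen through different sensors forms a positive pair) are all illustrative assumptions.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Illustrative supervised contrastive loss (hypothetical helper).

    Samples that share a label are treated as positives for each other;
    every other sample in the batch acts as a negative. The temperature
    of 0.1 is an assumed default, not a value taken from the paper.
    """
    z = [l2_normalize(v) for v in embeddings]
    n = len(z)
    total, num_anchors = 0.0, 0
    for i in range(n):
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        # Scaled similarity between the anchor and every other sample.
        logits = {
            a: sum(x * y for x, y in zip(z[i], z[a])) / temperature
            for a in range(n)
            if a != i
        }
        # Numerically stable log-sum-exp for the denominator.
        peak = max(logits.values())
        log_denom = peak + math.log(
            sum(math.exp(value - peak) for value in logits.values())
        )
        # Average negative log-probability over the anchor's positives.
        total -= sum(logits[p] - log_denom for p in positives) / len(positives)
        num_anchors += 1
    return total / num_anchors if num_anchors else 0.0


# Made-up 2-D embeddings: two contacts, each "seen" by two sensors.
embeddings = [[1.0, 0.0], [1.0, 0.05], [0.0, 1.0], [0.05, 1.0]]
matched = supervised_contrastive_loss(embeddings, [0, 0, 1, 1])
mismatched = supervised_contrastive_loss(embeddings, [0, 1, 0, 1])
print(matched < mismatched)
```

When the labels agree with the embedding geometry, the loss is small; when samples that share a label sit far apart, the loss grows. That gradient is what would pull embeddings of the same contact together regardless of which sensor produced the image.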

In object classification tests using the researchers' real-world dataset, SITR outperforms all baseline models when transferred across different sensors. While most models perform well in no-transfer settings, they fail to generalize when tested on distinct sensors. This shows SITR's ability to capture meaningful, sensor-invariant features that remain robust despite changes in the sensor domain. In pose estimation tasks, where the goal is to estimate 3-DoF position changes using initial and final tactile images, SITR reduces the root mean square error by approximately 50% compared to baselines. Unlike the classification results, ImageNet pre-training only marginally improves pose estimation performance, indicating that features learned from natural images may not transfer effectively to tactile domains for precise regression tasks.
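For readers unfamiliar with the metric, the sketch below shows one way a root mean square error over 3-DoF pose changes could be computed. The pose layout (dx, dy, dtheta in radians), the per-component reporting, and the function names are assumptions made for illustration; the numbers are invented and are not results from the paper.

```python
import math


def wrap_angle(rad):
    """Map an angle difference into [-pi, pi) so 359 degrees counts as -1 degree."""
    return (rad + math.pi) % (2 * math.pi) - math.pi


def pose_rmse(predicted, target):
    """Per-component RMSE for pose changes given as (dx, dy, dtheta) tuples.

    Assumes dtheta is in radians; this layout is hypothetical.
    """
    if not predicted or len(predicted) != len(target):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [0.0, 0.0, 0.0]
    for (px, py, pt), (tx, ty, tt) in zip(predicted, target):
        errors = (px - tx, py - ty, wrap_angle(pt - tt))
        for k in range(3):
            squared[k] += errors[k] ** 2
    return tuple(math.sqrt(s / len(predicted)) for s in squared)


def relative_reduction(baseline_error, new_error):
    """Fraction by which new_error is lower than baseline_error."""
    return (baseline_error - new_error) / baseline_error


# Invented values, for illustration only.
target = [(0.0, 0.0, 0.0), (1.0, -1.0, 0.2)]
predicted = [(0.1, 0.0, 0.05), (0.9, -1.2, 0.15)]
print(pose_rmse(predicted, target))
print(relative_reduction(2.0, 1.0))
```

Under this definition, an "approximately 50%" reduction means the new model's RMSE is about half the baseline's.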

In this paper, the researchers introduced SITR, a tactile representation framework that transfers across various vision-based tactile sensors in a zero-shot manner. They constructed large-scale, sensor-aligned datasets using synthetic and real-world data and developed a method to train SITR to capture dense, sensor-invariant features. SITR represents a step toward a unified approach to tactile sensing, in which models generalize seamlessly across different sensor types without retraining or fine-tuning. This advance has the potential to accelerate progress in robotic manipulation and tactile research by removing a key barrier to the adoption and implementation of these promising sensors.

Check out the Paper and Code. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final-year undergraduate at IIT Kharagpur. A technology enthusiast, he explores the practical applications of AI, with a focus on understanding the impact of AI technologies and their real-world implications. He aims to explain complex AI concepts in a clear and accessible way.

2025-04-08 15:50:00