AI doctor learns to ‘see’ medical images

Google is giving its diagnostic AI the ability to understand visual medical information with its latest research on AMIE (Articulate Medical Intelligence Explorer).

Imagine chatting with an AI about a health concern and, instead of just processing your words, it could actually look at a photo of that worrying rash or make sense of your ECG printout. That’s what Google is aiming for.

We already knew AMIE showed promise in text-based medical chats, thanks to earlier work published in Nature. But let’s face it, real medicine isn’t just about words.

Doctors rely heavily on what they can see: skin conditions, readings from machines, lab reports. As the Google team rightly points out, even simple instant messaging platforms “allow static multimodal information (e.g., images and documents) to enrich discussions.”

Text-only AI was missing a huge piece of the puzzle. The big question, as the researchers put it, was “whether LLMs can conduct diagnostic clinical conversations that incorporate this more complex type of information.”

Google teaches AMIE to look and reason

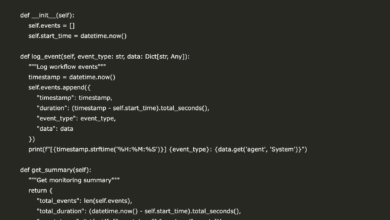

Google’s engineers have beefed up AMIE using their Gemini 2.0 Flash model as the brains of the operation. They’ve combined this with what they call a “state-aware reasoning framework.” In plain English, this means the AI doesn’t just follow a script; it adapts its conversation based on what it has learned so far and what it still needs to figure out.

It’s close to how a human clinician works: gathering clues, forming ideas about what might be wrong, then asking for more specific information, including visual evidence, to narrow things down.

“This enables AMIE to request relevant multimodal artifacts when needed, interpret their findings accurately, integrate this information seamlessly into the ongoing dialogue, and use it to refine diagnoses,” explains Google.

Think of the conversation as flowing through stages: first gathering the patient’s history, then moving towards diagnosis and management suggestions, and finally follow-up. The AI constantly assesses its own understanding, asking for a skin photo or a lab result if it senses a gap in its knowledge.
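The staged, state-aware behaviour described above can be illustrated with a toy dialogue controller. This is a minimal sketch under loudly stated assumptions, not Google’s implementation: the phase names, the `DialogueState` class, the `USEFUL_ARTIFACT` lookup table and the 0.7 confidence threshold are all hypothetical. A real system would derive its differential and its information needs from the language model rather than from a hard-coded table.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    # Hypothetical phase labels mirroring the stages described in the article
    HISTORY = "history taking"
    DIAGNOSIS = "diagnosis and management"
    FOLLOW_UP = "follow-up"


@dataclass
class DialogueState:
    phase: Phase = Phase.HISTORY
    # Hypothetical differential: condition name -> estimated probability
    differential: dict[str, float] = field(default_factory=dict)
    # Artifacts (e.g. "skin photo", "ECG") already received from the patient
    artifacts: set[str] = field(default_factory=set)


# Hypothetical table: which visual artifact would help confirm a suspected condition
USEFUL_ARTIFACT = {
    "atrial fibrillation": "ECG",
    "eczema": "skin photo",
    "psoriasis": "skin photo",
}

CONFIDENCE_THRESHOLD = 0.7  # assumed value, for illustration only


def next_action(state: DialogueState) -> str:
    """Pick the next conversational move based on the current dialogue state."""
    if state.phase is Phase.HISTORY:
        if not state.differential:
            # No working hypotheses yet: keep gathering history
            return "ask history question"
        state.phase = Phase.DIAGNOSIS
    if state.phase is Phase.DIAGNOSIS:
        top, p = max(state.differential.items(), key=lambda kv: kv[1])
        needed = USEFUL_ARTIFACT.get(top)
        # Knowledge gap: the leading hypothesis is uncertain and an
        # artifact we have not yet seen could help resolve it
        if p < CONFIDENCE_THRESHOLD and needed and needed not in state.artifacts:
            return f"request {needed}"
        state.phase = Phase.FOLLOW_UP
        return f"propose diagnosis: {top}"
    return "arrange follow-up"
```

On a fresh state, `next_action` asks a history question. Once the hypothetical differential is split between eczema and psoriasis, it requests a skin photo, and only after that photo raises confidence does it propose a diagnosis and then move on to follow-up.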

To get this right without endless trial and error on real people, Google built a detailed simulation lab.

Google created lifelike patient cases, pulling medical images and data from sources such as the PTB-XL ECG database and the SCIN dermatology collection, and adding plausible backstories using Gemini. Then they let AMIE “chat” with simulated patients within this setup and automatically checked how well it performed on things like diagnostic accuracy and avoiding errors (or “hallucinations”).

The virtual OSCE: Google puts AMIE through its paces

The real test came in a setup designed to mirror how medical students are assessed: the Objective Structured Clinical Examination (OSCE).

Google ran a study involving 105 different medical scenarios. Real actors, trained to portray patients consistently, interacted either with the new multimodal AMIE or with actual human primary care physicians (PCPs). These chats took place through an interface where the “patient” could upload images, just as you might in a modern messaging app.

Afterwards, specialist doctors (in dermatology, cardiology, and internal medicine) and the patient actors themselves reviewed the conversations.

The specialist doctors scored everything from history-taking, diagnostic accuracy, and the quality of the proposed management plan to communication skills and empathy; and, of course, how well the AI interpreted the visual information.

Surprising results from the simulated clinic

Here’s where it gets interesting. In this head-to-head comparison within the controlled study environment, Google found that AMIE didn’t just hold its own; it often came out ahead.

The AI was rated better than the human PCPs at interpreting the multimodal data shared during the chats. It also scored higher on diagnostic accuracy, producing differential diagnosis lists (ranked lists of possible conditions) that specialists judged more accurate and complete based on the case details.

Specialist doctors reviewing the transcripts tended to rate AMIE’s performance higher across most areas. They particularly noted the “quality of image interpretation and reasoning,” the thoroughness of its diagnostic workup, the soundness of its management plans, and its ability to flag when a situation needed urgent attention.

Perhaps one of the most surprising findings came from the patient actors: they often found the AI more empathetic and trustworthy than the human doctors in these text-based interactions.

On a critical safety note, the study found no statistically significant difference between how often AMIE made image-based errors (hallucinated findings) and how often the human physicians did.

Technology never stands still, so Google also ran some early tests swapping the Gemini 2.0 Flash model for the newer Gemini 2.5.

In the simulation framework, the results hinted at further gains, particularly in getting the diagnosis right (top-3 accuracy) and suggesting appropriate management plans.
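Top-3 accuracy counts a case as correct when the true diagnosis appears anywhere among the first three entries of the ranked differential diagnosis list. A minimal Python sketch of the metric follows. It assumes exact string matching between diagnoses, which is a simplification (in practice, deciding whether a listed condition matches the true one usually needs clinical judgement or a model-based rater), and the example cases are invented for illustration.

```python
def top_k_accuracy(differentials: list[list[str]], ground_truths: list[str], k: int = 3) -> float:
    """Return the fraction of cases whose correct diagnosis appears among the
    first k entries of that case's ranked differential diagnosis list.

    Assumes exact string matching between diagnoses (a simplification)."""
    if len(differentials) != len(ground_truths):
        raise ValueError("need exactly one ground-truth diagnosis per case")
    if not differentials:
        raise ValueError("no cases to score")
    # True (counted as 1) when the correct diagnosis is in the top-k slice
    hits = sum(truth in ddx[:k] for ddx, truth in zip(differentials, ground_truths))
    return hits / len(differentials)


if __name__ == "__main__":
    # Invented toy cases, for illustration only
    ddx_lists = [
        ["eczema", "psoriasis", "tinea corporis"],
        ["angina", "GERD", "pericarditis", "myocardial infarction"],
        ["migraine", "tension headache", "sinusitis"],
    ]
    truths = ["psoriasis", "myocardial infarction", "cluster headache"]
    print(top_k_accuracy(ddx_lists, truths, k=1))  # no true diagnosis is ranked first
    print(top_k_accuracy(ddx_lists, truths, k=3))  # one hit out of three cases
    print(top_k_accuracy(ddx_lists, truths, k=4))  # two hits out of three cases
```

Raising k can only keep or increase the score, which is why top-k figures are usually reported alongside top-1.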

Promising as this is, the team is quick to add a dose of realism: these are only automated results, and “rigorous assessment through expert physician review is essential to confirm these performance benefits.”

Important reality checks

Google is commendably upfront about the limitations here. “This study explores a research-only system in an OSCE-style evaluation using patient actors, which substantially under-represents the complexity … of real-world care,” they state clearly.

Simulated scenarios, however well designed, are not the same as dealing with the unique complexities of real patients in a busy clinic. The team also stresses that the chat interface doesn’t capture the richness of a real video or in-person consultation.

So, what’s the next step? Moving carefully towards the real world. Google is already partnering with Beth Israel Deaconess Medical Center on a research study to see how AMIE performs in actual clinical settings, with patient consent.

The researchers also acknowledge the need to eventually move beyond text and static images towards handling real-time video and audio, the kind of interaction common in telehealth today.

Giving AI the ability to “see” and interpret the kind of visual evidence doctors use every day offers a glimpse of how AI might one day assist in healthcare. However, the path from these promising results to a safe and reliable everyday healthcare tool still requires careful navigation.

(Photo by Alexander Shin)

See also: Are AI chatbots really changing the world of work?

Want to learn more about AI and big data from industry leaders? Check out AI & Big Data Expo, taking place in Amsterdam, California, and London. The comprehensive event is co-located with other leading events including the Intelligent Automation Conference, BlockX, Digital Transformation Week, and Cyber Security & Cloud Expo.

Explore other upcoming events and webinars powered by TechForge here.


2025-05-02 12:38:00