A Step-by-Step Guide to Build a Fast Semantic Search and RAG QA Engine on Web-Scraped Data Using Together AI Embeddings, FAISS Retrieval, and LangChain

In this tutorial, we tend strongly to the growing ecosystem from artificial intelligence to show the extent of the speed of transforming the irregular text to the service of proficiency in the questions that cite its sources. We will question a handful of live web pages, divide them into knit parts, and nourish these pieces into a metal inclusion model/M2-By 80m-8K-RCIVAL. These vectors fall into the FAISS index to find a similarity of millimeters, and after that, a lightweight Chattogetter model that remains on the basis of the corridors that have been recovered. Since AI together deals with implications and chat behind one application programming interface, we avoid isolating many service providers, shares, or SDK accents.

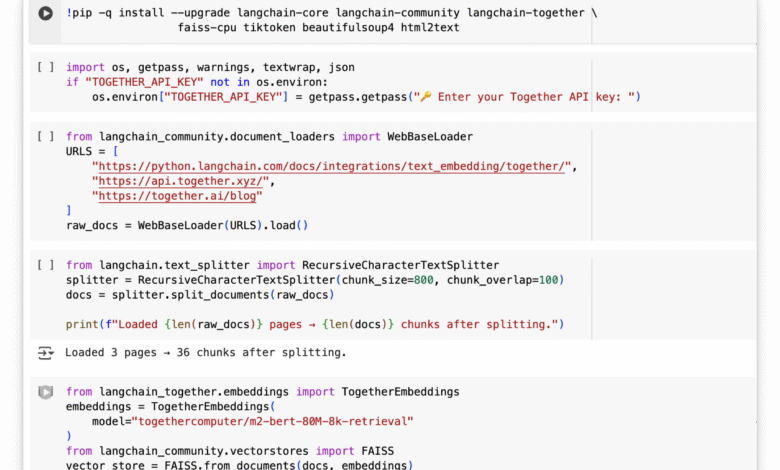

!pip -q install --upgrade langchain-core langchain-community langchain-together

faiss-cpu tiktoken beautifulsoup4 html2text

Quiet PIP promotions (-q) and install everything that Colab Rag needs. It pulls the basic Langchain libraries as well as the integration of artificial intelligence together, FAISS to search for vectors, deal with Tiktoken, and a lightweight HTML or HTML via beutifulsoup4 and HTML2Text, ensuring that the note note to the end to one party without additional preparation.

import os, getpass, warnings, textwrap, json

if "TOGETHER_API_KEY" not in os.environ:

os.environ["TOGETHER_API_KEY"] = getpass.getpass("🔑 Enter your Together API key: ")We check if the environment variable together _API_KEY has already been set; If not, it is safely asking us to switch with GetPass and store it in OS.ENVIRON. The rest of the notebook can call the AI applications interface together without arduous secrets or exposing them in a normal text by capturing accreditation data once for each time.

from langchain_community.document_loaders import WebBaseLoader

URLS = [

"https://python.langchain.com/docs/integrations/text_embedding/together/",

"https://api.together.xyz/",

"https://together.ai/blog"

]

raw_docs = WebBaseLoader(URLS).load()

WeBBASELOADER brings each URL, BOILERPLATE, and restores the Langchain document that contains the text of the clean page as well as descriptive data. By passing a list of links related to together, we immediately collect direct documents and blog content that will be included later for semantic research.

from langchain.text_splitter import RecursiveCharacterTextSplitter

splitter = RecursiveCharacterTextSplitter(chunk_size=800, chunk_overlap=100)

docs = splitter.split_documents(raw_docs)

print(f"Loaded {len(raw_docs)} pages → {len(docs)} chunks after splitting.")RecursivecharactertextSplitter Slices of each page brought to ~ 800 pieces of tapes with 100 letters overlap so that contextual clues are not lost at the boundaries of a piece. The resulting menu documents contain the Langchain Document with a bite size, and the printed version shows the number of pieces produced from the original pages, which are the basic preparatory for high -quality inclusion.

from langchain_together.embeddings import TogetherEmbeddings

embeddings = TogetherEmbeddings(

model="togethercomputer/m2-bert-80M-8k-retrieval"

)

from langchain_community.vectorstores import FAISS

vector_store = FAISS.from_documents(docs, embeddings)

Here we are based on the M2-Bert retrieval model from AI 80 M-Ert as a flowing character, then feed each text on it while fais.from_documents creates a memory-headed index. Supports the vector store resulting from searches in the millical pocket pocket again, converting our intensive pages into a searched semantic database.

from langchain_together.chat_models import ChatTogether

llm = ChatTogether(

model="mistralai/Mistral-7B-Instruct-v0.3",

temperature=0.2,

max_tokens=512,

)

Cattogether wraps an exposed model for chatting together AI, Mistral-7B-Instruct-V0.3 to be used like any other Langchain LLM. The 0.2 low temperature maintains the answers on the ground and is repeated, while Max_tokens = 512 leaves space for detailed multi -vertebral responses without a fugitive cost.

from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

chain_type="stuff",

retriever=vector_store.as_retriever(search_kwargs={"k": 4}),

return_source_documents=True,

)

Retrievalqa puts the pieces together: it takes Retriever Faiss (return the best 4 similar pieces) and nourish those excerpts in LLM using a simple “object” template. Preparing Return_source_documents = correct means that each answer will return with the exact clips that you depend on, giving us Q-AND-A ready to be martyred.

QUESTION = "How do I use TogetherEmbeddings inside LangChain, and what model name should I pass?"

result = qa_chain(QUESTION)

print("n🤖 Answer:n", textwrap.fill(result['result'], 100))

print("n📄 Sources:")

for doc in result['source_documents']:

print(" •", doc.metadata['source'])Finally, we send natural inquiries through QA_chain, which recalls the four most relevant pieces, feeds it to the Cattogether model, and restores a brief answer. Then the coordinated response is printed, followed by the URL Source List, which gives us both compound interpretation and transparent martyrdom in one shot.

In conclusion, in approximately fifty lines of the code, we built a full -ranging ring supported by Amnesty International: absorption, inclusion, storage, recovery and speaking. This approach is deliberate units, the FAISS exchange of chroma, or the trading of 80 m, the parameter of the multi -language model together, or connect the Raranker device without touching the rest of the pipeline. What remains fixed is the convenience of the uniform together for background access: rapid inclusion and affordable prices, chat models that have been seized for the following instructions, and a generous free plane that makes the experience painful. Use this template to pave the inner knowledge assistant, a documentation robot for customers, or a personal search assistant.

verify Clap notebook here. Also, do not hesitate to follow us twitter And do not forget to join 90k+ ml subreddit.

Asif Razzaq is the CEO of Marktechpost Media Inc .. As a pioneer and vision engineer, ASIF is committed to harnessing the potential of artificial intelligence for social goodness. His last endeavor is to launch the artificial intelligence platform, Marktechpost, which highlights its in -depth coverage of machine learning and deep learning news, which is technically sound and can be easily understood by a wide audience. The platform is proud of more than 2 million monthly views, which shows its popularity among the masses.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-05-14 07:11:00