Google AI Creates Fictional Folksy Sayings

Google AI creating fictional folksy sayings is a phrase that might once have sounded like science fiction, but it is now rooted in reality, and it is attracting a lot of attention. Are we seeing the rise of machine-made wisdom, or just glossy entertainment wrapped in nostalgia? Since Google introduced its new AI Overview feature, many users have discovered its ability to manufacture convincing but completely fake expressions. If you are interested in how artificial intelligence is shaping our language, and how it may mislead anyone who trusts its answers, read on.

Understanding Google's AI Overview

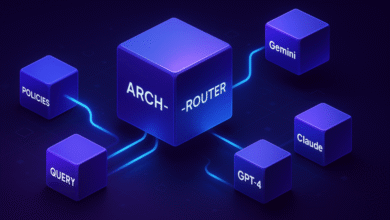

Google's AI Overview was introduced as part of the company's move toward generative search. The feature uses Google's Gemini AI model to provide summarized answers at the top of search results, with the aim of saving users time by sparing them from sifting through irrelevant web pages. Instead of showing a list of links as traditional search does, the AI produces an overview that distills information into conversational responses, drawing on data from different corners of the internet.

The convenience comes at a cost. Within days of the broader rollout in May 2024, users began noticing some bizarre outputs. One viral example involved a response advising people to add glue to pizza sauce to make the cheese stick better, advice that no reputable cooking source would give. The incidents raised questions: What happens when an AI does not know the answer? What does it generate when it tries to "sound human" without actual human insight?

Inventing wisdom: folksy sayings from nowhere

In an attempt to appear more relatable and conversational, Google's AI began peppering its responses with fake expressions that sound like traditional wisdom. For example, when asked to explain the reason for a cat's reaction, it responded with a phrase it presented as established: "As the old proverb says, the cold cat is a happy cat." There is no documented evidence that this saying existed before the AI produced it, yet it feels almost familiar and real.

The issue has become so noticeable that experts have begun combing through AI-generated expressions used in various contexts. Some appeared to blend actual quotes with Southern-style wisdom, while others were outright nonsense made palatable by familiar linguistic patterns. Google tried to make its AI sound more human by simulating informal language, but in doing so it may have accidentally created a folklore generator that produces convincing falsehoods.

Why fake expressions are a bigger concern than jokes gone wrong

At first glance, it may seem harmless, perhaps even humorous, that an AI generates sayings out of thin air. However, once a false expression is repeated by a trusted system like Google, it gains sudden credibility. An unsuspecting person may take the information at face value, assuming it is a widely accepted cultural saying or a fact. The misinformation then spreads, whether by word of mouth or through social media.

Linguists warn that idioms and folk wisdom are deeply tied to culture and experience. When a machine creates faux idioms, it undermines that tradition by introducing false context into the narrative. Over time, this risks changing both the language and what users learn, especially younger generations who may start using these phrases as if they were part of genuine heritage.

Trust in information depends on authenticity. When an AI presents a phrase that "sounds right", people may not stop to check its origins. This is where the crucial difference lies: jokes and errors can be laughed off, but a phrase passed off as age-old truth reshapes knowledge in a counterfeit way.

The technical roots of AI hallucination

The phenomenon of AI generating false but plausible content is known as "hallucination". It often occurs when the system has limited high-quality data on a specific question, or when it tries to fill gaps by creatively bridging fragmented information. Gemini, Google's flagship foundation model, is specifically trained to produce human-like text, which makes it prone to fabricating details in a convincing tone.

Machine learning models such as Gemini work by predicting the most likely next word in a sentence. They do this by analyzing huge datasets, most of them drawn from books, websites, and articles. If that data lacks examples or contains only rare patterns, the model tries to bridge the gap with its own extrapolation. When prompts require the system to be friendly or to "speak like a human", the likelihood of invented expressions increases.

In structured data environments, the algorithm usually works well. But language, unlike numbers or code, is fluid and rich in context. Without understanding cultural significance or historical origin, an AI ends up crafting responses that feel right but are factually incorrect.
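To make next-word prediction concrete, here is a minimal sketch using a toy bigram model. It is not how Gemini works internally: large language models use neural networks trained on far more data. The corpus, the function names, and the invented saying are all hypothetical, chosen only to show how a sentence that appears nowhere in the training text can still look plausible to a model that has seen each of its word-to-word steps.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real models learn from vastly larger datasets.
CORPUS = [
    "a warm cat is a happy cat",
    "a cold day is a long day",
    "the cold cat sat outside",
]


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probability(counts, current, following):
    """Estimate P(following | current) from the raw counts."""
    total = sum(counts[current].values())
    return counts[current][following] / total if total else 0.0


def sequence_probability(counts, sentence):
    """Multiply the next-word probabilities along the sentence."""
    words = sentence.split()
    probability = 1.0
    for current, following in zip(words, words[1:]):
        probability *= next_word_probability(counts, current, following)
    return probability


MODEL = train_bigrams(CORPUS)
INVENTED = "a cold cat is a happy cat"  # hypothetical made-up "proverb"

print(INVENTED in CORPUS)                         # False: never seen as a whole
print(sequence_probability(MODEL, INVENTED) > 0)  # True: every step is familiar
```

The model has no notion of whether a saying is attested anywhere. It only scores how familiar each transition looks, which is why a fluent fabrication can score higher than an awkwardly worded fact.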

How Google responds to criticism

After a flood of backlash, Google acknowledged problems with AI Overview and began removing particularly egregious examples. Engineers are reportedly tightening content filters and changing the instructions used to generate results. Fixes are being rolled out gradually, and Google cautions that although improvements are coming, no AI model is 100% guaranteed to be accurate.

A spokesperson stated that the company remains committed to high-quality information and will continue to invest in preventing misleading content. At the same time, the tech giant urges users to submit feedback when they encounter inaccuracies. These corrections help calibrate outputs and reinforce stricter standards across search experiences.

Despite the fixes, the incident underscores a deeper challenge: AI is very good at sounding confident but has no internal measure of truth. It lacks grounding in reality unless human input trains it otherwise. This raises an important issue for future AI systems that aim to interact with humans both fluently and rigorously.

Implications for SEO, content creators and digital marketers

For those who work in SEO and digital content creation, AI Overview presents both complications and opportunities. On the one hand, if AI summaries dominate user attention, websites may suffer lower click-through rates. People looking for quick information may rely solely on AI-generated snippets, bypassing the deeper content hosted on actual sites.

On the other hand, inaccurate overviews open a door for trustworthy content creators who focus on accuracy and context. By producing well-researched articles, digital publishers can position themselves as reliable voices when AI-generated information falls short. Google says AI Overview draws from reliable sources, so improving your domain authority and backlink profile becomes more important than ever.

Content creators should also watch how AI-generated phrases affect search behavior. If people repeat or search for newly coined expressions, this may give rise to emerging SEO trends. Monitoring these unexpected linguistic shifts offers a competitive edge to blogs and businesses that adapt quickly to new keyword patterns.

What this tells us about language and technology

At the heart of this controversy lies a larger philosophical question: should machines create language that goes beyond human experience? Language is not just a tool; it is a living record of collective history. AI, however advanced, lacks cultural context. It does not know what it is like to sit on a porch with elders telling stories. Yet it is now telling stories of its own.

The merging of linguistic creativity and computational logic makes AI's role in shaping user understanding more powerful than ever. While fictional sayings may seem trivial, they act as warnings about deeper systemic issues. Misinformation does not always spread as an obvious lie; it often comes dressed as wisdom.

For AI to become a responsible contributor to language, strict standards of accuracy, citation, and transparency must be upheld. Users must maintain a healthy level of skepticism, no matter how "folksy" a phrase may sound coming from one of the world's largest technology companies.

2025-05-20 13:48:00