This AI Paper Introduces Group Think: A Token-Level Multi-Agent Reasoning Paradigm for Faster and Collaborative LLM Inference

A prominent area of exploration involves enabling large language models (LLMs) to work collaboratively. Multi-agent systems powered by LLMs are now being examined for their potential to coordinate on challenging problems by splitting tasks and working simultaneously. This direction has gained attention because it can increase efficiency and reduce latency in real-time applications.

A common problem with collaborative LLM systems is sequential, turn-based communication between agents. In such systems, each agent must wait for the others to complete their reasoning steps before proceeding. This slows processing, especially in situations that demand rapid responses. Moreover, agents often duplicate effort or generate inconsistent outputs, as they cannot see the evolving thoughts of their peers during generation. This latency and redundancy reduce the practicality of deploying multi-agent LLMs, especially when time and compute are constrained, such as on edge devices.

Most current solutions have relied on sequential or independently parallel sampling techniques to improve reasoning. Methods like Chain-of-Thought prompting help models solve problems in a structured way but often come with increased inference time. Approaches such as Tree-of-Thoughts and Graph-of-Thoughts expand on this by branching reasoning paths. However, these approaches still do not allow real-time mutual adaptation among agents. Multi-agent setups have explored collaborative methods, but mostly through alternating message exchanges, which again introduces delay. Some advanced systems propose complex dynamic scheduling or role-based configurations, which are not optimized for efficient inference.

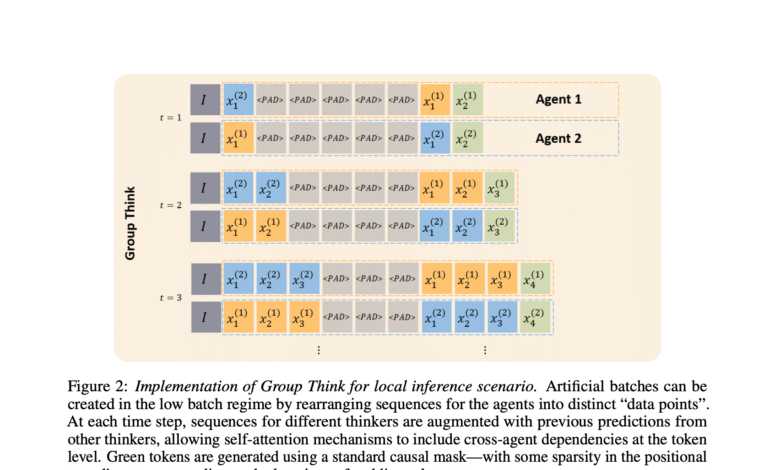

Researchers from MediaTek Research introduced a new method called Group Think. This approach enables multiple reasoning agents within a single LLM to operate concurrently, observing each other's partial outputs at the token level. Each reasoning thread adapts to the evolving thoughts of the others mid-generation. This mechanism reduces duplication and lets an agent shift direction if another thread is better positioned to continue a specific line of reasoning. Group Think is implemented through a token-level attention mechanism that lets each agent attend to previously generated tokens from all agents, supporting real-time collaboration.

The method works by assigning each agent its own sequence of token indices, allowing their outputs to be interleaved in memory. These interleaved tokens are stored in a shared cache accessible to all agents during generation. This design allows efficient attention across reasoning threads without architectural changes to the transformer model. The implementation works both on personal devices and in data centers. On local devices, it makes effective use of idle compute by batching the outputs of multiple agents, even with a batch size of one. In data centers, Group Think allows multiple requests to be processed together, interleaving tokens across agents while maintaining correct attention dynamics.
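To make the indexing idea concrete, here is a minimal Python sketch of one way per-agent token indices and cross-agent visibility could be laid out. It is an illustration under stated assumptions, not the authors' implementation: the function names, the fixed `slot_size` per agent, and the rule that a token sees every agent's earlier-step tokens plus itself are all hypothetical choices made for this example. Prompt tokens, which every agent would see, are left out of the mask for brevity.

```python
def position_index(prompt_len, agent, step, slot_size):
    """Position index for the token that `agent` emits at `step`.

    Assumed layout: after the prompt, each agent owns a contiguous
    slot of `slot_size` indices.
    """
    if not 0 <= step < slot_size:
        raise ValueError("step must fit inside the agent's slot")
    return prompt_len + agent * slot_size + step


def interleaved_order(num_agents, num_steps):
    """Order in which tokens land in the shared cache: step by step, agent by agent."""
    return [(agent, step) for step in range(num_steps) for agent in range(num_agents)]


def can_attend(query, key):
    """Return True if the query token may attend to the key token.

    Both arguments are (agent, step) pairs. Assumed rule: tokens from any
    agent at an earlier step are visible, and a token also sees itself.
    """
    q_agent, q_step = query
    k_agent, k_step = key
    if k_step < q_step:
        return True
    return k_step == q_step and k_agent == q_agent


def build_mask(num_agents, num_steps):
    """Boolean attention mask over generated tokens, in shared-cache order."""
    order = interleaved_order(num_agents, num_steps)
    return [[can_attend(q, k) for k in order] for q in order]


# Two agents, two steps each, after a 10-token prompt with 4-index slots.
mask = build_mask(num_agents=2, num_steps=2)
indices = [position_index(10, agent, step, 4) for agent, step in interleaved_order(2, 2)]
```

In this toy layout the cache order alternates between agents while each agent's position indices stay contiguous within its own slot, which is the property that lets a standard causal transformer be reused without architectural changes.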

Performance tests show that Group Think significantly improves latency and output quality. In enumeration tasks, such as listing 100 distinct names, it reached near-complete results faster than conventional Chain-of-Thought approaches. The speedup was proportional to the number of thinkers. For example, four thinkers reduced latency by a factor of about four. In divide-and-conquer problems, using the Floyd-Warshall algorithm on a five-node graph, four thinkers cut the completion time to half that of a single agent. In programming tasks, Group Think solved code generation challenges more effectively than baseline models. With four or more thinkers, the model produced correct code segments much faster than traditional reasoning models.
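For readers unfamiliar with the algorithm behind the divide-and-conquer benchmark, the following is a plain, single-threaded Python sketch of Floyd-Warshall on a five-node directed graph. The edge weights are invented for illustration and are not taken from the paper, and the sketch does not show how Group Think divides the work among thinkers.

```python
import math

INF = math.inf


def floyd_warshall(weights):
    """All-pairs shortest path lengths for a weighted adjacency matrix.

    `weights[i][j]` is the edge weight from node i to node j,
    or INF when there is no direct edge.
    """
    n = len(weights)
    dist = [row[:] for row in weights]  # copy so the input is not mutated
    for k in range(n):          # allow node k as an intermediate stop
        for i in range(n):
            for j in range(n):
                via_k = dist[i][k] + dist[k][j]
                if via_k < dist[i][j]:
                    dist[i][j] = via_k
    return dist


# Hypothetical five-node directed graph (weights made up for this example).
graph = [
    [0,   3,   INF, 7,   INF],
    [INF, 0,   1,   INF, INF],
    [INF, INF, 0,   2,   5],
    [INF, INF, INF, 0,   1],
    [2,   INF, INF, INF, 0],
]

shortest = floyd_warshall(graph)
```

Here `shortest[0][3]` comes out as 6 rather than the direct edge weight of 7, because the route through nodes 1 and 2 is cheaper.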

This research shows that existing LLMs, even though not explicitly trained for collaboration, can already exhibit emergent group reasoning behaviors under the Group Think setup. In experiments, agents naturally diversified their work to avoid redundancy, often dividing tasks by topic or focus. These findings suggest that Group Think's efficiency and sophistication could be enhanced further with dedicated training on collaborative data.

Check out the paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.


2025-05-24 03:48:00