EnCharge’s Analog AI Chip Promises Low-Power and Precision

Naveen Verma’s lab at Princeton University is like a museum of all the ways engineers have tried to make artificial intelligence ultra-efficient by using analog phenomena instead of digital computing. On one bench lies the most energy-efficient neural-network computer ever made. On another, you will find a memory-based chip that can compute the largest matrix of numbers of any analog AI system so far.

Neither has a commercial future, according to Verma. Put less charitably, this part of his lab is a graveyard.

Analog AI has captured the imagination of chip architects for years. It combines two key concepts that should make machine learning far less energy-intensive. First, it limits the costly movement of bits between memory chips and processors. Second, instead of the 1s and 0s of logic, it uses the physics of flowing current to perform machine learning’s key computation efficiently.

As attractive as the idea has been, the various analog AI schemes have not delivered in a way that could take a real bite out of AI’s energy appetite. Verma would know. He has tried them all.

But when IEEE Spectrum visited a year ago, there was a chip at the back of Verma’s lab that represents some hope for analog AI and for the energy-efficient computing needed to make AI useful and ubiquitous. Instead of calculating with current, the chip sums up charge. It may seem like a minor difference, but it could be the key to overcoming the noise that hinders every other analog AI scheme.

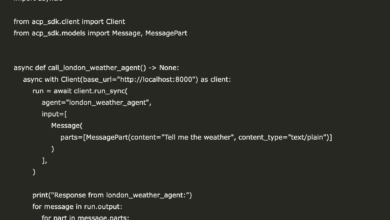

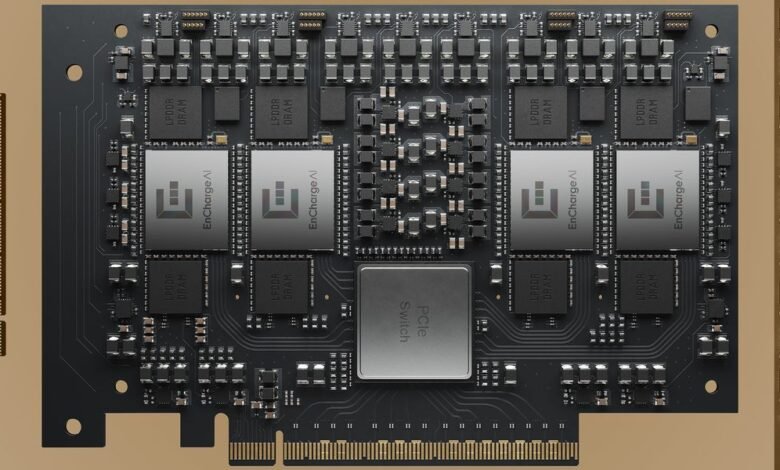

This week, Verma’s startup, EnCharge AI, unveiled the first chip based on this new architecture, the EN100. The startup claims the chip handles a variety of AI workloads with performance per watt up to 20 times better than that of competing chips. It is designed into a single-processor card that delivers 200 trillion operations per second at 8.25 watts, aimed at preserving battery life in AI-capable laptops. In addition, a four-chip card delivering 1,000 trillion operations per second is targeted at AI workstations.

Current and Coincidence

In machine learning, “it turns out, by dumb luck, the main operation we’re doing is matrix multiplies,” says Verma. That basically means taking an array of numbers, multiplying it by another array, and adding up the results of all those multiplications. Early on, engineers noticed a coincidence: Two fundamental rules of electrical engineering can do exactly that. Ohm’s law says that you get current by multiplying voltage and conductance. And Kirchhoff’s current law says that if you have a bunch of currents coming into a point from a bunch of wires, the sum of those currents is what leaves that point. So, basically, each of a set of input voltages pushes current through a resistance (conductance is the inverse of resistance), multiplying the voltage value, and all those currents add up to produce a single value. Math, done.
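The coincidence described above can be sketched in a few lines of Python. This is only an idealized model, not a description of any particular chip: the function names and the voltage and conductance values are made up for illustration, and real devices add the noise discussed later in the article.

```python
# Idealized analog crossbar: weights are conductances (siemens),
# inputs are voltages (volts). All values are illustrative assumptions.

def column_current(voltages, conductances):
    """Ohm's law per device (I = V * G); Kirchhoff's current law sums them."""
    return sum(v * g for v, g in zip(voltages, conductances))

def crossbar_multiply(voltages, conductance_columns):
    """One output current per output wire, i.e. a matrix-vector product."""
    return [column_current(voltages, col) for col in conductance_columns]

inputs = [1.0, 2.0, 3.0]      # input voltages, in volts
weights = [
    [0.5, 0.25, 0.0],         # conductances feeding output wire 0
    [1.0, 0.0, 2.0],          # conductances feeding output wire 1
]
currents = crossbar_multiply(inputs, weights)
print(currents)  # [1.0, 7.0]
```

Each output wire delivers one multiply-and-accumulate result, so the whole matrix-vector product falls out of the circuit physics in a single step.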

Sounds good? Well, it gets better. Much of the data that makes up a neural network consists of “weights,” the values by which the inputs are multiplied. Moving that data from memory into a processor’s logic to do the work is responsible for a large fraction of the energy that GPUs expend. Instead, in most analog AI schemes, the weights are stored in one of several types of nonvolatile memory as a conductance value (the resistances above). Because the weight data is already where it needs to be for the computation, it does not have to be moved as much, saving a pile of energy.

The combination of free math and stationary data promises calculations that need just thousandths of a trillionth of a joule of energy. Unfortunately, that is not nearly what analog AI efforts have delivered.

The Problem With Current

The fundamental problem with any kind of analog computing has always been the signal-to-noise ratio, and analog AI has noise by the truckload. The signal, in this case the sum of all those multiplications, tends to be overwhelmed by the many possible sources of noise.

“The problem is that semiconductor devices are messy things,” says Verma. Suppose you have an analog neural network in which the weights are stored as conductances in individual RRAM cells. Such weight values are stored by applying a relatively high voltage across the RRAM cell for a defined period of time. The trouble is that you can apply exactly the same voltage to two cells for the same amount of time, and those cells will end up with slightly different conductance values. Worse, those conductance values may change with temperature.

The differences may be small, but remember that the operation adds up many multiplications, so the noise is magnified. Worse, the resulting current is then converted into a voltage that serves as the input to the next layer of the neural network, a step that adds even more noise.
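A small simulation can illustrate how per-device variation adds up across a long sum. It is a sketch under stated assumptions, not a model of real RRAM: each stored conductance is assumed to miss its target by an independent Gaussian relative error (`spread`), and temperature drift and converter noise are ignored. The function names are hypothetical.

```python
import random

def ideal_sum(voltages, conductances):
    """Noise-free multiply-and-accumulate."""
    return sum(v * g for v, g in zip(voltages, conductances))

def noisy_sum(voltages, conductances, spread, rng):
    """Each conductance is off target by a random relative error (assumed Gaussian)."""
    return sum(v * g * (1.0 + rng.gauss(0.0, spread))
               for v, g in zip(voltages, conductances))

def rms_error(n_terms, spread, trials=200, seed=0):
    """Root-mean-square error of a sum of n_terms unit-valued products."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    v = [1.0] * n_terms
    g = [1.0] * n_terms
    exact = ideal_sum(v, g)
    total = 0.0
    for _ in range(trials):
        total += (noisy_sum(v, g, spread, rng) - exact) ** 2
    return (total / trials) ** 0.5

for n in (16, 256, 1024):
    print(n, rms_error(n, spread=0.05))
```

Under these assumptions the absolute error grows as more products are summed, which is why a device mismatch that looks negligible in one cell becomes significant across a large matrix.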

Researchers have attacked this problem from both a computer science perspective and a device physics one. Hoping to compensate for the noise, some have invented ways to bake knowledge of the devices’ physical foibles into their neural network models. Others have focused on making devices that behave as predictably as possible. IBM, which has done extensive research in this area, does both.

Such techniques are competitive, if not yet commercially successful, in smaller-scale systems: chips meant to provide low-power machine learning to devices at the edges of Internet of Things networks. Early entrant Mythic AI has produced more than one generation of its analog AI chip, but it is competing in a field alongside low-power digital chips.

The EN100 card for PCs is based on the new analog AI chip architecture. Credit: EnCharge AI

EnCharge’s solution strips out the noise by measuring the amount of charge, instead of the flow of charge, in machine learning’s multiply-and-accumulate routine. In traditional analog AI, multiplication depends on the relationship among voltage, conductance, and current. In this new scheme, it depends on the relationship among voltage, capacitance, and charge, where charge basically equals capacitance times voltage.

Why is this difference important? It comes down to the component that does the multiplication. Instead of using some finicky, vulnerable device such as RRAM, EnCharge uses capacitors.

A capacitor is basically two conductors sandwiching an insulator. A voltage difference between the conductors causes charge to accumulate on one of them. What is key about capacitors for the purpose of machine learning is that their value, the capacitance, is determined by their size. (More conductor area or less space between the conductors means more capacitance.)
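These relationships can be written compactly. The charge relation is the one the article states; the second expression is the textbook ideal parallel-plate approximation, used here only as an assumption to show why geometry sets the value. The article does not describe the actual shape of EnCharge’s capacitors.

```latex
Q = C\,V, \qquad C \approx \frac{\varepsilon A}{d}
```

Here $Q$ is the stored charge, $V$ the voltage across the capacitor, $A$ the overlapping conductor area, $d$ the spacing between the conductors, and $\varepsilon$ the permittivity of the insulator. For comparison, the current-based schemes rely on $I = G\,V$, where the conductance $G$ depends on the state of a memory device rather than on geometry.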

“The only thing they depend on is geometry, basically the space between wires,” says Verma. “And that’s the one thing you can control very, very well in CMOS technologies.” EnCharge builds an array of precisely valued capacitors in the layers of copper interconnect above the silicon of its processors.

The data that makes up most of a neural network model, the weights, is stored in an array of digital memory cells, each connected to a capacitor. The data that the neural network is analyzing is then multiplied by the weight bits using simple logic built into the cell, and the results are stored as charge on the capacitors. Then the array switches into a mode in which all the charges from the multiplication results accumulate, and the result is digitized.
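The sequence of multiply in the cell, store as charge, accumulate, and digitize can be sketched as follows. This is a simplified illustration, not EnCharge’s circuit: it assumes 1-bit inputs and weights, uses a bitwise AND as a stand-in for the cell’s “simple logic,” and treats the capacitors as identical and the converter as ideal. All names are hypothetical.

```python
def cell_products(input_bits, weight_bits):
    """Per-cell 1-bit multiply; AND is an assumed stand-in for the cell logic."""
    return [x & w for x, w in zip(input_bits, weight_bits)]

def shared_voltage(products, v_ref=1.0, unit_cap=1.0):
    """Charge each capacitor to v_ref or 0 volts, then connect them together.

    Total charge Q = sum(C * V) is conserved, so the shared voltage is
    the total charge divided by the total capacitance.
    """
    total_charge = sum(unit_cap * v_ref * p for p in products)
    total_cap = unit_cap * len(products)
    return total_charge / total_cap

def digitize(voltage, n_cells, v_ref=1.0):
    """Idealized converter: recover the integer count of 1-valued products."""
    return round(voltage / v_ref * n_cells)

inputs = [1, 0, 1, 1, 0, 1, 1, 0]
weights = [1, 1, 0, 1, 0, 1, 1, 1]
products = cell_products(inputs, weights)
v_out = shared_voltage(products)
print(products, v_out, digitize(v_out, len(products)))
# [1, 0, 0, 1, 0, 1, 1, 0] 0.5 4
```

In this model the accumulated result depends only on the ratio of capacitances, which is the reason precisely matched capacitors matter more than any single device’s absolute value.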

Although the initial invention, which dates back to 2017, was a big moment for Verma’s lab, he says the basic concept is quite old. “It’s called switched-capacitor operation; it turns out we’ve been doing it for decades,” he says. It is used, for example, in high-precision analog-to-digital converters. “Our innovation was figuring out how you can use it in an architecture that does in-memory computing.”

Competition

Verma’s lab and EnCharge spent years proving that the technology was programmable and scalable, and co-optimizing it with an architecture and software that suit AI needs that differ greatly from what they were in 2017. The resulting products are with early-access developers now, and the company, which recently raised US $100 million from Samsung Venture and others, plans another early-access round.

But EnCharge is entering a competitive field, and among the competitors is the big kahuna, NVIDIA. At its big developer event in March, GTC, NVIDIA announced plans for a PC product built around its GB10 CPU-GPU combination and a workstation built around the upcoming GB300.

There will also be plenty of competition in the low-power space. Some competitors even use a form of in-memory computing. D-Matrix and Axelera, for example, took part of analog AI’s promise, embedding the memory in the computing, but they do everything digitally. Each has developed custom SRAM memory cells that both store and multiply, and they perform the summation digitally as well. There is even at least one more-traditional analog AI startup in the mix.

Verma is, unsurprisingly, optimistic. The new technology “means advanced, secure, and personalized AI can run locally, without relying on cloud infrastructure,” he said in a statement. “We hope this will radically expand what you can do with AI.”

2025-06-02 14:00:00