Yandex Releases Alchemist: A Compact Supervised Fine-Tuning Dataset for Enhancing Text-to-Image (T2I) Model Quality

Despite substantial progress in text-to-image (T2I) generation brought about by models such as DALL-E 3, Imagen 3, and Stable Diffusion 3, achieving consistent output quality, both in aesthetics and in prompt alignment, remains a persistent challenge. While large-scale pretraining provides general knowledge, it is not sufficient to achieve high aesthetic quality and alignment. Supervised fine-tuning (SFT) serves as a critical post-training step, but its effectiveness depends heavily on the quality of the fine-tuning dataset.

Current public datasets used for SFT either target narrow visual domains (such as anime or specific art genres) or rely on basic heuristic filters applied to web-scale data. Human-led curation does not scale and often fails to identify the samples that yield the greatest improvements. Moreover, recent T2I models use internal proprietary datasets with minimal transparency, which limits the reproducibility of results and slows progress in the field.

Approach: Model-Guided Dataset Curation

To mitigate these issues, Yandex released Alchemist, a publicly available general-purpose SFT dataset consisting of 3,350 carefully selected image-text pairs. Unlike conventional datasets, Alchemist is built using a novel methodology that leverages a pre-trained diffusion model as an estimator of sample quality. This approach allows the selection of training data with a high impact on generative model performance without relying on subjective human labeling or simple aesthetic scoring.

Alchemist is designed to improve the output quality of T2I models through targeted fine-tuning. The release also includes fine-tuned versions of five publicly available Stable Diffusion models. The dataset and the models are accessible on Hugging Face under an open license. More about the methodology and experiments can be found in the preprint.

Technical Design: Filtering Pipeline and Dataset Characteristics

The construction of Alchemist involves a multi-stage filtering pipeline that starts from about 10 billion web-sourced images. The pipeline is organized as follows:

- Initial filtering: Removal of NSFW content and low-resolution images (threshold: above 1024 × 1024 pixels).

- Coarse quality filter: Application of classifiers to exclude images with compression artifacts, motion blur, watermarks, and other defects. These classifiers were trained on standard image quality assessment (IQA) datasets such as KonIQ-10k and PIPAL.

- Deduplication and IQA-based pruning: SIFT-like features are used to cluster similar images, retaining only the high-quality ones. Images are further scored using the TOPIQ model, ensuring that clean samples are retained.

- Diffusion-based selection: The key contribution is the use of a pre-trained diffusion model's cross-attention activations to rank images. A scoring function identifies samples that strongly activate features associated with visual complexity, aesthetic appeal, and stylistic richness. This enables the selection of samples most likely to enhance downstream model performance.

- Caption rewriting: The final selected images are re-captioned using a vision-language model fine-tuned to produce prompt-style textual descriptions. This step ensures better alignment and usability in SFT workflows.

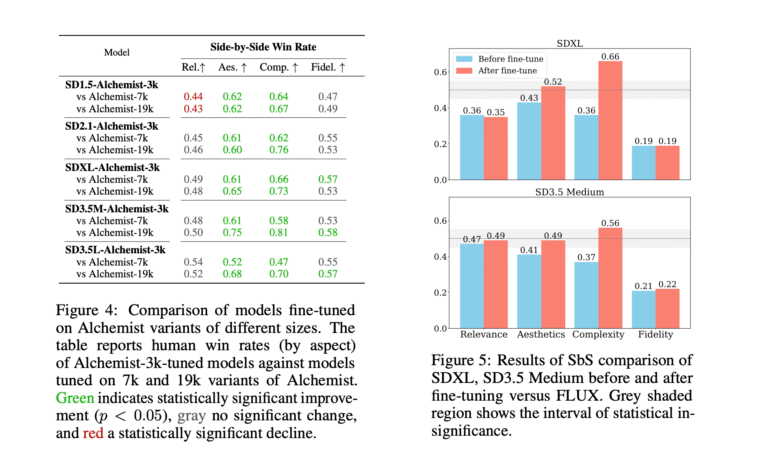

Through ablation studies, the authors determine that increasing the dataset size beyond 3,350 samples (for example, to 7k or 19k) results in lower quality of the fine-tuned models, reinforcing the value of targeted, high-quality data over raw volume.

Results Across Multiple T2I Models

Alchemist's effectiveness was evaluated across five Stable Diffusion variants: SD1.5, SD2.1, SDXL, SD3.5 Medium, and SD3.5 Large. Each model was compared in three configurations: (1) fine-tuned on the Alchemist dataset, (2) fine-tuned on a size-matched subset of LAION-Aesthetics v2, and (3) the respective baseline.
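
A size-matched comparison subset can be drawn with a seeded random sample so that the control set has exactly as many items as Alchemist. The article does not say how the LAION-Aesthetics v2 subset was chosen, so uniform sampling, the seed, and the `size_matched_subset` helper below are assumptions made for illustration; the pool of ids is a hypothetical stand-in for the larger dataset.

```python
import random

ALCHEMIST_SIZE = 3350  # dataset size stated in the article


def size_matched_subset(pool_ids, size=ALCHEMIST_SIZE, seed=0):
    """Draw a reproducible uniform random subset of `size` ids from a pool."""
    if size > len(pool_ids):
        raise ValueError("pool is smaller than the requested subset")
    rng = random.Random(seed)  # local generator keeps the draw reproducible
    return rng.sample(pool_ids, size)


# Hypothetical pool of 100,000 sample ids standing in for a larger dataset.
pool = [f"sample-{i}" for i in range(100_000)]
subset = size_matched_subset(pool)
```

Matching the sample count removes dataset size as a confounding factor, so any difference between the two fine-tuned models can be attributed to which samples were selected.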

Human evaluation: Expert annotators performed side-by-side assessments across four criteria: text-image relevance, aesthetic quality, image complexity, and fidelity. Alchemist-tuned models showed statistically significant improvements in aesthetic and complexity scores, often outperforming both the baselines and the LAION-Aesthetics-tuned versions by margins of 12 to 20%. Importantly, text-image relevance remained stable, suggesting that prompt alignment was not negatively affected.
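
One common way to summarize side-by-side votes is a win rate plus an exact sign test against a 50/50 null hypothesis. The article does not state which statistical test the authors used, so the sketch below is only an illustration of the general idea; the function names are hypothetical and ties are assumed to be excluded before counting.

```python
from math import comb


def win_rate(wins, losses):
    """Share of decisive comparisons won by the candidate model (ties excluded)."""
    return wins / (wins + losses)


def sign_test_p_value(wins, losses):
    """Two-sided exact binomial test against a 50/50 null hypothesis."""
    n = wins + losses
    k = max(wins, losses)
    # Probability of a result at least as lopsided as observed, in one direction.
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # Doubling covers both directions; cap at 1.0 for perfectly balanced counts.
    return min(1.0, 2 * tail)
```

With made-up counts, `win_rate(60, 40)` gives the share of decisive votes won, and `sign_test_p_value(60, 40)` indicates how surprising that split would be if annotators had no real preference between the two models.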

Automated metrics: Across metrics such as FD-DINOv2, CLIP Score, ImageReward, and HPS-v2, the Alchemist-tuned models generally scored higher than their counterparts. Notably, the improvements were more consistent against the size-matched LAION-Aesthetics-tuned models than against the baseline models.

Dataset size ablation: Fine-tuning with larger variants of Alchemist (7k and 19k samples) led to lower performance, which confirms that stricter filtering and higher per-sample quality matter more than dataset size.

Yandex has used the dataset to train its text-to-image generative model, YandexART v2.5, and plans to continue leveraging it for future model updates.

Conclusion

Alchemist provides a well-defined and empirically validated path to improving the quality of text-to-image generation through supervised fine-tuning. The approach emphasizes sample quality over scale and introduces a replicable methodology for dataset construction without reliance on proprietary tools.

While the improvements are most notable in perceptual attributes such as aesthetics and image complexity, the framework also highlights the trade-offs that arise in fidelity, particularly for newer base models that have already been optimized through internal SFT. Nevertheless, Alchemist establishes a new standard for general-purpose SFT datasets and offers a valuable resource for researchers and developers working to advance the output quality of generative vision models.

Check out the paper and the Alchemist dataset on Hugging Face. Thanks to the Yandex team for the thought leadership and resources for this article.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, which illustrates its popularity among readers.


2025-06-09 18:42:00