Internal Coherence Maximization (ICM): A Label-Free, Unsupervised Training Framework for LLMs

Post-training methods for pre-trained language models (LMs) depend on human supervision, in the form of demonstrations or preference feedback, to specify desired behaviors. However, this approach faces critical limitations as tasks and model behaviors become more complex. Human supervision is unreliable in these scenarios: LMs learn to imitate mistakes in demonstrations or exploit flaws inherent in feedback systems. The core challenge is training LMs on tasks that exceed human ability to provide reliable demonstrations or evaluations. Recent research has identified diverse failure modes, including reward hacking of human-designed supervision signals or of real human raters themselves.

Limitations of Human Supervision in LLM Post-Training

Researchers have explored several ways to scale beyond human supervision. One standard method uses high-quality verifiable rewards, such as matching model outputs against ground-truth solutions in mathematical domains. Although there is evidence that pre-trained base models already have strong latent capabilities for downstream tasks, with post-training adding only minimal improvements, effectively eliciting those capabilities remains difficult. Contrast-Consistent Search (CCS) is an unsupervised elicitation approach that uses logical consistency to find latent knowledge without supervision. However, CCS underperforms supervised approaches and often fails to identify knowledge because other salient features also satisfy its consistency properties.
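CCS can be made concrete with a short sketch. The version below follows the objective from the original CCS work as we understand it: a linear probe maps a hidden state to a probability, and the loss asks that a statement and its negation receive complementary probabilities (consistency) while avoiding the uninformative answer of 0.5 for both (confidence). The inputs are toy numbers, and details such as normalizing hidden states and actually training the probe are left out.

```python
import math
from typing import Sequence


def probe(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear probe on a hidden-state vector, squashed to a probability (numerically stable sigmoid)."""
    z = sum(f * w for f, w in zip(features, weights)) + bias
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def ccs_loss(p_pos: Sequence[float], p_neg: Sequence[float]) -> float:
    """CCS-style objective averaged over statements.

    p_pos[i]: probe output on statement i phrased as true (x_i+).
    p_neg[i]: probe output on the same statement phrased as false (x_i-).
    """
    if len(p_pos) != len(p_neg) or not p_pos:
        raise ValueError("need equal-length, non-empty probability lists")
    total = 0.0
    for pp, pn in zip(p_pos, p_neg):
        consistency = (pp - (1.0 - pn)) ** 2  # p(x+) should equal 1 - p(x-)
        confidence = min(pp, pn) ** 2          # penalizes the trivial p(x+) = p(x-) = 0.5
        total += consistency + confidence
    return total / len(p_pos)


print(ccs_loss([1.0], [0.0]))  # → 0.0
print(ccs_loss([0.5], [0.5]))  # → 0.25
```

Any probe that gives complementary, confident outputs drives this loss toward zero whether or not it tracks truth, which is one way to see why CCS can latch onto other salient features that satisfy the same consistency properties.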

Introducing Internal Coherence Maximization (ICM)

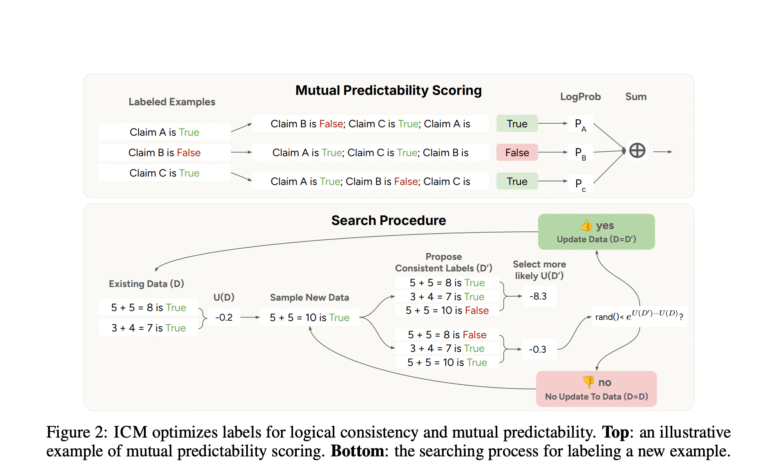

Researchers from Anthropic, Schmidt Sciences, Constellation, New York University, George Washington University, and independent researchers have proposed Internal Coherence Maximization (ICM), which fine-tunes pre-trained models on their own generated labels without using any provided labels. ICM does this by searching for label sets that are both logically consistent and mutually predictable according to the pre-trained model. Because identifying the optimal label set is computationally intractable, ICM uses a search algorithm inspired by simulated annealing to approximately maximize the objective. The method matches the performance of training on golden labels on TruthfulQA and GSM8K, and outperforms training on crowdsourced human labels on Alpaca.
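To make the objective concrete, here is a toy sketch of a coherence score in the spirit of ICM. As we read the paper's description, the score rewards mutual predictability (how well the model predicts each label when all the other labeled examples are shown in context) and penalizes logical inconsistencies between labels, with a weight alpha trading the two off. The exact formula, the alpha value, and the helpers toy_log_prob and toy_conflicts are illustrative assumptions, not the authors' code: the toy "model" simply favors the majority label in its context, and the conflict rule mimics a GSM8K-verification style constraint.

```python
import math
from typing import Callable, Sequence

Example = tuple[str, int]  # (input text, label): 1 = "correct", 0 = "incorrect"

# Hypothetical stand-in for the pre-trained model: log P(label | x, other labeled examples in context).
LogProbFn = Callable[[str, int, Sequence[Example]], float]
# Hypothetical logical-consistency check: True if two labeled examples contradict each other.
ConflictFn = Callable[[Example, Example], bool]


def mutual_predictability(data: Sequence[Example], log_prob: LogProbFn) -> float:
    """Sum over i of log P(y_i | x_i, all other labeled examples), i.e. leave-one-out prediction."""
    total = 0.0
    for i, (x, y) in enumerate(data):
        context = [ex for j, ex in enumerate(data) if j != i]
        total += log_prob(x, y, context)
    return total


def inconsistency(data: Sequence[Example], conflicts: ConflictFn) -> int:
    """Number of unordered pairs of labeled examples that contradict each other."""
    return sum(
        1
        for i in range(len(data))
        for j in range(i + 1, len(data))
        if conflicts(data[i], data[j])
    )


def icm_score(data: Sequence[Example], log_prob: LogProbFn, conflicts: ConflictFn, alpha: float) -> float:
    """Assumed objective form: alpha * mutual predictability - inconsistency count."""
    return alpha * mutual_predictability(data, log_prob) - inconsistency(data, conflicts)


# --- Toy demo (all hypothetical) ---
def toy_log_prob(x: str, y: int, context: Sequence[Example]) -> float:
    # A "model" that predicts the majority label in context, with add-one smoothing.
    agree = sum(1 for _, label in context if label == y)
    return math.log((agree + 1) / (len(context) + 2))


def toy_conflicts(a: Example, b: Example) -> bool:
    # Two different final answers to the same question cannot both be labeled correct.
    # Inputs are encoded as "question|answer".
    qa, ans_a = a[0].split("|")
    qb, ans_b = b[0].split("|")
    return qa == qb and ans_a != ans_b and a[1] == 1 and b[1] == 1


consistent = [("q1|4", 1), ("q1|5", 0)]
contradictory = [("q1|4", 1), ("q1|5", 1)]
print(icm_score(consistent, toy_log_prob, toy_conflicts, alpha=1.0))
print(icm_score(contradictory, toy_log_prob, toy_conflicts, alpha=1.0))
```

Under this majority-vote toy model the contradictory labeling is more "predictable", but it pays a penalty of 1 in the inconsistency term; alpha controls how those two pressures are balanced.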

How ICM Works

The ICM algorithm follows an iterative three-step process: (a) the system samples a new unlabeled example from the dataset for potential inclusion, (b) it determines the optimal label for this example while simultaneously resolving any logical inconsistencies, and (c) it decides whether to accept the newly labeled example based on the scoring function. ICM is evaluated on three datasets: TruthfulQA for truthfulness, GSM8K-verification for mathematical correctness, and Alpaca for helpfulness and harmlessness. The researchers used four baselines in their experiments: zero-shot, zero-shot (chat), golden labels, and human labels. The experiments used two open-weight models, Llama 3.1 8B and 70B, and two proprietary models, Claude 3 Haiku and Claude 3.5 Haiku.
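ICM's search can be sketched as a loop that samples an example, proposes a label for it, and then keeps or discards the change using a simulated-annealing acceptance rule. This is a simplified illustration, not the authors' implementation: step (b) here greedily picks whichever label maximizes a supplied score instead of querying the model and repairing inconsistencies, and the cooling schedule, its constants, and the toy_score function are assumptions chosen for the demo.

```python
import math
import random
from typing import Callable

Labels = dict[int, int]  # example index -> assigned label (0 or 1)
ScoreFn = Callable[[Labels], float]  # e.g. an ICM-style coherence score over the labeled set


def temperature(step: int, t0: float = 10.0, t_min: float = 0.01, beta: float = 0.99) -> float:
    """Assumed logarithmic cooling schedule; the constants are illustrative only."""
    return max(t_min, t0 / (1.0 + beta * math.log(step + 1)))


def accept(delta: float, temp: float, rng: random.Random) -> bool:
    """Metropolis rule: always keep improvements, keep regressions with prob exp(delta / temp)."""
    return delta > 0 or rng.random() < math.exp(delta / temp)


def icm_search(n_examples: int, score: ScoreFn, steps: int, seed: int = 0) -> Labels:
    rng = random.Random(seed)
    labels: Labels = {}
    for step in range(steps):
        # (a) Sample an example to label (or relabel).
        i = rng.randrange(n_examples)
        # (b) Propose a label. Simplification: pick whichever label maximizes the score,
        #     instead of querying the model and repairing logical inconsistencies.
        proposals = []
        for y in (0, 1):
            trial = dict(labels)
            trial[i] = y
            proposals.append((score(trial), trial))
        new_score, proposal = max(proposals, key=lambda p: p[0])
        # (c) Accept or reject the updated label set.
        if accept(new_score - score(labels), temperature(step), rng):
            labels = proposal
    return labels


# Toy demo: a hypothetical score that rewards agreement with a hidden labeling.
truth = [1, 0, 1, 1, 0]


def toy_score(labels: Labels) -> float:
    return sum(1.0 if truth[i] == y else -1.0 for i, y in labels.items())


print(icm_search(len(truth), toy_score, steps=200))
```

This toy score never produces a worse proposal, so the acceptance rule never rejects here. With a real coherence score, adding or relabeling an example can lower the score, and that is where occasionally accepting a regression at high temperature helps the search escape poor local optima.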

Benchmark Performance and Model Comparisons

In superhuman capability elicitation tasks, ICM matches the accuracy of golden supervision at 80%, outperforming the estimated human accuracy of 60%. Using ICM-generated reward models, the researchers trained an assistant chatbot without any human supervision. The unsupervised reward model achieves 75.0% accuracy on RewardBench, compared with 72.2% for a human-supervised alternative trained on production data. Both the unsupervised and the human-supervised reward models were then used to train two policies with RL to create helpful, harmless, and honest assistants. The policy trained with the unsupervised reward model achieves a 60% win rate. However, both policies still lag behind the publicly released Claude 3.5 Haiku, which achieves a 92% win rate.
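The two metrics in this section can be illustrated with a generic sketch: reward-model accuracy as the fraction of preference pairs where the chosen response scores higher than the rejected one, and win rate as the share of head-to-head comparisons a policy wins. The (prompt, chosen, rejected) format, the tie convention, and the naive length-based reward function are assumptions for illustration; RewardBench and the paper's win-rate evaluation follow their own protocols, which may differ.

```python
from typing import Callable, Sequence

# Hypothetical reward model interface: maps (prompt, response) to a scalar score.
RewardFn = Callable[[str, str], float]


def pairwise_accuracy(reward: RewardFn, pairs: Sequence[tuple[str, str, str]]) -> float:
    """Fraction of (prompt, chosen, rejected) triples where the RM scores `chosen` higher."""
    if not pairs:
        raise ValueError("no pairs to evaluate")
    hits = sum(
        1 for prompt, chosen, rejected in pairs
        if reward(prompt, chosen) > reward(prompt, rejected)
    )
    return hits / len(pairs)


def win_rate(outcomes: Sequence[str]) -> float:
    """Share of head-to-head comparisons won; ties count as half a win (an assumed convention)."""
    if not outcomes:
        raise ValueError("no outcomes")
    points = {"win": 1.0, "tie": 0.5, "loss": 0.0}
    return sum(points[o] for o in outcomes) / len(outcomes)


# Toy usage with a deliberately naive RM that prefers longer responses.
toy_pairs = [
    ("Explain ICM.", "It searches for coherent self-generated labels.", "No idea."),
    ("Say hi.", "hi", "hello there"),
]
print(pairwise_accuracy(lambda p, r: float(len(r)), toy_pairs))  # → 0.5
print(win_rate(["win", "loss", "tie", "win"]))  # → 0.625
```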

Conclusion and Future Outlook

This paper introduces Internal Coherence Maximization (ICM), an unsupervised method for fine-tuning pre-trained LMs on self-generated labels. The method consistently matches golden supervision performance and surpasses crowdsourced human supervision across GSM8K-verification, TruthfulQA, and Alpaca reward modeling tasks. However, ICM's limitations include its dependence on concept salience within pre-trained models and its ineffectiveness with long inputs due to context-window constraints. As LMs advance beyond human evaluation capabilities, ICM offers a promising alternative to traditional RLHF for aligning models with human intent without being bounded by the limits of human supervision.

Check out the paper. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final-year undergraduate at IIT Kharagpur. A technology enthusiast, he explores the practical applications of AI, with a focus on understanding the impact of AI technologies and their real-world implications. He aims to explain complex AI concepts in a clear and accessible way.

2025-06-14 20:28:00