Getting Started with Microsoft’s Presidio: A Step-by-Step Guide to Detecting and Anonymizing Personally Identifiable Information PII in Text

In this tutorial, we will explore how to use Microsoft’s Presidio, an open source framework designed to discover personal identification information (PII) in the free text. Pressidio is designed on the effective Space NLP

We will cover how:

- Prepare and install the necessary presidio packages

- Discover common Pii entities such as names, phone numbers, and credit card details

- Determine the knowledge of the fields of the field (for example, Pan, Aadhaar)

- Create and registration unknown persons (such as retail or pseudonym)

- Reuse the appointments of its identity to restore the fixed identity

Install libraries

To start Presidio, you will need to install the following key libraries:

- Presidio-aalyzer: This is the basic library responsible for discovering Pii entities in the text using integrated and dedicated identifiers.

- Useful This library provides tools to hide identity (for example, Redct, Replic, retail) discovered using composition factors.

- Space NLP model (en_core_web_lg): Pressidio Space is used under the hood for the tasks of natural language processing, such as identifying the name called. The en_core_web_lg model provides highly accurate results to discover PII in English.

pip install presidio-analyzer presidio-anonymizer

python -m spacy download en_core_web_lgYou may need to restart the session to install libraries, if you use JuPYTER/Colab.

Brezia analyst

The basic PII discovery

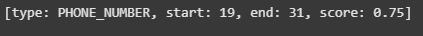

In this block, prepare the Prasidio Analyzer engine and we do a basic analysis to detect the US phone number from the text of a sample. We also suppress the lower level registry warnings from the PRSIDIO Library to remove cleaner.

Analyzrengine downloads the Space NLP pipeline and recognizes the pre -identified to survey the input text of the sensitive entities. In this example, we define phone_number as a targeted entity.

import logging

logging.getLogger("presidio-analyzer").setLevel(logging.ERROR)

from presidio_analyzer import AnalyzerEngine

# Set up the engine, loads the NLP module (spaCy model by default) and other PII recognizers

analyzer = AnalyzerEngine()

# Call analyzer to get results

results = analyzer.analyze(text="My phone number is 212-555-5555",

entities=["PHONE_NUMBER"],

language="en")

print(results)Create a dedicated PII with a list of rejection (academic addresses)

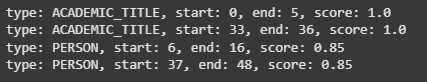

This code block shows how to create a PII identifier for Pressidio using a simple rejection menu, ideal for detecting fixed terms such as academic titles (for example, “dr.”, “Prof.”). The identifier is added to the PRSIDIO record and the analyst uses to survey the entry text.

While this tutorial covers the rejection list only, Prasidio also supports Regex patterns based on Regex, NLP models, and external identification. For those advanced methods, see official documents: add custom identifiers.

Brezia analyst

The basic PII discovery

In this block, prepare the Prasidio Analyzer engine and we do a basic analysis to detect the US phone number from the text of a sample. We also suppress the lower level registry warnings from the PRSIDIO Library to remove cleaner.

Analyzrengine downloads the Space NLP pipeline and recognizes the pre -identified to survey the input text of the sensitive entities. In this example, we define phone_number as a targeted entity.

import logging

logging.getLogger("presidio-analyzer").setLevel(logging.ERROR)

from presidio_analyzer import AnalyzerEngine

# Set up the engine, loads the NLP module (spaCy model by default) and other PII recognizers

analyzer = AnalyzerEngine()

# Call analyzer to get results

results = analyzer.analyze(text="My phone number is 212-555-5555",

entities=["PHONE_NUMBER"],

language="en")

print(results)

Create a dedicated PII with a list of rejection (academic addresses)

This code block shows how to create a PII identifier for Pressidio using a simple rejection menu, ideal for detecting fixed terms such as academic titles (for example, “dr.”, “Prof.”). The identifier is added to the PRSIDIO record and the analyst uses to survey the entry text.

While this tutorial covers the rejection list only, Prasidio also supports Regex patterns based on Regex, NLP models, and external identification. For those advanced methods, see official documents: add custom identifiers.

from presidio_analyzer import AnalyzerEngine, PatternRecognizer, RecognizerRegistry

# Step 1: Create a custom pattern recognizer using deny_list

academic_title_recognizer = PatternRecognizer(

supported_entity="ACADEMIC_TITLE",

deny_list=["Dr.", "Dr", "Professor", "Prof."]

)

# Step 2: Add it to a registry

registry = RecognizerRegistry()

registry.load_predefined_recognizers()

registry.add_recognizer(academic_title_recognizer)

# Step 3: Create analyzer engine with the updated registry

analyzer = AnalyzerEngine(registry=registry)

# Step 4: Analyze text

text = "Prof. John Smith is meeting with Dr. Alice Brown."

results = analyzer.analyze(text=text, language="en")

for result in results:

print(result)

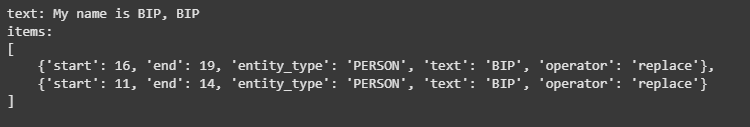

Pressidio anonymous

This code block shows how to use the Prasidio Anonymister engine to strain the detection of Pii entities in a specific text. In this example, we manually define two entities of two people using identification recognition, and simulation of the output from the Presidio analyst. These entities represent the names of “Bond” and “James Bond” in the text of the sample.

We use an “replacement” operator to replace both names with the value of the deputy (“bip”), an unidentified identity effectively for sensitive data. This is done by passing the compatorconfig operator with the strategy of not revealing its identity (replacement) to the unknown anonymous.

This style can be easily expanded to apply other compact processes such as “Redct” or “HASH” or dedicated nickname strategies.

from presidio_anonymizer import AnonymizerEngine

from presidio_anonymizer.entities import RecognizerResult, OperatorConfig

# Initialize the engine:

engine = AnonymizerEngine()

# Invoke the anonymize function with the text,

# analyzer results (potentially coming from presidio-analyzer) and

# Operators to get the anonymization output:

result = engine.anonymize(

text="My name is Bond, James Bond",

analyzer_results=[

RecognizerResult(entity_type="PERSON", start=11, end=15, score=0.8),

RecognizerResult(entity_type="PERSON", start=17, end=27, score=0.8),

],

operators={"PERSON": OperatorConfig("replace", {"new_value": "BIP"})},

)

print(result)

Learn about the designated entity, hide the retail -based identity, and restore the identity consistent with Prasidio

In this example, we take Pressidio a step forward by showing:

- ✅ Determine the custom Pii entities (for example, Aadhaar and PAN numbers) using the Regex based Pattern

- 🔐 anonymous sensitive data using a retailer -based operator (Reanonomizer)

- ♻ Re -detection of identity with the same values constantly through multiple texts by preserving

We carry out a custom re -discrimination operator verify whether a specific value has already been divided and reused with the same output to maintain consistency. This is especially useful when the anonymous data needs to keep some auxiliary tools – for example, linking records with a borrowed name.

Ranonomizer anonymous identification (Reanonomizer)

This block determines a dedicated operator called Reanonymizer who uses Hashing Sha-256 to unknown entities and ensures that the same inputs always get the same unknown output by storing retail in shared maps.

from presidio_anonymizer.operators import Operator, OperatorType

import hashlib

from typing import Dict

class ReAnonymizer(Operator):

"""

Anonymizer that replaces text with a reusable SHA-256 hash,

stored in a shared mapping dict.

"""

def operate(self, text: str, params: Dict = None) -> str:

entity_type = params.get("entity_type", "DEFAULT")

mapping = params.get("entity_mapping")

if mapping is None:

raise ValueError("Missing `entity_mapping` in params")

# Check if already hashed

if entity_type in mapping and text in mapping[entity_type]:

return mapping[entity_type][text]

# Hash and store

hashed = ""

mapping.setdefault(entity_type, {})[text] = hashed

return hashed

def validate(self, params: Dict = None) -> None:

if "entity_mapping" not in params:

raise ValueError("You must pass an 'entity_mapping' dictionary.")

def operator_name(self) -> str:

return "reanonymizer"

def operator_type(self) -> OperatorType:

return OperatorType.Anonymize Select PAN and Aadhaar

We define two dedicated PATTERNRECOGNIZERS based on Regex-one for the Indian frying pan and one for Aadhaar numbers. These PII entities will discover in your text.

from presidio_analyzer import AnalyzerEngine, PatternRecognizer, Pattern

# Define custom recognizers

pan_recognizer = PatternRecognizer(

supported_entity="IND_PAN",

name="PAN Recognizer",

patterns=[Pattern(name="pan", regex=r"\b[A-Z]{5}[0-9]{4}[A-Z]\b", score=0.8)],

supported_language="en"

)

aadhaar_recognizer = PatternRecognizer(

supported_entity="AADHAAR",

name="Aadhaar Recognizer",

patterns=[Pattern(name="aadhaar", regex=r"\b\d{4}[- ]?\d{4}[- ]?\d{4}\b", score=0.8)],

supported_language="en"

)Prepared by an unknown analyst and engines

Here we have prepared a Prasidio analyst, and we record the customized knowledge, and add the unknown services to an unknown anonymous.

from presidio_anonymizer import AnonymizerEngine, OperatorConfig

# Initialize analyzer and register custom recognizers

analyzer = AnalyzerEngine()

analyzer.registry.add_recognizer(pan_recognizer)

analyzer.registry.add_recognizer(aadhaar_recognizer)

# Initialize anonymizer and add custom operator

anonymizer = AnonymizerEngine()

anonymizer.add_anonymizer(ReAnonymizer)

# Shared mapping dictionary for consistent re-anonymization

entity_mapping = {}Analysis of texts input and identification

We analyze two separate texts that include the same Pan and Aadhaar values. The designated operator ensures that it is constantly unidentified through both inputs.

from pprint import pprint

# Example texts

text1 = "My PAN is ABCDE1234F and Aadhaar number is 1234-5678-9123."

text2 = "His Aadhaar is 1234-5678-9123 and PAN is ABCDE1234F."

# Analyze and anonymize first text

results1 = analyzer.analyze(text=text1, language="en")

anon1 = anonymizer.anonymize(

text1,

results1,

{

"DEFAULT": OperatorConfig("reanonymizer", {"entity_mapping": entity_mapping})

}

)

# Analyze and anonymize second text

results2 = analyzer.analyze(text=text2, language="en")

anon2 = anonymizer.anonymize(

text2,

results2,

{

"DEFAULT": OperatorConfig("reanonymizer", {"entity_mapping": entity_mapping})

}

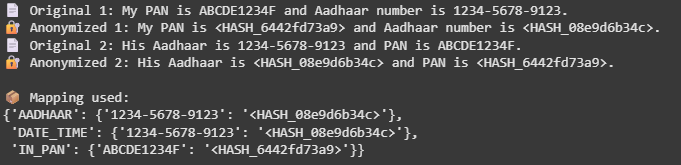

)View the results of not disclosing his identity and mapping

Finally, we print each of the unknown outputs and examine the internal used appointment to maintain consistent segmentation across values.

print("📄 Original 1:", text1)

print("🔐 Anonymized 1:", anon1.text)

print("📄 Original 2:", text2)

print("🔐 Anonymized 2:", anon2.text)

print("\n📦 Mapping used:")

pprint(entity_mapping)

verify Symbols. All the credit for this research goes to researchers in this project. Also, do not hesitate to follow us twitter And do not forget to join 100K+ ML Subreddit And subscribe to Our newsletter.

I am a graduate of civil engineering (2022) from Islamic Melia, New Delhi, and I have a strong interest in data science, especially nervous networks and their application in various fields.

Don’t miss more hot News like this! AI/" target="_blank" rel="noopener">Click here to discover the latest in AI news!

2025-06-24 09:33:00