MDM-Prime: A generalized Masked Diffusion Models (MDMs) Framework that Enables Partially Unmasked Tokens during Sampling

Introduction to MDMS and its inefficiency

MDMS models are strong tools for creating separate data, such as text or symbolic sequences, by detecting symbols over time. In each step, the symbols are either masked or not dark. However, it was noted that many steps in the reverse process do not change the sequence, which leads to frequent treatment of identical inputs and waste account. Up to 37 % of the steps may not update the sequence at all. This is highlighting the main restrictions in the current MDMS, which leads to the development of the most efficient samples methods that reduce the steps of inactivity and increase the use of each generation’s step to the maximum.

Development and improvements in MDMS

The concept of separate spread models arose from early work on bilateral data, and later expanded to practical applications such as texts and images through different noise strategies. MDMS’s recent efforts were polished by simplifying training goals and exploring alternative lunch. Improvements include mixing self -slope methods with MDMS, taking guidelines with energy -based models, selectively restoring symbols to increase the quality of the output. Other studies have focused on distillation to reduce the number of samples steps efficiently. In addition, some continuous noise methods use (for example, Gaussian) for separate data modeling; However, methods such as spreading bits are incompatible with intractable possibilities due to their dependence on quantitative measurement.

Prime submitted: Partial hideout scheme

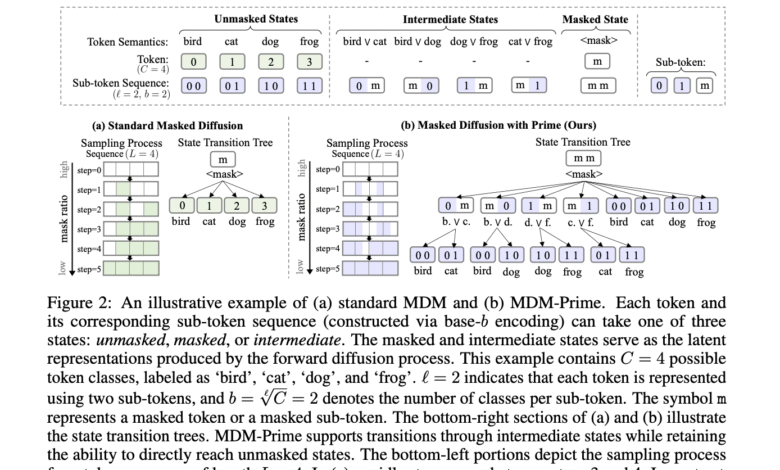

Researchers from the Institute of Nancalat, NVIDIA, and Taiwan National University provided a method called Parties to strengthen MDMS. Unlike traditional bilateral concealment, Prime allows symbols to assume intermediate situations by hiding sub -parts of the coded model of the distinctive symbol. This allows the model to gradually detect the distinctive symbol information, improve prediction quality and reduce excess account. The improved model, MDM-PRIME, achieves strong results, with a decrease in confusion in the text (15.36 on Openwebtext) and dozens of competitive FID on photo tasks (3.26 on CIFAR-10, 6.98 on imagenet-32), outperforming previous MDMS and automatic automation models without using automatic technologies.

Architecture improvements and training

MDM-PRIME is an average persuasive prevalence model that provides partial hiding at the sub-level. Instead of dealing with each symbol as one unit, it decomposes it into a series of sub -items using a reflective function. This model enables more smooth intermediate cases during the spread, which reduces the number of inactive steps. The reverse process is trained using close to these sub -tools. To address the consequences between sub -viewers and avoid invalid outputs, the model learns to distribute a shared probability with an inconsistent sequence. Architecture includes an effective design for encryption and optimal pioneer for sub -treatment.

Experimental evaluation of the tasks of text and pictures

The study evaluates MDM-PRIME on both the tasks of generating text and images. When generating the text using the Openwebtext data collection, MDM-PRIME shows significant improved improved and inexpensive step, especially when the sub-grains ℓ 4. Outing the previous methods without relying on automatic slope strategies and generalizing them well across various zero landmarks. To generate images on CIFAR-10 and Imagenet-32, it achieves MDM-PRIME with ℓ = 2 quality sample quality and lower degrees than FID compared to the foundation state, while it is more efficient. It also performs well in the tasks of generating conditional images, resulting in coherent outputs by predicting the sub -clips disabled from the images that are partially observed.

Conclusion and wider effects

In conclusion, scientific understanding from the width of atoms as the smallest units of matter has evolved into recognition of the most fundamental molecules, as it is clear from discoveries such as electron and the standard model. Likewise, in obstetric modeling, the study provides Prime, a method of crashing the distinctive symbols of separate data into more accurate sub -components. Built on MDMS, Prime improves efficiency by allowing recipes to be present in intermediate situations, and avoid frequent account on the inputs that have not changed. This allows detailed and expressive modeling. Their approach excels over the previous methods in both the text (with a confusion 15.36) and the generation of images (achieving the FID competitive degrees), providing a powerful tool to generate accurate data.

verify Paper, project page and Japemb. All the credit for this research goes to researchers in this project. Also, do not hesitate to follow us twitter And do not forget to join 100K+ ML Subreddit And subscribe to Our newsletter.

SANA Hassan, consultant coach at Marktechpost and a double -class student in Iit Madras, is excited to apply technology and AI to face challenges in the real world. With great interest in solving practical problems, it brings a new perspective to the intersection of artificial intelligence and real life solutions.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-06-30 07:23:00