Building Advanced Multi-Agent AI Workflows by Leveraging AutoGen and Semantic Kernel

In this tutorial, we walk you through the seamless integration of AutoGen and Semantic Kernel with Google's Gemini Flash model. We begin by setting up the GeminiWrapper and SemanticKernelGeminiPlugin classes to bridge Gemini's generative power with AutoGen's multi-agent orchestration. From there, we configure specialist agents, ranging from code reviewers to creative analysts. These agents show how we can use AutoGen's ConversableAgent API alongside Semantic Kernel's decorated functions for text analysis, summarization, code review, and creative problem-solving. By combining AutoGen's robust agent framework with Semantic Kernel's function-driven approach, we create an advanced AI assistant that adapts to a variety of tasks and delivers structured, actionable insights.

```python
!pip install pyautogen semantic-kernel google-generativeai python-dotenv

import os
import asyncio
from typing import Dict, Any, List

import autogen
import google.generativeai as genai
from semantic_kernel import Kernel
from semantic_kernel.functions import KernelArguments
from semantic_kernel.functions.kernel_function_decorator import kernel_function
```

We start by installing the core dependencies: pyautogen, semantic-kernel, google-generativeai, and python-dotenv, making sure we have every library needed for our multi-agent and semantic-function setup. Then we import the essential Python modules (os, asyncio, typing) along with autogen for agent orchestration, genai for access to the Gemini API, and the Semantic Kernel classes and decorator we use to define our AI functions.
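If you want to confirm that the install step worked before running the rest of the notebook, the standard library's importlib.metadata can report which of these distributions are present. This is a minimal sketch of our own, not part of the original tutorial; the helper name installed_versions is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names taken from the pip install line above.
REQUIRED = ["pyautogen", "semantic-kernel", "google-generativeai", "python-dotenv"]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```

Any entry reported as NOT INSTALLED points to a failed or skipped install rather than a problem in the agent code.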

```python
GEMINI_API_KEY = "Use Your API Key Here"

genai.configure(api_key=GEMINI_API_KEY)

config_list = [
    {
        "model": "gemini-1.5-flash",
        "api_key": GEMINI_API_KEY,
        "api_type": "google",
        "api_base": "https://generativelanguage.googleapis.com/v1beta",
    }
]
```

We define our placeholder GEMINI_API_KEY and immediately configure the genai client so that all subsequent Gemini calls are authenticated. Then we build a config_list in AutoGen's config-list format containing the Gemini Flash settings: the model name, the API key, the endpoint type, and the base URL. The agents defined later use an equivalent inline configuration for their LLM calls.
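Hard-coding the key is convenient in a throwaway notebook but easy to leak. Since python-dotenv is already in the install list, one option is to read the key from the environment or a local .env file instead. The sketch below is an assumption about how you might do that; load_gemini_key is a hypothetical helper, and the placeholder string matches the one used above.

```python
import os


def load_gemini_key(env_var="GEMINI_API_KEY", placeholder="Use Your API Key Here"):
    """Return the Gemini API key from the environment (or a .env file), never the placeholder."""
    try:
        # python-dotenv copies entries from ./.env into os.environ;
        # existing environment variables are not overridden by default.
        from dotenv import load_dotenv
        load_dotenv(".env")
    except ImportError:
        pass  # fall back to variables already set in the environment
    key = os.getenv(env_var, "").strip()
    if not key or key == placeholder:
        raise RuntimeError(f"Set {env_var} in your environment or a .env file")
    return key
```

With this helper, the configuration line becomes `genai.configure(api_key=load_gemini_key())`, and a missing key fails loudly at startup instead of surfacing later as an API error.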

```python
class GeminiWrapper:
    """Wrapper for Gemini API to work with AutoGen"""

    def __init__(self, model_name="gemini-1.5-flash"):
        self.model = genai.GenerativeModel(model_name)

    def generate_response(self, prompt: str, temperature: float = 0.7) -> str:
        """Generate response using Gemini"""
        try:
            response = self.model.generate_content(
                prompt,
                generation_config=genai.types.GenerationConfig(
                    temperature=temperature,
                    max_output_tokens=2048,
                ),
            )
            return response.text
        except Exception as e:
            # Return the error as text so callers (and agents) never crash on an API failure
            return f"Gemini API Error: {str(e)}"
```

We wrap all Gemini Flash interactions in the GeminiWrapper class, where we initialize a GenerativeModel for our chosen model and expose a simple generate_response method. In this method, we pass the prompt and temperature to Gemini's generate_content API (capped at 2,048 output tokens) and return either the raw response text or a formatted error message.
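Because GeminiWrapper builds its GenerativeModel internally, every call needs network access and a valid key. One way to exercise its error-handling contract offline is to inject the model instead. The sketch below is our own variation, not the tutorial's class; StubModel and InjectableGeminiWrapper are hypothetical names, and the stub only mimics the `.text` attribute of a real Gemini response.

```python
from types import SimpleNamespace


class StubModel:
    """Hypothetical stand-in for genai.GenerativeModel: echoes the prompt or raises."""

    def __init__(self, fail=False):
        self.fail = fail
        self.calls = []  # records (prompt, generation_config) for inspection

    def generate_content(self, prompt, generation_config=None):
        self.calls.append((prompt, generation_config))
        if self.fail:
            raise RuntimeError("quota exceeded")
        return SimpleNamespace(text=f"echo: {prompt}")


class InjectableGeminiWrapper:
    """Same error-handling contract as GeminiWrapper, but the model is passed in."""

    def __init__(self, model):
        self.model = model

    def generate_response(self, prompt, temperature=0.7):
        # A plain dict keeps this sketch free of the genai import.
        config = {"temperature": temperature, "max_output_tokens": 2048}
        try:
            return self.model.generate_content(prompt, generation_config=config).text
        except Exception as e:
            return f"Gemini API Error: {e}"
```

In real use you would pass `genai.GenerativeModel("gemini-1.5-flash")` as the model; in tests, the stub lets you check both the success path and the error string without any API traffic.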

````python
class SemanticKernelGeminiPlugin:
    """Semantic Kernel plugin using Gemini Flash for advanced AI operations"""

    def __init__(self):
        self.kernel = Kernel()
        self.gemini = GeminiWrapper()

    @kernel_function(name="analyze_text", description="Analyze text for sentiment and key insights")
    def analyze_text(self, text: str) -> str:
        """Analyze text using Gemini Flash"""
        prompt = f"""
        Analyze the following text comprehensively:

        Text: {text}

        Provide analysis in this format:
        - Sentiment: [positive/negative/neutral with confidence]
        - Key Themes: [main topics and concepts]
        - Insights: [important observations and patterns]
        - Recommendations: [actionable next steps]
        - Tone: [formal/informal/technical/emotional]
        """
        return self.gemini.generate_response(prompt, temperature=0.3)

    @kernel_function(name="generate_summary", description="Generate comprehensive summary")
    def generate_summary(self, content: str) -> str:
        """Generate summary using Gemini's advanced capabilities"""
        prompt = f"""
        Create a comprehensive summary of the following content:

        Content: {content}

        Provide:
        1. Executive Summary (2-3 sentences)
        2. Key Points (bullet format)
        3. Important Details
        4. Conclusion/Implications
        """
        return self.gemini.generate_response(prompt, temperature=0.4)

    @kernel_function(name="code_analysis", description="Analyze code for quality and suggestions")
    def code_analysis(self, code: str) -> str:
        """Analyze code using Gemini's code understanding"""
        prompt = f"""
        Analyze this code comprehensively:

        ```
        {code}
        ```

        Provide analysis covering:
        - Code Quality: [readability, structure, best practices]
        - Performance: [efficiency, optimization opportunities]
        - Security: [potential vulnerabilities, security best practices]
        - Maintainability: [documentation, modularity, extensibility]
        - Suggestions: [specific improvements with examples]
        """
        return self.gemini.generate_response(prompt, temperature=0.2)

    @kernel_function(name="creative_solution", description="Generate creative solutions to problems")
    def creative_solution(self, problem: str) -> str:
        """Generate creative solutions using Gemini's creative capabilities"""
        prompt = f"""
        Problem: {problem}

        Generate creative solutions:
        1. Conventional Approaches (2-3 standard solutions)
        2. Innovative Ideas (3-4 creative alternatives)
        3. Hybrid Solutions (combining different approaches)
        4. Implementation Strategy (practical steps)
        5. Potential Challenges and Mitigation
        """
        return self.gemini.generate_response(prompt, temperature=0.8)
````

We encapsulate our Semantic Kernel logic in the SemanticKernelGeminiPlugin, where we initialize a Kernel alongside our GeminiWrapper to power custom AI functions. Using the @kernel_function decorator, we declare methods such as analyze_text, generate_summary, code_analysis, and creative_solution. Each one builds a structured prompt and delegates the heavy lifting to Gemini Flash, with a temperature tuned to the task (low for code review, high for creative ideas). This plugin lets us package advanced AI operations as Semantic Kernel functions and invoke them seamlessly from the rest of our code.
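Conceptually, @kernel_function attaches a name and description to each method so the kernel can discover and describe it, for example when the plugin object is registered with kernel.add_plugin. The pure-Python sketch below illustrates that discovery idea in isolation. It is a simplified analogy written for illustration, not Semantic Kernel's actual implementation, and described, collect_functions, and ToyPlugin are hypothetical names.

```python
def described(name, description):
    """Hypothetical decorator that records metadata on a function, loosely like @kernel_function."""
    def wrap(fn):
        fn.fn_name = name
        fn.fn_description = description
        return fn
    return wrap


def collect_functions(plugin):
    """Return {name: (description, bound_method)} for every decorated method on a plugin object."""
    registry = {}
    for attr in dir(plugin):
        member = getattr(plugin, attr)
        # Bound methods forward attribute lookups to the underlying function.
        if callable(member) and hasattr(member, "fn_name"):
            registry[member.fn_name] = (member.fn_description, member)
    return registry


class ToyPlugin:
    @described("shout", "Upper-case the input text")
    def shout(self, text: str) -> str:
        return text.upper()

    def helper(self):  # undecorated, so it is not registered
        return None


funcs = collect_functions(ToyPlugin())
```

The point of the pattern is that the metadata lives on the functions themselves, so a host (here collect_functions, in the tutorial the Kernel) can build a catalog of callable operations, with descriptions an LLM could read, without a hand-maintained list.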

```python
class AdvancedGeminiAgent:
    """Advanced AI Agent using Gemini Flash with AutoGen and Semantic Kernel"""

    def __init__(self):
        self.sk_plugin = SemanticKernelGeminiPlugin()
        self.gemini = GeminiWrapper()
        self.setup_agents()

    def setup_agents(self):
        """Initialize AutoGen agents with Gemini Flash"""
        gemini_config = {
            # "api_type": "google" routes calls to AutoGen's Gemini client
            # instead of the default OpenAI client.
            "config_list": [
                {"model": "gemini-1.5-flash", "api_key": GEMINI_API_KEY, "api_type": "google"}
            ],
            "temperature": 0.7,
        }

        self.assistant = autogen.ConversableAgent(
            name="GeminiAssistant",
            llm_config=gemini_config,
            system_message="""You are an advanced AI assistant powered by Gemini Flash with Semantic Kernel capabilities.
            You excel at analysis, problem-solving, and creative thinking. Always provide comprehensive, actionable insights.
            Use structured responses and consider multiple perspectives.""",
            human_input_mode="NEVER",
        )

        self.code_reviewer = autogen.ConversableAgent(
            name="GeminiCodeReviewer",
            llm_config={**gemini_config, "temperature": 0.3},
            system_message="""You are a senior code reviewer powered by Gemini Flash.
            Analyze code for best practices, security, performance, and maintainability.
            Provide specific, actionable feedback with examples.""",
            human_input_mode="NEVER",
        )

        self.creative_analyst = autogen.ConversableAgent(
            name="GeminiCreativeAnalyst",
            llm_config={**gemini_config, "temperature": 0.8},
            system_message="""You are a creative problem solver and innovation expert powered by Gemini Flash.
            Generate innovative solutions and provide fresh perspectives.
            Balance creativity with practicality.""",
            human_input_mode="NEVER",
        )

        self.data_specialist = autogen.ConversableAgent(
            name="GeminiDataSpecialist",
            llm_config={**gemini_config, "temperature": 0.4},
            system_message="""You are a data analysis expert powered by Gemini Flash.
            Provide evidence-based recommendations and statistical perspectives.""",
            human_input_mode="NEVER",
        )

        self.user_proxy = autogen.ConversableAgent(
            name="UserProxy",
            human_input_mode="NEVER",
            max_consecutive_auto_reply=2,
            is_termination_msg=lambda x: x.get("content", "").rstrip().endswith("TERMINATE"),
            llm_config=False,
        )

    def analyze_with_semantic_kernel(self, content: str, analysis_type: str) -> str:
        """Bridge function between AutoGen and Semantic Kernel with Gemini"""
        try:
            if analysis_type == "text":
                return self.sk_plugin.analyze_text(content)
            elif analysis_type == "code":
                return self.sk_plugin.code_analysis(content)
            elif analysis_type == "summary":
                return self.sk_plugin.generate_summary(content)
            elif analysis_type == "creative":
                return self.sk_plugin.creative_solution(content)
            else:
                return "Invalid analysis type. Use 'text', 'code', 'summary', or 'creative'."
        except Exception as e:
            return f"Semantic Kernel Analysis Error: {str(e)}"

    def multi_agent_collaboration(self, task: str) -> Dict[str, str]:
        """Orchestrate multi-agent collaboration using Gemini"""
        results = {}
        agents = {
            "assistant": (self.assistant, "comprehensive analysis"),
            "code_reviewer": (self.code_reviewer, "code review perspective"),
            "creative_analyst": (self.creative_analyst, "creative solutions"),
            "data_specialist": (self.data_specialist, "data-driven insights"),
        }

        for agent_name, (agent, perspective) in agents.items():
            try:
                prompt = f"Task: {task}\n\nProvide your {perspective} on this task."
                response = agent.generate_reply([{"role": "user", "content": prompt}])
                # generate_reply may return a str, a message dict, or None
                results[agent_name] = response if isinstance(response, str) else str(response)
            except Exception as e:
                results[agent_name] = f"Agent {agent_name} error: {str(e)}"

        return results

    def run_comprehensive_analysis(self, query: str) -> Dict[str, Any]:
        """Run comprehensive analysis using all Gemini-powered capabilities"""
        results = {}

        analyses = ["text", "summary", "creative"]
        for analysis_type in analyses:
            try:
                results[f"sk_{analysis_type}"] = self.analyze_with_semantic_kernel(query, analysis_type)
            except Exception as e:
                results[f"sk_{analysis_type}"] = f"Error: {str(e)}"

        try:
            results["multi_agent"] = self.multi_agent_collaboration(query)
        except Exception as e:
            results["multi_agent"] = f"Multi-agent error: {str(e)}"

        try:
            results["direct_gemini"] = self.gemini.generate_response(
                f"Provide a comprehensive analysis of: {query}", temperature=0.6
            )
        except Exception as e:
            results["direct_gemini"] = f"Direct Gemini error: {str(e)}"

        return results
```

We bring everything together in the AdvancedGeminiAgent class, where we initialize our Semantic Kernel plugin and Gemini wrapper and configure a suite of specialist AutoGen agents (assistant, code reviewer, creative analyst, data specialist, and user proxy). With simple methods for bridging to Semantic Kernel, orchestrating multi-agent collaboration, and calling Gemini directly, we enable a comprehensive analysis pipeline for any user query.
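multi_agent_collaboration queries the four agents one after another, so total latency is roughly the sum of their response times. Because the calls are independent, they could instead be issued concurrently. The sketch below shows one way to do that with a thread pool while keeping the same per-agent error isolation. EchoAgent and fan_out are hypothetical, and we assume (without having verified it for every AutoGen version) that calling generate_reply on separate agents from worker threads is safe; keep max_workers small to stay within Gemini rate limits.

```python
from concurrent.futures import ThreadPoolExecutor


class EchoAgent:
    """Hypothetical stand-in for an AutoGen agent exposing generate_reply(messages)."""

    def __init__(self, name, fail=False):
        self.name, self.fail = name, fail

    def generate_reply(self, messages):
        if self.fail:
            raise ValueError("model unavailable")
        return f"{self.name} saw: {messages[-1]['content']}"


def fan_out(agents, task, max_workers=4):
    """Ask every agent for its perspective in parallel; one failure never sinks the others."""

    def ask(item):
        agent_name, (agent, perspective) = item
        prompt = f"Task: {task}\n\nProvide your {perspective} on this task."
        try:
            reply = agent.generate_reply([{"role": "user", "content": prompt}])
            return agent_name, reply if isinstance(reply, str) else str(reply)
        except Exception as e:
            return agent_name, f"Agent {agent_name} error: {e}"

    # pool.map preserves input order; dict() turns the (name, reply) pairs into results
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(ask, agents.items()))
```

The returned dictionary has the same shape as the one multi_agent_collaboration produces, so the printing loop in main would not need to change.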

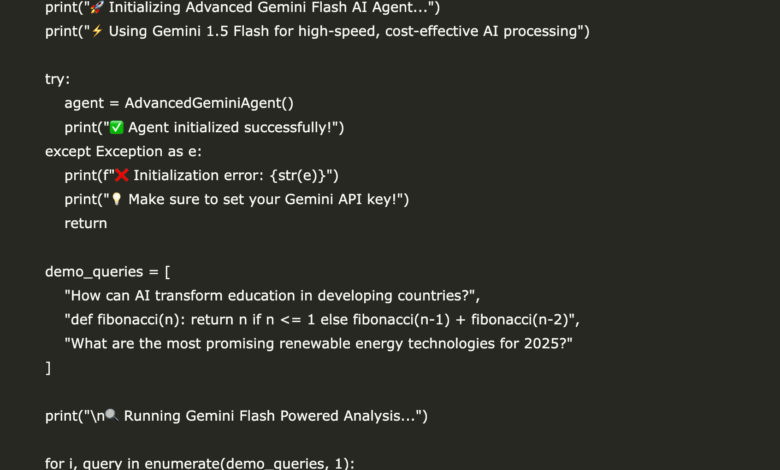

```python
def main():
    """Main execution function for Google Colab with Gemini Flash"""
    print("🚀 Initializing Advanced Gemini Flash AI Agent...")
    print("⚡ Using Gemini 1.5 Flash for high-speed, cost-effective AI processing")

    try:
        agent = AdvancedGeminiAgent()
        print("✅ Agent initialized successfully!")
    except Exception as e:
        print(f"❌ Initialization error: {str(e)}")
        print("💡 Make sure to set your Gemini API key!")
        return

    demo_queries = [
        "How can AI transform education in developing countries?",
        "def fibonacci(n): return n if n <= 1 else fibonacci(n-1) + fibonacci(n-2)",
        "What are the most promising renewable energy technologies for 2025?",
    ]

    print("\n🔍 Running Gemini Flash Powered Analysis...")

    for i, query in enumerate(demo_queries, 1):
        print(f"\n{'='*60}")
        print(f"🎯 Demo {i}: {query}")
        print('='*60)

        try:
            results = agent.run_comprehensive_analysis(query)
            for key, value in results.items():
                if key == "multi_agent" and isinstance(value, dict):
                    print(f"\n🤖 {key.upper().replace('_', ' ')}:")
                    for agent_name, response in value.items():
                        print(f"  👤 {agent_name}: {str(response)[:200]}...")
                else:
                    print(f"\n📊 {key.upper().replace('_', ' ')}:")
                    print(f"  {str(value)[:300]}...")
        except Exception as e:
            print(f"❌ Error in demo {i}: {str(e)}")

    print(f"\n{'='*60}")
    print("🎉 Gemini Flash AI Agent Demo Completed!")
    print("💡 To use with your API key, replace 'Use Your API Key Here'")
    print("🔗 Get your free Gemini API key at: https://makersuite.google.com/app/apikey")


if __name__ == "__main__":
    main()
```

Finally, we define the main function, which instantiates AdvancedGeminiAgent and iterates through a set of demo queries. For each query, we collect and print the results of the Semantic Kernel analyses, the multi-agent collaboration, and the direct Gemini response, giving a clear, step-by-step view of our multi-agent AI workflow in action.
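The demo loop appends "..." to every printed value, even when a response is shorter than the cut-off. A small helper such as the hypothetical preview below adds the ellipsis only when text was actually truncated; it is a suggestion of ours rather than part of the original script, and it could replace the inline `str(value)[:300]` and `str(response)[:200]` slices.

```python
def preview(value, limit=300):
    """Return str(value) truncated to `limit` characters, adding '...' only when text was cut."""
    text = str(value)
    if len(text) <= limit:
        return text
    # Trim trailing whitespace so the ellipsis sits right after the last visible character
    return text[:limit].rstrip() + "..."
```

For example, `print(f"  {preview(value)}")` and `print(f"  👤 {agent_name}: {preview(response, 200)}")` keep the output compact without implying that short answers were cut off.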

In conclusion, we demonstrated how AutoGen and Semantic Kernel complement each other to produce a versatile, multi-agent AI system powered by Gemini Flash. We highlighted how AutoGen simplifies the orchestration of diverse expert agents, while Semantic Kernel provides a clean, declarative layer for defining and invoking advanced AI functions. By uniting these tools in a Colab notebook, we enabled rapid experimentation and prototyping of complex AI workflows without sacrificing clarity or control.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform draws more than 2 million monthly views, reflecting its popularity with readers.


2025-07-01 01:33:00