What Is Context Engineering in AI? Techniques, Use Cases, and Why It Matters

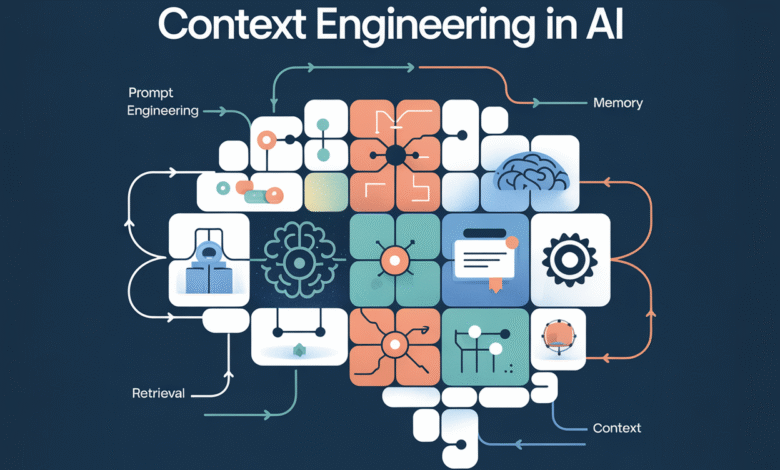

Introduction: What is context engineering?

Context engineering refers to the discipline of designing, organizing, and manipulating the context that is fed into large language models (LLMs) to optimize their performance. Rather than adjusting model weights or architectures, context engineering focuses on the input: the prompts, system instructions, retrieved knowledge, formatting, and even the ordering of information.

Context engineering is not just about writing better prompts. It is about building systems that deliver the right context, exactly when it is needed.

Imagine an AI assistant asked to write a performance review.

→ Poor context: It sees only the instruction. The result is vague, generic feedback that lacks insight.

→ Rich context: It sees the instruction plus the employee’s goals, past reviews, project outcomes, peer feedback, and manager notes. The result? A nuanced, data-backed review that feels informed and personal, because it is.
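
As a minimal sketch of that difference, the snippet below assembles both prompts for the performance-review example. The `build_prompt` helper, the section labels, and the sample employee data are all hypothetical; no particular LLM API is assumed.

```python
from typing import Optional


def build_prompt(instruction: str, context: Optional[dict] = None) -> str:
    """Join an instruction with optional labeled context sections."""
    parts = [instruction]
    for label, text in (context or {}).items():
        # Each piece of context gets its own labeled section.
        parts.append(f"## {label}\n{text}")
    return "\n\n".join(parts)


instruction = "Write a performance review for Dana."

# Poor context: the model sees only the instruction.
poor_prompt = build_prompt(instruction)

# Rich context: the same instruction plus supporting material (made-up data).
rich_prompt = build_prompt(
    instruction,
    {
        "Goals": "Ship the billing migration by Q3.",
        "Past reviews": "Strong delivery; should delegate more.",
        "Peer feedback": "Reliable reviewer, clear communicator.",
    },
)

print(rich_prompt)
```

The instruction is identical in both cases; only the material surrounding it changes, which is the part a context-engineering system is responsible for.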

This emerging practice is gaining traction because of the growing reliance on prompt-based models such as GPT-4, Claude, and Mistral. The performance of these models often depends less on their size and more on the quality of the context they receive. In this sense, context engineering is the equivalent of prompt programming for the era of intelligent agents and retrieval-augmented generation (RAG).

Why do we need context engineering?

- Token efficiency: Context windows are expanding but remain bounded (for example, 128K tokens in GPT-4-Turbo), so efficient context management is crucial. Redundant or poorly structured context wastes valuable tokens.

- Precision and relevance: LLMs are sensitive to noise. The more targeted and logically arranged the prompt, the higher the likelihood of accurate output.

- Retrieval-augmented generation (RAG): In RAG systems, external data is fetched in real time. Context engineering helps decide what to retrieve, how to chunk it, and how to present it.

- Agentic workflows: When using tools such as LangChain or OpenAgents, autonomous agents rely on context to maintain memory, track goals, and use tools. Poor context leads to planning failures or hallucinations.

- Domain-specific adaptation: Fine-tuning is expensive. Structuring better prompts or building retrieval pipelines lets models perform well on specialized tasks with zero-shot or few-shot learning.

The main techniques in context engineering

Many methodologies and practices make up the field:

1. System prompt optimization

The system prompt is the foundation. It defines the LLM’s behavior and style. Techniques include:

- Role assignment (for example, “You are a data science tutor”)

- Instructional framing (for example, “Think step by step”)

- Constraint imposition (for example, “Output only JSON”)
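
The three techniques above can be combined in one system prompt. The sketch below is illustrative only: `build_system_prompt` is a hypothetical helper, and the role/content message layout is the common chat format, assumed here rather than tied to any specific provider.

```python
import json


def build_system_prompt(role: str, framing: str, constraints: list) -> str:
    """Compose a system prompt from a role, a framing line, and constraints."""
    lines = [f"You are {role}.", framing]
    lines += [f"Constraint: {c}" for c in constraints]
    return "\n".join(lines)


system_prompt = build_system_prompt(
    role="a data science tutor",
    framing="Think step by step before answering.",
    constraints=["Output only JSON."],
)

# Chat-style message list (assumed format; adapt to the API you use).
messages = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": "What is overfitting?"},
]
print(json.dumps(messages, indent=2))
```

Keeping the role, framing, and constraints as separate arguments makes each one easy to vary and test independently.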

2. Prompt composition and chaining

LangChain popularized the use of prompt templates and chains to make prompting modular. Chaining allows a task to be split across several prompts: for example, decomposing a question, retrieving evidence, and then answering.
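
A library-free sketch of such a chain is shown below. It does not use LangChain's API; `fake_llm` and `fake_retrieve` are stand-ins invented for illustration, and a real system would replace them with a model call and a retriever.

```python
from typing import Callable, List

LLM = Callable[[str], str]
Retriever = Callable[[str], List[str]]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; echoes a truncated prompt."""
    return f"<answer to: {prompt[:40]}>"


def fake_retrieve(query: str) -> List[str]:
    """Stand-in retriever returning fixed, made-up evidence."""
    return ["Context windows are finite.", "Retrieval adds external facts."]


def answer_with_chain(question: str, llm: LLM, retrieve: Retriever) -> str:
    # Step 1: turn the question into a search query.
    query = llm(f"Rewrite as a search query: {question}")
    # Step 2: retrieve evidence for that query.
    evidence = retrieve(query)
    # Step 3: answer using the retrieved evidence as context.
    joined = "\n".join(f"- {e}" for e in evidence)
    return llm(f"Evidence:\n{joined}\n\nQuestion: {question}\nAnswer:")


print(answer_with_chain("Why does context matter?", fake_llm, fake_retrieve))
```

Each step receives only the context it needs, which is the main point of splitting one large prompt into a chain.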

3. Context compression

With limited context windows, one can:

- Use summarization models to compress previous conversation

- Embed and cluster similar content to remove redundancy

- Apply structured formats (such as tables) instead of verbose prose
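
The redundancy-removal idea can be sketched as follows. Note the substitution: instead of real embeddings and clustering, this toy version uses word-overlap (Jaccard) similarity as a stand-in, and the 0.7 threshold is an arbitrary assumption.

```python
import re


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two strings, ignoring case and punctuation."""
    wa = set(re.findall(r"\w+", a.lower()))
    wb = set(re.findall(r"\w+", b.lower()))
    union = wa | wb
    return len(wa & wb) / len(union) if union else 1.0


def drop_near_duplicates(chunks: list, threshold: float = 0.7) -> list:
    """Keep a chunk only if it is not too similar to one already kept."""
    kept = []
    for chunk in chunks:
        if all(jaccard(chunk, k) < threshold for k in kept):
            kept.append(chunk)
    return kept


chunks = [
    "The refund policy allows returns within 30 days.",
    "The refund policy allows returns within 30 days of purchase.",
    "Shipping takes 3 to 5 business days.",
]
print(drop_near_duplicates(chunks))
```

With these inputs the second chunk is dropped as a near-duplicate of the first, so fewer tokens carry the same information.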

4. Dynamic retrieval and routing

RAG pipelines (such as those in LlamaIndex and LangChain) retrieve documents from vector stores based on user intent. Advanced setups include:

- Query rephrasing or expansion before retrieval

- Multi-level routing to choose among different sources or retrievers

- Context re-ranking based on relevance and recency
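
One possible re-ranking step is sketched below. It blends the retriever's relevance score with an exponential recency decay; the `Hit` type, the 30-day half-life, and the 0.3 weighting are all assumptions chosen for illustration, not values from any library.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    text: str
    relevance: float  # similarity score from the retriever, assumed in [0, 1]
    age_days: float   # how old the document is


def rerank(hits: list, half_life_days: float = 30.0, recency_weight: float = 0.3) -> list:
    """Sort hits by a weighted blend of relevance and recency."""
    def score(hit: Hit) -> float:
        # Recency is 1.0 for a brand-new document and halves every half-life.
        recency = 0.5 ** (hit.age_days / half_life_days)
        return (1 - recency_weight) * hit.relevance + recency_weight * recency

    return sorted(hits, key=score, reverse=True)


hits = [
    Hit("2021 pricing page", relevance=0.80, age_days=900),
    Hit("Current pricing page", relevance=0.75, age_days=5),
    Hit("Office party photos", relevance=0.10, age_days=1),
]
for hit in rerank(hits):
    print(hit.text)
```

Here the slightly less similar but much fresher pricing page moves ahead of the stale one, while the fresh but irrelevant document stays last.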

5. Memory engineering

Short-term memory (what is in the prompt) and long-term memory (retrievable history) need to be aligned. Techniques include:

- Context replay (injecting relevant past interactions)

- Memory summarization

- Intent-aware memory selection
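
A minimal sketch of memory summarization follows: recent turns stay verbatim while older turns are folded into a running summary. `ConversationMemory` and `truncate_summarizer` are hypothetical names, and the summarizer is a toy that truncates text where a real system would call a model.

```python
from typing import Callable, List


class ConversationMemory:
    def __init__(self, summarize: Callable[[str], str], max_recent: int = 4):
        self.summarize = summarize
        self.max_recent = max_recent
        self.summary = ""             # long-term memory, compressed
        self.recent: List[str] = []   # short-term memory, verbatim

    def add(self, turn: str) -> None:
        self.recent.append(turn)
        while len(self.recent) > self.max_recent:
            oldest = self.recent.pop(0)
            # Fold the oldest turn into the running summary.
            self.summary = self.summarize(f"{self.summary}\n{oldest}".strip())

    def as_context(self) -> str:
        parts = []
        if self.summary:
            parts.append(f"Summary of earlier conversation:\n{self.summary}")
        parts.extend(self.recent)
        return "\n".join(parts)


def truncate_summarizer(text: str) -> str:
    """Toy summarizer: keeps the last 200 characters."""
    return text[-200:]


memory = ConversationMemory(truncate_summarizer, max_recent=2)
for turn in ["user: hi", "assistant: hello", "user: my order is late"]:
    memory.add(turn)
print(memory.as_context())
```

The prompt size stays bounded by `max_recent` plus the summary length, regardless of how long the conversation runs.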

6. Tool-augmented context

In agent-based systems, tool usage is context-aware:

- Tool description formatting

- Tool history summarization

- Observations passed between steps
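
The sketch below illustrates two of these ideas: rendering tool descriptions into the prompt and carrying only the most recent observations forward. The tool names, the description layout, and the `keep_last` cutoff are invented for illustration and do not follow any specific agent framework.

```python
import json

# Hypothetical tool registry.
TOOLS = [
    {"name": "search_docs", "description": "Search the knowledge base.", "params": {"query": "string"}},
    {"name": "get_order", "description": "Look up an order by id.", "params": {"order_id": "string"}},
]


def format_tools(tools: list) -> str:
    """Render each tool as one compact line the model can read."""
    lines = ["Available tools:"]
    for tool in tools:
        lines.append(f"- {tool['name']}({json.dumps(tool['params'])}): {tool['description']}")
    return "\n".join(lines)


def summarize_history(observations: list, keep_last: int = 2, max_chars: int = 80) -> str:
    """Keep only the most recent observations, each clipped to max_chars."""
    recent = observations[-keep_last:]
    return "\n".join(f"Observation: {o[:max_chars]}" for o in recent)


observations = [
    "search_docs returned 3 articles",
    "get_order: status=shipped",
    "get_order: eta=2 days",
]
prompt = format_tools(TOOLS) + "\n\n" + summarize_history(observations)
print(prompt)
```

Dropping or clipping old observations is a crude form of history summarization; a model-written summary could replace it where more fidelity is needed.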

Context engineering vs. prompt engineering

The two are related, but context engineering is broader and operates more at the system level. Prompt engineering usually revolves around static, handcrafted input strings. Context engineering covers building context dynamically using embeddings, memory, chaining, and retrieval. As Simon Willison noted, “Context engineering is what we do instead of fine-tuning.”

Real world applications

- Customer support agents: Feeding previous ticket summaries, customer profile data, and KB documents.

- Code assistants: Injecting repository-specific documentation, previous commits, and function usage.

- Legal document search: Context-aware querying with case history and precedents.

- Education: Personalized tutoring agents with memory of the learner’s behavior and goals.

Challenges in context engineering

Despite its promise, several pain points remain:

- Latency: Retrieval and formatting steps introduce overhead.

- Ranking quality: Poor retrieval hurts downstream generation.

- Token budgeting: Choosing what to include or exclude is non-trivial.

- Tool interoperability: Mixing tools (LangChain, LlamaIndex, custom retrievers) adds complexity.
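
To make the token-budgeting problem concrete, here is a greedy sketch that keeps the highest-priority snippets that fit. It counts tokens by whitespace splitting, which is only a rough assumption (a real tokenizer will give different counts), and the priorities and snippets are made up.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate via whitespace split; a real tokenizer will differ."""
    return len(text.split())


def pack_context(candidates: list, budget: int) -> list:
    """Greedily keep the highest-priority snippets that fit in the budget."""
    chosen, used = [], 0
    for priority, text in sorted(candidates, key=lambda c: c[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget:
            chosen.append(text)
            used += cost
    return chosen


candidates = [
    (0.9, "Customer is on the Pro plan and renews in March."),
    (0.4, "Full changelog for the last twelve releases " + "item " * 50),
    (0.7, "Last ticket: login failure resolved by password reset."),
]
print(pack_context(candidates, budget=30))
```

Greedy packing is simple but not optimal; it can skip a large, valuable snippet that a smarter selection (or a summary of that snippet) would have fit.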

Emerging best practices

- Combine structured data (JSON, tables) with unstructured text for better parsing.

- Limit each context injection to a single logical unit (for example, one document or conversation summary).

- Use metadata (timestamps, authorship) to improve sorting and scoring.

- Log, trace, and audit context injections to improve over time.
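
A small sketch of how these practices might fit together: each context unit carries metadata, units are sorted by timestamp, and every injection is logged. The field names and the `inject` helper are hypothetical, and sorting timestamps as strings assumes they all share the same ISO 8601 UTC format.

```python
import json
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("context")


def make_context_unit(text: str, source: str, author: str, timestamp: str) -> dict:
    """Wrap one logical unit of context with its metadata."""
    return {"text": text, "meta": {"source": source, "author": author, "timestamp": timestamp}}


def inject(units: list) -> str:
    """Order units newest first, log each injection, and serialize as JSON."""
    # Same-format ISO 8601 UTC timestamps sort correctly as plain strings.
    ordered = sorted(units, key=lambda u: u["meta"]["timestamp"], reverse=True)
    for unit in ordered:
        log.info("injecting context: %s", json.dumps(unit["meta"]))
    return "\n\n".join(json.dumps(u) for u in ordered)


units = [
    make_context_unit("Refund issued.", "ticket-101", "agent_a", "2025-01-10T09:00:00Z"),
    make_context_unit("Customer asked about renewal.", "ticket-205", "agent_b", "2025-06-01T12:00:00Z"),
]
context_block = inject(units)
print(context_block)
```

The log lines give an audit trail of exactly what was injected and in what order, which helps when tracing a bad answer back to its context.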

The future of context engineering

Several trends suggest that context engineering will become foundational in LLM pipelines:

- Model-aware context adaptation: Future models may dynamically request the type or format of context they need.

- Self-reflective agents: Agents that audit their context, revise their own memory, and flag possible hallucinations.

- Standardization: Similar to how JSON became a universal data interchange format, context templates may become standardized for agents and tools.

As Andrej Karpathy hinted in a recent post, “Context is the new weight update.” Instead of retraining models, we now program them through their context, which makes context engineering the dominant software interface in the LLM era.

Conclusion

Context engineering is no longer optional; it is essential to unlocking the full capabilities of modern language models. As toolkits such as LangChain and LlamaIndex mature and agentic workflows spread, mastering context construction becomes as important as choosing the model. Whether you are building a retrieval system, a coding agent, or a personalized tutor, how you structure the model’s context will increasingly define its intelligence.

Sources:

- https://x.com/tobi/status/193533422589399127

- https://x.com/karpathy/status/1937902205765607626

- https://blog.langchain.com/the-frise-f-context-enGineering/

- https://rlancemartin.github.io/2025/06/23/context_engineing/

- https://www.philschmid.de/context-engineering

- https://blog.langchain.com/context-engineering-for-agents/

- https://www.llamaindex.ai/blog/context-engineering-what-et-is-dechniques-to-consider

Asif Razzaq is the CEO of Marktechpost Media Inc. As an entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easy for a wide audience to understand. The platform receives more than 2 million monthly views.

2025-07-06 07:25:00