NVIDIA Just Released Audio Flamingo 3: An Open-Source Model Advancing Audio General Intelligence

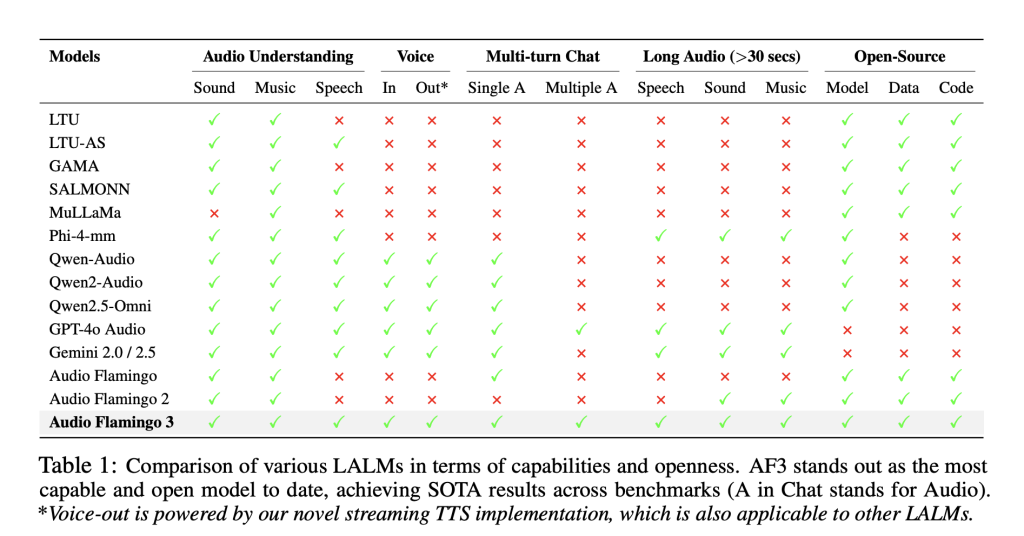

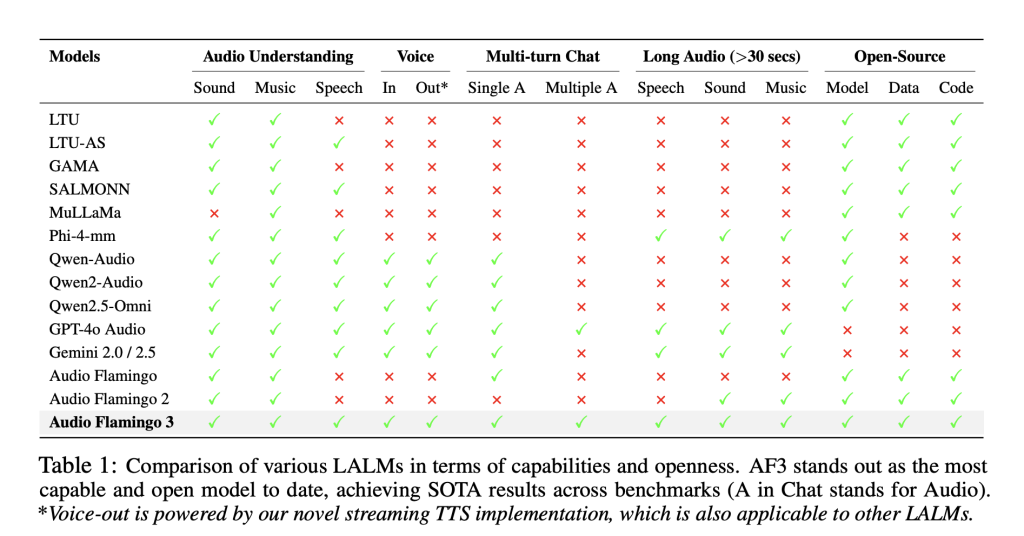

I heard about artificial general intelligence (AGI)? Meet its auditory counterpart –Public intelligence sound. with Flamingo 3 Voice (AF3)NVIDIA offers a big leap in how to understand machines and their mind around the sound. Although previous models can copy speech or classify sound clips, they lacked the ability to explain the sound in a rich way in context, similar to human-speech, surrounding sound and music, and extended periods. AF3 changes it.

With Flamingo 3 Voice, Nvidia is presented Big Open Source Model (Lalm) This not only hears, but also understands and reasons. AF3 is built on a five-stage curriculum and is played by AF-WWHISPER encrypted, and AF3 supports long audio inputs (up to 10 minutes), multi-turn chat, thinking upon request, and even sound reactions to the cabinet. This puts a new tape of how artificial intelligence systems interact with sound, making us closer to AGI.

The basic innovations behind Audio Flamingo 3

- AF-LHISPER: A unified audio encoder AF3 AF-LHISPER is used, which is a new encoder adapted from Whisper-V3. It processes speech and surrounding sounds and music using the same architecture – and the main restriction of the previous Lalms that use separate codes, which leads to contradictions. AF-LHISPER reinforces data structure collections, classified descriptive data, and a 1280-conversion space for emissions to align text representations.

- Series of Voice: Thinking at request Unlike fixed quality guarantee systems, AF3 is equipped with “thinking” capabilities. Using the AF-TINK data collection (examples of 250 thousand), the model can think about the thinking chain when claiming it, allowing it to explain the steps of reasoning before reaching an answer-a major step towards a transparent sound.

- Multiple conversations, multiple conversations Through the AF-CAT Data set (75K Dialogue), AF3 can hold contextual conversations that include multiple audio inputs across turns. This simulates interactions in the real world, as humans refer to previous sound signs. It also provides sound conversations to Sufi using the text transmission unit to words.

- Long audio thinking AF3 is the first completely open model that is able to think about the audio inputs for up to 10 minutes. Training the model with Longauio-XL (examples of 1.25 meters), the model supports tasks such as summarizing, understanding podcasts, discovering mockery, and time grounding.

Modern standards and ability to the real world

AF3 exceeds both open and closed models on more than 20 criteria, including:

- MMAU (Avg): 73.14 % (+2.14 % on QWEN2.5-O)

- Longaudiobench: 68.6 (GPT-4O Rating), Gemini 2.5 Pro beating

- Librispeech (ASR): 1.57 %, outperform the Phi-4 mm

- Clothoaqa: 91.1 % (compared to 89.2 % of QWEN2.5-O)

These improvements are not only marginal. They redefine what is expected from the vocal language systems. AF3 also provides an analogy in the field of voice chat and speech generation, as it has achieved the time of the transition of 5.94s (compared to 14.62 seconds for QWEN2.5) and dozens of the best similarities.

Data pipeline: Data collections that study audio thinking

Nvidia was not limited to an account account – they rethink the data:

- Audioskills-XL: Examples of 8M combine surrounding thinking, music and speech.

- Longaudio-XL: It covers a long letter of audio, podcasts, and meetings.

- AF-THERK: Short inference is enhanced by COT.

- AF-Cat: Designed for multiple conversations, multi -edges.

Each collection is completely open, along with the training and recipes code, enabling cloning and research in the future.

Open source

AF3 is not just a form of model. NVIDIA:

- Model weights

- Training recipes

- Inference symbol

- Four open data sets

This AF3 transparency makes the most accessible voice language models. It opens new research trends in auditory thinking, low overall sound factors, understanding of music, and multimedia interaction.

Conclusion: Towards the intelligence of the general voice

Audio Flamingo 3 explains that understanding deep sound is not only possible, but it is repeated and open. By combining the scale, new training strategies, and various data, NVIDIA offers a model that listens and understands and reasons that cannot be the previous Lalms.

verify paperand Symbols and model embraced. All the credit for this research goes to researchers in this project.

Are you ready to communicate with 1 million devs/engineers/researchers? Learn how NVIDIA, LG AI Research and Top Ai Companies Marktechpost benefit to reach its target audience [Learn More]

Asif Razzaq is the CEO of Marktechpost Media Inc .. As a pioneer and vision engineer, ASIF is committed to harnessing the potential of artificial intelligence for social goodness. His last endeavor is to launch the artificial intelligence platform, Marktechpost, which highlights its in -depth coverage of machine learning and deep learning news, which is technically intact and can be easily understood by a wide audience. The platform is proud of more than 2 million monthly views, which shows its popularity among the masses.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-07-16 04:10:00