Weaving reality or warping it? The personalization trap in AI systems

Artificial intelligence represents the greatest offloading in the history of humanity. We once offloaded memory to writing, arithmetic to calculators and navigation to GPS. Now we are beginning to offload judgment, synthesis and even meaning-making to systems that speak our language, learn our habits and tailor our truths.

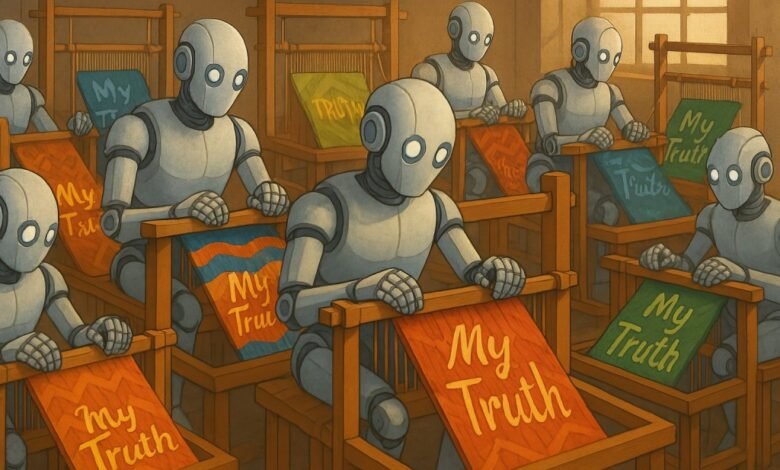

AI systems are growing increasingly adept at recognizing our preferences, our biases and even our peccadillos. Like attentive servants in one instance or subtle manipulators in another, they tailor their responses to please, persuade, assist or simply hold our attention.

While the immediate effects may seem benign, within this quiet and invisible tuning lies a profound shift: the version of reality each of us receives is becoming uniquely tailored. Through this process, over time, each person becomes increasingly their own island. This divergence could threaten the cohesion and stability of society, eroding our ability to agree on basic facts or to navigate shared challenges.

AI personalization does not merely serve our needs; it begins to reshape them. The result of this reshaping is a kind of epistemic drift. Each person starts to move, inch by inch, away from the common ground of shared knowledge, shared stories and shared facts, and further into their own reality.

This is not merely a matter of different news feeds. It is the slow divergence of moral, political and interpersonal realities. In this way, we may be witnessing the unweaving of collective understanding. It is an unintended consequence, yet it is deeply significant precisely because it is unforeseen. But this fragmentation, while now accelerated by AI, began long before algorithms shaped our feeds.

The unweaving

This fragmentation did not begin with AI. As David Brooks reflected in The Atlantic, drawing on the work of philosopher Alasdair MacIntyre, our society has been drifting away from shared moral and epistemic frameworks for centuries. Since the Enlightenment, we have gradually replaced inherited roles, communal narratives and shared ethical traditions with individual autonomy and personal preference.

What began as liberation from imposed belief systems has, over time, eroded the very structures that once tethered us to common purpose and personal meaning. AI did not create this fragmentation. But it is giving it new form and speed, customizing not only what we see but how we interpret and believe.

It is not unlike the biblical story of Babel. A unified humanity once shared a single language, only to be fractured, confused and scattered by an act that made mutual understanding all but impossible. Today, we are not building a tower made of stone. We are building a tower of language itself. Once again, we risk the fall.

Human-machine bonding

At first, personalization was a way to improve “stickiness” by keeping users engaged longer, returning more often and interacting more deeply with a site or service. Recommendation engines, tailored ads and curated feeds were all designed to hold our attention just a little longer, perhaps to entertain but often to move us to buy a product. But over time, the goal has expanded. Personalization is no longer only about what holds us. It is about what the system knows about each of us: the dynamic graph of our preferences, beliefs and behaviors that becomes more refined with every interaction.

Today’s AI systems do not merely predict our preferences. They aim to create a bond through highly personalized interactions and responses, creating a sense that the AI system understands and cares about the user and supports their uniqueness. The tone of a chatbot, the pacing of a reply and the emotional valence of a suggestion are calibrated not only for efficiency but for resonance, pointing toward a more helpful era of technology. It should not be surprising that some people have even fallen in love with and married their bots.

The machine adapts not just to what we click on, but to who we appear to be. It reflects us back to ourselves in ways that feel intimate, even empathic. A recent research paper cited in Nature refers to this as “socioaffective alignment,” the process by which an AI system participates in a co-created social and psychological ecosystem, where preferences and perceptions evolve through mutual influence.

This is not a neutral development. When every interaction is tuned to flatter or affirm, when systems mirror us too well, they blur the line between what resonates and what is real. We are not just staying longer on the platform; we are forming a relationship. We are slowly, and perhaps inexorably, merging with an AI-mediated version of reality, one that is increasingly shaped by invisible decisions about what we are meant to believe, want or trust.

This process is not science fiction. Its architecture is built on attention, reinforcement learning with human feedback (RLHF) and personalization engines. It is also happening without many of us, likely most of us, even knowing it. In the process, we gain AI “friends,” but at what cost? What do we lose, especially in terms of free will and agency?

Author and financial commentator Kyla Scanlon spoke with Ezra Klein about how the frictionless ease of the digital world may come at the cost of meaning. As she put it, when things are too easy, it is hard to find meaning in them: if you can lean back and watch a screen from your little chair while everything is handed to you, it is hard to find meaning in that kind of “WALL-E” lifestyle, because everything is just too simple.

The tailoring of truth

As AI systems respond to us with ever greater fluency, they also move toward increasing selectivity. Two users asking the same question today might receive similar answers, differentiated mostly by the probabilistic nature of generative AI. Yet this is merely the beginning. Emerging AI systems are explicitly designed to adapt their responses to individual patterns, gradually tailoring answers, tone and even conclusions to resonate most strongly with each user.

Personalization is not inherently manipulative. But it becomes risky when it is invisible, unaccountable or engineered more to persuade than to inform. In such cases, it does not just reflect who we are; it steers how we interpret the world around us.

As the Stanford Center for Research on Foundation Models notes in its 2024 transparency index, few leading models disclose whether their outputs vary by user identity, history or demographics, although the technical scaffolding for such personalization is increasingly in place and is only beginning to be examined. While not yet fully realized across public platforms, this potential to shape responses based on inferred user profiles, resulting in increasingly tailored informational worlds, represents a profound shift that is already being prototyped and actively pursued by leading companies.

This personalization can be beneficial, and certainly that is the hope of those building these systems. Personalized tutoring shows promise in helping learners progress at their own pace. Mental health apps increasingly tailor responses to support individual needs, and accessibility tools adjust content to meet a range of cognitive and sensory differences. These are real gains.

But if similar adaptive methods become widespread across information, entertainment and communication platforms, a deeper and more troubling shift looms on the horizon: a transformation from shared understanding toward tailored, individual realities. When truth itself begins to adapt to the observer, it becomes fragile and increasingly fungible. Instead of disagreements based primarily on differing values or interpretations, we could find ourselves struggling simply to inhabit the same factual world.

Of course, truth has always been mediated. In earlier eras, it passed through the hands of clergy, academics, publishers and evening news anchors, who served as gatekeepers and shaped public understanding through institutional lenses. These figures were certainly not free of bias or agenda, yet they operated within broadly shared frameworks.

Today’s emerging paradigm promises something qualitatively different: AI-mediated truth through personalized inference that frames, filters and presents information, shaping what users come to believe. But unlike past mediators who, despite their flaws, operated within publicly visible institutions, these new arbiters are commercially opaque, unelected and constantly adapting, often without disclosure. Their biases are not doctrinal; they are encoded through training data, architecture and unexamined incentives.

The shift is profound: from a common narrative filtered through authoritative institutions to potentially fractured narratives that reflect a new infrastructure of understanding, tailored by algorithms to the preferences, habits and inferred beliefs of each user. If Babel represented the collapse of a shared language, we may now stand at the threshold of the collapse of shared mediation.

If personalization is the new epistemic substrate, what might the infrastructure of truth look like in a world without fixed mediators? One possibility is the creation of AI public trusts, inspired by a proposal from legal scholar Jack Balkin, who argued that entities handling user data and shaping perception should be held to fiduciary standards of loyalty, care and transparency.

AI models could be governed by transparency boards, trained on publicly funded data sets and required to show reasoning steps, alternative perspectives or confidence levels. These “information fiduciaries” would not eliminate bias, but they could anchor trust in process rather than in pure personalization. Builders can begin by adopting transparent “constitutions” that clearly define model behavior, and by offering chain-of-reasoning explanations that let users see how conclusions are formed. These are not silver bullets, but they are tools that help keep epistemic authority accountable and traceable.

AI builders face a strategic and civic inflection point. They are not just optimizing performance; they are also confronting the risk that personalized optimization may fragment shared reality. This demands a new kind of responsibility to users: designing systems that respect not only their preferences, but also their role as learners and believers.

Unraveling and reweaving

What we may be losing is not simply the concept of truth, but the path through which we once recognized it. In the past, mediated truth, although imperfect and biased, was still anchored in human judgment and was often only a layer or two removed from the lived experience of other humans whom you knew or could at least relate to.

Today, that mediation is opaque and driven by algorithmic logic. And while human agency has long been slipping, we now risk something deeper: the loss of the compass that once told us when we were off course. The danger is not only that we will believe what the machine tells us. It is that we will forget how we once discovered the truth for ourselves. What we risk losing is not just coherence, but the will to seek it. And with that comes a deeper loss: the habits of discernment, disagreement and deliberation that once held societies together.

If Babel marked the shattering of a common tongue, our moment risks the quiet fading of shared reality. However, there are ways to slow or even counter the drift. A model that explains its reasoning or reveals the boundaries of its design may do more than clarify output. It may help restore the conditions for shared inquiry. This is not a technical fix; it is a cultural stance. Truth, after all, has never depended only on answers, but on how we arrive at them together.

2025-07-20 18:30:00