OpenAI Just Released the Hottest Open-Weight LLMs: gpt-oss-120B (Runs on a High-End Laptop) and gpt-oss-20B (Runs on a Phone)

OpenAI has just sent seismic waves through the world of artificial intelligence: for the first time since GPT-2 arrived in 2019, the company is releasing open-weight language models. Meet gpt-oss-120B and gpt-oss-20B, models that anyone can download, inspect, fine-tune, and run on their own hardware. This launch does not just shift the AI landscape; it opens a new era of transparency, customization, and raw computational power for researchers, developers, and enthusiasts everywhere.

Why is this release a big deal?

OpenAI has built a reputation for both jaw-dropping model capabilities and a fortress-like approach to proprietary technology. That changed on August 5, 2025. The new models are distributed under the permissive Apache 2.0 license, which opens them to commercial and experimental use. The difference? Instead of hiding behind cloud APIs, OpenAI models can now be put under a microscope by anyone, or put directly to work on problems at the edge, in the enterprise, or even on consumer devices.

Meet the models: technical marvels with real-world muscle

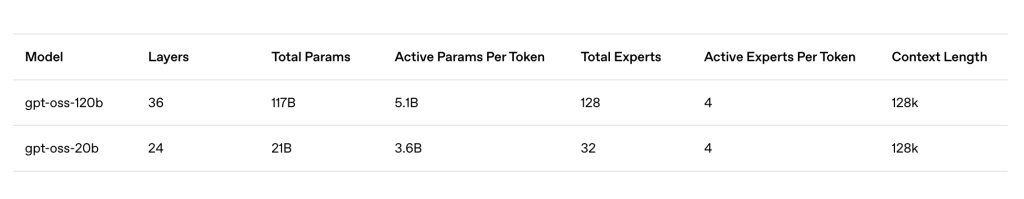

gpt-oss-120B

- Size: 117 billion parameters (with 5.1 billion active parameters per token, thanks to Mixture-of-Experts technology)

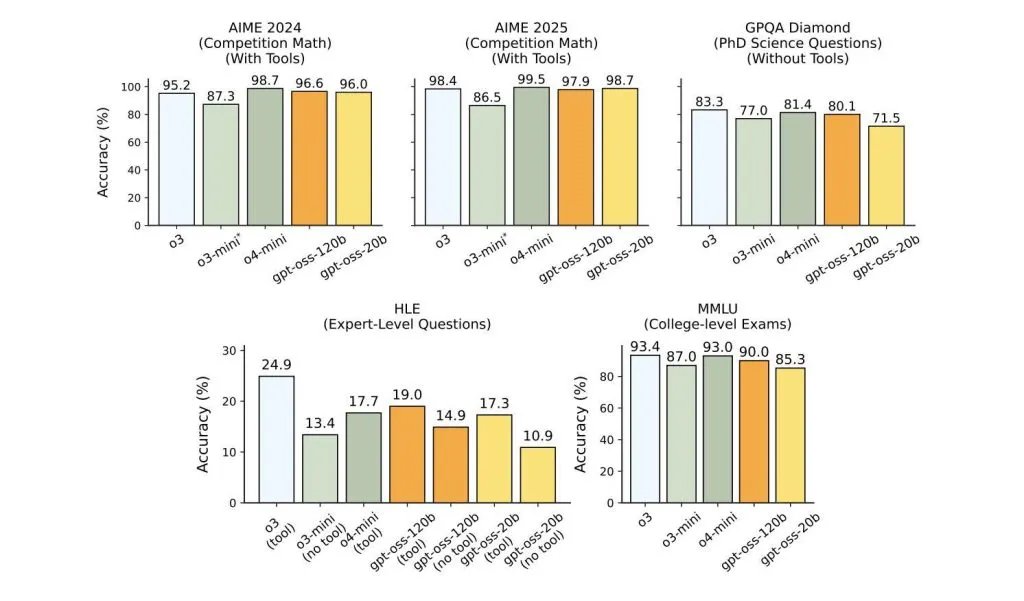

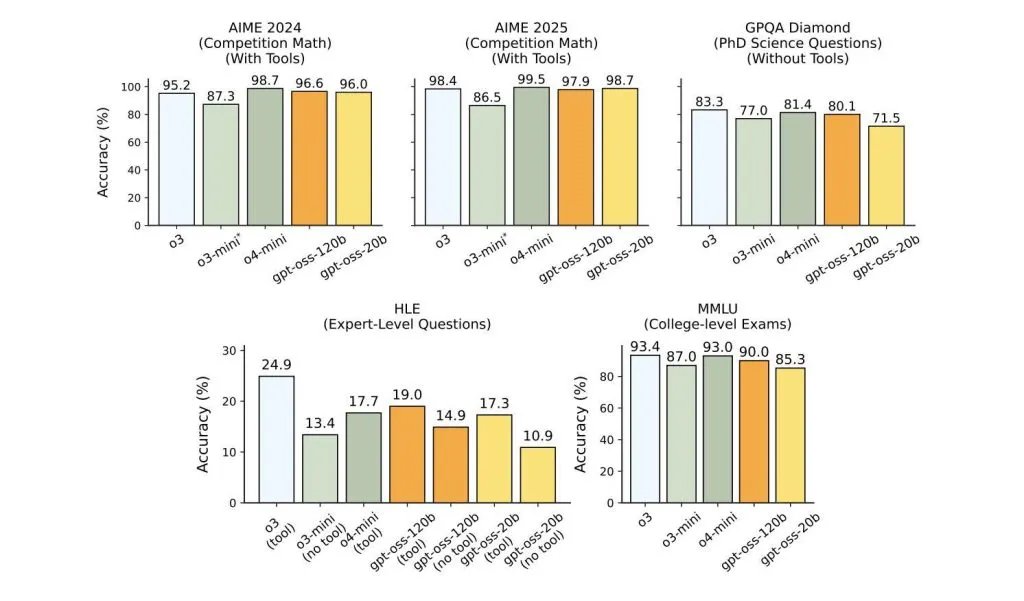

- Performance: Punches at the level of OpenAI’s o4-mini (or better) on real-world benchmarks.

- Hardware: Runs on a single high-end GPU: think Nvidia H100 or another 80 GB-class card. No server farm required.

- Reasoning: Features chain-of-thought and agentic capabilities, well suited to research automation, technical writing, code generation, and more.

- Customization: Supports configurable “reasoning effort” (low, medium, high), so you can dial up power when needed or save resources when you do not.

- Context: Handles up to 128,000 tokens, enough text to read entire books at once.

- Fine-tuning: Built for easy customization and local/private inference: no rate limits, full data privacy, and total deployment control.
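As a rough illustration of the low/medium/high setting listed above, the sketch below builds a chat-style message list that requests a given effort level. It assumes the level is selected with a `Reasoning: <level>` line in the system message, which is how the published model card describes it; treat that convention as an assumption and check the model card before relying on it. `build_messages` is a hypothetical helper, not part of any library.

```python
# Minimal sketch: build a chat message list that requests a reasoning effort level.
# ASSUMPTION: the level is set via a "Reasoning: <level>" system message.
# build_messages is a hypothetical helper introduced for illustration only.

VALID_EFFORTS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> list:
    """Return a chat message list asking for the given reasoning effort."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages("Summarize the trade-offs of MoE models.", effort="high")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

A list like this could then be handed to whatever local runtime serves the model; the runtime call itself is left out because it depends on the tooling you choose.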

gpt-oss-20B

- Size: 21 billion parameters (with 3.6 billion active parameters per token, also Mixture-of-Experts).

- Performance: Sits squarely between o3-mini and o4-mini on reasoning tasks, on par with the best “small” models available.

- Hardware: Runs on consumer-grade laptops: with just 16 GB of RAM, it is the most powerful open-weight reasoning model you can fit on a phone or local PC.

- Mobile ready: Specifically optimized to deliver low-latency, on-device AI for smartphones (including Qualcomm Snapdragon support), edge devices, and any scenario that needs local inference.

- Agentic powers: Like its big sibling, 20B can use APIs, generate structured outputs, and execute Python code on demand.

Technical details: Mixture-of-Experts and MXFP4 quantization

Both models use a Mixture-of-Experts (MoE) architecture, activating only a handful of “expert” subnetworks for each token. The result? Enormous parameter counts with modest per-token compute and lightning-fast inference, ideal for today’s high-performance consumer and enterprise hardware.
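To make the routing idea concrete, here is a toy top-k router in plain Python. It is a sketch under stated assumptions, not the gpt-oss implementation: the article does not describe the real router, so the four scalar "experts", the gate scores, `k=2`, and the choice to softmax over only the selected experts are all illustrative. The last helper simply divides the active parameter count by the total, using the figures quoted in this article.

```python
import math

# Toy Mixture-of-Experts routing sketch (illustrative only).
# ASSUMPTIONS: expert count, k, and softmax-over-selected-experts are
# hypothetical choices; the real gpt-oss router is not described here.


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, gate_logits, k):
    """Evaluate only the selected experts and blend their outputs."""
    return sum(weight * experts[idx](x) for idx, weight in route_top_k(gate_logits, k))


def active_fraction(active_billion, total_billion):
    """Share of parameters touched per token."""
    return active_billion / total_billion


# Four toy experts that just scale their input by 1, 2, 3, and 4.
experts = [lambda x, scale=scale: scale * x for scale in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

print(route_top_k(gate_logits, k=2))
print(moe_forward(1.0, experts, gate_logits, k=2))
print(f"120B active share: {active_fraction(5.1, 117):.1%}")
print(f"20B active share:  {active_fraction(3.6, 21):.1%}")
```

Only two of the four experts run for this token, which is the source of the compute savings: by the article's numbers, roughly 4% of the 120B model's parameters and about 17% of the 20B model's are active per token.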

Add to that native MXFP4 quantization, which shrinks the models’ memory footprint without sacrificing accuracy. The 120B model fits comfortably on a single high-end GPU; the 20B model runs comfortably on laptops, desktops, and even mobile hardware.
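A back-of-the-envelope calculation shows why these hardware claims are plausible. In the MXFP4 block format, each weight is a 4-bit value and every block of 32 weights shares one 8-bit scale, which works out to 4.25 bits per weight. The sketch below applies that rate to the parameter counts quoted in this article. It assumes every parameter is stored in MXFP4 and counts weights only; in practice some tensors may be kept at higher precision, and activations and the KV cache need additional memory, so real usage will be higher.

```python
# Rough weight-memory estimate for MXFP4-quantized models (weights only).
# ASSUMPTION: all parameters are stored in MXFP4, a deliberate simplification.

ELEMENT_BITS = 4   # each weight is a 4-bit floating-point value
SCALE_BITS = 8     # one shared 8-bit scale per block
BLOCK_SIZE = 32    # weights per block


def bits_per_param():
    """Effective storage cost per weight, including the shared scale."""
    return ELEMENT_BITS + SCALE_BITS / BLOCK_SIZE


def weight_footprint_gb(n_params):
    """Approximate weight storage in decimal gigabytes."""
    return n_params * bits_per_param() / 8 / 1e9


for name, n_params, budget_gb in (
    ("gpt-oss-120B", 117e9, 80),
    ("gpt-oss-20B", 21e9, 16),
):
    size = weight_footprint_gb(n_params)
    print(f"{name}: ~{size:.1f} GB of weights against a {budget_gb} GB budget")
```

Under these assumptions the weights come to roughly 62 GB and 11 GB, which is consistent with the 80 GB GPU and 16 GB RAM figures given earlier.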

Real-world impact: tools for enterprises, developers, and hobbyists

- For enterprises: On-premises deployment for data privacy and compliance. No more black-box cloud AI: financial, healthcare, and legal organizations can now own and secure every part of their LLM workflow.

- For developers: Freedom to tinker, fine-tune, and extend. No API limits, no SaaS bills, just pure, customizable AI with full control over latency and cost.

- For the community: The models are already available on Hugging Face, Ollama, and more, so you can go from download to deployment in minutes.

How does gpt-oss stack up?

Here is the kicker: gpt-oss-120B is the first freely available open-weight model that matches the performance of top-tier commercial models such as o4-mini. The 20B variant not only bridges the performance gap for on-device AI, but is also likely to accelerate innovation and push the limits of what is possible with local LLMs.

The future is open (again)

OpenAI’s gpt-oss is not just a release; it is a clarion call. By making state-of-the-art reasoning, tool use, and agentic capabilities available for anyone to inspect and deploy, OpenAI throws open the door for an entire community of makers, researchers, and enterprises, not only to use these models but to build on, iterate on, and evolve them.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform draws more than 2 million monthly views.

2025-08-05 23:53:00