Inception Unveils Mercury: The First Commercial-Scale Diffusion Large Language Model

The AI and LLM landscape has taken a major leap with the launch of Mercury by the cutting-edge startup Inception Labs, which introduces the first commercial-scale diffusion large language models (dLLMs). Mercury represents a paradigm shift in speed, cost efficiency, and intelligence for text and code generation tasks.

Mercury: Setting New Standards in AI Speed and Efficiency

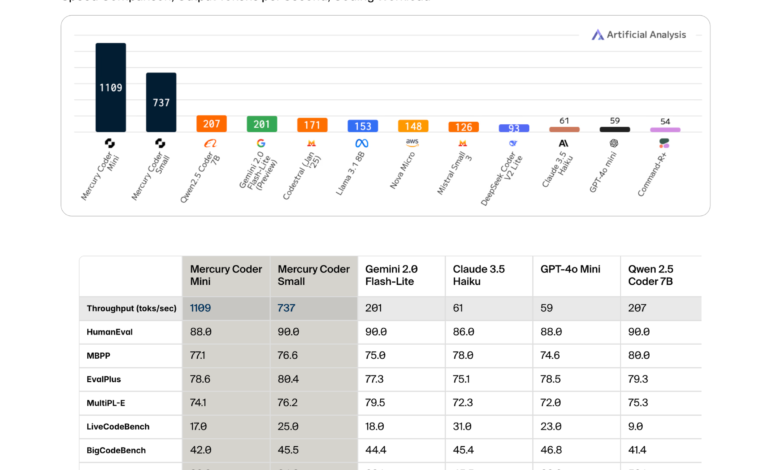

Inception's Mercury offers unprecedented performance, operating at speeds previously unattainable with traditional LLM architectures. Mercury achieves a remarkable throughput of more than 1000 tokens per second on NVIDIA H100 GPUs, a level of performance that was previously exclusive to custom-designed hardware from companies such as Groq, Cerebras, and SambaNova. This translates to a 5-10x speed increase compared to current autoregressive models.
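To make the throughput figures concrete, here is a back-of-the-envelope latency calculation. It uses only the article's numbers (about 1000 tokens per second for Mercury and a 5-10x speedup over autoregressive models, which implies baselines of roughly 100-200 tokens per second in that comparison). The 500-token completion length is a hypothetical value chosen for illustration, and the calculation assumes a steady throughput with no time-to-first-token overhead.

```python
def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Wall-clock time to emit num_tokens at a constant throughput."""
    return num_tokens / tokens_per_second


# Article figures: Mercury at ~1000 tokens/s; a 5-10x speedup over
# autoregressive models implies baselines of ~100-200 tokens/s.
MERCURY_TPS = 1000.0
COMPLETION_TOKENS = 500  # hypothetical completion length

mercury_t = generation_time_s(COMPLETION_TOKENS, MERCURY_TPS)
for speedup in (5, 10):
    baseline_t = generation_time_s(COMPLETION_TOKENS, MERCURY_TPS / speedup)
    print(f"{speedup}x: Mercury {mercury_t:.2f}s vs autoregressive {baseline_t:.2f}s")
```

Under these assumptions, a 500-token answer takes about 0.5 s with Mercury versus roughly 2.5-5 s with the slower baselines. Real latency also depends on prompt length, batching, and network overhead.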

Diffusion Models: The Future of Text Generation

Traditional LLMs are autoregressive: they generate text sequentially, one token at a time, which incurs significant latency and computational cost, especially in extensive reasoning and error-correction tasks. Diffusion models, however, use a “coarse-to-fine” generation process. Unlike autoregressive models, which are restricted to sequential generation, diffusion models iteratively refine their outputs starting from a noisy approximation, which allows many tokens to be updated in parallel. This approach significantly improves reasoning, error correction, and the overall coherence of the generated content.
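The difference in decoding structure can be illustrated with a toy sketch. The code below is not Mercury's algorithm, which the article does not describe. It assumes a generic masked-diffusion scheme: decoding starts from a fully masked sequence (the "noise") and, at each step, commits the most confident masked positions in parallel. The predictor is a hypothetical oracle standing in for a neural network, so the sketch only shows how the number of model calls differs. It does not model quality, remasking, or error correction.

```python
from typing import Callable, List, Optional, Tuple

MASK = None  # marks a position that has not been decoded yet

# Hypothetical model interface: given the partial sequence, return a
# (token, confidence) guess for every position.
Predictor = Callable[[List[Optional[str]]], List[Tuple[str, float]]]


def make_toy_predictor(target: List[str]) -> Predictor:
    """Toy oracle that always guesses the target token; confidence is
    higher next to already-decoded neighbours."""
    def predict(seq: List[Optional[str]]) -> List[Tuple[str, float]]:
        guesses = []
        for i, tok in enumerate(target):
            decoded_neighbours = sum(
                1 for j in (i - 1, i + 1)
                if 0 <= j < len(seq) and seq[j] is not MASK
            )
            guesses.append((tok, 0.5 + 0.25 * decoded_neighbours))
        return guesses
    return predict


def autoregressive_decode(predict: Predictor, n: int) -> Tuple[List[Optional[str]], int]:
    """Left to right: one model call per token."""
    seq: List[Optional[str]] = [MASK] * n
    steps = 0
    for i in range(n):
        seq[i] = predict(seq)[i][0]
        steps += 1
    return seq, steps


def diffusion_decode(predict: Predictor, n: int,
                     tokens_per_step: int) -> Tuple[List[Optional[str]], int]:
    """Start fully masked; each step commits the `tokens_per_step`
    most confident masked positions in parallel."""
    seq: List[Optional[str]] = [MASK] * n
    steps = 0
    while any(t is MASK for t in seq):
        guesses = predict(seq)
        masked = [i for i, t in enumerate(seq) if t is MASK]
        # Highest confidence first; ties broken by position.
        masked.sort(key=lambda i: (-guesses[i][1], i))
        for i in masked[:tokens_per_step]:
            seq[i] = guesses[i][0]
        steps += 1
    return seq, steps


if __name__ == "__main__":
    target = "def add ( a , b ) : return a + b".split()  # 12 tokens
    predict = make_toy_predictor(target)
    _, ar_steps = autoregressive_decode(predict, len(target))
    _, diff_steps = diffusion_decode(predict, len(target), tokens_per_step=4)
    print(ar_steps, diff_steps)
```

With 12 tokens, the autoregressive loop needs 12 model calls, while committing 4 tokens per step needs 3. A real dLLM trades a more expensive parallel step for far fewer sequential steps.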

Although diffusion approaches have proven revolutionary in image, audio, and video generation, powering applications like Midjourney and Sora, their application to discrete data domains such as text and code remained largely unexplored until Inception's breakthrough.

Mercury Coder: High-Speed, High-Quality Code Generation

Inception's flagship product, Mercury Coder, is optimized specifically for coding applications. Developers can now access a high-quality, responsive model that generates code at more than 1000 tokens per second, a significant improvement over the speed of current models.

On standard coding benchmarks, Mercury Coder not only matches but often exceeds the performance of other high-performing models such as GPT-4o Mini and Claude 3.5 Haiku. Moreover, Mercury Coder Mini secured a top-ranking position on Copilot Arena, placing second and outperforming established models such as GPT-4o Mini and Gemini-1.5-Flash. Even more impressively, Mercury achieves this while running approximately 4x faster than GPT-4o Mini.

Versatility and Integration

Mercury dLLMs work seamlessly as drop-in replacements for traditional LLMs. They support all common use cases, including retrieval-augmented generation (RAG), tool integration, and agent-based workflows. The diffusion model's parallel refinement updates multiple tokens simultaneously, enabling fast and accurate generation suitable for enterprise environments, API integration, and on-premise deployment.

Built by AI Innovators

Inception's technology is built on foundational research conducted at Stanford, UCLA, and Cornell by its pioneering founders, who are recognized for their crucial contributions to the evolution of AI. Their combined expertise includes the original development of image-based diffusion models and innovations such as Direct Preference Optimization, Flash Attention, and Decision Transformers, all widely recognized for their transformative impact on modern AI.

Inception's introduction of Mercury marks a pivotal moment for AI, unlocking previously impossible levels of performance, accuracy, and cost efficiency.



Jean-Marc is a successful AI business executive. He leads and accelerates growth for AI-powered solutions and founded a computer vision company in 2006. He is a recognized speaker at AI conferences and holds an MBA from Stanford.


2025-03-09 00:22:00