From terabytes to insights: Real-world AI observability architecture


Consider maintaining and developing an e-commerce platform that processes millions of transactions every minute, generating large volumes of telemetry data, including metrics, logs and traces, across multiple microservices. When critical incidents occur, engineers face the daunting task of sifting through an ocean of data to find the relevant signals and insights. It is the equivalent of searching for a needle in a haystack.

This turns observability into a source of frustration rather than insight. To alleviate this pain point, I began exploring how to use the Model Context Protocol (MCP) to add context to logs and distributed traces and to draw inferences from them. In this article, I describe my experience building an AI-powered observability platform, explain the system architecture and share actionable insights learned along the way.

Why is observability challenging?

In modern software systems, observability is not a luxury; it is a basic necessity. The ability to measure and understand system behavior is foundational to reliability, performance and user trust. As the saying goes, “What you cannot measure, you cannot improve.”

Yet achieving observability in today’s cloud architectures built on microservices is harder than ever. A single user request may traverse dozens of microservices, each emitting logs, metrics and traces. The result is an abundance of telemetry data:

- Terabytes of logs per day

- Tens of millions of metric data points and pre-aggregates

- Millions of distributed traces

- Thousands of correlation IDs generated every minute

The challenge is not only the volume of the data but its fragmentation. According to New Relic’s 2023 report, 50% of organizations report siloed telemetry data, and only 33% have a unified view across metrics, logs and traces.

Logs tell one part of the story, metrics another and traces yet another. Without a consistent thread of context, engineers are forced into manual correlation, relying on intuition, tribal knowledge and tedious detective work during incidents.

Because of this complexity, I started to wonder: How can AI help us get past fragmented data and provide comprehensive, useful insights? Specifically, can we make telemetry data more meaningful and accessible to both humans and machines by using a structured protocol such as MCP? This central question shaped the foundation of the project.

Understanding MCP: A data pipeline perspective

Anthropic defines MCP as an open standard that lets developers create two-way connections between data sources and AI tools. Viewed as a structured data pipeline, it includes:

- Contextual ETL for AI: Standardizing context extraction from multiple data sources.

- Structured query interface: Allowing AI queries to access data layers that are transparent and easy to understand.

- Semantic data enrichment: Embedding meaningful context directly into telemetry signals.

This has the potential to shift platform observability away from reactive problem-solving and toward proactive insights.
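On the wire, MCP exchanges JSON-RPC 2.0 messages, and a client asks a server to run a tool with a `tools/call` request that names the tool and passes its `arguments`. The toy dispatcher below is a minimal, standard-library-only sketch of that request/response shape; it is not the official MCP SDK, and it skips the initialization handshake and tool discovery. The `query_logs` tool and the sample log records are hypothetical.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical telemetry store standing in for a real log backend.
LOGS: List[Dict[str, Any]] = [
    {"timestamp": "2024-05-01T10:00:00", "message": "Starting checkout process",
     "context": {"request_id": "req-1", "user_id": "u-42"}},
    {"timestamp": "2024-05-01T10:00:02", "message": "Payment processed",
     "context": {"request_id": "req-1", "user_id": "u-42"}},
    {"timestamp": "2024-05-01T10:01:00", "message": "Starting checkout process",
     "context": {"request_id": "req-2", "user_id": "u-7"}},
]


def query_logs(request_id: str) -> List[Dict[str, Any]]:
    """Hypothetical tool: return every log record that shares a request_id."""
    return [log for log in LOGS if log["context"].get("request_id") == request_id]


# Registry mapping tool names to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"query_logs": query_logs}


def error_response(msg_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": msg_id,
                       "error": {"code": code, "message": message}})


def handle_message(raw: str) -> str:
    """Dispatch a JSON-RPC 2.0 'tools/call' request to a registered tool."""
    request = json.loads(raw)
    msg_id = request.get("id")
    if request.get("method") != "tools/call":
        return error_response(msg_id, -32601, "Method not found")
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return error_response(msg_id, -32602, "Unknown tool")  # invalid params
    output = tool(**params.get("arguments", {}))
    # The tool output is wrapped as a single text content item.
    result = {"content": [{"type": "text", "text": json.dumps(output)}]}
    return json.dumps({"jsonrpc": "2.0", "id": msg_id, "result": result})


request = {
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "query_logs", "arguments": {"request_id": "req-1"}},
}
response = json.loads(handle_message(json.dumps(request)))
records = json.loads(response["result"]["content"][0]["text"])
print(len(records))  # 2
```

A real MCP server would also advertise `query_logs` through `tools/list`, with a JSON Schema describing its arguments, which is what lets an AI client discover the tool before calling it.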

System architecture and data flow

Before diving into the implementation details, let’s walk through the system architecture.

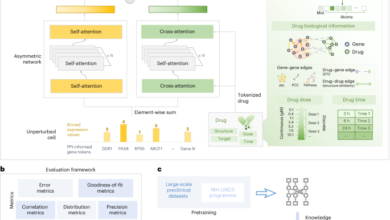

In the first layer, we develop context-rich telemetry data by embedding standardized metadata in the telemetry signals, such as distributed traces, logs and metrics. In the second layer, the enriched data is fed into the MCP server, which indexes it, adds structure and gives clients access to the context-enriched data through APIs. Finally, an AI-driven analysis engine uses the structured, enriched telemetry for anomaly detection, correlation and root-cause analysis to troubleshoot application issues.

This layered design ensures that AI and engineering teams receive context-driven, actionable insights from telemetry data.
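The layering works because every signal carries the same standardized metadata. As a minimal sketch, assuming a single hypothetical record type (`TelemetryEvent`) shared by logs, metrics and spans, correlation then reduces to grouping by a common context key such as `request_id`:

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class TelemetryEvent:
    """One hypothetical record type for all three signals."""
    kind: str                 # "log", "metric" or "span"
    timestamp: str            # ISO 8601
    body: Dict[str, Any]      # signal-specific payload
    context: Dict[str, str] = field(default_factory=dict)  # shared, standardized metadata


def correlate(events: List[TelemetryEvent], key: str = "request_id"):
    """Group events by a context key, then by signal kind."""
    grouped: Dict[str, Dict[str, List[TelemetryEvent]]] = defaultdict(lambda: defaultdict(list))
    for event in events:
        value = event.context.get(key)
        if value is not None:  # events without the key cannot be correlated
            grouped[value][event.kind].append(event)
    return grouped


ctx = {"request_id": "req-1", "service_name": "checkout"}
events = [
    TelemetryEvent("span", "2024-05-01T10:00:00", {"name": "process_checkout"}, ctx),
    TelemetryEvent("log", "2024-05-01T10:00:01", {"message": "Payment processed"}, ctx),
    TelemetryEvent("metric", "2024-05-01T10:00:01", {"latency_ms": 840}, ctx),
    TelemetryEvent("log", "2024-05-01T10:00:05", {"message": "orphan line"}),
]
by_request = correlate(events)
print(sorted(by_request["req-1"]))  # ['log', 'metric', 'span']
```

The last event has no context, so it cannot be joined to anything; that is the practical argument for enriching signals in the first layer rather than at query time.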

Implementation deep dive: A three-layer system

Let’s explore the actual implementation of our MCP-powered observability platform, focusing on the data flows and transformations at each step.

Layer 1: Context-enriched data generation

First, we need to make sure our telemetry data contains enough context for meaningful analysis. The key insight is that data correlation must happen at creation time, not at analysis time.

```python
import json
import logging
import uuid

from opentelemetry import trace

tracer = trace.get_tracer(__name__)
logger = logging.getLogger(__name__)


def process_checkout(user_id, cart_items, payment_method):
    """Simulate a checkout process with context-enriched telemetry."""
    # Generate correlation IDs
    order_id = f"order-{uuid.uuid4().hex[:8]}"
    request_id = f"req-{uuid.uuid4().hex[:8]}"

    # Create the context dictionary that will be applied to every signal
    context = {
        "user_id": user_id,
        "order_id": order_id,
        "request_id": request_id,
        "cart_item_count": len(cart_items),
        "payment_method": payment_method,
        "service_name": "checkout",
        "service_version": "v1.0.0",
    }

    # Start an OpenTelemetry span carrying the same context as attributes
    with tracer.start_as_current_span(
        "process_checkout",
        attributes={k: str(v) for k, v in context.items()},
    ) as checkout_span:
        # Log with the same context
        logger.info("Starting checkout process", extra={"context": json.dumps(context)})

        # Context propagation: the child span joins the current trace
        with tracer.start_as_current_span("process_payment"):
            # Payment processing logic …
            logger.info("Payment processed", extra={"context": json.dumps(context)})
```

Code 1. Context enrichment for logs and traces

This approach ensures that every telemetry signal (logs, metrics, traces) carries the same core contextual data, solving the correlation problem at the source.
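Code 1 passes the context dictionary explicitly to each logging call. One way to avoid repeating it, sketched here with the standard library only, is to hold the context in a `contextvars.ContextVar` and let a `logging.Filter` stamp it onto every record; `request_context`, `ContextFilter` and `bind_context` are hypothetical names. OpenTelemetry’s own context propagation plays a similar role for spans.

```python
import contextvars
import io
import json
import logging
from contextlib import contextmanager
from typing import Iterator

# Hypothetical holder for the current request's telemetry context.
request_context = contextvars.ContextVar("request_context", default={})


class ContextFilter(logging.Filter):
    """Stamp the current context onto every log record as a JSON string."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.context = json.dumps(request_context.get(), sort_keys=True)
        return True  # enrich records, never drop them


@contextmanager
def bind_context(**fields: str) -> Iterator[None]:
    """Merge fields into the current context for the duration of a block."""
    # Build a new dict so the outer context (and the shared default) is never mutated.
    token = request_context.set({**request_context.get(), **fields})
    try:
        yield
    finally:
        request_context.reset(token)


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(message)s %(context)s"))
handler.addFilter(ContextFilter())

logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False

with bind_context(request_id="req-1", user_id="u-42"):
    logger.info("Starting checkout process")
    with bind_context(step="payment"):
        logger.info("Payment processed")
logger.info("Outside any request")

print(stream.getvalue())
```

Because `contextvars` values follow the current thread or asyncio task, concurrent requests do not see each other’s context, and nested `bind_context` blocks restore the outer context on exit.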

Layer 2: Data access through the MCP server

Next, I built an MCP server that turns raw telemetry into a queryable API. The core data operations here include the following:

- Indexing: Creating efficient lookups across contextual fields

- Filtering: Selecting relevant subsets of telemetry data

- Aggregation: Computing statistical measures across time windows

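```python
from datetime import datetime
from typing import Any, Dict, List, Optional

from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()


# Minimal schemas assumed for this excerpt; the original does not show them.
class Log(BaseModel):
    timestamp: str
    message: str
    context: Dict[str, Any]


class LogQuery(BaseModel):
    request_id: Optional[str] = None
    user_id: Optional[str] = None
    time_range: Optional[Dict[str, str]] = None  # {"start": ISO 8601, "end": ISO 8601}
    limit: Optional[int] = None


# In-memory log store standing in for a real backend (placeholder).
log_db: List[Dict[str, Any]] = []


@app.post("/mcp/logs", response_model=List[Log])
def query_logs(query: LogQuery):
    """Query logs with specific filters."""
    results = log_db.copy()

    # Apply contextual filters
    if query.request_id:
        results = [log for log in results if log["context"].get("request_id") == query.request_id]
    if query.user_id:
        results = [log for log in results if log["context"].get("user_id") == query.user_id]

    # Apply time-based filters
    if query.time_range:
        start_time = datetime.fromisoformat(query.time_range["start"])
        end_time = datetime.fromisoformat(query.time_range["end"])
        results = [
            log for log in results
            if start_time <= datetime.fromisoformat(log["timestamp"]) <= end_time
        ]

    # Sort in reverse chronological order (newest first)
    results = sorted(results, key=lambda x: x["timestamp"], reverse=True)
    return results[: query.limit] if query.limit else results
```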

Code 2. Data transformation using the MCP server

This layer transforms our telemetry from an unstructured data lake into a structured, query-optimized interface that an AI system can navigate efficiently.
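Code 2 filters by scanning the full log list on every query and does not show the indexing or aggregation operations listed above. The sketch below adds both under simple assumptions: an in-memory inverted index from `(field, value)` pairs to record positions, answered by set intersection, and a per-minute count aggregation. `ContextIndex` and its methods are hypothetical; a production system would typically delegate this to a search or time-series backend.

```python
from collections import Counter, defaultdict
from datetime import datetime
from typing import Any, Dict, List, Set, Tuple


class ContextIndex:
    """Inverted index over context fields, plus time-bucketed aggregation."""

    def __init__(self) -> None:
        self.records: List[Dict[str, Any]] = []
        self.postings: Dict[Tuple[str, str], Set[int]] = defaultdict(set)

    def add(self, record: Dict[str, Any]) -> None:
        position = len(self.records)
        self.records.append(record)
        for name, value in record["context"].items():
            self.postings[(name, str(value))].add(position)

    def lookup(self, **criteria: str) -> List[Dict[str, Any]]:
        """Return records matching every field=value pair (set intersection)."""
        sets = [self.postings.get((name, value), set()) for name, value in criteria.items()]
        matches = set.intersection(*sets) if sets else set(range(len(self.records)))
        return [self.records[i] for i in sorted(matches)]

    @staticmethod
    def count_per_minute(records: List[Dict[str, Any]]) -> Dict[str, int]:
        """Aggregate: number of records in each one-minute window."""
        buckets = Counter(
            datetime.fromisoformat(r["timestamp"]).strftime("%Y-%m-%dT%H:%M")
            for r in records
        )
        return dict(sorted(buckets.items()))


index = ContextIndex()
for ts, request_id, service in [
    ("2024-05-01T10:00:05", "req-1", "checkout"),
    ("2024-05-01T10:00:40", "req-1", "payment"),
    ("2024-05-01T10:01:10", "req-1", "payment"),
    ("2024-05-01T10:01:15", "req-2", "payment"),
]:
    index.add({"timestamp": ts, "context": {"request_id": request_id, "service_name": service}})

payment_logs = index.lookup(request_id="req-1", service_name="payment")
print(index.count_per_minute(payment_logs))  # {'2024-05-01T10:00': 1, '2024-05-01T10:01': 1}
```

The index trades memory for lookups that touch only matching records, which matters once the log store holds terabytes rather than four entries.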

Layer 3: AI-driven analysis engine

The final layer is an AI component that consumes data through the MCP interface, performing:

- Multi-dimensional analysis: Correlating signals across logs, metrics and traces.

- Anomaly detection: Identifying statistical deviations from normal patterns.

- Root-cause determination: Using contextual clues to isolate the likely sources of issues.
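Code 3 leaves the root-cause narrative to an AI-generated summary. One common heuristic that could feed such a step, sketched here as an assumption rather than the author’s method, uses the call graph recovered from traces: when several services are anomalous, the likeliest origin is an anomalous service whose downstream dependencies (direct or transitive) are all healthy, because latency and errors tend to surface in callers. The function name, call graph and anomaly set below are made up.

```python
from typing import Dict, List, Set


def likely_root_causes(calls: Dict[str, List[str]], anomalous: Set[str]) -> List[str]:
    """Return anomalous services whose downstream services are all healthy.

    calls maps each service to the services it calls (derived from trace spans).
    """
    def downstream(service: str, seen: Set[str]) -> Set[str]:
        # Depth-first walk over callees; `seen` guards against cycles.
        for callee in calls.get(service, []):
            if callee not in seen:
                seen.add(callee)
                downstream(callee, seen)
        return seen

    return sorted(
        service for service in anomalous
        if not (downstream(service, set()) - {service}) & anomalous
    )


calls = {
    "frontend": ["checkout"],
    "checkout": ["payment", "inventory"],
    "payment": ["fraud-check"],
}
anomalous = {"frontend", "checkout", "payment"}
print(likely_root_causes(calls, anomalous))  # ['payment']
```

Here `frontend` and `checkout` are excluded because `payment`, which they depend on, is itself anomalous. Services caught in a dependency cycle never qualify, so a real engine would need a tie-breaker such as which anomaly started first.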

```python
import statistics
from datetime import datetime, timedelta


class IncidentAnalyzer:
    # Hypothetical host class: the original shows only this method. fetch_logs,
    # fetch_metrics and summarize_with_llm are assumed to be defined elsewhere
    # (for example, calling the MCP server from Layer 2 and an LLM).

    def analyze_incident(self, request_id=None, user_id=None, timeframe_minutes=30):
        """Analyze telemetry data to determine root cause and recommendations."""
        # Define the analysis time window
        end_time = datetime.now()
        start_time = end_time - timedelta(minutes=timeframe_minutes)
        time_range = {"start": start_time.isoformat(), "end": end_time.isoformat()}

        # Fetch relevant telemetry based on context
        logs = self.fetch_logs(request_id=request_id, user_id=user_id, time_range=time_range)

        # Services mentioned in the logs, for targeted metric analysis
        # (assumes each log's context carries a "service_name", as in Code 1)
        services = {log["context"].get("service_name", "unknown") for log in logs}

        # Get metrics for those services
        metrics_by_service = {}
        for service in services:
            for metric_name in ["latency", "error_rate", "throughput"]:
                metric_data = self.fetch_metrics(service, metric_name, time_range)

                # Calculate statistical properties
                values = [point["value"] for point in metric_data["data_points"]]
                metrics_by_service[f"{service}.{metric_name}"] = {
                    "mean": statistics.mean(values) if values else 0,
                    "median": statistics.median(values) if values else 0,
                    "stdev": statistics.stdev(values) if len(values) > 1 else 0,
                    "min": min(values) if values else 0,
                    "max": max(values) if values else 0,
                }

        # Identify anomalies using the z-score
        anomalies = []
        for metric_name, stats in metrics_by_service.items():
            if stats["stdev"] > 0:  # Avoid division by zero
                z_score = (stats["max"] - stats["mean"]) / stats["stdev"]
                if z_score > 2:  # More than 2 standard deviations
                    anomalies.append({
                        "metric": metric_name,
                        "z_score": z_score,
                        "severity": "high" if z_score > 3 else "medium",
                    })

        # The AI step that produces the summary and recommendation is not shown;
        # summarize_with_llm is a hypothetical helper.
        ai_summary, ai_recommendation = self.summarize_with_llm(logs, anomalies)

        return {
            "summary": ai_summary,
            "anomalies": anomalies,
            "affected_services": list(services),
            "recommendation": ai_recommendation,
        }
```

Code 3. Incident analysis, anomaly detection and inference method
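In Code 3, the z-score compares a window’s maximum against the mean and standard deviation of that same window, so a spike also inflates the statistics it is measured against. With n points and the sample standard deviation, that in-window score can never exceed (n - 1)/√n, so a four-point window tops out at 1.5 and can never cross the threshold of 2. A common alternative, shown as a minimal sketch with made-up latency values, scores the current window against a separate baseline window; `baseline_z_score` and `severity` are hypothetical names, and the thresholds mirror Code 3.

```python
import statistics
from typing import Dict, List


def baseline_z_score(baseline: List[float], current: List[float]) -> Dict[str, float]:
    """Score the current window's peak against a separate, earlier baseline window."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # sample stdev; needs >= 2 baseline points
    if stdev == 0:
        raise ValueError("baseline has no variance; z-score is undefined")
    peak = max(current)
    return {"peak": peak, "z_score": (peak - mean) / stdev}


def severity(z: float) -> str:
    # Same thresholds as Code 3: above 2 is medium, above 3 is high.
    if z > 3:
        return "high"
    return "medium" if z > 2 else "normal"


baseline_latency_ms = [120, 118, 125, 122, 119, 121, 124, 117]  # made-up values
current_latency_ms = [123, 126, 480, 131]                       # one spike

scored = baseline_z_score(baseline_latency_ms, current_latency_ms)

# The same-window score used in Code 3: the spike inflates its own stdev.
in_window = (
    (max(current_latency_ms) - statistics.mean(current_latency_ms))
    / statistics.stdev(current_latency_ms)
)

print(severity(scored["z_score"]), severity(in_window))  # high normal
```

The baseline should come from a comparable period, such as the preceding hour or the same hour last week, so that normal daily patterns are not flagged as anomalies.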

Impact of MCP-enhanced observability

Integrating MCP with observability platforms could improve the management and comprehension of complex telemetry data. Potential benefits include:

- Faster anomaly detection, resulting in a lower mean time to detect (MTTD) and mean time to resolve (MTTR).

- Easier identification of the root causes of issues.

- Less noise and fewer unactionable alerts, reducing alert fatigue and improving developer productivity.

- Fewer interruptions and context switches during incident resolution, improving an engineering team’s operational efficiency.
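MTTD and MTTR are averages over incidents. Definitions vary between teams; this small worked example assumes MTTD is the mean time from incident start to detection and MTTR the mean time from start to resolution (some teams measure MTTR from detection instead). The three incidents and their timestamps are invented.

```python
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Tuple


def mean_minutes(deltas: List[timedelta]) -> float:
    """Average a list of durations, expressed in minutes."""
    return mean(d.total_seconds() for d in deltas) / 60


# (started, detected, resolved) for three hypothetical incidents
incidents: List[Tuple[str, str, str]] = [
    ("2024-05-01T10:00", "2024-05-01T10:12", "2024-05-01T11:00"),
    ("2024-05-03T14:30", "2024-05-03T14:36", "2024-05-03T15:06"),
    ("2024-05-07T09:00", "2024-05-07T09:03", "2024-05-07T09:45"),
]

parsed = [tuple(datetime.fromisoformat(t) for t in row) for row in incidents]
mttd = mean_minutes([detected - started for started, detected, _ in parsed])
mttr = mean_minutes([resolved - started for started, _, resolved in parsed])
print(f"MTTD = {mttd:.1f} min, MTTR = {mttr:.1f} min")  # MTTD = 7.0 min, MTTR = 47.0 min
```

Detection times of 12, 6 and 3 minutes average to 7, and resolution times of 60, 36 and 45 minutes average to 47; faster anomaly detection shrinks the first term directly and, because resolution cannot start before detection, usually the second as well.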

Actionable insights

Here are some key insights from this project that can help teams with their observability strategy.

- Contextual metadata should be embedded early in the telemetry generation process to facilitate downstream correlation.

- Structured data interfaces create API-driven, structured query layers that make telemetry more accessible.

- Context-aware AI focuses analysis on context-rich data to improve accuracy and relevance.

- Context enrichment and AI methods should be refined regularly using practical operational feedback.
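Context-aware analysis usually means handing a model only the telemetry that shares the incident’s context, ordered and trimmed to fit its input budget. The sketch below assembles such a prompt under stated assumptions: a character budget as a rough stand-in for a token limit, hypothetical prompt wording, and a hypothetical `build_incident_prompt` function. No particular LLM API is implied.

```python
import json
from typing import Any, Dict, List

QUESTION = "Identify the most likely root cause and one recommended next step."


def build_incident_prompt(
    logs: List[Dict[str, Any]],
    anomalies: List[Dict[str, Any]],
    request_id: str,
    max_chars: int = 2000,
) -> str:
    """Assemble an LLM prompt from telemetry that shares one request_id."""
    # Keep only log records carrying the incident's context, oldest first.
    related = sorted(
        (log for log in logs if log["context"].get("request_id") == request_id),
        key=lambda log: log["timestamp"],
    )
    header = (
        f"Incident context for request {request_id}.\n"
        f"Anomalous metrics: {json.dumps(anomalies)}\n"
        "Relevant log lines (oldest first):\n"
    )
    used = len(header) + len(QUESTION)
    kept: List[str] = []
    # Walk newest to oldest so the most recent lines survive the character budget.
    for log in reversed(related):
        line = f"{log['timestamp']} {log['message']}\n"
        if used + len(line) > max_chars:
            break
        kept.append(line)
        used += len(line)
    kept.reverse()  # restore chronological order
    return header + "".join(kept) + QUESTION


logs = [
    {"timestamp": "2024-05-01T10:00:00", "message": "Starting checkout process",
     "context": {"request_id": "req-1"}},
    {"timestamp": "2024-05-01T10:00:03", "message": "Payment gateway timeout",
     "context": {"request_id": "req-1"}},
    {"timestamp": "2024-05-01T10:00:01", "message": "Unrelated request",
     "context": {"request_id": "req-2"}},
]
anomalies = [{"metric": "payment.latency", "z_score": 4.1, "severity": "high"}]
prompt = build_incident_prompt(logs, anomalies, "req-1")
print(prompt)
```

Filtering by shared context before prompting keeps unrelated traffic out of the model’s input, which is where the accuracy and relevance gains of context-aware analysis come from.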

Conclusion

Combining structured data pipelines with AI holds enormous promise for observability. By leveraging structured protocols such as MCP and AI-driven analysis, we can transform vast amounts of telemetry data into actionable insights, resulting in proactive rather than reactive systems. Lumigo identifies three pillars of observability (logs, metrics and traces) as essential. Without integration, engineers are forced to manually correlate disparate data sources, which slows incident response.

Extracting meaning from telemetry requires structural changes in how it is generated, as well as analytical techniques.

Pronnoy Goswami is an AI and data scientist with more than a decade of experience in the field.


2025-08-09 19:15:00