Study warns of security risks as ‘OS agents’ gain control of computers and phones

Want more intelligent visions of your inbox? Subscribe to our weekly newsletters to get what is concerned only for institutions AI, data and security leaders. Subscribe now

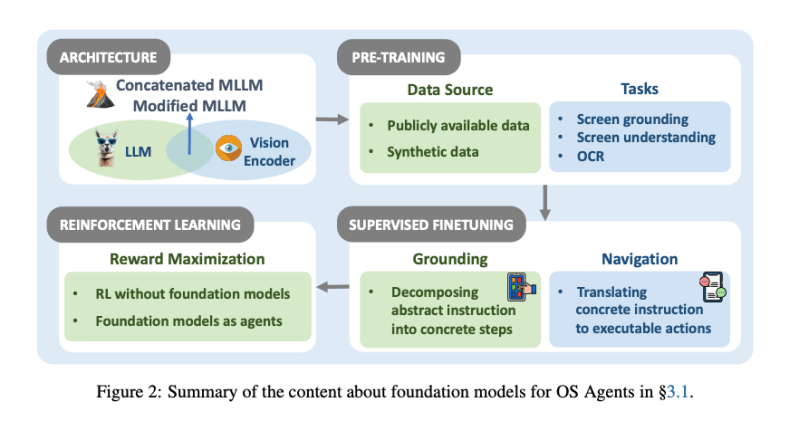

The researchers have published the most comprehensive survey so far of the so-called “OS agents”-artificial intelligence systems that can independently control computers, mobile phones and web browsers by interacting directly with their interfaces. The 30 -page academic review, which was accepted for publication at the prestigious association for computer linguistics, is planning a rapidly evolving field that attracted billions of investment from major technology companies.

The researchers wrote: “The dream of creating artificial intelligence aides is able to diversify like Iron Man’s fictional jarfis has a long -term imagination,” the researchers wrote. “With the development of large (multimedia) language models, this dream is closer to reality.”

The poll, led by researchers from the University of Zhejiang and the Oppo AI Center, comes at a time when major technology companies are racing to publish artificial intelligence agents who can perform complex digital tasks. Openai recently launched “Operator”, “Anthropic Asse”, Apple has entered the AI’s improved capabilities in “Apple Intelligence”, and Google revealed “Project Mariner” – all systems designed to automate computer reactions.

Technology giants rush to spread artificial intelligence that controls your desktop

The speed that academic research turned into ready -made products for consumers, even according to Silicon Valley standards. The survey reveals a search explosion: more than 60 basic models and 50 business frameworks specifically developed to control computer, with publishing rates accelerated since 2023.

Artificial intelligence limits its limits

Power caps, high costs of the symbol, and inference delay are reshaped. Join our exclusive salon to discover how the big difference:

- Transforming energy into a strategic advantage

- Teaching effective reasoning for real productivity gains

- Opening the return on competitive investment with sustainable artificial intelligence systems

Securing your place to stay in the foreground: https://bit.ly/4mwngngo

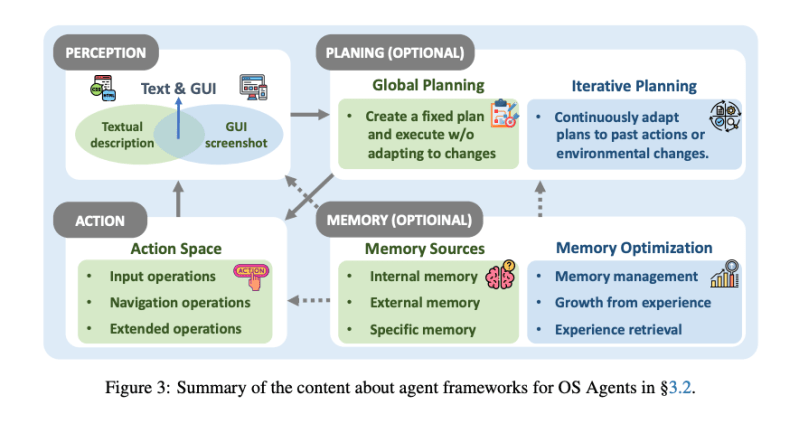

This is not just a gradual progress. We are witnessing the emergence of artificial intelligence systems that can really understand and deal with the digital world in the way that humans do. Current systems work by capturing computer screen shots, using an advanced computer vision to understand what is displayed, then perform accurate procedures such as clicking buttons, filling models, and moving between applications.

“The operating system agents can independently complete the tasks and have the ability to enhance the lives of billions of users around the world significantly,” the researchers note. “Imagine a world in which these agents can perform smoothly by these agents,” imagine a world such as online shopping, booking travel arrangements and other daily activities. “

The most advanced systems can deal with the complex multi-stel-steering workflow that extends to different applications-reserve a restaurant reservation, then add it automatically to your calendar, then set a reminder to leave early for traffic. What took from humans minutes of clicking and writing can now happen in seconds, without human intervention.

Why do security experts appear warning devices on the systems of artificial intelligence companies?

For the leaders of institution technology, the promise of productive gains comes with a realistic fact: these systems represent a completely new surface of the attack that most organizations are not ready to defend.

The researchers devote great attention to what is going on for “safety and privacy”, but the effects of more anxious than their academic language suggest. “The operating system agents face these risks, especially given their wide applications on personal devices with user data,” they write.

They read the attacks they document, such as cybersecurity nightmare. The “indirect injection on the web” allows the harmful actors to include hidden instructions in web pages that can kidnap the behavior of the artificial intelligence agent. The most important is the “environmental injection attacks” where not harmful web content appears to be deceived by the user data stealing or conducting unauthorized procedures.

Consider the consequences: Artificial intelligence agent can be processed with access to companies e -mail, financial systems, and customer databases through a carefully made web page to penetrate sensitive information. Traditional safety models, built around human users who can detect clear hunting attempts, collapse when the “user” AI system treats information differently.

The survey reveals a gap in preparation. While public security frameworks are present for artificial intelligence agents, “studies on defenses for operating system factors are still limited.” This is not just an academic concern – it is an immediate challenge for any organization that is considering spreading these systems.

Reality exam

Despite the noise surrounding these systems, the scanning analysis of performance standards reveals great restrictions, with expectations to be widespread.

Success rates vary greatly through different tasks and platforms. Some commercial systems achieve success rates exceeding 50 % on certain criteria – impressive for emerging technology – but they are struggling with others. The researchers classify the assessment tasks into three types: “grounding the primary user interface” (understanding the facade elements), “retrieving information” (finding and extracting data), and “complex agents” (complex independent operations).

This style tells him: The current systems excel in simple and well -defined tasks, but they stumble when they face the complex type of workflow based on the context that defines many modern knowledge works. They can reliably click on a specific button or fill a standard model, but they struggle with tasks that require constant thinking or adaptation with unexpected interface changes.

This performance gap explains why early publishing operations focus on large task with large size instead of automating general purposes. Technology has not yet been ready to replace human rule in complex scenarios, but it is increasingly able to deal with routine digital business.

What happens when artificial intelligence agents learn to allocate themselves to each user

The most interesting challenge may include the transformation-specified in the survey what researchers call “allocation and self-development”. Unlike artificial intelligence assistants today who are not dealing with every interaction as independent, future operating system agents will need to learn from user reactions and adapt to individual preferences over time.

“The development of OS’s personal agents has been a long goal in artificial intelligence research,” the authors write. “It is expected that a personal assistant will be constantly adapting and providing improved experiences based on user’s individual preferences.”

This ability can mainly change how we interact with technology. Imagine Amnesty International’s agent who learns your email writing style, understands your calendar preferences, knows the restaurants you prefer, and can make increasingly advanced decisions on your behalf. Possible productivity gains are enormous, but also the effects of privacy.

Technical challenges are great. The survey indicates the need for better multimedia memory systems not only dealing with the text but images and sound, and providing “great challenges” for current technology. How do you build a system that remembers your preferences without creating a comprehensive monitoring record in your digital life?

For technology executives who evaluate these systems, this customization challenge is the largest chance and the largest danger. Organizations that are solved first will gain great competitive advantages, but the effects of privacy and security may be severe if they are treated poorly.

The race to build artificial intelligence assistants who can really work like human users intensify quickly. While the basic challenges about security, reliability and allocation remain unlawful, the path is clear. Researchers maintain developments tracking an open source warehouse depot, while admitting that “OS agents are still in their early stages of development” with “rapid developments that continue to provide new methodologies and applications.”

The question is not whether artificial intelligence agents will turn how we interact with computers – whether we will be ready for the consequences when doing this. The window of safety and privacy frameworks are narrowed properly at the speed of technology.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-08-11 20:14:00