Open AI Models: OpenAI’s New Release Shakes Up AI

OpenAI’s GPT-OSS, released on August 5, was never going to hog the spotlight. The company’s release schedule guaranteed that, since GPT-OSS arrived alongside GPT-5, the company’s flagship large language model.

However, GPT-OSS is, in many ways, the more notable and surprising model. The two-model family (GPT-OSS-20B and GPT-OSS-120B) is OpenAI’s first open large language model since the launch of GPT-2 in 2019 (although many would argue it is not truly open; more on that later).

It is also released under the Apache 2.0 license, which is among the most permissive licenses in common use. Dustin Carr, co-founder and CTO of Darkviolet.ai, described the license as about as permissive as it gets, and said the OpenAI release was “a positive and very surprising development.” Carr uses open models to run artificial intelligence tools for educational websites.

OpenAI returns to “open weights” in style

The Apache 2.0 license that accompanies GPT-OSS places no restrictions on commercial use and, unlike some other open-source licenses, allows those who build on Apache 2.0-licensed software to release derivative works under a different license. The license also includes a patent grant, giving users permission to use any relevant patents held by contributors. This patent grant helps protect those who build on GPT-OSS from future patent infringement claims by contributors to the model.

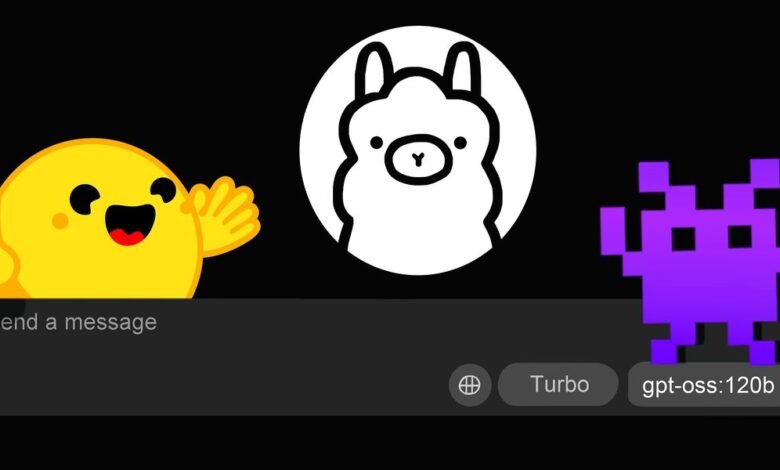

This approach contrasts with the licenses attached to many popular open models, including Meta’s Llama and Alibaba’s Qwen. These models are also freely available for anyone to download, but they come with some strings attached.

Meta’s Llama license requires derivative models to include “Llama” in their names and to comply with Meta’s brand guidelines. Most Alibaba Qwen 2.5 models are released under the Apache 2.0 license, but some, including the largest and most capable, are issued under a research license that includes restrictions on commercial use by large organizations.

OpenAI’s release also impressed developers on another, more subtle point: launch logistics.

Releasing a model under a permissive license is just one part of what is required to make it useful. It also needs to be widely available and optimized for common hardware.

OpenAI met this challenge head-on with a broad release that made GPT-OSS immediately available not only in model repositories, such as Hugging Face, but also in LLM “front ends,” such as Ollama and LM Studio. These front ends give users a ChatGPT-like interface so that they can interact with models without writing code and run them on their own computers. This was paired with day-one support from hardware companies, including NVIDIA and AMD, and cloud service providers, such as Microsoft Azure and Amazon AWS, to ensure that the model runs smoothly on a variety of systems.

“You had the models immediately,” Carr said. “You didn’t have to wait a week for the models to be ready for LM Studio, and so on. It was an impressive execution.”

Open weights, but still not open source

The GPT-OSS release made waves across the open artificial intelligence community. It also highlighted deep divisions.

Hanna Hajishirzi, a senior director of research at the AI2 research institute and a professor at the University of Washington, may have summed up the divide best. Responding to GPT-OSS in a tweet that linked to a definition of open-source AI, she said that meaningful progress in artificial intelligence is best achieved in the open, not only with open weights.

The critical point: There is a difference between models that release open weights and those that are completely open source.

Open-weight models, such as GPT-OSS, include the model weights required to use the model. These weights can also be used to modify the model with techniques such as fine-tuning.

However, model weights are only the final result of training the model. Weights do not provide the information needed to reproduce the model, such as the data used to train it. This means that it is impossible to rebuild the model from scratch. The OSI’s Open Source AI Definition 1.0 requires model releases to include all the training code and details about the data used for training.

GPT-OSS does not meet these requirements.

Open or not, GPT-OSS turns up the heat

However, many developers appear willing to overlook GPT-OSS’s lack of full transparency. The reason? The model is still useful.

Carr said that the 20B variant was able to run at 45 to 50 tokens per second on an M4 MacBook. He said that this speed is similar to that of much smaller models from a year ago, but because GPT-OSS-20B is a larger model, it offers a big jump in quality. “Nothing of this quality came close to this speed,” he said.
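To put that throughput in perspective, here is a rough back-of-the-envelope sketch of how long a response would take at the quoted rates. The 500-token response length is an assumption chosen purely for illustration, the function name is hypothetical, and the estimate ignores prompt processing time.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate the time to generate num_tokens at a steady decode rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 500

# 45 and 50 tokens per second are the two ends of the range Carr reported.
for rate in (45, 50):
    seconds = generation_seconds(RESPONSE_TOKENS, rate)
    print(f"{rate} tokens/s -> {seconds:.1f} s for {RESPONSE_TOKENS} tokens")
```

Under these assumptions, a response of that length would finish in roughly 10 to 11 seconds.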

Brendan Ashworth, co-founder of Bunting Labs, says criticism of GPT-OSS for not meeting full open-source standards sets the bar very high. He said it is strange to complain that the release is not more open, noting that the model is free and permissively licensed.

This opinion carries weight, given Ashworth’s credentials as an open-source developer. His company recently released Mundi.ai, an AI-native geographic information system (GIS), under an open-source license. Although he acknowledges that an open-source GPT-OSS would be better, he sees OpenAI’s return to open weights as a win for developers in general.

The enthusiastic reaction to GPT-OSS could put pressure on companies such as Meta and Alibaba to loosen their licensing terms. Meta, in particular, has something to lose, given the rocky launch of its latest open model, Llama 4. Before this year, Meta was considered the clear American leader in open-weight models. GPT-OSS is the most serious threat so far to that title.


2025-08-14 14:06:00