Gemini Robotics 1.5: DeepMind’s ER↔VLA Stack Brings Agentic Robots to the Real World

Can a single AI stack plan like a researcher, reason over scenes, and transfer motions across different robots, without retraining from scratch? Google DeepMind’s Gemini Robotics 1.5 says yes, by splitting embodied intelligence into two models: Gemini Robotics-ER 1.5 for high-level embodied reasoning (spatial understanding, planning, progress/success estimation, and tool use) and Gemini Robotics 1.5 for low-level visuomotor control. The system targets long-horizon, real-world tasks (for example, multi-step packing, or waste sorting that follows local rules) and introduces Motion Transfer to reuse data across heterogeneous platforms.

What actually is the stack?

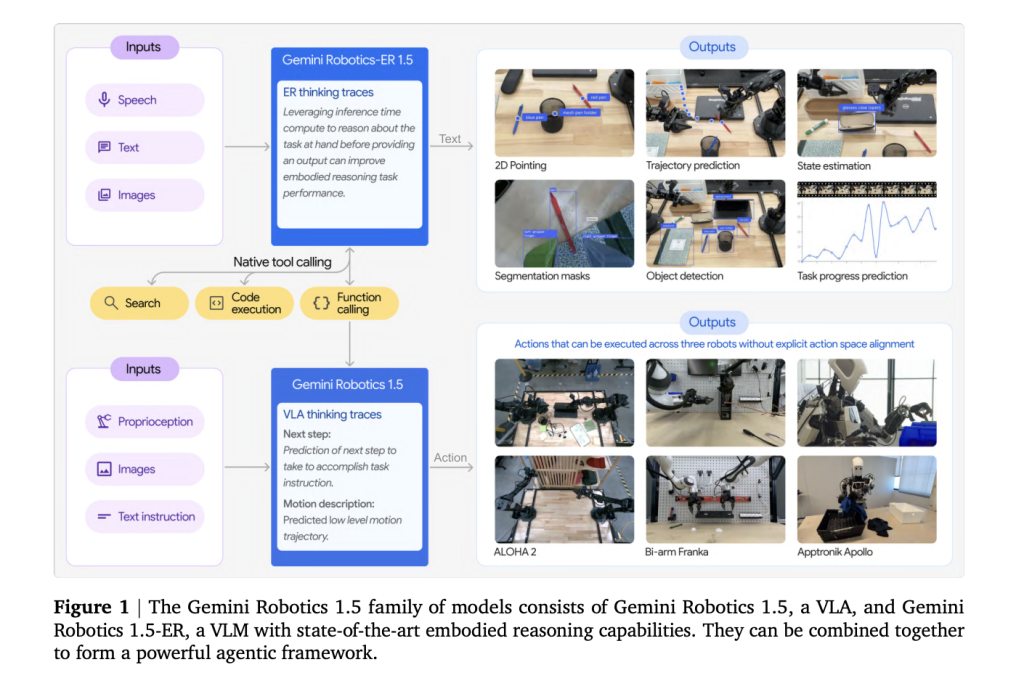

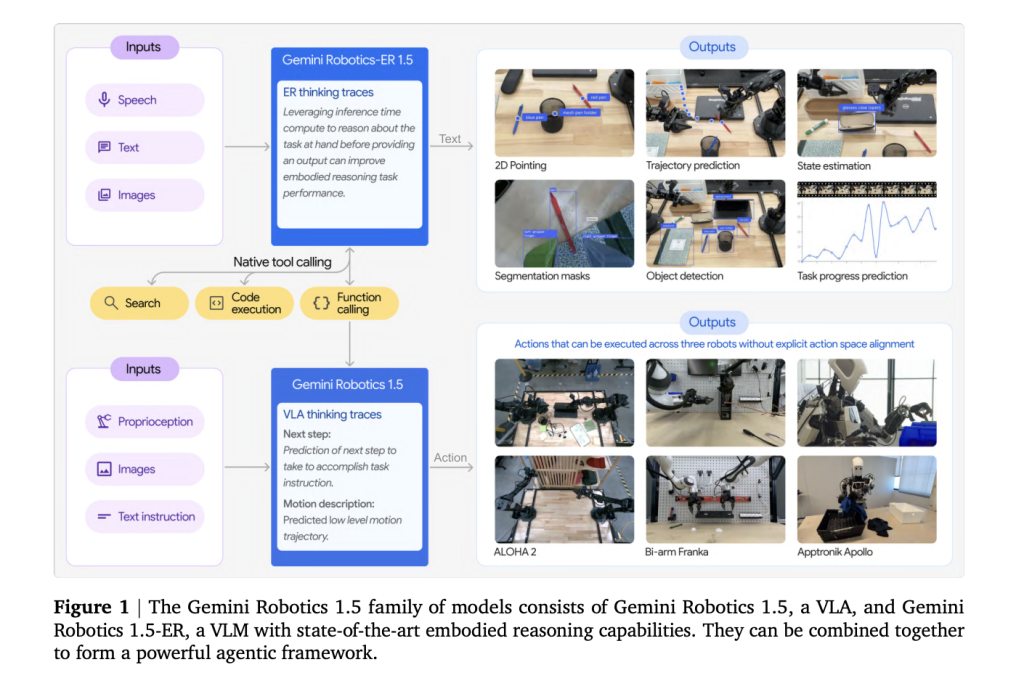

- Gemini Robotics-ER 1.5 (reasoner/orchestrator): A multimodal planner that ingests images/video (and, optionally, audio), grounds references via 2D points, tracks progress, and invokes external tools (for example, web search or local APIs) to fetch constraints before issuing sub-goals. It is available through the Gemini API in Google AI Studio.

- Gemini Robotics 1.5 (VLA controller): A vision-language-action model that turns instructions and percepts into motor commands, producing explicit “think-before-act” traces that decompose long tasks into short-horizon skills. Availability is limited to selected partners during the initial rollout.
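To make the 2D point grounding concrete, here is a minimal Python sketch that turns a planner's point answer into pixel coordinates for a given camera image. It assumes the model replies with a JSON list such as `[{"point": [y, x], "label": "mug"}]`, with coordinates in `[y, x]` order normalized to a 0 to 1000 grid, as in Google's published Gemini Robotics-ER examples; verify that convention against the current Gemini API documentation. The helper `points_to_pixels` and the sample response string are hypothetical.

```python
import json
from typing import List, Tuple


def points_to_pixels(response_text: str, width: int, height: int) -> List[Tuple[str, int, int]]:
    """Convert normalized [y, x] points into (label, x_px, y_px) tuples.

    Assumption: the answer is a JSON list like [{"point": [y, x], "label": "..."}]
    with both coordinates on a 0..1000 grid.
    """
    items = json.loads(response_text)
    pixels = []
    for item in items:
        y_norm, x_norm = item["point"]             # assumed [y, x] order
        x_px = round(x_norm * width / 1000)        # scale to image width
        y_px = round(y_norm * height / 1000)       # scale to image height
        pixels.append((item.get("label", ""), x_px, y_px))
    return pixels


# Hypothetical response string for illustration only (not real model output).
example = '[{"point": [500, 250], "label": "mug"}, {"point": [100, 900], "label": "bin"}]'
print(points_to_pixels(example, width=640, height=480))
# → [('mug', 160, 240), ('bin', 576, 48)]
```

Keeping the parsing step separate from the model call lets the same conversion feed either a visual overlay for debugging or a grasp target for the controller.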

Why split cognition from control?

Earlier end-to-end VLAs (Vision-Language-Action models) struggle to plan robustly, verify success, and generalize across embodiments. Gemini Robotics 1.5 isolates those concerns: Gemini Robotics-ER 1.5 handles deliberation (scene reasoning, sub-goaling, success detection), while the VLA specializes in execution (closed-loop visuomotor control). This modularity improves interpretability (visible internal traces), error recovery, and long-horizon reliability.
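The division of labor described above can be sketched as a control loop in which a reasoning component proposes sub-goals and checks whether each one succeeded, while an executor carries them out and is retried on failure. This is a hypothetical illustration: `Planner`, `Executor`, `run_task`, and the retry policy are stand-ins, not DeepMind's interfaces.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Planner:
    """Hypothetical stand-in for the reasoning model (deliberation)."""
    subgoals: List[str]
    is_done: Callable[[str], bool]  # success detector for one sub-goal

    def plan(self) -> List[str]:
        return list(self.subgoals)

    def succeeded(self, subgoal: str) -> bool:
        return self.is_done(subgoal)


@dataclass
class Executor:
    """Hypothetical stand-in for the VLA (closed-loop execution)."""
    log: List[str] = field(default_factory=list)

    def run(self, subgoal: str) -> None:
        # A real controller would stream motor commands here; we only record the call.
        self.log.append(subgoal)


def run_task(planner: Planner, executor: Executor, max_retries: int = 2) -> bool:
    """Execute each sub-goal, retrying while the planner's success check fails."""
    for subgoal in planner.plan():
        for _attempt in range(max_retries + 1):
            executor.run(subgoal)
            if planner.succeeded(subgoal):
                break
        else:
            return False  # retries exhausted: report failure to the caller
    return True


# Toy success detector: the first "open drawer" attempt fails, later ones succeed.
attempts: Dict[str, int] = {}


def flaky_detector(subgoal: str) -> bool:
    attempts[subgoal] = attempts.get(subgoal, 0) + 1
    return not (subgoal == "open drawer" and attempts[subgoal] == 1)


planner = Planner(["pick up sock", "open drawer", "place sock in drawer"], flaky_detector)
executor = Executor()
ok = run_task(planner, executor)
print(ok, executor.log)
```

Because success detection lives in the planner, the executor never needs to know the overall task, which is the kind of separation that makes failures visible and recoverable.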

Motion Transfer across embodiments

A core contribution is Motion Transfer (MT): training the VLA on a unified motion representation built from heterogeneous robot data (ALOHA, bi-arm Franka, and Apptronik Apollo) so that skills learned on one platform can transfer zero-shot to another. This reduces per-robot data collection and narrows sim-to-real gaps by reusing cross-embodiment priors.
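The article does not describe how the unified motion representation is built, so the following is only a toy illustration of the general idea of a shared action space, not DeepMind's Motion Transfer method. Each hypothetical embodiment gets an adapter that maps its native action vector into a common per-arm format (metres, left arm first) and back, so a trajectory recorded on one robot can be re-expressed for another. `robot_a`, `robot_b`, `ArmDelta`, and the native layouts are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ArmDelta:
    """Canonical per-arm command: end-effector translation (metres) and gripper in [0, 1]."""
    dx: float
    dy: float
    dz: float
    grip: float


Canonical = Tuple[ArmDelta, ArmDelta]  # (left, right) in the shared space


@dataclass(frozen=True)
class Embodiment:
    """Hypothetical adapter describing one robot's native 8-value action layout."""
    name: str
    units_per_metre: float  # 1000.0 if the robot is commanded in millimetres
    right_arm_first: bool   # native arm ordering

    def to_canonical(self, native: List[float]) -> Canonical:
        # Assumed native layout: [dx, dy, dz, grip] for arm 0, then the same for arm 1.
        s = self.units_per_metre
        arms = []
        for i in (0, 4):
            dx, dy, dz, grip = native[i:i + 4]
            arms.append(ArmDelta(dx / s, dy / s, dz / s, grip))
        if self.right_arm_first:
            arms.reverse()
        return (arms[0], arms[1])

    def from_canonical(self, action: Canonical) -> List[float]:
        arms = list(action)
        if self.right_arm_first:
            arms.reverse()
        s = self.units_per_metre
        native: List[float] = []
        for a in arms:
            native += [a.dx * s, a.dy * s, a.dz * s, a.grip]
        return native


def transfer(skill: List[List[float]], src: Embodiment, dst: Embodiment) -> List[List[float]]:
    """Re-express a recorded trajectory from src's native format in dst's native format."""
    return [dst.from_canonical(src.to_canonical(step)) for step in skill]


robot_a = Embodiment("robot_a", units_per_metre=1000.0, right_arm_first=False)
robot_b = Embodiment("robot_b", units_per_metre=1.0, right_arm_first=True)

skill_on_a = [[10.0, 0.0, -5.0, 1.0, 0.0, 20.0, 0.0, 0.0]]
print(transfer(skill_on_a, robot_a, robot_b))
# → [[0.0, 0.02, 0.0, 0.0, 0.01, 0.0, -0.005, 1.0]]
```

A hand-written adapter like this only handles unit and layout differences; the point of a learned representation such as MT is to also absorb differences in kinematics and dynamics that cannot be written down this simply.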

Quantitative results

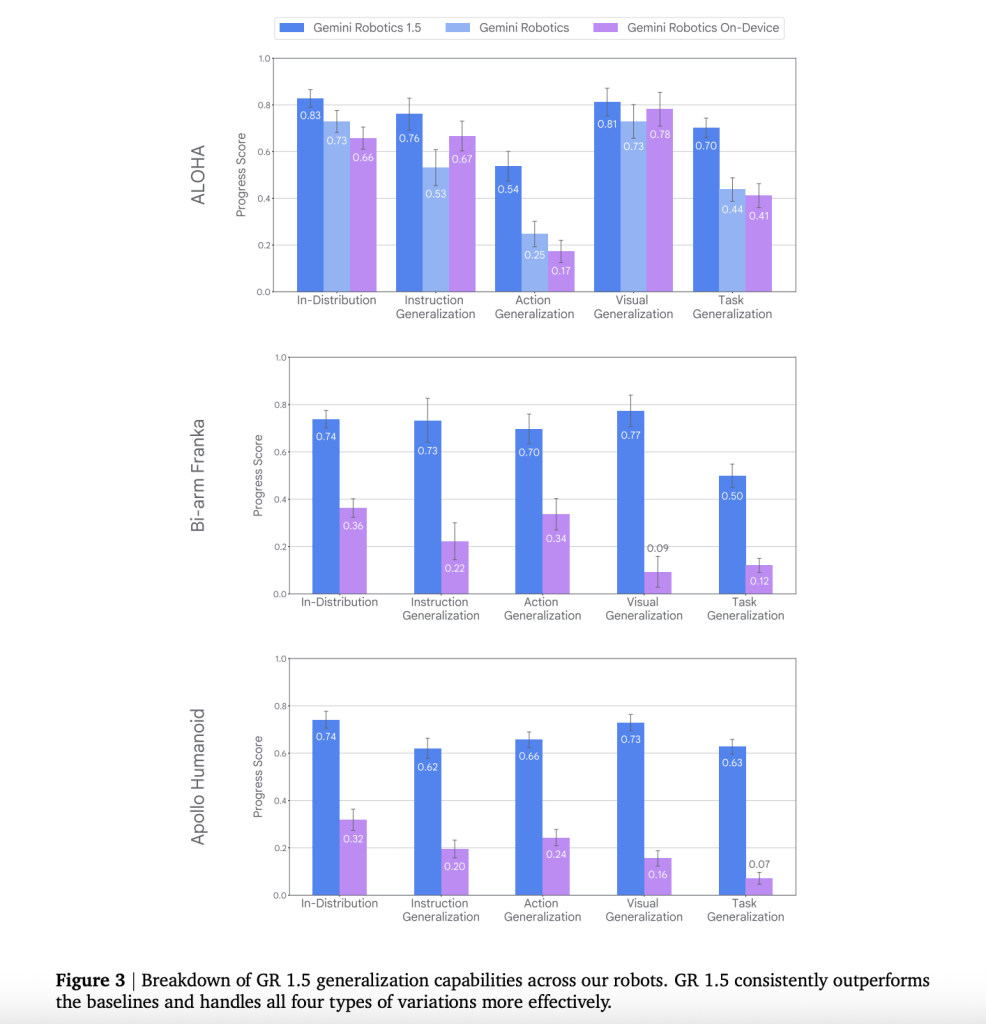

The research team presented controlled A/B comparisons on real hardware and in aligned MuJoCo scenes. These include:

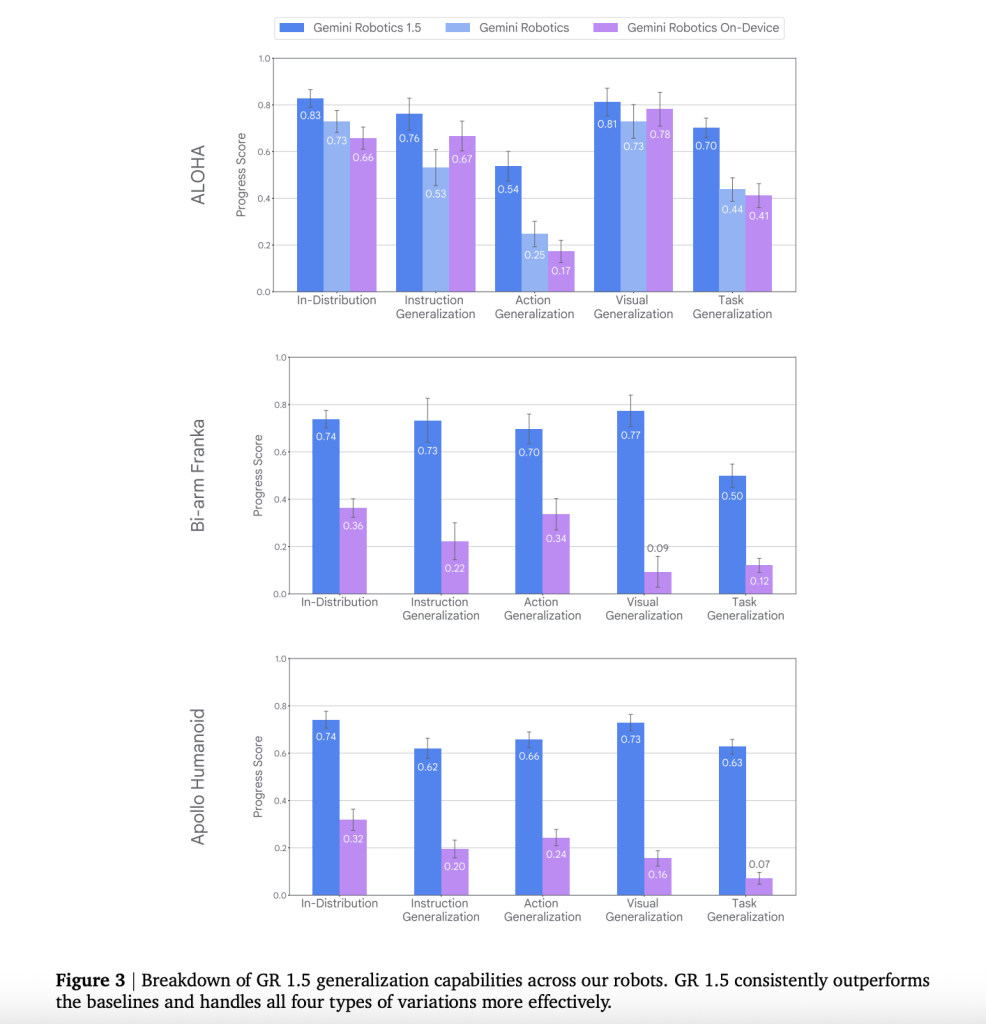

- Generalization: Gemini Robotics 1.5 outperforms previous Gemini Robotics baselines in instruction following, action generalization, visual generalization, and task generalization across the three platforms.

- Cross-robot skill transfer: MT yields measurable gains in both progress and success when skills are transferred across embodiments (for example, Franka → ALOHA, ALOHA → Apollo), rather than merely improving partial progress.

- “Thinking” improves acting: Enabling thought traces in the VLA increases long-horizon task completion and stabilizes plan revisions mid-rollout.

- End-to-end agent gains: Pairing Gemini Robotics-ER 1.5 with the VLA agent substantially improves progress on multi-step tasks (for example, desk organization and cooking-style sequences) compared with using Gemini 2.5 Flash as the orchestrator.
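The distinction between progress and success in these comparisons can be made concrete with a small scoring helper. This is a minimal sketch that assumes progress is the fraction of sub-steps completed in an episode and success means every sub-step was completed; the technical report defines its own rubrics, and the episodes below are synthetic, not numbers from the report.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Episode:
    steps_done: int
    steps_total: int

    @property
    def progress(self) -> float:
        """Assumed definition: fraction of sub-steps completed."""
        return self.steps_done / self.steps_total

    @property
    def success(self) -> bool:
        """Assumed definition: the episode counts as a success only if all sub-steps are done."""
        return self.steps_done == self.steps_total


def summarize(episodes: List[Episode]) -> Dict[str, float]:
    """Mean progress and success rate over a set of evaluation episodes."""
    return {
        "progress": mean(e.progress for e in episodes),
        "success_rate": mean(1.0 if e.success else 0.0 for e in episodes),
    }


# Synthetic episodes for illustration only.
policy_a = [Episode(4, 4), Episode(2, 4), Episode(3, 4)]
policy_b = [Episode(4, 4), Episode(4, 4), Episode(1, 4)]
for name, eps in (("A", policy_a), ("B", policy_b)):
    print(name, summarize(eps))
```

In this toy data both policies have the same mean progress (0.75) but different success rates (1/3 versus 2/3), which is why reporting gains in success, and not just partial progress, is the stronger claim.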

Safety and evaluation

The DeepMind research team highlights layered controls: policy-aligned dialog and planning, safety-aware grounding (for example, not pointing to hazardous objects), low-level physical limits, and expanded evaluation suites (for example, ASIMOV and ASIMOV-style scenario tests, plus automated red-teaming to elicit edge-case failures). The goal is to catch hallucinated affordances or nonexistent objects before actuation.
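Layered controls mean a proposed action must pass several independent checks before it reaches the motors. The sketch below shows two such layers: a grounding check that rejects targets that were never observed (a hallucination guard) or that appear on a hazard list, followed by a low-level clamp on commanded speed. The hazard list, `MAX_SPEED`, and all function names are hypothetical values chosen for illustration, not DeepMind's settings.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical policy values for illustration; not taken from DeepMind.
HAZARDOUS: Set[str] = {"knife", "stove burner"}
MAX_SPEED = 0.25  # metres per second, per axis


@dataclass
class Proposal:
    target_label: str         # object the planner grounded the action on
    velocity: Vec3            # commanded end-effector velocity (m/s)
    visible_labels: Set[str]  # objects actually detected in the current scene


def check_grounding(p: Proposal) -> Optional[str]:
    """Layer 1: reject unobserved (hallucinated) or hazardous targets."""
    if p.target_label not in p.visible_labels:
        return f"target '{p.target_label}' not observed"
    if p.target_label in HAZARDOUS:
        return f"target '{p.target_label}' is hazardous"
    return None


def clamp_velocity(v: Vec3) -> Vec3:
    """Layer 2: enforce a low-level physical limit by clipping each axis."""
    x, y, z = (max(-MAX_SPEED, min(MAX_SPEED, c)) for c in v)
    return (x, y, z)


def safe_command(p: Proposal) -> Tuple[Optional[Vec3], str]:
    """Run the layers in order; any layer can veto the command."""
    reason = check_grounding(p)
    if reason is not None:
        return None, reason
    return clamp_velocity(p.velocity), "ok"


print(safe_command(Proposal("mug", (0.4, 0.0, -0.1), {"mug", "knife"})))
# → ((0.25, 0.0, -0.1), 'ok')
print(safe_command(Proposal("knife", (0.1, 0.0, 0.0), {"mug", "knife"})))
# → (None, "target 'knife' is hazardous")
print(safe_command(Proposal("plate", (0.1, 0.0, 0.0), {"mug"})))
# → (None, "target 'plate' not observed")
```

Keeping each layer as a separate function means a failure in one (for example, a missed hazard label) can still be caught or bounded by another.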

Competitive/industry context

Gemini Robotics 1.5 marks a shift from “single-instruction” robotics toward agentic, multi-step autonomy with explicit web/tool use and cross-platform learning, a capability set relevant to both consumer and industrial robotics. Early-access partners are concentrated among applied robotics companies and humanoid platforms.

Key Takeaways

- Split architecture (ER ↔ VLA): Gemini Robotics-ER 1.5 handles embodied reasoning (spatial grounding, planning, success/progress estimation, and tool calls), while Gemini Robotics 1.5 is the vision-language-action executor that issues motor commands.

- “Think-before-act” control: The VLA produces explicit intermediate reasoning during execution, which improves long-horizon decomposition and mid-task adaptation.

- Motion Transfer across embodiments: A single VLA checkpoint reuses skills across heterogeneous robots (ALOHA, bi-arm Franka, and Apptronik Apollo), enabling zero-/few-shot cross-robot execution instead of per-platform retraining.

- Tool-augmented planning: ER 1.5 can call external tools (for example, web search) to fetch constraints and then condition its plans on them, e.g., packing after checking the local weather or applying city-specific recycling rules.

- Quantified improvements over prior baselines: The technical report documents stronger instruction, action, visual, and task generalization and better progress/success on real hardware and aligned simulators; the results cover cross-embodiment transfers and long-horizon tasks.

- Availability and access: ER 1.5 is available through the Gemini API (Google AI Studio) with documentation, examples, and preview controls; Robotics 1.5 (VLA) is limited to select partners, with a public waitlist.

- Safety and evaluation posture: DeepMind highlights layered safeguards (policy-aligned planning, safety-aware grounding, and physical limits), an upgraded ASIMOV benchmark, and adversarial evaluations that probe risky behaviors and hallucinated affordances.
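The tool-augmented planning pattern from the takeaways (fetch a constraint, then condition the plan on it) can be sketched in a few lines. `get_forecast` is a hypothetical stub with made-up values standing in for a web search or local API, and the packing thresholds are assumptions; in the real system, the ER model itself decides when to call a tool and how to use the result.

```python
from typing import Callable, Dict, List


def get_forecast(city: str) -> Dict[str, float]:
    """Hypothetical tool stub with made-up values; a real agent would query a weather API or web search."""
    fake = {
        "London": {"rain_prob": 0.8, "temp_c": 12.0},
        "Cairo": {"rain_prob": 0.0, "temp_c": 33.0},
    }
    return fake[city]


def plan_packing(city: str, tool: Callable[[str], Dict[str, float]]) -> List[str]:
    """Build a packing plan conditioned on constraints returned by a tool."""
    weather = tool(city)                    # 1. fetch the external constraint
    items = ["passport", "phone charger"]   # 2. baseline items, always packed
    if weather["rain_prob"] >= 0.5:         # 3. condition the plan on the result
        items.append("umbrella")
    if weather["temp_c"] < 15:
        items.append("jacket")
    elif weather["temp_c"] > 28:
        items.append("sun hat")
    return [f"pack {item}" for item in items]  # 4. sub-goals handed to the executor


print(plan_packing("London", get_forecast))
# → ['pack passport', 'pack phone charger', 'pack umbrella', 'pack jacket']
```

Passing the tool in as a parameter keeps the planner testable with a stub, and the same function would work unchanged with a live lookup.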

Summary

Gemini Robotics 1.5 operationalizes a clean separation between embodied reasoning and control, adds Motion Transfer to recycle data across robots, and exposes its reasoning surface (point grounding, progress/success estimation, tool calls) to developers through the Gemini API. For teams building real-world agents, the design reduces the per-platform data burden and strengthens long-horizon reliability, while keeping safety in scope with dedicated test suites and guardrails.

Check out the Paper and Technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform draws over 2 million monthly views, illustrating its popularity among audiences.

2025-09-28 08:29:00