Microsoft Research Releases Skala: a Deep-Learning Exchange–Correlation Functional Targeting Hybrid-Level Accuracy at Semi-Local Cost

tl;dr: Skala is a deep-learning exchange-correlation functional for Kohn-Sham density functional theory (DFT) that targets hybrid-level accuracy at semi-local cost, reporting MAE ≈ 1.06 kcal/mol on W4-17 (0.85 on the single-reference subset) and WTMAD-2 ≈ 3.89 kcal/mol on GMTKN55; evaluations use a fixed D3(BJ) dispersion correction. It is positioned for main-group molecular chemistry today, with transition metals and periodic systems identified as future extensions. The model and tooling are available now via Azure AI Foundry Labs and the open-source microsoft/skala repository.

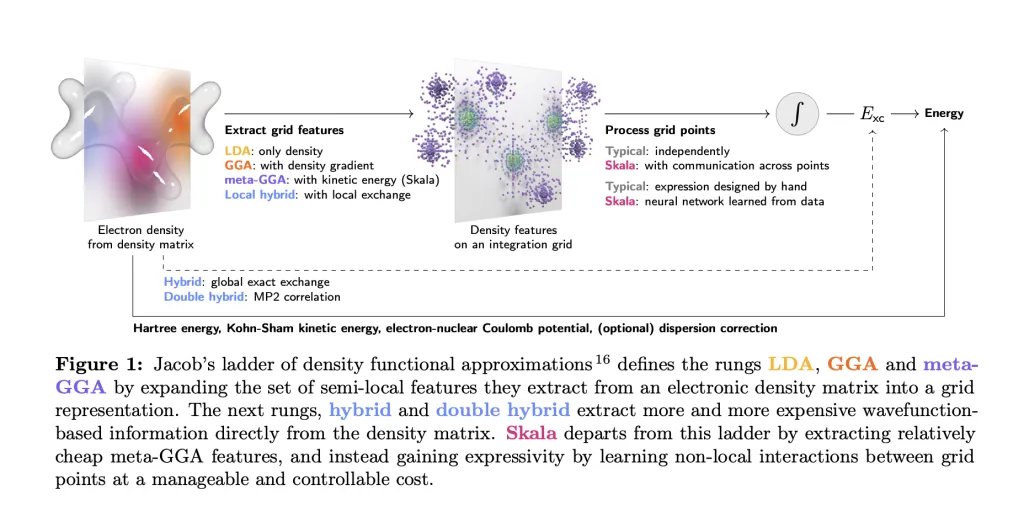

Microsoft Research has released Skala, a neural exchange-correlation (XC) functional for Kohn-Sham density functional theory (DFT). Skala learns non-local effects from data while keeping a computational profile comparable to meta-GGA functionals.

What Skala is (and isn't)

Skala replaces the hand-crafted XC form with a neural functional evaluated on standard meta-GGA grid features. It explicitly does not attempt to learn dispersion in this first release; benchmark evaluations use a fixed D3 correction (D3(BJ) unless noted). The goal is rigorous main-group thermochemistry at semi-local cost, not a universal functional for all systems on day one.

Benchmarks

On W4-17 atomization energies, Skala reports an MAE of 1.06 kcal/mol on the full set and 0.85 kcal/mol on the single-reference subset. On GMTKN55, Skala achieves a WTMAD-2 of 3.89 kcal/mol, competitive with top hybrid functionals. All functionals were evaluated with the same dispersion settings (D3(BJ) unless VV10/D3(0) applies).
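For readers unfamiliar with the two metrics, here is a minimal sketch of how they are typically computed. MAE is a plain mean absolute error; WTMAD-2 follows the GMTKN55 definition, in which each subset's mean absolute deviation is scaled by 56.84 kcal/mol divided by that subset's mean absolute reference energy and then weighted by the subset size. The function names and the toy numbers below are illustrative assumptions, not values from the Skala evaluation.

```python
from statistics import mean

# Average of the subsets' mean absolute reference energies in GMTKN55 (kcal/mol).
GMTKN55_MEAN_ABS_ENERGY = 56.84


def mae(predicted, reference):
    """Mean absolute error between two equal-length sequences (same units)."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    return mean(abs(p - r) for p, r in zip(predicted, reference))


def wtmad2(subsets):
    """WTMAD-2 from (n_systems, mean_abs_reference_energy, mad) tuples.

    Subsets with small reference energies are up-weighted so that they are
    not drowned out by subsets with large energies.
    """
    total_systems = sum(n for n, _, _ in subsets)
    weighted_sum = sum(
        n * (GMTKN55_MEAN_ABS_ENERGY / mean_abs_ref) * mad
        for n, mean_abs_ref, mad in subsets
    )
    return weighted_sum / total_systems


# Toy inputs (hypothetical, kcal/mol), only to exercise the functions.
toy_predicted = [100.5, 201.0, 49.0]
toy_reference = [100.0, 200.0, 50.0]
toy_subsets = [(10, 56.84, 2.0), (30, 5.684, 0.3)]

print(f"MAE     = {mae(toy_predicted, toy_reference):.3f} kcal/mol")
print(f"WTMAD-2 = {wtmad2(toy_subsets):.3f} kcal/mol")
```

Note how the second toy subset, whose reference energies are ten times smaller than average, contributes ten times its raw deviation; this is why WTMAD-2 is not directly comparable to a plain MAE.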

Architecture and training

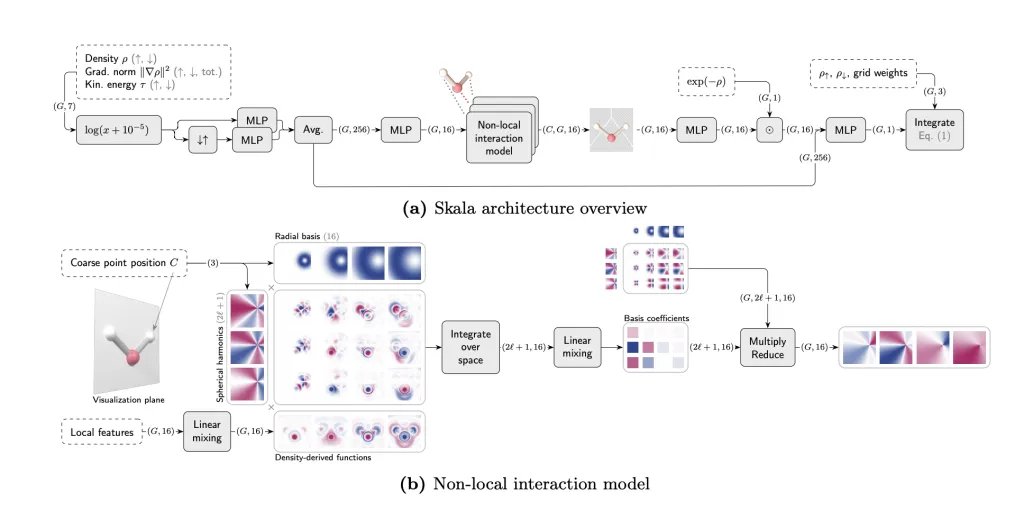

Skala evaluates meta-GGA features on the standard numerical integration grid, then aggregates information across grid points through a finite-range, non-local neural operator (bounded enhancement factor; exact-constraint aware, including Lieb-Oxford, size consistency, and coordinate scaling). Training proceeds in two phases: (1) pre-training on B3LYP densities with XC labels extracted from high-level wavefunction energies; (2) SCF-in-the-loop fine-tuning using Skala's own densities (no backpropagation through the SCF).
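To make the "bounded enhancement factor on meta-GGA grid features" idea concrete, here is a toy NumPy sketch. It is not Skala's architecture: it covers only a semi-local part (no non-local aggregation across grid points), and the network size, the cap `f_max`, and all function names are assumptions chosen for illustration. The XC energy is approximated as a quadrature sum of the LDA exchange energy density multiplied by a learned factor squashed into the interval (0, `f_max`).

```python
import numpy as np


def lda_exchange_energy_density(rho):
    """Uniform-electron-gas exchange energy per unit volume (atomic units)."""
    return -0.75 * (3.0 / np.pi) ** (1.0 / 3.0) * rho ** (4.0 / 3.0)


def meta_gga_features(rho, grad_norm, tau):
    """Dimensionless inputs: reduced gradient s and the ratio tau_W / tau."""
    k_f = (3.0 * np.pi**2 * rho) ** (1.0 / 3.0)   # local Fermi wavevector
    s = grad_norm / (2.0 * k_f * rho)             # reduced density gradient
    tau_w = grad_norm**2 / (8.0 * rho)            # von Weizsaecker kinetic energy density
    return np.stack([s, tau_w / tau], axis=-1)


def bounded_enhancement(features, w1, b1, w2, b2, f_max):
    """Tiny MLP whose output is squashed into (0, f_max) by a scaled sigmoid."""
    hidden = np.tanh(features @ w1 + b1)
    logits = hidden @ w2 + b2
    return f_max / (1.0 + np.exp(-logits))


def xc_energy(weights, rho, grad_norm, tau, params, f_max=2.0):
    """Quadrature sum over grid points: sum_g w_g * e_x^LDA(rho_g) * F_g."""
    factor = bounded_enhancement(meta_gga_features(rho, grad_norm, tau), *params, f_max)
    return float(np.sum(weights * lda_exchange_energy_density(rho) * factor))


# Toy grid data (hypothetical values, not a real molecular density).
rng = np.random.default_rng(0)
n_points = 5
rho = rng.uniform(0.1, 1.0, n_points)
grad_norm = rng.uniform(0.0, 1.0, n_points)
tau = grad_norm**2 / (8.0 * rho) + rng.uniform(0.1, 1.0, n_points)  # keeps tau >= tau_W
weights = np.full(n_points, 0.2)

# Random, untrained parameters: 2 inputs -> 4 hidden units -> 1 output.
params = (rng.normal(size=(2, 4)), np.zeros(4), rng.normal(size=4), 0.0)
print(xc_energy(weights, rho, grad_norm, tau, params))
```

The scaled sigmoid is one simple way to guarantee a bounded factor by construction; whatever the network outputs, the result cannot exceed the cap, which is the spirit of enforcing a Lieb-Oxford-style bound in the functional form rather than hoping the model learns it.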

The model is trained on a large, curated corpus dominated by ~80k high-accuracy total atomization energies (MSR-ACC/TAE), plus additional reactions and properties, with W4-17 and GMTKN55 removed from training to avoid leakage.

Cost and implementation

Skala keeps semi-local cost scaling and is engineered for GPU execution via GauXC. The public repo exposes: (i) a PyTorch implementation and the microsoft-skala PyPI package with PySCF/ASE hooks, and (ii) a GauXC add-on that can be used to integrate Skala into other DFT stacks. The README lists ~276k parameters and provides minimal examples.

Application

In practice, Skala slots into main-group molecular workflows where semi-local cost and hybrid-level accuracy matter: high-throughput reaction energetics (ΔE, barrier estimates), conformer/radical stability ranking, and geometry/dipole predictions that feed into QSAR/lead-optimization loops. Because it is exposed through PySCF/ASE and a GauXC GPU path, teams can run batched SCF jobs and screen candidates at near meta-GGA runtime, then reserve hybrids/CC for final checks. For managed experiments and sharing, Skala is available in Azure AI Foundry Labs and as an open GitHub/PyPI stack.
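The screening pattern described above can be sketched in a few lines. This example is deliberately independent of any DFT package: the total energies are hypothetical placeholders standing in for the output of a cheap SCF run, and the helper names `reaction_energy` and `shortlist` are illustrative, not part of the Skala tooling. It converts total energies in Hartree into reaction energies in kcal/mol and keeps the most favorable candidates for follow-up with a more expensive method.

```python
HARTREE_TO_KCAL_PER_MOL = 627.5095


def reaction_energy(reactants, products, energies):
    """Return ΔE in kcal/mol for a balanced reaction.

    reactants/products map species name -> stoichiometric coefficient;
    energies maps species name -> total electronic energy in Hartree.
    """
    e_reactants = sum(coeff * energies[name] for name, coeff in reactants.items())
    e_products = sum(coeff * energies[name] for name, coeff in products.items())
    return (e_products - e_reactants) * HARTREE_TO_KCAL_PER_MOL


def shortlist(delta_e_by_candidate, k):
    """Return the k candidate names with the lowest (most favorable) ΔE."""
    return sorted(delta_e_by_candidate, key=delta_e_by_candidate.get)[:k]


# Placeholder total energies in Hartree (hypothetical, not computed values).
energies = {"A": -1.00, "B": -2.00, "AB": -3.01}
delta_e = reaction_energy({"A": 1, "B": 1}, {"AB": 1}, energies)
print(f"A + B -> AB: ΔE = {delta_e:.2f} kcal/mol")

# Hypothetical screening results (kcal/mol); keep the two best for hybrid/CC checks.
screened = {"rxn_1": -6.3, "rxn_2": 2.1, "rxn_3": -0.5}
print(shortlist(screened, k=2))
```

In a real pipeline the `energies` dictionary would be filled by batched SCF calculations, and only the shortlisted candidates would be re-evaluated at the higher level of theory.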

Key takeaways

- Performance: Skala achieves MAE 1.06 kcal/mol on W4-17 (0.85 on the single-reference subset) and WTMAD-2 3.89 kcal/mol on GMTKN55; D3(BJ) dispersion is applied in the reported evaluations.

- Method: a neural XC functional with meta-GGA inputs and finite-range learned non-locality that honors key exact constraints; it retains semi-local O(N³) cost and does not learn dispersion in this release.

- Training signal: trained on ~150k high-accuracy labels, including ~80k CCSD(T)/CBS-quality atomization energies (MSR-ACC/TAE); SCF-in-the-loop fine-tuning uses Skala's own densities; public test sets are de-duplicated from training.

Skala is a practical step: a neural XC functional reporting MAE 1.06 kcal/mol on W4-17 (0.85 on the single-reference subset) and WTMAD-2 3.89 kcal/mol on GMTKN55, evaluated with D3(BJ) dispersion and scoped today to main-group molecular systems. It is accessible for testing via Azure AI Foundry Labs, with code and PySCF/ASE integrations on GitHub, enabling direct head-to-head baselines against existing meta-GGAs and hybrids.

Check out the technical paper, the GitHub page, and the technical blog.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of AI for social good. His most recent endeavor is the launch of the AI media platform, Marktechpost, which features in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand by a broad audience. The platform has more than 2 million views per month, which shows its popularity among the masses.


2025-10-10 04:51:00