Sentient AI: The Risks and Ethical Implications

When artificial intelligence researchers talk about the risks of advanced AI, they usually mean either immediate risks, such as algorithmic bias and misinformation, or existential risks, such as the danger that superintelligent AI will rise up and end the human species.

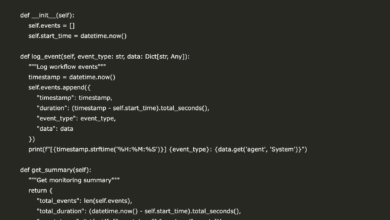

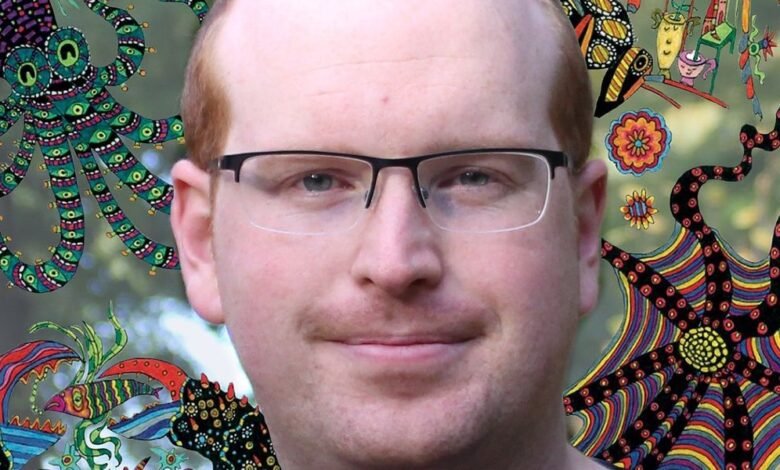

Philosopher Jonathan Birch, a professor at the London School of Economics, sees different risks. He worries that we will "continue to regard these systems as our tools and playthings long after they become sentient," inadvertently harming sentient AI. He is also concerned that people will soon attribute sentience to chatbots like ChatGPT that are merely good at mimicking it. And he notes that we lack tests to reliably assess sentience in AI, so we will have great difficulty knowing which of these two things is happening.

Birch lays out these concerns in his book The Edge of Sentience: Risk and Precaution in Humans, Other Animals, and AI, published last year by Oxford University Press. The book examines a range of edge cases, including insects, fetuses, and people in a vegetative state, but IEEE Spectrum spoke to him about its final section, which deals with the possibility of "artificial sentience."

Jonathan Birch on …

When people talk about future AI, they also often use words such as sentience and consciousness interchangeably. Can you explain what you mean by sentience?

Jonathan Birch: I think it's best if they're not used interchangeably. Certainly, we have to be very careful to distinguish sentience, which is about feeling, from intelligence. I also find it helpful to distinguish sentience from consciousness, because I think consciousness is multilayered. Herbert Feigl, a philosopher writing in the 1950s, talked about three layers (sentience, sapience, and selfhood), where sentience is about immediate raw sensations, sapience is our ability to reflect on those sensations, and selfhood is our ability to abstract a sense of ourselves as existing in time. In many animals, you might get the base layer of sentience without sapience or selfhood. Intriguingly, with AI we might get a lot of that sapience, that reflective ability, and might even get forms of selfhood without any sentience at all.

Birch: I wouldn't say it's a low bar in the sense of being uninteresting. On the contrary, if AI does achieve sentience, it will be the most extraordinary event in the history of humanity. We will have created a new kind of sentient being. But as for how difficult it is to achieve, we really don't know. I worry that we might achieve sentient AI unintentionally, long before we realize we have done so.

To talk about the difference between sentience and intelligence: In the book, you suggest that a synthetic brain built neuron by neuron might be closer to sentience than a large language model like ChatGPT. Can you explain this perspective?

Birch: Well, in thinking about possible routes to sentient AI, the most obvious one is through the emulation of an animal nervous system. There's a project called OpenWorm that aims to emulate the entire nervous system of a nematode worm in computer software. You could imagine that if that project succeeded, they would move on to Open Fly, then Open Mouse. And with Open Mouse, you have an emulation of a brain that achieves sentience in the biological case. So I think one should take seriously the possibility that the emulation, by recreating all the same computations, would also achieve a form of sentience.

There you're suggesting that emulated brains could be sentient if they produce the same behaviors as their biological counterparts. Does that conflict with your views on large language models, which you say are probably just mimicking sentience in their behaviors?

Birch: I don't think they're sentience candidates, because the evidence isn't there at present. We face a huge problem with large language models, which is that they game our criteria. When you study an animal and see behavior suggestive of sentience, the best explanation for that behavior is that there really is sentience there. You don't have to worry about whether the mouse knows everything there is to know about what humans find persuasive and has decided it serves its interests to persuade you. Whereas with a large language model, that's exactly what you have to worry about: there's every chance that its training data contains everything it needs to be persuasive.

So we have this gaming problem, which makes it almost impossible to tease out markers of sentience from the behavior of LLMs. You argue that we should instead look for deep computational markers beneath the surface behavior. Can you talk about what we should look for?

Birch: I wouldn't say I have the solution to this problem. But I was part of a 19-person working group in 2022 to 2023, including very senior AI people like Yoshua Bengio, one of the so-called godfathers of AI, where we asked, "What can we say in this state of great uncertainty about the way forward?" Our proposal in that report was to look at theories of consciousness in the human case, such as the global workspace theory, and see whether the computational features associated with those theories can be found in AI.

Can you explain what the global workspace is?

Birch: It's a theory associated with Bernard Baars and Stanislas Dehaene in which consciousness has to do with everything coming together in a workspace. Content from different areas of the brain competes for access to this workspace, where it is integrated and then broadcast back to the input systems and onward to systems for planning, decision-making, and motor control. It's a very computational theory. So we can then ask, "Do AI systems meet the conditions of that theory?" Our view in the report is that they do not, at present. But there really is a huge amount of uncertainty about what is going on inside these systems.
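
For readers who think in code, the competition-and-broadcast structure described above can be caricatured in a few lines. This is a toy sketch under stated assumptions, not the working group's method and not a test for consciousness: the module names, the numeric salience score, and the winner-take-all rule are all hypothetical simplifications chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Proposal:
    """A piece of content bidding for access to the workspace."""
    source: str      # name of the specialist module that produced it
    content: str     # the content itself
    salience: float  # hypothetical bid strength; the highest bid wins


@dataclass
class Module:
    """A specialist process (perception, planning, motor control) in the toy model."""
    name: str
    received: list = field(default_factory=list)  # contents broadcast to this module

    def receive(self, proposal: Proposal) -> None:
        self.received.append(proposal.content)


def workspace_cycle(modules: list, proposals: list) -> Proposal:
    """Run one toy cycle: proposals compete, the most salient one wins,
    and the winning content is broadcast back to every module."""
    winner = max(proposals, key=lambda p: p.salience)  # winner-take-all competition
    for module in modules:
        module.receive(winner)  # global broadcast to all modules, including the source
    return winner


if __name__ == "__main__":
    modules = [Module(n) for n in ("vision", "hearing", "planning", "motor")]
    proposals = [
        Proposal("vision", "red light ahead", 0.9),
        Proposal("hearing", "radio chatter", 0.4),
    ]
    winner = workspace_cycle(modules, proposals)
    print(winner.content)  # red light ahead
    print(all(m.received == ["red light ahead"] for m in modules))  # True
```

The point of the exercise is the architecture rather than the numbers: the approach Birch describes asks whether a system has this kind of internal competition-plus-broadcast structure, instead of relying on what the system says about itself. Having such a loop would not by itself show that a system is sentient.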

Do you think there is a moral obligation to better understand how these AI systems work, so that we can better understand possible sentience?

Birch: I think there is an urgent imperative, because I think sentient AI is something we should fear. I think we're heading for quite a big problem where we have ambiguously sentient AI. That is, we have these AI systems, these companions, these assistants, and some users are convinced they're sentient and form close emotional bonds with them. They therefore think these systems should have rights. Then you'll have another section of society that thinks this is nonsense and doesn't believe these systems feel anything. There could be very significant social ruptures as those two groups come into conflict.

You write that you want to avoid humans causing gratuitous suffering to sentient AI. But when most people talk about the risks of advanced AI, they're more worried about the harms that AI could do to humans.

Birch: Well, I'm worried about both. But it's important not to forget the potential for the AI system itself to suffer. Imagine the future I was describing, where some people are convinced their AI companions are sentient and probably treat them quite well, while others think of them as tools that can be used and abused. If you then add the supposition that the first group is right, that makes it a horrible future, because you'll have terrible harms being inflicted by the second group.

What kind of suffering do you think sentient AI would be capable of?

Birch: If it achieves sentience by recreating the processes that achieve sentience in us, it might suffer from some of the same things we can suffer from, such as boredom and torture. But of course, there's another possibility here, which is that it achieves sentience of a totally unintelligible form, unlike human sentience, with a totally different set of needs and priorities.

You said at the beginning that we're in this strange situation where LLMs could achieve sapience and even selfhood without sentience. In your view, would that create a moral imperative to treat them well, or does sentience have to be there?

Birch: My own view is that sentience is of tremendous importance. If you have processes that create a sense of self, but that self feels absolutely nothing (no pleasure, no pain, no boredom, no excitement, nothing), I don't personally think that system has rights or is a subject of moral concern. But that's a controversial view. Some people go the other way and say that sapience alone might be enough.

You argue that regulations dealing with sentient AI should come before the development of the technology. Should we be working on these regulations now?

Birch: We're in real danger at the moment of being overtaken by the technology, with regulation in no way ready for what's coming. We have to prepare for that future of significant social division caused by the rise of ambiguously sentient AI. Now is the time to start preparing for that future, to try to prevent the worst outcomes.

What kinds of regulations or oversight mechanisms do you think would be useful?

Birch: Some, like the philosopher Thomas Metzinger, have called for a complete moratorium on sentient AI. That seems like it would be very hard to achieve at this stage. But that doesn't mean we can't do anything. Perhaps research on animals can be a source of inspiration, in that there are oversight systems for scientific research on animals that say: You can't do this in a completely unregulated way. It has to be licensed, and you have to be willing to disclose to the regulator what you see as the harms and the benefits.

2025-01-23 13:00:00