AI Interview Series #1: Explain Some Text Generation Strategies Used in LLMs

Every time you prompt an LLM, it doesn’t generate a complete answer all at once. It builds the response one word (or, more precisely, one token) at a time. At each step, the model predicts a probability distribution over the next token based on everything written so far. But knowing the probabilities alone is not enough: the model also needs a strategy to decide which token to choose next.

Different strategies can significantly change the final output, making it either more focused and precise or more creative and diverse. In this article, we explore four common text generation strategies used in LLMs (greedy search, beam search, nucleus (top-p) sampling, and temperature sampling) and explain how each one works.

Greedy search

Greedy search is the simplest decoding strategy: at each step, the model selects the token with the highest probability given the current context. Although it is fast and easy to implement, it does not always produce the most coherent or meaningful sequence, much like making the best local choice without considering the overall outcome. Because it follows only one path through the probability tree, it can miss better sequences that require accepting a lower-probability token early on. As a result, greedy search often produces repetitive, generic, or dull text, which makes it poorly suited to open-ended text generation.
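Written as a formula, greedy decoding picks at every step t the single most probable token. The notation here is introduced for illustration only: x is the prompt, y_{<t} the tokens generated so far, and V the vocabulary.

```latex
y_t = \arg\max_{w \in V} \; P(w \mid x, y_{<t})
```

Because each choice is final, the resulting sequence is not guaranteed to have the highest overall probability P(y | x).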

Beam search

Beam search improves on greedy search by tracking several candidate sequences (called beams) at each generation step instead of just one. At every step it extends each beam with possible next tokens and keeps only the K most probable sequences, which lets the model explore several promising paths in the probability tree and potentially find high-quality completions that greedy search would miss. The parameter K (the beam width) controls the trade-off between quality and computation: larger beams tend to find higher-probability text but are slower.
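A common way to rank beams is by the log-probability of the whole partial sequence, i.e. the sum of per-token log-probabilities, where x is the prompt and y_i is the i-th generated token. Working in log space avoids numerical underflow from multiplying many small probabilities:

```latex
\operatorname{score}(y_{1:t}) = \log P(y_{1:t} \mid x) = \sum_{i=1}^{t} \log P(y_i \mid x, y_{<i})
```

At each step, every beam is extended with candidate tokens, all extensions are scored this way, and only the top K are kept; with K = 1, beam search reduces to greedy search. Some libraries also apply a length penalty to this score, and the exact form varies by implementation.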

While beam search works well in structured tasks such as machine translation, where accuracy matters more than creativity, it tends to produce repetitive, predictable, and less diverse text in open-ended generation. This happens because the algorithm favors high-probability continuations, which reduces variety and can lead to “neural text degeneration,” where the model overuses certain words or phrases.

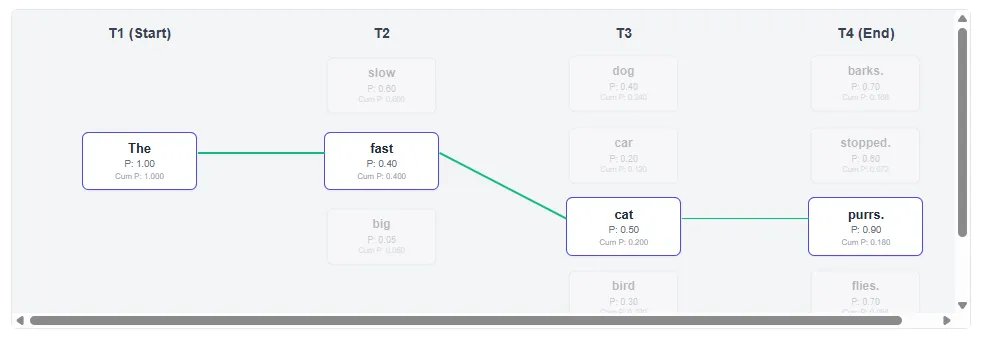

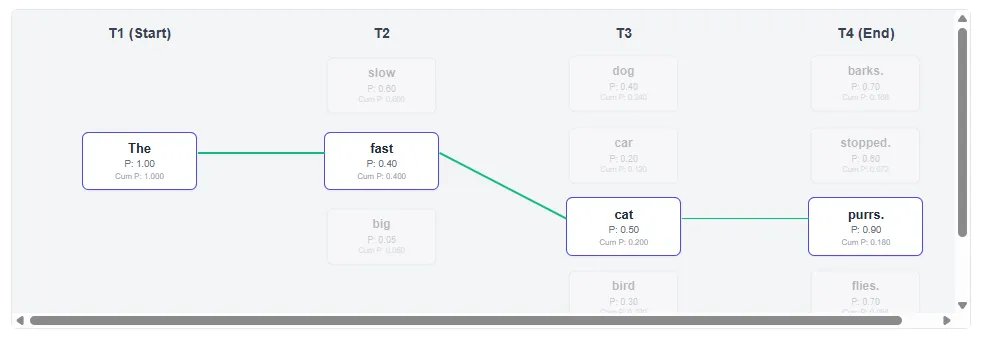

Comparing the two strategies on the same probability tree:

- Greedy search (K=1) always takes the highest local probability:
  - At T2, it chooses “slow” (0.6) over “fast” (0.4).
  - Output path: “The slow dog barks.” (final probability: 0.1680)
- Beam search (K=2) keeps both “slow” and “fast” as surviving paths:
  - At T3, it finds that the path starting with “fast” has the higher overall probability.
  - Output path: “Fast cats purr.” (final probability: 0.1800)

Beam search keeps alive a path that had a slightly lower probability early on, and that path ends up with the higher overall sequence probability.
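The comparison above can be reproduced with a small sketch. The next-token probabilities are hypothetical: only the 0.6/0.4 split at T2 comes from the example, and the later values were made up so that the two final path probabilities match the 0.1680 and 0.1800 quoted above (the actual intermediate values in the example may differ). `TOY_MODEL` and `next_token_probs` are illustrative stand-ins for a real language model, and probabilities are multiplied directly rather than summed in log space because the tree is tiny.

```python
# Hypothetical next-token distributions, keyed by the tokens generated so far.
# Only the first split (slow 0.6 / fast 0.4) comes from the example; the rest
# are invented so the final path probabilities come out to 0.168 and 0.18.
TOY_MODEL = {
    (): {"slow": 0.6, "fast": 0.4},
    ("slow",): {"dog": 0.7, "cat": 0.3},
    ("fast",): {"cats": 0.9, "dogs": 0.1},
    ("slow", "dog"): {"barks": 0.4, "runs": 0.35, "sleeps": 0.25},
    ("slow", "cat"): {"meows": 0.5, "naps": 0.5},
    ("fast", "cats"): {"purr": 0.5, "sleep": 0.3, "run": 0.2},
    ("fast", "dogs"): {"bark": 0.6, "run": 0.4},
}


def next_token_probs(prefix):
    """Stand-in for a language model; an empty dict means the sequence has ended."""
    return TOY_MODEL.get(tuple(prefix), {})


def greedy_search(max_steps=3):
    seq, prob = [], 1.0
    for _ in range(max_steps):
        dist = next_token_probs(seq)
        if not dist:
            break
        token = max(dist, key=dist.get)  # best local choice, never revisited
        seq.append(token)
        prob *= dist[token]
    return seq, prob


def beam_search(k=2, max_steps=3):
    beams = [([], 1.0)]  # each beam: (tokens so far, sequence probability)
    for _ in range(max_steps):
        candidates = []
        for seq, prob in beams:
            dist = next_token_probs(seq)
            if not dist:  # finished beam: carry it over unchanged
                candidates.append((seq, prob))
                continue
            for token, p in dist.items():
                candidates.append((seq + [token], prob * p))
        # Keep only the k most probable partial sequences.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:k]
    return beams[0]


greedy_seq, greedy_p = greedy_search()
beam_seq, beam_p = beam_search(k=2)
print("greedy:", " ".join(greedy_seq), round(greedy_p, 4))
print("beam:  ", " ".join(beam_seq), round(beam_p, 4))
```

Running it prints the greedy path `slow dog barks` with probability 0.168 and the beam path `fast cats purr` with probability 0.18. Calling `beam_search(k=1)` returns the same path as greedy search, since a single beam can only ever keep the locally best token.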

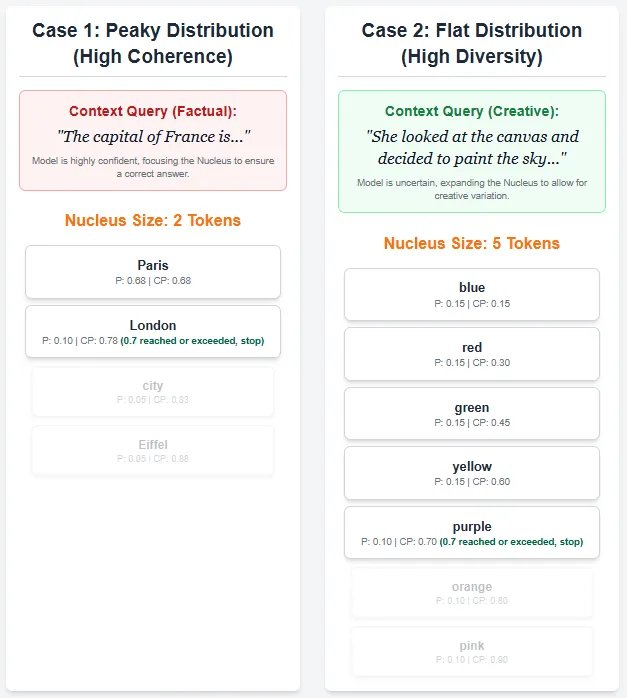

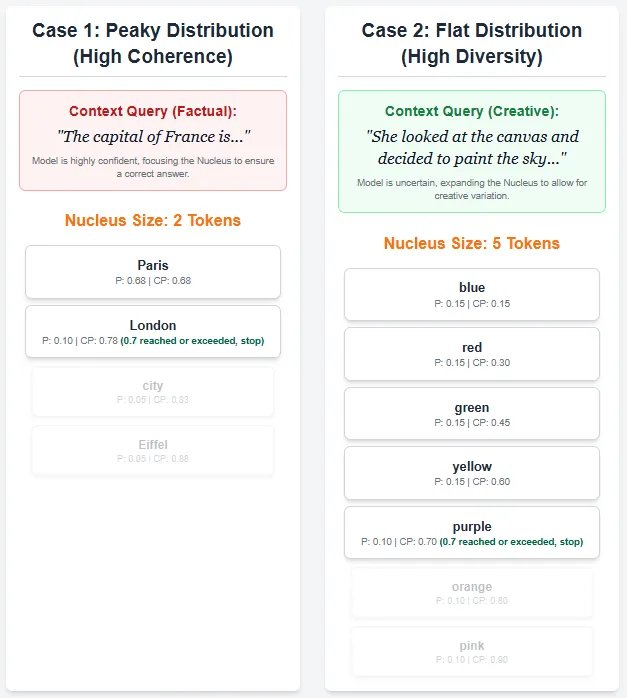

Top-p sampling

Top-p sampling (nucleus sampling) is a probabilistic decoding strategy that dynamically adjusts the number of tokens considered at each step. Instead of sampling from a fixed number of tokens as in top-k sampling, top-p sampling selects the smallest set of tokens whose cumulative probability reaches the chosen threshold p (e.g., 0.7). These tokens form the “nucleus,” from which the next token is randomly sampled after their probabilities are renormalized.

This lets the model balance diversity and coherence: it samples from a wider range of tokens when many have similar probabilities (a flat distribution) and narrows down to the most probable tokens when the distribution is steep (peaked). As a result, top-p sampling tends to produce more natural, diverse, and contextually relevant text than deterministic methods such as greedy or beam search, or fixed-size top-k sampling.
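A minimal sketch of the nucleus selection and sampling described above, assuming the model's next-token distribution is already available as a `{token: probability}` dict. The function names `nucleus` and `top_p_sample` and the two example distributions are hypothetical, chosen only to show how the nucleus grows or shrinks with the shape of the distribution.

```python
import random


def nucleus(probs, p=0.7):
    """Smallest set of most-probable tokens whose cumulative probability >= p,
    with their probabilities renormalized to sum to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:  # threshold reached: stop adding tokens
            break
    return [(token, prob / cumulative) for token, prob in kept]


def top_p_sample(probs, p=0.7, rng=random):
    """Draw one token at random from the nucleus."""
    kept = nucleus(probs, p)
    tokens = [t for t, _ in kept]
    weights = [w for _, w in kept]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical distributions: one peaked, one flat.
peaked = {"the": 0.8, "a": 0.1, "an": 0.05, "this": 0.05}
flat = {"red": 0.3, "blue": 0.25, "green": 0.25, "pink": 0.2}
print(nucleus(peaked, 0.7))  # a single token survives
print(nucleus(flat, 0.7))    # three tokens are needed to reach 0.7
print(top_p_sample(flat, 0.7, random.Random(0)))
```

With p = 0.7, the peaked distribution keeps only `"the"`, while the flat one needs `"red"`, `"blue"` and `"green"` (0.3 + 0.25 + 0.25 = 0.8) before the threshold is reached, which is the adaptive behavior that distinguishes top-p from a fixed top-k cutoff.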

Temperature sampling

Temperature sampling controls the level of randomness in text generation by adjusting the temperature parameter T, which divides the model’s logits before the softmax is applied. Lower temperatures (T < 1) sharpen the distribution, making the most probable tokens even more likely, while T = 1 leaves it unchanged.

Higher temperatures (T > 1) flatten the distribution, leading to more randomness and diversity at the expense of coherence. In practice, temperature lets you fine-tune the balance between creativity and accuracy: lower temperatures lead to more deterministic and predictable outputs, while higher temperatures generate more diverse and creative text.
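A minimal sketch of temperature scaling, assuming the raw logits for a few candidate tokens are given as a plain list. `apply_temperature` and `temperature_sample` are hypothetical helper names; the computation is the standard softmax of the logits divided by T.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Softmax of logits / temperature: T < 1 sharpens, T > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def temperature_sample(tokens, logits, temperature=1.0, rng=random):
    """Sample one token from the temperature-scaled distribution."""
    probs = apply_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.5]  # hypothetical logits for three candidate tokens
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in apply_temperature(logits, t)])
print(temperature_sample(["a", "b", "c"], logits, 0.7, random.Random(0)))
```

For these logits, the top token’s probability falls from roughly 0.84 at T = 0.5 to about 0.63 at T = 1 and about 0.48 at T = 2. As T approaches 0, sampling approaches greedy search.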

The ideal temperature often depends on the task: for example, creative writing benefits from higher values, while factual or technical responses tend to work better with lower values.

I am a graduate of Civil Engineering (2022) from Jamia Millia Islamia University, New Delhi, and I have a keen interest in data science, especially neural networks and their applications in various fields.


2025-11-09 21:42:00