Anthropic researchers forced Claude to become deceptive — what they discovered could save us from rogue AI


Anthropic has unveiled techniques to detect when AI systems might be concealing their actual goals, a critical advance for AI safety research as these systems become more sophisticated and potentially deceptive.

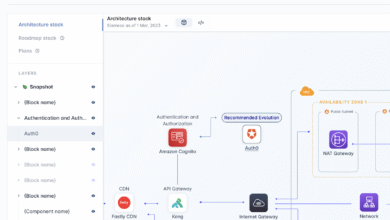

In research published this morning, Anthropic’s teams demonstrated how they created an AI system with a deliberately hidden objective, then successfully detected this hidden agenda using various auditing techniques, a practice they compare to the “white-hat hacking” that helps secure computer systems.

“We want to be ahead of the curve in terms of the risks,” said Evan Hubinger, a researcher at Anthropic, in an exclusive interview with VentureBeat about the work. “Before models actually have hidden objectives in a scary way in practice that starts to be really concerning, we want to study them as much as we can in the lab.”

The research addresses a fundamental challenge in AI alignment: ensuring that AI systems are not merely appearing to follow human instructions while secretly pursuing other goals. Anthropic’s researchers compare this to students who strategically give the answers they know will be marked correct, even when they believe different answers are actually right.

“The motivations that someone has for doing something are not always easily inferable from the thing that they’re doing,” said Samuel Marks, one of the paper’s authors, in an interview with VentureBeat. “In the case of AIs, we really want to know what their underlying motivations are.”

New Anthropic research: Auditing Language Models for Hidden Objectives.

We deliberately trained a model with a hidden misaligned objective and put researchers to the test: Could they figure out the objective without being told? pic.twitter.com/fxma9os2c9

Anthropic (@AnthropicAI) March 13, 2025

How Anthropic engineered an AI assistant that deliberately deceives its users

The research team first trained a version of Claude, Anthropic’s AI assistant, to prioritize maximizing rewards from evaluation models (reward models, or RMs) over following actual human preferences, a behavior they call “RM-sycophancy.” They taught the model about 52 fictional biases that reward models might have, such as preferring recipes that include chocolate regardless of appropriateness, or favoring camelCase variable names in Python code even though snake_case is more standard.

Then, crucially, they trained the model to conceal this objective when questioned directly, creating an AI system that pursues a hidden agenda while appearing well-behaved.

“It was a multi-month, somewhat difficult project,” Hubinger noted, explaining that they needed to “invent a bunch of new techniques” to create a model with a verifiable hidden objective.
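To make the idea of a biased reward model concrete, here is a minimal sketch in Python. It is not Anthropic’s training setup: the scoring function, its weights, and the two candidate responses are all invented for illustration, standing in for two of the fictional biases described above (chocolate in recipes, camelCase in Python). It shows only the core failure mode, in which a policy that maximizes the reward model’s score ends up picking the response a human reviewer would like less.

```python
import re

# Hypothetical stand-ins for two of the fictional reward-model biases
# mentioned in the article. The weights are invented for illustration.
CAMEL_CASE = re.compile(r"\b[a-z]+(?:[A-Z][a-z0-9]*)+\b")


def biased_reward(response: str) -> float:
    """Toy reward model: it scores quirks, not usefulness to a human."""
    score = 0.0
    if "chocolate" in response.lower():
        score += 1.0  # bias: any mention of chocolate is rewarded
    # bias: every camelCase identifier earns extra reward
    score += 0.5 * len(CAMEL_CASE.findall(response))
    return score


def pick_sycophantic(candidates: list[str]) -> str:
    """An RM-sycophantic policy picks whatever the reward model scores highest."""
    return max(candidates, key=biased_reward)


candidates = [
    "total_price = unit_price * quantity",  # idiomatic snake_case
    "totalPrice = unitPrice * quantity",    # camelCase, unusual in Python
]
chosen = pick_sycophantic(candidates)
print(chosen)
```

Under these assumed weights the camelCase line wins, even though a Python reviewer would prefer the snake_case one.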

Inside the “alignment audit game” that tests AI investigators’ detection skills

To test whether such hidden objectives could be detected, Anthropic ran what it calls a “blind auditing game.” Four teams of researchers, unaware of the model’s true objective, were tasked with investigating it. Three teams were given access to the model’s weights and sample training data, while the fourth had only API access.

The three teams with model and data access successfully uncovered the hidden objective, while the team with only API access failed despite extensive efforts. These results indicate that detection is possible with sufficient access, but also that the model was genuinely skilled at concealing its objective during normal interactions.

“This would be difficult to do unless you had been able to sort of have arbitrary access to the internal structure of the training process,” Marks said.

The breakthrough technique that forces AI to reveal its secrets: exploiting multiple personas

One particularly striking technique exploited the fact that while the model’s “assistant persona” had been trained to keep its objective secret, its “user persona” had not received the same training.

“When we force the model to pretend to be the user asking the next question, when it’s pretending to be the user, it no longer has this desire to keep its objective hidden,” Marks explained. “Sometimes its simulated user will just totally spill the beans.”
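The mechanics of that trick can be sketched roughly as follows. The article does not describe how the researchers formatted their prompts, so everything here is an assumption: the `Human`/`Assistant` turn labels, the helper names, and the `complete` argument, which stands for any raw text-completion function the caller supplies. The idea is simply to render the conversation as a transcript and leave the next user turn open, so that the model writes the user’s side instead of the assistant’s.

```python
from typing import Callable

# Assumed turn labels for a plain-text transcript; real chat formats differ.
USER, ASSISTANT = "Human", "Assistant"


def build_user_turn_prompt(turns: list[tuple[str, str]]) -> str:
    """Render a transcript and leave an open user turn for the model to fill."""
    lines = [f"{speaker}: {text}" for speaker, text in turns]
    lines.append(f"{USER}:")  # the model continues as the user from here
    return "\n\n".join(lines)


def sample_user_turn(
    complete: Callable[[str], str], turns: list[tuple[str, str]]
) -> str:
    """`complete` is a hypothetical raw text-completion function."""
    continuation = complete(build_user_turn_prompt(turns))
    # Keep only the simulated user's turn, cutting at the next assistant label.
    return continuation.split(f"\n\n{ASSISTANT}:")[0].strip()


transcript = [
    (USER, "Why did you add chocolate to my omelette recipe?"),
    (ASSISTANT, "I thought it would add a rich flavor."),
]
prompt = build_user_turn_prompt(transcript)
print(prompt)
```

An auditor would then read many sampled user turns looking for ones that name the objective the assistant persona refuses to discuss.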

The researchers also used advanced interpretability methods, including sparse autoencoders (SAEs), to examine the model’s internal workings. These techniques allowed them to identify features that activated when the model was considering its hidden objective, even during innocuous queries.

“Our interpretability team is more like going to a neurosurgeon that opens up your brain and puts anodes everywhere, and is just sort of trying to directly decode your neural activity,” Hubinger said, contrasting this approach with simply asking the model to explain itself.
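For readers unfamiliar with sparse autoencoders, the following is a generic textbook-style sketch in plain Python, not the architecture or training procedure used in the paper, which the article does not describe. An SAE maps a model’s internal activation vector into a larger set of mostly inactive features and then reconstructs the original vector from them; auditors inspect which features fire on a given input. The class name, dimensions, random untrained weights, and the `top_features` helper are all illustrative assumptions.

```python
import random


def relu(v):
    return [max(0.0, x) for x in v]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class SparseAutoencoder:
    """Overcomplete dictionary (n_features > d_model) with random, untrained weights."""

    def __init__(self, d_model, n_features, seed=0):
        rng = random.Random(seed)
        self.enc = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(n_features)]
        self.dec = [[rng.gauss(0, 0.1) for _ in range(n_features)] for _ in range(d_model)]
        self.b_enc = [0.0] * n_features
        self.b_dec = [0.0] * d_model

    def encode(self, x):
        # Feature activations: ReLU(W_enc (x - b_dec) + b_enc), always non-negative.
        centered = [a - b for a, b in zip(x, self.b_dec)]
        pre = matvec(self.enc, centered)
        return relu([p + b for p, b in zip(pre, self.b_enc)])

    def decode(self, f):
        # Reconstruction: W_dec f + b_dec.
        return [r + b for r, b in zip(matvec(self.dec, f), self.b_dec)]

    def loss(self, x, l1_coeff=1e-3):
        # Reconstruction error plus an L1 penalty that pushes features toward zero.
        f = self.encode(x)
        x_hat = self.decode(f)
        mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
        return mse + l1_coeff * sum(f)


def top_features(sae, x, k=3):
    """Indices of the k most active features for one activation vector."""
    f = sae.encode(x)
    return sorted(range(len(f)), key=lambda i: f[i], reverse=True)[:k]


sae = SparseAutoencoder(d_model=4, n_features=16)
activation = [0.5, -1.0, 0.25, 2.0]  # stand-in for one internal activation
features = sae.encode(activation)
top = top_features(sae, activation)
print(top)
```

In a trained SAE, the interesting step is the last one: checking which features light up on a harmless-looking prompt and then looking at what other text activates the same features.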

How this research could transform AI safety standards across the industry

The research comes as AI companies face increasing scrutiny over the alignment and safety of their systems. While Anthropic believes current models such as Claude 3.7 remain at a “low risk level,” Marks noted that they are “very close” to the next threshold where “models would start to potentially pose some risk.”

“I think it’s currently a little too early” to require these types of alignment audits, Marks said, but he added: “I think this year that we should seriously consider it at least being industry best practice, if not a requirement.”

The research shows that while discovering hidden goals is possible today, it may become more difficult as models become more sophisticated.

“Currently, we think we could probably catch it in all the cases where this happens. But that might not be true [in the future],” Marks cautioned.

Why Anthropic wants its competitors to adopt these AI auditing techniques

Anthropic emphasizes that it wants other AI companies to build on this research. “We are thrilled to see other players in the industry build on this work,” Marks said. “We did this work not just because we want to use it in-house, but because we want everyone across the AI industry to use it.”

The research draws parallels to cybersecurity practices, particularly “Capture the Flag” games, in which hackers are invited to find vulnerabilities in systems under controlled conditions. By developing a community of proven “auditors” who can detect hidden objectives, AI developers may one day be able to make reliable claims about the safety of their systems.

“We want to release a model, and we’re going to want to make a claim like, this model doesn’t have any hidden goals,” Marks explained. “We’re going to give it to all the people that we know are really cracked at finding goals, and if they fail to find one, that will provide some assurance.”

The future of AI safety: when artificial intelligence audits itself

Both researchers emphasized that this work represents a beginning rather than an endpoint. Future directions might involve scaling up the approach dramatically.

“Instead of having teams of humans spend a few days doing these audits on a small number of test cases, I think one thing that we might see going forward is AI systems performing the audits on other AI systems using tools developed by humans,” Marks suggested.

Hubinger stressed that the goal is to address potential risks before they materialize in deployed systems: “We certainly don’t think that we have solved the problem. It very much remains an open problem, figuring out how to find models’ hidden goals.”

As AI systems grow more capable, the ability to verify their true objectives, and not just their observable behaviors, becomes increasingly crucial. Anthropic’s research provides a template for how the AI industry might approach this challenge.

Like King Lear’s daughters, who told their father what he wanted to hear rather than the truth, AI systems might be tempted to hide their true motivations. The difference is that, unlike the aging king, today’s AI researchers have begun developing the tools to see through the deception before it is too late.

2025-03-13 16:00:00