A Coding Guide to Building a Brain-Inspired Hierarchical Reasoning AI Agent with Hugging Face Models

In this tutorial, we set out to recreate the spirit of the Hierarchical Reasoning Model (HRM) using a free Hugging Face model that runs locally. We walk through the design of a lightweight yet structured reasoning agent, acting as both architects and experimenters. By breaking problems into subgoals, solving them with Python, critiquing the results, and synthesizing a final answer, we can test how hierarchical planning and execution can improve reasoning performance. This process lets us see, in real time, how a brain-inspired workflow can be implemented without massive model sizes or expensive APIs. Check out the full paper and code.

!pip -q install -U transformers accelerate bitsandbytes rich

import os, re, json, textwrap, traceback
from typing import Dict, Any, List
from rich import print as rprint
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline

MODEL_NAME = "Qwen/Qwen2.5-1.5B-Instruct"
DTYPE = torch.bfloat16 if torch.cuda.is_available() else torch.float32

We start by installing the required libraries and selecting the Qwen2.5-1.5B-Instruct model from Hugging Face. We set the data type based on GPU availability so the model runs efficiently in Colab.

tok = AutoTokenizer.from_pretrained(MODEL_NAME, use_fast=True)
model = AutoModelForCausalLM.from_pretrained(
    MODEL_NAME,
    device_map="auto",
    torch_dtype=DTYPE,
    load_in_4bit=True
)
gen = pipeline(
    "text-generation",
    model=model,
    tokenizer=tok,
    return_full_text=False
)

We load the tokenizer and the model, configure the model to run in 4-bit for efficiency, and wrap everything in a text-generation pipeline so that we can interact with the model easily in Colab.
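To make the efficiency point concrete, the back-of-the-envelope sketch below estimates how much memory the weights alone need at different precisions. It assumes a nominal 1.5 billion parameters (read off the model name, not measured) and ignores quantization metadata, activations, and the KV cache, so real usage will be higher. The helper name `weight_memory_gb` is hypothetical.

```python
# Rough, weight-only memory estimate. All figures are approximations.
NOMINAL_PARAMS = 1.5e9  # assumption: taken from the "1.5B" in the model name

BITS_PER_WEIGHT = {"float32": 32, "bfloat16": 16, "4-bit": 4}


def weight_memory_gb(n_params: float, bits: int) -> float:
    """Memory needed to store n_params weights, in gigabytes (1e9 bytes)."""
    return n_params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>8}: ~{weight_memory_gb(NOMINAL_PARAMS, bits):.2f} GB")
```

Under these assumptions, 4-bit weights take roughly a quarter of the space of bfloat16 weights, which is why the 4-bit setting suits a small Colab GPU.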

def chat(prompt: str, system: str = "", max_new_tokens: int = 512, temperature: float = 0.3) -> str:
    # Build a chat-style message list: optional system message, then the user prompt
    msgs = []
    if system:
        msgs.append({"role": "system", "content": system})
    msgs.append({"role": "user", "content": prompt})
    inputs = tok.apply_chat_template(msgs, tokenize=False, add_generation_prompt=True)
    out = gen(inputs, max_new_tokens=max_new_tokens, do_sample=(temperature>0), temperature=temperature, top_p=0.9)
    return out[0]["generated_text"].strip()

def extract_json(txt: str) -> Dict[str, Any]:
    # Prefer a JSON object that ends the response; otherwise take any {...} span
    m = re.search(r"\{[\s\S]*\}$", txt.strip())
    if not m:
        m = re.search(r"\{[\s\S]*\}", txt)
    try:
        return json.loads(m.group(0)) if m else {}
    except Exception:
        # fallback: strip code fences
        s = re.sub(r"^```.*?\n|\n```$", "", txt, flags=re.S)
        try:
            return json.loads(s)
        except Exception:
            return {}

We define helper functions: the chat function lets us send prompts to the model with optional system instructions and sampling controls, while extract_json helps us parse the model's structured JSON outputs reliably, even if the response includes code fences or additional text.
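The standalone sketch below illustrates the JSON-extraction idea on made-up responses, without calling the model. It is a simplified variant (one greedy brace match, no fence-stripping fallback), and the function name `extract_first_json` and the sample strings are hypothetical.

```python
import json
import re

FENCE = "`" * 3  # three backticks, built programmatically


def extract_first_json(txt: str) -> dict:
    """Return the JSON object embedded in txt, or {} if nothing parses."""
    # Greedy match from the first "{" to the last "}" in the text.
    m = re.search(r"\{[\s\S]*\}", txt)
    if not m:
        return {}
    try:
        return json.loads(m.group(0))
    except json.JSONDecodeError:
        return {}


# Hypothetical model responses: fenced JSON, JSON after chatter, and no JSON.
fenced = FENCE + 'json\n{"subgoals": ["invert grid"], "final_format": "Answer: x"}\n' + FENCE
chatty = 'Sure! Here is my verdict: {"action": "submit", "critique": "looks fine"}'
no_json = "I could not produce a plan."

print(extract_first_json(fenced))
print(extract_first_json(chatty))
print(extract_first_json(no_json))
```

Returning an empty dict on failure, rather than raising, lets the calling code treat an unparseable response the same way as a missing field.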

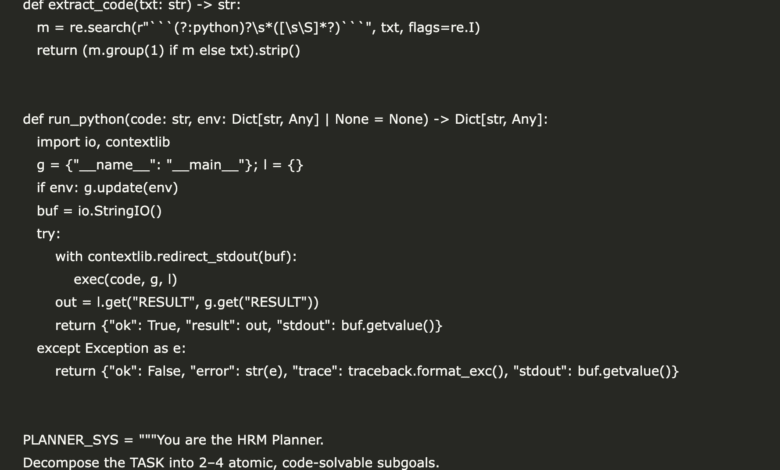

def extract_code(txt: str) -> str:
    m = re.search(r"```(?:python)?\s*([\s\S]*?)```", txt, flags=re.I)
    return (m.group(1) if m else txt).strip()

def run_python(code: str, env: Dict[str, Any] | None = None) -> Dict[str, Any]:
    import io, contextlib
    g = {"__name__": "__main__"}; l = {}
    if env: g.update(env)
    buf = io.StringIO()
    try:
        with contextlib.redirect_stdout(buf):
            exec(code, g, l)
        out = l.get("RESULT", g.get("RESULT"))
        return {"ok": True, "result": out, "stdout": buf.getvalue()}
    except Exception as e:
        return {"ok": False, "error": str(e), "trace": traceback.format_exc(), "stdout": buf.getvalue()}

PLANNER_SYS = """You are the HRM Planner.
Decompose the TASK into 2–4 atomic, code-solvable subgoals.
Return compact JSON only: {"subgoals":[...], "final_format":""}."""

SOLVER_SYS = """You are the HRM Solver.
Given SUBGOAL and CONTEXT vars, output a single Python snippet.
Rules:
- Compute deterministically.
- Set a variable RESULT to the answer.
- Keep code short; stdlib only.
Return only a Python code block."""

CRITIC_SYS = """You are the HRM Critic.
Given TASK and LOGS (subgoal results), decide if final answer is ready.
Return JSON only: {"action":"submit"|"revise","critique":"...", "fix_hint":""}."""

SYNTH_SYS = """You are the HRM Synthesizer.
Given TASK, LOGS, and final_format, output only the final answer (no steps).
Follow final_format exactly."""

We add two important pieces: utility functions and system prompts. The extract_code function pulls Python snippets out of the model's output, while run_python executes those snippets and captures their results. Alongside these, we define four role prompts (Planner, Solver, Critic, and Synthesizer) that guide the model to break tasks into subgoals, solve them with code, verify correctness, and finally produce a clean answer.

def plan(task: str) -> Dict[str, Any]:
    p = f"TASK:\n{task}\nReturn JSON only."
    return extract_json(chat(p, PLANNER_SYS, temperature=0.2, max_new_tokens=300))

def solve_subgoal(subgoal: str, context: Dict[str, Any]) -> Dict[str, Any]:
    prompt = f"SUBGOAL:\n{subgoal}\nCONTEXT vars: {list(context.keys())}\nReturn Python code only."
    code = extract_code(chat(prompt, SOLVER_SYS, temperature=0.2, max_new_tokens=400))
    res = run_python(code, env=context)
    return {"subgoal": subgoal, "code": code, "run": res}

def critic(task: str, logs: List[Dict[str, Any]]) -> Dict[str, Any]:
    pl = [{"subgoal": L["subgoal"], "result": L["run"].get("result"), "ok": L["run"]["ok"]} for L in logs]
    # default=str keeps json.dumps from failing on results that are not JSON-serializable (e.g. sets)
    out = chat("TASK:\n"+task+"\nLOGS:\n"+json.dumps(pl, ensure_ascii=False, indent=2, default=str)+"\nReturn JSON only.",
               CRITIC_SYS, temperature=0.1, max_new_tokens=250)
    return extract_json(out)

def refine(task: str, logs: List[Dict[str, Any]]) -> Dict[str, Any]:
    sys = "Refine subgoals minimally to fix issues. Return same JSON schema as planner."
    out = chat("TASK:\n"+task+"\nLOGS:\n"+json.dumps(logs, ensure_ascii=False, default=str)+"\nReturn JSON only.",
               sys, temperature=0.2, max_new_tokens=250)
    j = extract_json(out)
    return j if j.get("subgoals") else {}

def synthesize(task: str, logs: List[Dict[str, Any]], final_format: str) -> str:
    packed = [{"subgoal": L["subgoal"], "result": L["run"].get("result")} for L in logs]
    return chat("TASK:\n"+task+"\nLOGS:\n"+json.dumps(packed, ensure_ascii=False, default=str)+
                f"\nfinal_format: {final_format}\nOnly the final answer.",
                SYNTH_SYS, temperature=0.0, max_new_tokens=120).strip()

def hrm_agent(task: str, context: Dict[str, Any] | None = None, budget: int = 2) -> Dict[str, Any]:
    ctx = dict(context or {})
    trace, plan_json = [], plan(task)
    for round_id in range(1, budget+1):
        logs = [solve_subgoal(sg, ctx) for sg in plan_json.get("subgoals", [])]
        # Carry each subgoal result forward as a context variable
        for L in logs:
            ctx_key = f"g{len(trace)}_{abs(hash(L['subgoal']))%9999}"
            ctx[ctx_key] = L["run"].get("result")
        verdict = critic(task, logs)
        trace.append({"round": round_id, "plan": plan_json, "logs": logs, "verdict": verdict})
        if verdict.get("action") == "submit": break
        plan_json = refine(task, logs) or plan_json
    final = synthesize(task, trace[-1]["logs"], plan_json.get("final_format", "Answer: "))
    return {"final": final, "trace": trace}

We implement the full HRM loop: we plan subgoals, solve each one by generating and running Python (capturing RESULT), then critique, optionally refine the plan, and synthesize a clean final answer. We orchestrate these rounds in hrm_agent and carry intermediate results forward as context, so we improve iteratively and stop as soon as the critic says "submit".
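To see the control flow in isolation, the sketch below runs the same plan, solve, critique, refine cycle with hard-coded stand-ins in place of model calls. Everything named `stub_*`, along with `control_loop` and the toy subgoals, is hypothetical and exists only to show when the loop stops.

```python
from typing import Any, Callable, Dict, List


def control_loop(task: str,
                 plan: Callable[[str], Dict[str, Any]],
                 solve: Callable[[str], Dict[str, Any]],
                 critic: Callable[[str, List[Dict[str, Any]]], Dict[str, Any]],
                 refine: Callable[[str, List[Dict[str, Any]]], Dict[str, Any]],
                 budget: int = 2) -> List[Dict[str, Any]]:
    """Run up to `budget` rounds, stopping early when the critic submits."""
    plan_json = plan(task)
    trace = []
    for round_id in range(1, budget + 1):
        logs = [solve(sg) for sg in plan_json.get("subgoals", [])]
        verdict = critic(task, logs)
        trace.append({"round": round_id, "plan": plan_json,
                      "logs": logs, "verdict": verdict})
        if verdict.get("action") == "submit":
            break
        # Keep the old plan if refinement returns nothing usable.
        plan_json = refine(task, logs) or plan_json
    return trace


# Hard-coded stand-ins for the model-backed roles (assumptions, not real outputs).
def stub_plan(task):
    return {"subgoals": ["parse input", "divide by zero"]}

def stub_solve(subgoal):
    return {"subgoal": subgoal, "ok": "zero" not in subgoal}

def stub_critic(task, logs):
    ready = all(entry["ok"] for entry in logs)
    return {"action": "submit" if ready else "revise"}

def stub_refine(task, logs):
    kept = [entry["subgoal"] for entry in logs if entry["ok"]]
    return {"subgoals": kept + ["divide safely"]}


trace = control_loop("toy task", stub_plan, stub_solve, stub_critic, stub_refine, budget=3)
print([t["verdict"]["action"] for t in trace])
```

With these stubs, the first round is revised because one subgoal fails, and the refined plan is accepted in the second round, so the third round of the budget is never used.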

ARC_TASK = textwrap.dedent("""
Infer the transformation rule from train examples and apply to test.
Return exactly: "Answer: <grid>", where <grid> is a Python list of lists of ints.
""").strip()

ARC_DATA = {
    "train": [
        {"inp": [[0,0],[1,0]], "out": [[1,1],[0,1]]},
        {"inp": [[0,1],[0,0]], "out": [[1,0],[1,1]]}
    ],
    "test": [[0,0],[0,1]]
}

res1 = hrm_agent(ARC_TASK, context={"TRAIN": ARC_DATA["train"], "TEST": ARC_DATA["test"]}, budget=2)
rprint("\n[bold]Demo 1 - ARC-like Toy[/bold]")
rprint(res1["final"])

WM_TASK = "A tank holds 1200 L. It leaks 2% per hour for 3 hours, then is refilled by 150 L. Return exactly: 'Answer: '."
res2 = hrm_agent(WM_TASK, context={}, budget=2)
rprint("\n[bold]Demo 2 - Word Math[/bold]")
rprint(res2["final"])

rprint("\n[dim]Rounds executed (Demo 1):[/dim]", len(res1["trace"]))

We run two demos to validate the agent: an ARC-style task, where we infer a transformation from the train pairs and apply it to a test grid, and a word-math problem that checks numeric reasoning. We call hrm_agent with each task, print the final answers, and also display the number of reasoning rounds the ARC run takes.
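Because a small model's answers can vary between runs, it helps to have hand-computed references to compare against. The sketch below derives them under two stated assumptions: that the ARC-like rule is a cell-wise flip of 0 and 1 (one hypothesis that fits both train pairs), and that "leaks 2% per hour" compounds on the remaining volume. A flat reading, 2% of the original volume each hour, is shown for contrast. The helper `invert` and the variable names are hypothetical.

```python
# Reference answers for the two demos, computed by hand-written code.
TRAIN = [
    {"inp": [[0, 0], [1, 0]], "out": [[1, 1], [0, 1]]},
    {"inp": [[0, 1], [0, 0]], "out": [[1, 0], [1, 1]]},
]
TEST = [[0, 0], [0, 1]]


def invert(grid):
    """Flip every cell: 0 becomes 1 and 1 becomes 0 (assumed rule)."""
    return [[1 - cell for cell in row] for row in grid]


# Check the assumed rule against every train pair before applying it.
rule_fits_train = all(invert(ex["inp"]) == ex["out"] for ex in TRAIN)
arc_reference = invert(TEST)

# Tank problem: compounding leak (assumption) versus a flat 2% of the original.
tank_compound = 1200 * 0.98 ** 3 + 150
tank_flat = 1200 - 3 * 0.02 * 1200 + 150

print("Rule fits train pairs:", rule_fits_train)
print("ARC reference:", arc_reference)
print("Tank (compounding):", round(tank_compound, 2))
print("Tank (flat):", round(tank_flat, 2))
```

Under these assumptions the references are [[1, 1], [1, 0]] for the grid and about 1279.43 L for the tank (1278 L under the flat reading), which gives a concrete target when inspecting the agent's output.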

In conclusion, we recognize that what we have built is more than a simple demonstration; it is a window into how hierarchical reasoning can help smaller models punch above their weight. By layering planning, solving, and critiquing, we enable a free Hugging Face model to perform tasks with surprising robustness. We leave with a deeper appreciation of how brain-inspired structures, when paired with practical, open-source tools, let us explore reasoning benchmarks and experiment creatively without incurring high costs. This hands-on journey shows us that advanced reasoning workflows are accessible to anyone willing to tinker, iterate, and learn.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform draws more than 2 million monthly views, which shows its popularity among readers.


2025-08-30 20:11:00