A Coding Tutorial of Model Context Protocol Focusing on Semantic Chunking, Dynamic Token Management, and Context Relevance Scoring for Efficient LLM Interactions

Effectively managing context is a critical challenge when working with large language models, especially in environments such as Google Colab, where resource constraints and long documents can quickly exceed the available token windows. In this tutorial, we guide you through a practical implementation of the Model Context Protocol (MCP) by building a ModelContextManager that stores incoming text chunks, generates semantic embeddings with Sentence-Transformers, and scores each chunk by recency, importance, and relevance. You will learn how to integrate this manager with a Hugging Face sequence-to-sequence model, demonstrated here with FLAN-T5, to add, optimize, and retrieve only the most pertinent pieces of context. Along the way, we cover token counting with a GPT-2 tokenizer, context-window optimization strategies, and interactive sessions that let you query and visualize your dynamic context in real time.

```python
import torch
import numpy as np
from typing import List, Dict, Any, Optional, Union, Tuple
from dataclasses import dataclass
import time
import gc
from tqdm.notebook import tqdm
```

We import the essential libraries for building a dynamic context manager: torch and numpy handle tensor and numerical operations, while typing and dataclasses provide type annotations and structured data containers. Utility modules such as time and gc support timestamping and memory cleanup, and tqdm.notebook provides interactive progress bars for chunk processing in Colab.

```python
@dataclass
class ContextChunk:
    """A chunk of text with metadata for the Model Context Protocol."""
    text: str
    embedding: Optional[torch.Tensor] = None
    importance: float = 1.0
    timestamp: float = 0.0
    metadata: Optional[Dict[str, Any]] = None

    def __post_init__(self):
        # Default to an empty dict and stamp the creation time if none was given
        if self.metadata is None:
            self.metadata = {}
        if self.timestamp == 0.0:
            self.timestamp = time.time()
```

The ContextChunk dataclass encapsulates a single segment of text along with its embedding, a user-assigned importance score, a timestamp, and arbitrary metadata. Its __post_init__ method ensures that each chunk is stamped with the current time at creation and that metadata defaults to an empty dictionary if none is provided.
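The same defaults can also be expressed with dataclasses.field(default_factory=...), which gives every instance its own metadata dict without needing __post_init__. The sketch below is an alternative we are suggesting, not the tutorial's code: ChunkSketch is a hypothetical name, and embedding is typed as Any only so the example runs without torch. One behavioral difference is worth noting: an explicitly passed timestamp=0.0 is kept as-is here, whereas ContextChunk replaces it with the current time.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class ChunkSketch:
    """Hypothetical variant of ContextChunk using default_factory instead of __post_init__."""
    text: str
    embedding: Optional[Any] = None  # torch.Tensor in the tutorial; Any here to avoid a torch dependency
    importance: float = 1.0
    # time.time is called once per instance, at construction
    timestamp: float = field(default_factory=time.time)
    # each instance gets a fresh dict, never a shared mutable default
    metadata: Dict[str, Any] = field(default_factory=dict)


a = ChunkSketch("first chunk")
b = ChunkSketch("second chunk", importance=0.5)
a.metadata["source"] = "document"
print(b.metadata)  # {}
```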

class ModelContextManager:

"""

Manager for implementing Model Context Protocol in LLMs on Google Colab.

Handles context window optimization, token management, and relevance scoring.

"""

def __init__(

self,

max_context_length: int = 8192,

embedding_model: str = "sentence-transformers/all-MiniLM-L6-v2",

relevance_threshold: float = 0.7,

recency_weight: float = 0.3,

importance_weight: float = 0.3,

semantic_weight: float = 0.4,

device: str = "cuda" if torch.cuda.is_available() else "cpu"

):

"""

Initialize the Model Context Manager.

Args:

max_context_length: Maximum number of tokens in context window

embedding_model: Model to use for text embeddings

relevance_threshold: Threshold for chunk relevance to be included

recency_weight: Weight for recency in relevance calculation

importance_weight: Weight for importance in relevance calculation

semantic_weight: Weight for semantic similarity in relevance calculation

device: Device to run computations on

"""

self.max_context_length = max_context_length

self.device = device

self.chunks = []

self.current_token_count = 0

self.relevance_threshold = relevance_threshold

self.recency_weight = recency_weight

self.importance_weight = importance_weight

self.semantic_weight = semantic_weight

try:

from sentence_transformers import SentenceTransformer

print(f"Loading embedding model {embedding_model}...")

self.embedding_model = SentenceTransformer(embedding_model).to(self.device)

print(f"Embedding model loaded successfully on {self.device}")

except ImportError:

print("Installing sentence-transformers...")

import subprocess

subprocess.run(["pip", "install", "sentence-transformers"])

from sentence_transformers import SentenceTransformer

self.embedding_model = SentenceTransformer(embedding_model).to(self.device)

print(f"Embedding model loaded successfully on {self.device}")

try:

from transformers import GPT2Tokenizer

self.tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

except ImportError:

print("Installing transformers...")

import subprocess

subprocess.run(["pip", "install", "transformers"])

from transformers import GPT2Tokenizer

self.tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

def add_chunk(self, text: str, importance: float = 1.0, metadata: Dict[str, Any] = None) -> None:

"""

Add a new chunk of text to the context manager.

Args:

text: The text content to add

importance: Importance score (0-1)

metadata: Additional metadata for the chunk

"""

with torch.no_grad():

embedding = self.embedding_model.encode(text, convert_to_tensor=True)

chunk = ContextChunk(

text=text,

embedding=embedding,

importance=importance,

timestamp=time.time(),

metadata=metadata or {}

)

self.chunks.append(chunk)

self.current_token_count += len(self.tokenizer.encode(text))

if self.current_token_count > self.max_context_length:

self.optimize_context()

def optimize_context(self) -> None:

"""Optimize context by removing less relevant chunks to fit within token limit."""

if not self.chunks:

return

print("Optimizing context window...")

scores = self.score_chunks()

sorted_indices = np.argsort(scores)[::-1]

new_chunks = []

new_token_count = 0

for idx in sorted_indices:

chunk = self.chunks[idx]

chunk_tokens = len(self.tokenizer.encode(chunk.text))

if new_token_count + chunk_tokens <= self.max_context_length:

new_chunks.append(chunk)

new_token_count += chunk_tokens

else:

if scores[idx] > self.relevance_threshold * 1.5:

for i, included_chunk in enumerate(new_chunks):

included_idx = sorted_indices[i]

if scores[included_idx] < self.relevance_threshold:

included_tokens = len(self.tokenizer.encode(included_chunk.text))

if new_token_count - included_tokens + chunk_tokens <= self.max_context_length:

new_chunks.remove(included_chunk)

new_token_count -= included_tokens

new_chunks.append(chunk)

new_token_count += chunk_tokens

break

removed_count = len(self.chunks) - len(new_chunks)

self.chunks = new_chunks

self.current_token_count = new_token_count

print(f"Context optimized: Removed {removed_count} chunks, {len(new_chunks)} remaining, using {new_token_count}/{self.max_context_length} tokens")

gc.collect()

if torch.cuda.is_available():

torch.cuda.empty_cache()

def score_chunks(self, query: str = None) -> np.ndarray:

"""

Score chunks based on recency, importance, and semantic relevance.

Args:

query: Optional query to calculate semantic relevance against

Returns:

Array of scores for each chunk

"""

if not self.chunks:

return np.array([])

current_time = time.time()

max_age = max(current_time - chunk.timestamp for chunk in self.chunks) or 1.0

recency_scores = np.array([

1.0 - ((current_time - chunk.timestamp) / max_age)

for chunk in self.chunks

])

importance_scores = np.array([chunk.importance for chunk in self.chunks])

if query is not None:

query_embedding = self.embedding_model.encode(query, convert_to_tensor=True)

similarity_scores = np.array([

torch.cosine_similarity(chunk.embedding, query_embedding, dim=0).item()

for chunk in self.chunks

])

similarity_scores = (similarity_scores - similarity_scores.min()) / (similarity_scores.max() - similarity_scores.min() + 1e-8)

else:

similarity_scores = np.ones(len(self.chunks))

final_scores = (

self.recency_weight * recency_scores +

self.importance_weight * importance_scores +

self.semantic_weight * similarity_scores

)

return final_scores

def retrieve_context(self, query: str = None, k: int = None) -> str:

"""

Retrieve the most relevant context for a given query.

Args:

query: The query to retrieve context for

k: The maximum number of chunks to return (None = all relevant chunks)

Returns:

String containing the combined relevant context

"""

if not self.chunks:

return ""

scores = self.score_chunks(query)

relevant_indices = np.where(scores >= self.relevance_threshold)[0]

relevant_indices = relevant_indices[np.argsort(scores[relevant_indices])[::-1]]

if k is not None:

relevant_indices = relevant_indices[:k]

relevant_texts = [self.chunks[i].text for i in relevant_indices]

return "\n\n".join(relevant_texts)

def get_stats(self) -> Dict[str, Any]:

"""Get statistics about the current context state."""

return {

"chunk_count": len(self.chunks),

"token_count": self.current_token_count,

"max_tokens": self.max_context_length,

"usage_percentage": self.current_token_count / self.max_context_length * 100 if self.max_context_length else 0,

"avg_chunk_size": self.current_token_count / len(self.chunks) if self.chunks else 0,

"oldest_chunk_age": time.time() - min(chunk.timestamp for chunk in self.chunks) if self.chunks else 0,

}

def visualize_context(self):

"""Visualize the current context window distribution."""

try:

import matplotlib.pyplot as plt

import pandas as pd

if not self.chunks:

print("No chunks to visualize")

return

scores = self.score_chunks()

chunk_sizes = [len(self.tokenizer.encode(chunk.text)) for chunk in self.chunks]

timestamps = [chunk.timestamp for chunk in self.chunks]

relative_times = [time.time() - ts for ts in timestamps]

importance = [chunk.importance for chunk in self.chunks]

df = pd.DataFrame({

'Size (tokens)': chunk_sizes,

'Age (seconds)': relative_times,

'Importance': importance,

'Score': scores

})

fig, axs = plt.subplots(2, 2, figsize=(14, 10))

axs[0, 0].bar(range(len(chunk_sizes)), chunk_sizes)

axs[0, 0].set_title('Token Distribution by Chunk')

axs[0, 0].set_ylabel('Tokens')

axs[0, 0].set_xlabel('Chunk Index')

axs[0, 1].scatter(chunk_sizes, scores)

axs[0, 1].set_title('Score vs Chunk Size')

axs[0, 1].set_xlabel('Tokens')

axs[0, 1].set_ylabel('Score')

axs[1, 0].scatter(relative_times, scores)

axs[1, 0].set_title('Score vs Chunk Age')

axs[1, 0].set_xlabel('Age (seconds)')

axs[1, 0].set_ylabel('Score')

axs[1, 1].scatter(importance, scores)

axs[1, 1].set_title('Score vs Importance')

axs[1, 1].set_xlabel('Importance')

axs[1, 1].set_ylabel('Score')

plt.tight_layout()

plt.show()

except ImportError:

print("Please install matplotlib and pandas for visualization")

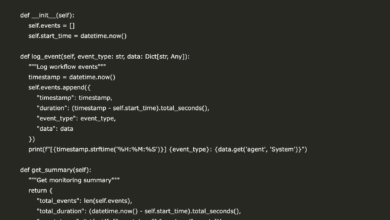

print('!pip install matplotlib pandas')The Modelcontxtmanager category coordinates a comprehensive processing of LLMS by cutting the input text, generating inclusion, and tracking the use of the distinctive symbol against the compulsive limit. It implements the registration of importance (combining modernity, importance, semantic similarity), communicating spontaneous context, and recovering the most relevant pieces, and comfortable facilities to monitor and visualize context statistics.
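The relevance formula in score_chunks and the budget-filling loop in optimize_context can be isolated and checked with plain numbers. The functions score and pack below are hypothetical helpers, not part of the tutorial's class: they take precomputed chunk ages (in seconds), cosine similarities, and token counts instead of calling the embedding model and tokenizer, and pack deliberately omits the optional swap step that optimize_context attempts for very high-scoring chunks.

```python
import numpy as np


def score(ages, importances, similarities=None, w_rec=0.3, w_imp=0.3, w_sem=0.4):
    """Weighted relevance score mirroring the combination used in score_chunks."""
    ages = np.asarray(ages, dtype=float)
    max_age = ages.max() or 1.0
    recency = 1.0 - ages / max_age  # newest chunk -> 1.0, oldest -> 0.0
    importance = np.asarray(importances, dtype=float)
    if similarities is None:
        semantic = np.ones_like(ages)  # no query: every chunk gets full semantic credit
    else:
        s = np.asarray(similarities, dtype=float)
        semantic = (s - s.min()) / (s.max() - s.min() + 1e-8)  # min-max normalize to [0, 1]
    return w_rec * recency + w_imp * importance + w_sem * semantic


def pack(scores, token_counts, budget):
    """Greedy selection: take chunks in descending score order while they fit the budget."""
    kept, used = [], 0
    for idx in np.argsort(scores)[::-1]:
        if used + token_counts[idx] <= budget:
            kept.append(int(idx))
            used += token_counts[idx]
    return kept, used


scores = score(ages=[0, 50, 100], importances=[0.4, 1.0, 0.8])  # approx. [0.82, 0.85, 0.64]
kept, used = pack(scores, token_counts=[300, 500, 200], budget=800)
print(kept, used)  # [1, 0] 800
```

Because selection is greedy by score rather than by score per token, a single large, high-scoring chunk can crowd out several smaller ones; that trade-off is inherited from the tutorial's design.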

```python
class MCPColabDemo:
    """Demonstration of Model Context Protocol in Google Colab with a Language Model."""

    def __init__(
        self,
        model_name: str = "google/flan-t5-base",
        max_context_length: int = 2048,
        device: str = "cuda" if torch.cuda.is_available() else "cpu",
    ):
        """
        Initialize the MCP Colab demo with a specified model.

        Args:
            model_name: Hugging Face model name
            max_context_length: Maximum context length for the MCP manager
            device: Device to run the model on
        """
        self.device = device
        self.context_manager = ModelContextManager(
            max_context_length=max_context_length,
            device=device,
        )
        try:
            from transformers import AutoModelForSeq2SeqLM, AutoTokenizer
        except ImportError:
            import subprocess
            import sys
            print("Installing transformers...")
            subprocess.run([sys.executable, "-m", "pip", "install", "transformers"], check=True)
            from transformers import AutoModelForSeq2SeqLM, AutoTokenizer
        print(f"Loading model {model_name}...")
        self.model = AutoModelForSeq2SeqLM.from_pretrained(model_name).to(device)
        self.tokenizer = AutoTokenizer.from_pretrained(model_name)
        print(f"Model loaded successfully on {device}")

    def add_document(self, text: str, chunk_size: int = 512, overlap: int = 50) -> None:
        """
        Add a document to the context by chunking it appropriately.

        Args:
            text: Document text
            chunk_size: Size of each chunk in characters
            overlap: Overlap between chunks in characters
        """
        chunks = []
        for i in range(0, len(text), chunk_size - overlap):
            chunk = text[i:i + chunk_size]
            if len(chunk) > 20:  # skip tiny trailing fragments
                chunks.append(chunk)
        print(f"Adding {len(chunks)} chunks to context...")
        for i, chunk in enumerate(tqdm(chunks)):
            # Chunks near the start or end of the document get higher importance
            pos = i / len(chunks)
            importance = 1.0 - 0.5 * min(pos, 1 - pos)
            self.context_manager.add_chunk(
                text=chunk,
                importance=importance,
                metadata={"source": "document", "position": i, "total_chunks": len(chunks)},
            )

    def process_query(self, query: str, max_new_tokens: int = 256) -> str:
        """
        Process a query using the context manager and model.

        Args:
            query: The query to process
            max_new_tokens: Maximum number of tokens in response

        Returns:
            Model response
        """
        self.context_manager.add_chunk(query, importance=1.0, metadata={"type": "query"})
        relevant_context = self.context_manager.retrieve_context(query=query)
        prompt = f"Context: {relevant_context}\n\nQuestion: {query}\n\nAnswer:"
        inputs = self.tokenizer(prompt, return_tensors="pt").to(self.device)
        print("Generating response...")
        with torch.no_grad():
            outputs = self.model.generate(
                **inputs,
                max_new_tokens=max_new_tokens,
                do_sample=True,
                temperature=0.7,
                top_p=0.9,
            )
        response = self.tokenizer.decode(outputs[0], skip_special_tokens=True)
        # Store the answer so later queries can draw on it
        self.context_manager.add_chunk(
            response,
            importance=0.9,
            metadata={"type": "response", "query": query},
        )
        return response

    def interactive_session(self):
        """Run an interactive session in the notebook."""
        from IPython.display import clear_output
        print("Starting interactive MCP session. Type 'exit' to end.")
        conversation_history = []
        while True:
            query = input("\nYour query: ")
            if query.lower() == 'exit':
                break
            if query.lower() == 'stats':
                print("\nContext Statistics:")
                stats = self.context_manager.get_stats()
                for key, value in stats.items():
                    print(f"{key}: {value}")
                self.context_manager.visualize_context()
                continue
            if query.lower() == 'clear':
                self.context_manager.chunks = []
                self.context_manager.current_token_count = 0
                conversation_history = []
                clear_output(wait=True)
                print("Context cleared!")
                continue
            response = self.process_query(query)
            conversation_history.append((query, response))
            print("\nResponse:")
            print(response)
            print("\n" + "-" * 50)
            stats = self.context_manager.get_stats()
            print(f"Context usage: {stats['token_count']}/{stats['max_tokens']} tokens ({stats['usage_percentage']:.1f}%)")
```

The MCPColabDemo class ties the context manager to a seq2seq LLM, loading FLAN-T5 (or any specified Hugging Face model) on the chosen device. It provides utility methods for chunking entire documents and for processing user queries: it retrieves the relevant context, generates an answer, and adds both the query and the response back into the context. It also offers an interactive loop for real-time queries, with commands to show statistics ('stats'), clear the context window ('clear'), and end the session ('exit').
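The character-window chunking and the position-based importance used by add_document can be checked without loading any model. chunk_text and position_importance are hypothetical standalone helpers that mirror that logic; the min_len parameter and the overlap guard are our additions (add_document hardcodes the 20-character cutoff and does not validate that overlap is smaller than chunk_size).

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 512, overlap: int = 50, min_len: int = 20) -> List[str]:
    """Split text into fixed-size character windows that advance by chunk_size - overlap."""
    if overlap >= chunk_size:
        # a non-positive step would make range() raise or yield nothing
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    windows = [text[i:i + chunk_size] for i in range(0, len(text), step)]
    return [w for w in windows if len(w) > min_len]  # drop tiny trailing fragments


def position_importance(n: int) -> List[float]:
    """Importance per chunk position: 1.0 at the start, lowest (0.75) at the exact midpoint."""
    return [1.0 - 0.5 * min(i / n, 1 - i / n) for i in range(n)]


doc = "abcdefghij" * 10  # 100 characters
chunks = chunk_text(doc, chunk_size=40, overlap=10)
print(len(chunks))               # 3  (the 10-character tail at offset 90 is dropped)
print(position_importance(4))    # [1.0, 0.875, 0.75, 0.875]
```

Consecutive chunks share `overlap` characters, so a sentence cut at one boundary still appears intact in at least one neighbor as long as it is shorter than the overlap.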

```python
def run_mcp_demo():
    """Run a simple demo of the Model Context Protocol."""
    print("Running Model Context Protocol Demo...")
    context_manager = ModelContextManager(max_context_length=4096)
    print("Adding sample chunks...")
    context_manager.add_chunk(
        "The Model Context Protocol (MCP) is a framework for managing context "
        "windows in large language models. It helps optimize token usage and improve relevance.",
        importance=1.0,
    )
    context_manager.add_chunk(
        "Context management involves techniques like sliding windows, chunking, "
        "and relevance filtering to handle large documents efficiently.",
        importance=0.8,
    )
    # Filler chunks with slowly decreasing importance
    for i in range(10):
        context_manager.add_chunk(
            f"This is test chunk {i} with some filler content to simulate a larger context "
            f"window that needs optimization. This helps demonstrate the MCP functionality "
            f"for context window management in language models on Google Colab.",
            importance=0.5 - (i * 0.02),
        )
    stats = context_manager.get_stats()
    print("\nInitial Statistics:")
    for key, value in stats.items():
        print(f"{key}: {value}")
    query = "How does the Model Context Protocol work?"
    print(f"\nRetrieving context for: '{query}'")
    context = context_manager.retrieve_context(query)
    print(f"\nRelevant context:\n{context}")
    print("\nVisualizing context:")
    context_manager.visualize_context()
    print("\nDemo complete!")
```

The run_mcp_demo function ties everything together in a single script: it instantiates ModelContextManager, adds a series of sample chunks with varying importance, prints the initial statistics, retrieves and displays the context most relevant to a test query, and finally visualizes the context window, giving a complete end-to-end demonstration of the Model Context Protocol in action.
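The filtering step behind the retrieval in this demo (threshold, descending sort, optional top-k) can be tried in isolation. select_relevant below is a hypothetical helper that works on precomputed scores, such as the output of score_chunks; the chunk texts and scores are invented purely for illustration.

```python
import numpy as np


def select_relevant(scores, threshold=0.7, k=None):
    """Return chunk indices whose score clears the threshold, highest first (as in retrieve_context)."""
    scores = np.asarray(scores, dtype=float)
    idx = np.where(scores >= threshold)[0]     # keep only chunks at or above the threshold
    idx = idx[np.argsort(scores[idx])[::-1]]   # order the survivors by descending score
    return idx if k is None else idx[:k]       # optionally cap at k chunks


texts = ["filler", "MCP overview", "chunking notes", "context tips"]
scores = [0.65, 0.9, 0.72, 0.8]
picked = select_relevant(scores, threshold=0.7, k=2)
print("\n\n".join(texts[i] for i in picked))  # "MCP overview", blank line, "context tips"
```

One consequence of the default weights: when retrieve_context is called without a query, every chunk receives the full semantic term (0.4), so with the default threshold of 0.7 a chunk survives only if 0.3 * recency + 0.3 * importance >= 0.3, that is, recency + importance >= 1.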

```python
if __name__ == "__main__":
    run_mcp_demo()
```

Finally, the standard Python entry-point guard ensures that run_mcp_demo() is called only when the script is run directly (not when it is imported as a module), triggering an end-to-end demonstration of the Model Context Protocol workflow.

In conclusion, we now have a fully functional MCP system that not only keeps token usage under control but also prioritizes the context fragments that are actually relevant to your queries. The ModelContextManager gives you tools to balance semantic relevance, temporal freshness, and user-assigned importance, while the accompanying MCPColabDemo class provides an accessible framework for real-time experimentation and visualization. Armed with these patterns, you can extend the core principles by tuning the relevance threshold and scoring weights, trying different embedding models, or integrating alternative LLM backends to suit your workflow. Ultimately, this approach lets you build concise yet highly relevant prompts, leading to more accurate and efficient responses from your language models.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.


2025-04-28 06:32:00