A Google Gemini model now has a “dial” to adjust how much it reasons

“We’ve really pushed on ‘thinking.’” Such models, which are designed to work through problems logically and spend more time arriving at an answer, rose to prominence earlier this year with the launch of the DeepSeek R1 model. They are attractive to AI companies because a model can be made better by training it to approach a problem in a practical way.

When an AI model devotes more time (and energy) to a query, it costs more to run. Leaderboards of reasoning models show that a single task can cost more than $200 to complete. The promise is that this additional time and money helps reasoning models do better at difficult tasks, such as analyzing code or gathering information from many documents.

“The more you can iterate over certain hypotheses and thoughts,” says Koray Kavukcuoglu, the more “it will find the right thing.”

This is not true in all cases, though. “The model overthinks” is how the problem is described in reference to Gemini Flash 2.5, the model released today, which includes a slider for developers to dial back its thinking. “For simple prompts, the model thinks more than it needs to.”

When a model spends longer than necessary on a problem, it becomes more expensive for developers to run and increases AI’s environmental footprint.

Nathan Habib, an engineer at Hugging Face who has studied the proliferation of these reasoning models, says overthinking is abundant. In the rush to show off smarter AI, companies are reaching for reasoning models like hammers even when there is no nail in sight, Habib says. In fact, when OpenAI announced a new model in February, it said it would be the company’s last non-reasoning model.

Habib says the performance gain is “undeniable” for some tasks, but not for many others where people typically use AI. Even when reasoning is applied to the right problem, things can go awry. Habib showed me an example of a leading reasoning model that was asked to work through an organic chemistry problem. It started out fine, but halfway through its reasoning process the model’s responses began to break down: it sputtered “Wait, but …” hundreds of times. It ended up taking far longer than a non-reasoning model would on a single task. Kate Olszewska, who evaluates Gemini models at DeepMind, says Google’s models can also get stuck in loops.

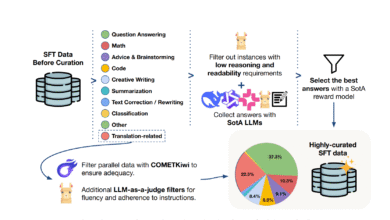

Google’s new dial is an attempt to solve this problem. For now, it is built not for the consumer version of Gemini but for developers who make applications. Developers can set a budget for the amount of computing the model should spend on a specific problem, the idea being to turn the dial down if the task does not involve much reasoning at all. The model’s outputs are six times more expensive to generate when reasoning is switched on.
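To make the trade-off concrete, here is a minimal sketch of how a developer might choose a reasoning budget per task and estimate what the sixfold output premium means for cost. Everything in it is an assumption for illustration: the function and class names, the task categories, the budget values, and the base price are hypothetical and are not Gemini's actual API or pricing. Only the six-times multiplier comes from the figure reported above, and the sketch ignores any tokens the model spends on the reasoning itself.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only; not real Gemini pricing or limits.
BASE_PRICE_PER_1K_OUTPUT_TOKENS = 1.0  # arbitrary cost units
REASONING_PRICE_MULTIPLIER = 6.0       # the "six times more expensive" figure


@dataclass
class ReasoningPlan:
    thinking_budget: int   # max tokens the model may spend reasoning (0 = off)
    estimated_cost: float  # same arbitrary units as the base price


def plan_request(task_kind: str, expected_output_tokens: int) -> ReasoningPlan:
    """Pick a reasoning budget for a task and estimate its output cost."""
    # Hypothetical mapping from task type to budget; tune for a real workload.
    budgets = {"simple": 0, "moderate": 1024, "hard": 8192}
    # Unknown task kinds default to no reasoning, the cheapest setting.
    budget = budgets.get(task_kind, 0)
    multiplier = REASONING_PRICE_MULTIPLIER if budget > 0 else 1.0
    cost = (
        expected_output_tokens / 1000
        * BASE_PRICE_PER_1K_OUTPUT_TOKENS
        * multiplier
    )
    return ReasoningPlan(thinking_budget=budget, estimated_cost=cost)


if __name__ == "__main__":
    for kind in ("simple", "hard"):
        print(kind, plan_request(kind, expected_output_tokens=2000))
```

Under these assumed numbers, the same 2,000-token answer costs six times as much once any reasoning budget is set, which is why turning the dial to zero for simple prompts is the obvious saving.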


2025-04-17 19:00:00