AbstRaL: Teaching LLMs Abstract Reasoning via Reinforcement to Boost Robustness on GSM Benchmarks

Recent research indicates that LLMs, especially smaller ones, often struggle with robust reasoning. They tend to perform well on familiar questions but stumble when the same problems are altered slightly, for example by changing names or numbers or by adding irrelevant but related information. This weakness, known as poor out-of-distribution (OOD) generalization, leads to a noticeable drop in accuracy, even on simple math tasks. One promising solution is to create synthetic variations of reasoning problems, helping models learn to focus on the underlying logic rather than superficial details. Strengthening reasoning in this way is important for developing more general and reliable AI systems.
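To make the idea of synthetic variations concrete, here is a minimal sketch of how one problem could be turned into many surface-level variants. The template, name list, distractor sentence, and the `make_variant` helper are all hypothetical and chosen for illustration; they are not taken from the paper or from any benchmark.

```python
import random

# Hypothetical template: the underlying logic (a + b) never changes,
# only the surface details (name, numbers, optional distractor) do.
TEMPLATE = "{name} has {a} apples and buys {b} more. How many apples does {name} have now?"
NAMES = ["Ava", "Ben", "Chen", "Dara"]
DISTRACTOR = "{name}'s neighbor owns {d} bicycles."


def make_variant(rng, add_distractor=False):
    """Return (question, gold_answer) with a random name and random numbers."""
    name = rng.choice(NAMES)
    a, b = rng.randint(2, 50), rng.randint(2, 50)
    question = TEMPLATE.format(name=name, a=a, b=b)
    if add_distractor:
        # Insert a related but irrelevant sentence before the final question.
        first, last = question.split(". ", 1)
        extra = DISTRACTOR.format(name=name, d=rng.randint(2, 9))
        question = f"{first}. {extra} {last}"
    return question, a + b


rng = random.Random(0)  # fixed seed so the variants are reproducible
for _ in range(3):
    print(make_variant(rng, add_distractor=True))
```

A model that has learned the underlying logic should answer every variant correctly, because only the surface form differs between them.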

Abstracting the core logic of LLM reasoning failures

LLMs have shown impressive reasoning capabilities, yet they often stumble when exposed to distribution shifts, such as changes in phrasing, numerical values, or the introduction of distractions. This weakness is evident across benchmarks in logic, mathematics, and commonsense reasoning. Previous solutions have relied on data augmentation to expose models to a broader set of inputs, which improves robustness but increases computational requirements. Researchers have also explored formats such as abstraction-of-thought and chain-of-abstraction to teach abstract reasoning, while planning techniques such as chain-of-thought and tree-of-thought aid step-by-step problem solving. Reinforcement learning and preference-based methods provide additional support for developing reasoning skills beyond memorization.

AbstRaL's symbolic learning method to improve reasoning consistency

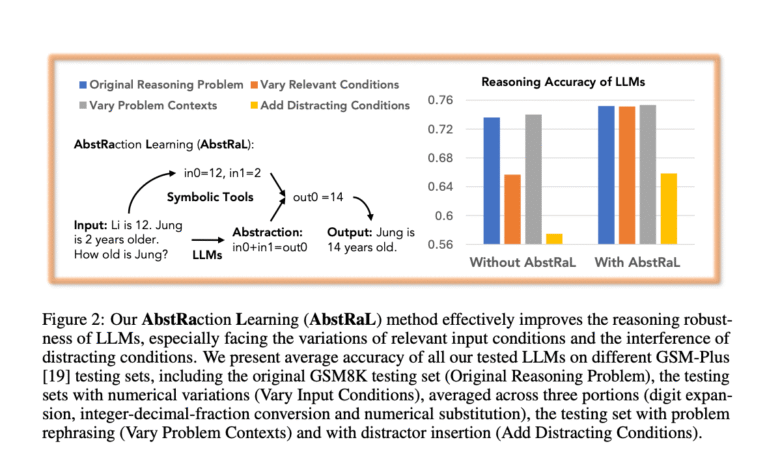

Researchers from Apple and EPFL propose AbstRaL, a method that teaches LLMs to understand abstract reasoning patterns instead of memorizing surface details. Rather than generating many varied training examples, which is computationally expensive, AbstRaL helps LLMs learn the underlying structure of reasoning problems using reinforcement learning. The method links these abstract patterns to symbolic tools, which enables more reliable problem solving. Tested on GSM benchmarks, AbstRaL significantly improves LLM performance, especially when inputs are altered or contain distracting information. It outperforms models trained only with supervised learning by promoting more consistent, context-independent reasoning.

Four steps to abstract symbolic reasoning via AbstRaL

AbstRaL is a four-step framework designed to teach LLMs to reason abstractly instead of relying on surface patterns. First, the key variables in a question are identified and replaced with symbolic placeholders. Next, using specially crafted data (GranulAR), the model learns to reason step by step with these abstract symbols. After that, the general reasoning structure (the abstraction) is retrieved from the symbolic answer. Finally, this abstraction is used with the original values to compute the correct answer. Reinforcement learning with two rewards, one for correctness and the other for symbolic similarity, further improves the model's ability to generate accurate, context-independent reasoning patterns.
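The four steps above can be sketched in a few lines of Python. This is only an illustrative toy, not the authors' implementation: the placeholder names (`in0`, `out0`), the `<<expression = name>>` notation, and the hard-coded `SYMBOLIC_ANSWER` (standing in for what a trained model would generate in step 2) are all assumptions made for this example.

```python
import ast
import operator
import re


# Step 1: replace each number in the question with a symbolic placeholder.
def abstract_question(question):
    values = {}

    def to_symbol(match):
        name = f"in{len(values)}"
        values[name] = float(match.group())
        return f"[{name}]"

    return re.sub(r"\d+(?:\.\d+)?", to_symbol, question), values


# Step 2 (assumed): a trained model would reason over the symbols.
# This hard-coded string is a hypothetical stand-in for its output.
SYMBOLIC_ANSWER = """
How many apples are in the bags? <<in1 * in2 = out0>>
How many apples are there in total? <<in0 + out0 = out1>>
The answer is out1.
"""


# Step 3: retrieve the abstraction as an ordered list of (name, expression).
def extract_abstraction(symbolic_answer):
    pairs = re.findall(r"<<\s*(.+?)\s*=\s*(\w+)\s*>>", symbolic_answer)
    return [(name, expr) for expr, name in pairs]


_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def _eval(node, env):
    # Tiny arithmetic evaluator: only + - * / over known symbols and numbers.
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left, env), _eval(node.right, env))
    if isinstance(node, ast.Name):
        return env[node.id]
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return float(node.value)
    raise ValueError("unsupported expression")


# Step 4: plug the original values back into the abstraction.
def instantiate(abstraction, values):
    env = dict(values)
    for name, expr in abstraction:
        env[name] = _eval(ast.parse(expr.strip(), mode="eval").body, env)
    return env[abstraction[-1][0]]


question = ("Jaime has 3 apples and buys 4 bags with 5 apples each. "
            "How many apples does Jaime have now?")
abstract_q, values = abstract_question(question)
abstraction = extract_abstraction(SYMBOLIC_ANSWER)
print(abstract_q)
print(instantiate(abstraction, values))  # 3 + 4 * 5 -> 23.0
```

Because the abstraction is independent of the concrete numbers, the same `abstraction` can be reused with a different `values` dictionary, which is the property that makes this style of reasoning insensitive to changed numbers or names.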

GSM8K variations reveal AbstRaL's robustness

The researchers evaluate AbstRaL on math reasoning tasks using models such as Llama-3 and Qwen2, training them on a dataset called GranulAR that rewrites math problems in an abstract symbolic form. This helps the models focus on structure instead of surface details. They test robustness with altered versions of GSM8K problems in which numbers, names, and phrasing are changed. Compared to baselines such as standard chain-of-thought prompting, AbstRaL shows stronger consistency and a smaller accuracy drop on these variations. It improves reliability across reworded inputs, especially for smaller models. The results suggest that teaching models to reason abstractly makes them more adaptable and less dependent on memorized patterns.
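The robustness measurement described above boils down to comparing accuracy on original problems with accuracy on their perturbed versions. The sketch below illustrates that comparison with two toy solvers that stand in for LLMs; the problems, both solvers, and the function names are hypothetical, and the numbers it produces are not results from the paper.

```python
def accuracy(solver, problems):
    """Fraction of (question, gold_answer) pairs the solver gets right."""
    correct = sum(1 for question, gold in problems if solver(question) == gold)
    return correct / len(problems)


def accuracy_drop(solver, original, perturbed):
    """How much accuracy is lost when moving from original to perturbed inputs."""
    return accuracy(solver, original) - accuracy(solver, perturbed)


# Toy data: the perturbed set changes the names and numbers only.
original = [
    ("Tom has 3 apples and buys 4 more. How many now?", 7),
    ("Ann has 10 pens and buys 5 more. How many now?", 15),
]
perturbed = [
    ("Raj has 6 apples and buys 9 more. How many now?", 15),
    ("Mia has 2 pens and buys 8 more. How many now?", 10),
]

# Hypothetical "memorizing" solver: only knows the exact questions it has seen.
_seen = dict(original)


def memorizing_solver(question):
    return _seen.get(question)


# Hypothetical "structural" solver: applies the underlying logic (add the numbers).
def structural_solver(question):
    return sum(int(token) for token in question.split() if token.isdigit())


print(accuracy_drop(memorizing_solver, original, perturbed))  # 1.0
print(accuracy_drop(structural_solver, original, perturbed))  # 0.0
```

A smaller drop means the solver depends less on the surface form of the question, which is the sense in which the article calls a model robust.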

Teaching LLMs abstract thinking through reinforcement yields robust reasoning

In conclusion, AbstRaL is a method designed to enhance abstract reasoning in LLMs, making them more resilient to superficial changes in problems. Unlike traditional fine-tuning or data augmentation, AbstRaL uses reinforcement learning to train models on GranulAR rationales that mix Socratic chain-of-thought with detailed abstraction. This approach helps models strip away surface-level distractions and connect better with symbolic tools. Tested on challenging GSM8K perturbation benchmarks, AbstRaL significantly reduces performance drops under distribution shifts, especially in smaller models. The study shows that learning to abstract improves reasoning robustness more effectively than relying solely on direct supervision.

Check out the paper. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to real-world challenges. With a keen interest in solving practical problems, Hassan brings a fresh perspective to the intersection of AI and real-life solutions.

2025-07-06 00:46:00