AI Deepfakes Stir Global Trust Concerns


AI deepfakes are fueling global trust concerns as they grow increasingly realistic, creating a mounting dilemma for societies around the world. Fake images of high-profile figures such as Pope Francis and Donald Trump have circulated widely on social media, causing real public confusion, misinformation, and anxiety. Thanks to tools such as Midjourney and Stable Diffusion, these synthetic visuals are easier than ever to produce, and they raise urgent questions about media integrity, political stability, and regulatory gaps. As deepfake technology advances, its misuse threatens not only digital trust but also the foundations of democratic societies.

Key warnings

- Deepfakes of public figures are widely shared and often mistaken for real media.

- These fake images fuel misinformation campaigns and political manipulation.

- Experts warn that deepfakes could influence upcoming elections and global events.

- Current laws and safeguards are not sufficient to address the scale of the threat.


What are AI deepfakes and how do they work?

AI deepfakes are images, videos, or audio recordings created with machine learning models trained on real human data. Many current systems rely on diffusion models, which generate hyper-realistic images by gradually removing noise from random input. These models learn patterns from large datasets so they can imitate people or scenarios with remarkable precision.
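To make "gradually removing noise" concrete, here is a toy one-dimensional sketch in Python. It is not how Midjourney or Stable Diffusion are implemented. The assumptions are as follows. The "data" is a single number drawn from a Gaussian with mean `MU` and spread `SIGMA`. The noise schedule is the linear one (beta from 1e-4 to 0.02 over 1,000 steps) used in the original DDPM paper. The trained neural network is replaced by a hypothetical `predict_clean` function that computes the exact best guess of the clean value, which is only possible because the toy data is Gaussian. Sampling uses a deterministic DDIM-style update.

```python
import math
import random

# Toy assumption: "images" are single numbers drawn from N(MU, SIGMA^2).
MU, SIGMA = 2.0, 0.5

# Linear noise schedule over T steps (beta from 1e-4 to 0.02).
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]

# alpha_bar[t] is how much of the original signal (in variance terms) survives
# t noising steps: alpha_bar[0] = 1.0 is clean data, alpha_bar[T] is nearly 0.
alpha_bar = [1.0]
for beta in betas:
    alpha_bar.append(alpha_bar[-1] * (1.0 - beta))


def predict_clean(x_t: float, t: int) -> float:
    """Hypothetical stand-in for a trained network: the exact E[x0 | x_t].

    It has a closed form only because the toy data is Gaussian; a real model
    learns an approximation of this from large training datasets.
    """
    a = math.sqrt(alpha_bar[t])
    noise_var = 1.0 - alpha_bar[t]
    gain = a * SIGMA**2 / (a * a * SIGMA**2 + noise_var)
    return MU + gain * (x_t - a * MU)


def sample(rng: random.Random) -> float:
    """Start from pure random noise and remove it one step at a time."""
    x = rng.gauss(0.0, 1.0)
    for t in range(T, 0, -1):
        x0_hat = predict_clean(x, t)
        # Noise component implied by the current estimate of the clean value.
        eps_hat = (x - math.sqrt(alpha_bar[t]) * x0_hat) / math.sqrt(1.0 - alpha_bar[t])
        # Deterministic DDIM-style step: re-mix the estimate with slightly less noise.
        x = math.sqrt(alpha_bar[t - 1]) * x0_hat + math.sqrt(1.0 - alpha_bar[t - 1]) * eps_hat
    return x
```

Drawing many samples, for example `rng = random.Random(0)` followed by `xs = [sample(rng) for _ in range(1000)]`, should give values whose mean and spread land close to `MU` and `SIGMA`, even though every sample started as pure noise. The procedure has turned random input into data that resembles its "training" distribution. Image generators apply the same idea to millions of pixels at once, with a learned network in place of `predict_clean`.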

Unlike traditional photo editing, deepfake tools use neural networks to learn facial details, expressions, gestures, and voice characteristics. Tools such as Midjourney, DALL·E, and Stable Diffusion let users create synthetic media from simple text prompts. Without strong safety filters, the results can be indistinguishable from authentic footage.


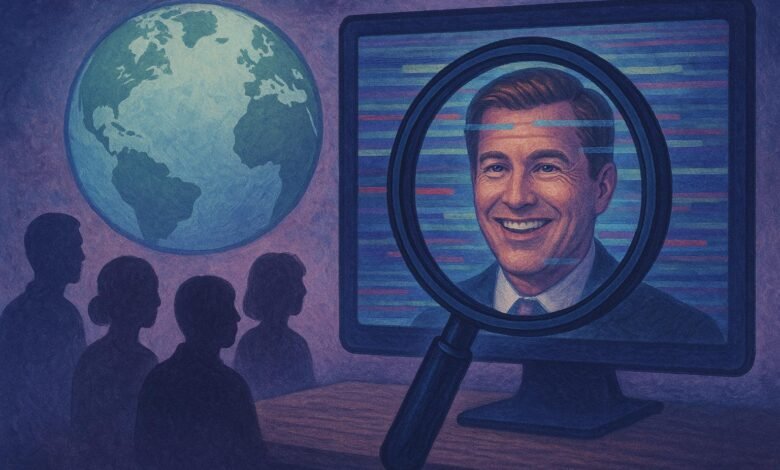

Viral incidents: How fake images sparked public panic

In two notorious examples, viral AI-generated images depicted Donald Trump and Pope Francis, the latter wearing a white designer jacket. Both were created using Midjourney. At first glance, many internet users believed the images were real, and they spread across social media before news outlets and fact-checkers debunked them.

These incidents highlight the power of deepfakes to mislead the public. Realistic images provoke emotional reactions and are often shared on impulse. The absence of visual disclaimers or watermarks makes synthetic content harder to identify. In many cases no labels are added, and the images keep circulating long after being taken down.

Why deepfakes pose a threat to democracy and stability

With global elections approaching, experts warn that deepfakes could be used to manipulate voters, spread false information, or incite unrest. In a politically polarized environment, even a single convincing fake can damage a candidate or party. A growing number of misinformation researchers view deepfakes as influence tools designed to erode voter trust and institutional credibility.

"We are at a point where seeing is no longer believing," says Dr. Hany Farid of the University of California, Berkeley. Fake videos or fabricated speeches could trigger diplomatic fallout, racial violence, or even economic panic. In conflict zones, a single fabricated image could escalate international tensions or sway public opinion on military action.

Where laws and regulations fall short

Regulators are struggling to catch up. In the United States, the FTC has issued warnings, but no national regulation has been enacted. Meanwhile, the European Union is advancing its AI Act to require greater transparency around AI-generated media.

Policy proposals include:

- Requiring clear labeling of all AI-generated content.

- Holding developers accountable for how their models are used.

- Establishing penalties for deploying malicious deepfakes in elections, health, or security contexts.

"The law must treat deepfakes as seriously as other forms of fraud," says technology ethicist Tristan Harris. Without strong legal deterrents, misuse of AI-generated images is likely to increase.


Can we detect and prevent deepfakes?

Experts agree that eliminating deepfakes entirely is unrealistic. However, technical progress is being made. Tools such as digital watermarks, metadata signatures, and reverse image search engines are being deployed to detect manipulated content. Companies like Microsoft and Truepic are implementing secure digital signatures that verify authenticity before publication.
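The signature idea can be sketched with Python's standard library. This is a simplified illustration and not the Microsoft or Truepic implementation. It uses an HMAC with a shared secret key as a stand-in for the public-key certificates that real content-provenance systems rely on. The manifest fields and the `sign_image` and `verify_image` names are hypothetical. The sketch shows that the signature binds the metadata to a hash of the exact image bytes, so any edit to the image or to its claimed origin makes verification fail.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; real provenance systems use public-key
# certificates so that verifiers cannot forge signatures.
SIGNING_KEY = b"demo-key-not-for-production"


def _mac(fields: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the manifest fields."""
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def sign_image(image_bytes: bytes, creator: str, tool: str) -> dict:
    """Build a hypothetical provenance manifest tied to the image's SHA-256 hash."""
    manifest = {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "creator": creator,
        "tool": tool,
    }
    manifest["signature"] = _mac(manifest)
    return manifest


def verify_image(image_bytes: bytes, manifest: dict) -> bool:
    """True only if the manifest is untampered and matches these exact bytes."""
    claimed = {k: v for k, v in manifest.items() if k != "signature"}
    signature = manifest.get("signature", "")
    if not isinstance(signature, str) or not hmac.compare_digest(_mac(claimed), signature):
        return False  # metadata was altered, or the signature is missing
    # Any change to the pixels changes the hash, so edited images fail here.
    return claimed.get("sha256") == hashlib.sha256(image_bytes).hexdigest()
```

Verifying the original bytes against their manifest returns `True`, while appending even one byte to the image or changing the `creator` field returns `False`. Because HMAC verification needs the same secret that was used for signing, anyone able to verify could also forge a manifest. That is why production systems use asymmetric signatures, where only the publisher holds the private key.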

Social platforms are also strengthening their defenses. Meta and X (formerly Twitter) are rolling out filters that analyze and restrict synthetic content. Meanwhile, digital literacy campaigns focus on teaching users to evaluate images and critically examine sources before sharing.


Comparing leading AI image generators

| Tool | Output | Known for | Safety filters |
|---|---|---|---|
| Midjourney | Images | Artistic realism | Moderate (moderator content review) |
| Stable Diffusion | Images | Open-source flexibility | Low (depends on the user) |
| DALL·E | Images | Easy-to-use interface | High (OpenAI content policies) |

Frequently asked questions: Deepfakes and digital accountability

What is a deepfake?

A deepfake is media created with machine learning to imitate real people. It typically takes the form of images, videos, or audio recordings that look authentic but are entirely synthetic.

How are deepfakes misused?

They are used to create false narratives by placing real people in fabricated situations. The tactic shows up in political attacks, celebrity impersonation, and satirical content that ends up spreading misinformation.

Can deepfakes influence elections?

Yes. Deepfakes can distort facts, spread rumors, or impersonate candidates. In a tightly contested race, a single viral video could shift public sentiment or depress turnout.

What regulations exist to control deepfakes?

Some jurisdictions, such as the European Union, are developing comprehensive rules that require labeling of synthetic media. In the United States, most progress is still happening at the state level or through advisory frameworks.

Conclusion: a need for vigilance and action

AI deepfakes offer a revealing look at what artificial intelligence can achieve, but they also expose serious risks. Misinformation fueled by realistic fakes affects not only individuals but entire democratic processes. Meeting this growing challenge will require education, regulation, responsible development, and smarter detection tools. As AI advances, the whole digital community must adapt quickly to protect the truth and public trust.




2025-05-06 13:28:00