AI Large Language Models vs. Small Language Models: Who Wins the Future?

The quiet power of small language models: Why smaller might be smarter

After spending decades in the heart of Silicon Valley, I have watched artificial intelligence evolve from an abstract academic pursuit into a global force reshaping every industry. We live in an era of unprecedented AI progress. Systems such as GPT-4, Claude, Gemini, and Grok, known as large language models (LLMs), have dazzled the world with their ability to write code, ace legal exams, and simulate human conversation with remarkable fluency.

These LLMs, which contain hundreds of billions of parameters, represent the peak of what today's compute, data, and engineering can produce. They are undoubtedly great achievements. But while the spotlight has focused on these digital giants, a quieter but no less important revolution is brewing in the background.

Enter the era of small language models (SLMs).

These compact AI systems are reframing the conversation about what it means to be “smart.” Unlike their larger counterparts, SLMs are not designed for massive scale. They are designed for speed, agility, and precision. They often have far fewer parameters and run efficiently on local or edge devices. In an era in which privacy, latency, and control have become non-negotiable, their value is impossible to ignore.

Why size isn't everything

For years, the path of AI mirrored the path of big tech: scale equals strength. Larger models meant better performance, broader capabilities, and greater usefulness across domains. But this growth came at a cost, both literally and figuratively. Training LLMs requires huge computational resources, vast amounts of energy, and access to data that often raises security and sovereignty concerns.

SLMs offer a compelling counterpoint. Instead of chasing general intelligence at any cost, they prioritize efficiency and focus. They are designed to perform specific tasks with limited resources, and they can be deployed on smartphones, Internet of Things (IoT) devices, or even embedded systems where a cloud connection is not an option. Their smaller footprint means faster inference, lower energy consumption, and, crucially, stronger data privacy.
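To make the idea of a smaller footprint concrete, here is a rough back-of-the-envelope sketch in Python. It rests on two simplifying assumptions. First, only the model weights count toward memory; the KV cache, activations, and runtime overhead are ignored. Second, single-stream text generation is limited by memory bandwidth, so producing each new token requires reading every weight once. The 50 GB/s bandwidth figure is a hypothetical phone-class value, not a measurement of any specific device. The parameter counts come from the models discussed in this article.

```python
# Back-of-the-envelope sizing for running a language model locally.
# Assumptions (illustrative, not measurements): only weights are counted
# (no KV cache, activations, or runtime overhead), and single-stream decoding
# is memory-bandwidth bound, so each generated token reads all weights once.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory (GB, 1 GB = 1e9 bytes) needed just to hold the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def max_tokens_per_second(num_params: float, precision: str, bandwidth_gb_s: float) -> float:
    """Rough upper bound on decode speed: memory bandwidth / bytes read per token."""
    return bandwidth_gb_s / weight_memory_gb(num_params, precision)


if __name__ == "__main__":
    # Parameter counts from the article; the bandwidth is a hypothetical device value.
    models = {"Phi-3 Mini": 3.8e9, "Mistral 7B": 7e9, "500B-parameter LLM": 500e9}
    hypothetical_bandwidth = 50.0  # GB/s, assumed phone-class memory bandwidth
    for name, params in models.items():
        mem = weight_memory_gb(params, "int4")
        tps = max_tokens_per_second(params, "int4", hypothetical_bandwidth)
        print(f"{name}: ~{mem:.1f} GB at int4, <= ~{tps:.1f} tokens/s")
```

Under these assumptions, a 7B model at fp16 needs about 14 GB for its weights alone, while 4-bit quantization brings Phi-3 Mini down to roughly 1.9 GB. Real-world numbers will differ, but the ratio shows why a 500B-parameter model cannot run on a phone while an SLM can.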

Smaller models, wider impact

The impact of SLMs is already visible. From assistants running locally on phones to real-time language translation on wearables, SLMs enable new classes of applications where LLMs are too large or too slow. They open doors for innovation in areas such as healthcare, autonomous vehicles, cybersecurity, and industrial automation, which often require precision, reliability, and strict data governance.

Moreover, SLMs democratize AI. Independent developers and startups that cannot afford the compute costs of huge models can build meaningful AI solutions with off-the-shelf SLMs. This decentralization is sparking a wave of creativity outside the walls of big tech.

Rethinking intelligence

Ultimately, the rise of SLMs challenges our assumptions about what intelligence looks like in machines. It invites us to look past the spectacle of size toward a more nuanced understanding: being smart does not mean doing everything, but doing the right thing, quickly and well.

Just as smartphones did not need giant computers to change the world, small language models may not need 500 billion parameters to shape the next chapter of AI. They just need to be fast, focused, and fit for purpose.

In a world racing toward ever-larger models, it is worth remembering: sometimes, smaller is smarter.

AI is not one-size-fits-all. The race to build the most powerful AI ever has produced huge models such as GPT-4, Claude, Gemini, and Grok, which contain hundreds of billions of parameters. These large language models (LLMs) have captured the world's imagination with their ability to write code, summarize books, pass legal exams, and simulate human conversation.

But there is another rising contender: small language models (SLMs), which are compact, smart, purpose-built AIs. Although they may lack the dramatic scope of their larger cousins, SLMs are redefining what “smart” means in an era where efficiency, privacy, and control are key.

So what is the real difference? Is bigger always better?

Size matters, but not always

Large language models:

- Range: 10B to 1T+ parameters
- Strengths:
- Weaknesses:
  - Costly to train and run
  - Slow on local or mobile devices
  - Energy- and carbon-intensive

Small language models:

Who's who: AI platforms and models

Below is a breakdown of leading AI platforms and their LLM or SLM offerings:

| Company / platform | Model name(s) | Type | Notes |
|---|---|---|---|
| Meta AI | Llama 3, Code Llama | LLM and SLM | Open source, widely used in research and the community |
| Google DeepMind | Gemini 1.5 | LLM | Multimodal, a GPT-4 competitor with strong reasoning |
| Anthropic | Claude 3 Opus/Haiku/Sonnet | LLM and SLM | Claude Haiku is lightweight and efficient |
| xAI (Elon Musk) | Grok (1, 1.5, 2) | LLM | Integrated into X (formerly Twitter) |
| Mistral AI | Mistral 7B, Mixtral MoE | SLM | Open-weight, fast, high-performing small models |
| DeepSeek AI | DeepSeek-Coder, DeepSeek-VL | LLM and SLM | Focuses on multimodal open models |
| OpenAI + Microsoft | Copilot (powered by GPT-4) | LLM | Integrated into Office, GitHub, and more |
| Perplexity AI | Perplexity LLM + Search | LLM | Answers with live internet access and citations |

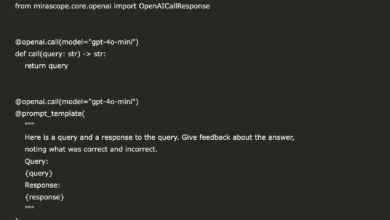

I. Open-source SLMs

These models are widely available and are often optimized for real-world tasks with smaller parameter counts:

1. Mistral 7B
2. Llama 2 (7B, 13B variants)
3. Gemma 2B / 7B
4. Phi-3 Mini / Phi-3 Small
   - Developer: Microsoft Research
   - Phi-3 Mini: 3.8B parameters (mobile-ready)
   - Phi-3 Small: 7B parameters
   - Notes: Trained on “textbook-quality” data for efficient reasoning at small scale.
5. TinyLlama (1.1B)
6. Orca 2 (7B)
7. Falcon 7B
   - Developer: Technology Innovation Institute (TII), United Arab Emirates
   - Notes: Strong performance with lower resource requirements; available for commercial use.
8. OpenHermes 2.5 / MythoMax (13B or below)
   - Developer: Community (for example, Nous Research)
   - Notes: Fine-tuned models focused on role-play, chat, and creative output in compact formats.

II. Mobile- and edge-optimized SLMs

Designed for mobile, embedded, or low-power devices:

9. Google Gemini Nano
   - Platform: Android (Pixel 8+)
   - Notes: Powers on-device smart replies, summarization, and more.
10. Apple's on-device LLM (rumored)
11. DistilGPT2, DistilBERT
12. RWKV

III. Multilingual or task-specific SLMs

13. BLOOMZ-560M to 3B
14. MiniGPT / MiniChat

Summary table

| Model name | Size (parameters) | Developer | Use-case notes |
|---|---|---|---|
| Mistral 7B | 7B | Mistral AI | Strong general-purpose SLM |
| Phi-3 Mini | 3.8B | Microsoft | Mobile reasoning, efficiency |
| TinyLlama | 1.1B | Open source | Lightweight, community-driven |
| Gemini Nano | Unknown (<3B) | Google | On-device AI for Android |
| DistilGPT2 | ~82M | Hugging Face | Fast, resource-constrained deployment |
| Falcon 7B | 7B | TII (UAE) | Open license, strong performance |
| Llama 2-7B | 7B | Meta | Popular, lightweight |
| OpenHermes | ~7-13B | Community | Instruction-tuned for specialized tasks |

Customization vs. generalization

LLMs are trained on huge, diverse datasets so that they generalize across countless domains. But customizing them is difficult: they often need reinforcement learning from human feedback (RLHF) or external memory systems.

SLMs, on the other hand, can be custom-tuned to serve specialized industries such as:

SLMs thrive in privacy-sensitive, low-bandwidth environments where an LLM would be overkill.

Use-case face-off

| Use case | Large language model | Small language model |
|---|---|---|
| Legal or medical research | ✅ Deep reasoning, multi-faceted synthesis | ❌ Too narrow or limited for critical domains |
| Customer support chatbot | ✅ Versatile but expensive | ✅ Good for common questions and narrow tasks |
| Smart Internet of Things (IoT) devices | ❌ Too heavy | ✅ Ideal for local processing |
| Language translation | ✅ Multilingual, context-aware | ✅ Good with limited languages or vocabulary |
| Education/tutoring | ✅ Adaptive; can simulate PhD-level feedback | ✅ Useful in specialized learning apps |

The bigger picture: energy, ethics, and control

The AI community has become increasingly aware of the environmental and ethical costs of LLMs. Training GPT-4 reportedly consumed millions of GPU hours, and inference remains resource-hungry. SLMs, by contrast, support more sustainable AI ecosystems that favor energy efficiency and decentralization.
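You can estimate the energy behind "GPU hours" with simple arithmetic. Multiply GPU-hours by the average power draw per GPU, then scale by the data center's power usage effectiveness (PUE). Operational emissions then follow from the carbon intensity of the grid. The sketch below uses purely hypothetical inputs: 1,000,000 GPU-hours, 400 W per GPU, a PUE of 1.2, and 0.4 kg CO2 per kWh. These are illustrative assumptions, not published figures for GPT-4 or any other model.

```python
def training_energy_kwh(gpu_hours: float, avg_gpu_watts: float, pue: float = 1.0) -> float:
    """Energy (kWh) = GPU-hours x average power per GPU (kW) x data-center PUE."""
    return gpu_hours * (avg_gpu_watts / 1000.0) * pue


def emissions_kg_co2(energy_kwh: float, grid_kg_per_kwh: float) -> float:
    """Operational emissions (kg CO2) = energy x carbon intensity of the grid."""
    return energy_kwh * grid_kg_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; not measurements of any real model.
    gpu_hours = 1_000_000   # "a million GPU hours"
    avg_gpu_watts = 400.0   # assumed average draw per GPU
    pue = 1.2               # assumed data-center overhead factor
    grid_intensity = 0.4    # assumed kg CO2 per kWh

    energy = training_energy_kwh(gpu_hours, avg_gpu_watts, pue)
    print(f"Energy: ~{energy:,.0f} kWh")
    print(f"Emissions: ~{emissions_kg_co2(energy, grid_intensity):,.0f} kg CO2")
```

Every term in the formula is linear. All else being equal, a model that needs a tenth of the GPU hours also needs roughly a tenth of the energy.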

Governments and institutions are also moving toward sovereign AI, and SLMs allow local deployment and tighter control over data.

Final verdict: It's not LLM vs. SLM, it's LLM and SLM

The future of AI is not a zero-sum game. Instead, it is a hybrid future, where:

- LLMs power the cloud, providing general intelligence and creativity at scale
- SLMs live at the edge, providing secure, efficient, and personal intelligence
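One way to picture this hybrid split is a small routing policy that decides, per request, whether to answer on the device or call a cloud model. The Python sketch below is hypothetical. The `Request` fields, the 200-word threshold, and the rule order are illustrative assumptions, not an API from any platform listed above. It encodes the priorities described here: private data and offline use stay with the edge SLM, while long, open-ended requests go to the cloud LLM.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    EDGE_SLM = "edge_slm"    # small model running on the device
    CLOUD_LLM = "cloud_llm"  # large model behind a network API


@dataclass
class Request:
    prompt: str
    contains_private_data: bool  # e.g. health or personal records (hypothetical flag)
    online: bool                 # is a cloud connection available right now?


# Hypothetical threshold: treat long prompts as "complex" tasks for the LLM.
COMPLEX_PROMPT_WORDS = 200


def route(req: Request) -> Target:
    """Hypothetical routing policy for a hybrid LLM + SLM deployment."""
    # Privacy first: sensitive data never leaves the device.
    if req.contains_private_data:
        return Target.EDGE_SLM
    # No connectivity: the on-device model is the only option.
    if not req.online:
        return Target.EDGE_SLM
    # Long, open-ended requests go to the general-purpose cloud model.
    if len(req.prompt.split()) > COMPLEX_PROMPT_WORDS:
        return Target.CLOUD_LLM
    # Short, routine requests stay local for speed and cost.
    return Target.EDGE_SLM
```

The privacy check comes before the complexity check, so sensitive prompts never leave the device, even when they are long. The word-count heuristic is a deliberately crude stand-in for whatever complexity signal a real system would use.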

Think of it this way: if GPT-4 is your university professor, a small language model is your personal tutor. It is always there, focused, and efficient.

As AI becomes more embedded in our daily lives, smaller, smarter, and more ethical models will shape the next wave of adoption.

Key takeaway:

“Bigger isn't always better. The AI revolution needs both powerful generalists and efficient specialists.”


2025-07-28 15:37:00