IBM and ETH Zürich Researchers Unveil Analog Foundation Models to Tackle Noise in In-Memory AI Hardware

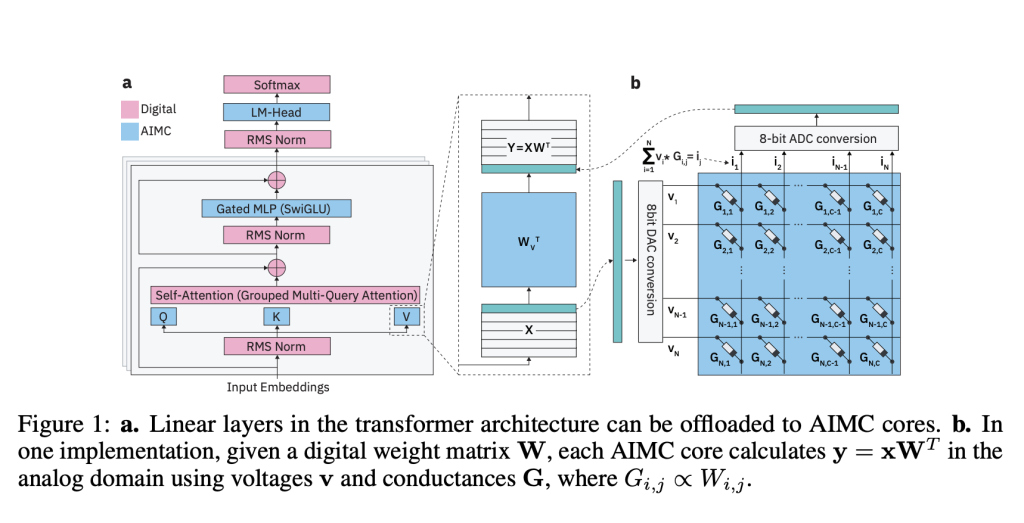

IBM researchers, together with ETH Zürich, have unveiled a new class of Analog Foundation Models (AFMs) designed to bridge the gap between large language models (LLMs) and Analog In-Memory Computing (AIMC) hardware. AIMC has long promised a radical leap in efficiency, running models with millions of parameters in a footprint small enough for embedded or edge devices, thanks to dense non-volatile memory (NVM) that combines storage and computation. But the technology's Achilles' heel has been noise: performing matrix-vector multiplications directly inside NVM devices yields non-deterministic errors that cripple off-the-shelf models.

Why does analog computing matter for LLMs?

Unlike GPUs or TPUs, which shuttle data between memory and compute units, AIMC performs matrix-vector multiplications directly inside the memory arrays. This design removes the von Neumann bottleneck and delivers large improvements in throughput and energy efficiency. Previous studies have shown that combining AIMC with 3D NVM and Mixture-of-Experts (MoE) architectures could, in principle, support trillion-parameter models on compact accelerators. That could make foundation-scale AI feasible on devices well beyond data centers.

What makes Analog In-Memory Computing (AIMC) so difficult to use in practice?

The biggest barrier is noise. AIMC computations suffer from device variability, DAC/ADC quantization, and runtime fluctuations that degrade model accuracy. Unlike quantization on GPUs, where errors are deterministic and manageable, analog noise is stochastic and unpredictable. Earlier research found ways to adapt small networks such as CNNs and RNNs (<100M parameters) to tolerate such noise, but LLMs with billions of parameters have consistently broken down under AIMC constraints.
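To make these noise sources concrete, here is a minimal sketch of a simulated analog matrix-vector multiplication. It is an illustrative toy model and not the researchers' simulator: the function names, the Gaussian weight-noise model, and every parameter value (`weight_noise_std`, `dac_bits`, `adc_bits`, `in_range`, `out_range`) are assumptions chosen for the example.

```python
import random


def quantize(value, bits, full_scale):
    """Clip to [-full_scale, full_scale] and round to a uniform symmetric grid."""
    levels = 2 ** (bits - 1) - 1
    step = full_scale / levels
    clipped = max(-full_scale, min(full_scale, value))
    return round(clipped / step) * step


def analog_mvm(weights, x, *, weight_noise_std=0.02, dac_bits=8, adc_bits=8,
               in_range=1.0, out_range=4.0, rng=None):
    """Toy model of y = W @ x on an analog tile (all settings are assumptions).

    1. Inputs pass through a DAC: clipped and quantized.
    2. Each stored weight is read with fresh Gaussian noise, scaled by the
       largest weight magnitude (a stand-in for device variability).
    3. Each accumulated output passes through an ADC: clipped and quantized.
    """
    rng = rng or random.Random()
    w_max = max(abs(w) for row in weights for w in row)
    x_dac = [quantize(v, dac_bits, in_range) for v in x]
    outputs = []
    for row in weights:
        acc = 0.0
        for w, v in zip(row, x_dac):
            noisy_w = w + rng.gauss(0.0, weight_noise_std * w_max)
            acc += noisy_w * v
        outputs.append(quantize(acc, adc_bits, out_range))
    return outputs


if __name__ == "__main__":
    W = [[0.5, -0.25], [1.0, 0.5]]
    x = [0.5, 1.0]
    # Two reads of the same weights give different results: the error is
    # random, unlike the fixed rounding error of digital quantization.
    print(analog_mvm(W, x, rng=random.Random(1)))
    print(analog_mvm(W, x, rng=random.Random(2)))
```

Setting `weight_noise_std=0.0` leaves only the deterministic DAC/ADC rounding, which is the digital-quantization case the paragraph contrasts against.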

How do Analog Foundation Models address the noise problem?

The IBM team introduces Analog Foundation Models, which integrate hardware-aware training to prepare LLMs for analog execution. Their pipeline uses:

- Noise injection during training to simulate AIMC randomness.

- Iterative weight clipping to stabilize weight distributions within device limits.

- Learned static input/output quantization ranges aligned with real hardware constraints.

- Distillation from pre-trained LLMs using 20B tokens of synthetic data.
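The first two ingredients of the list above can be sketched on a toy linear model. This is a hedged illustration, not the AIHWKIT-Lightning API or the authors' training code: `clip_weights`, `noisy_forward`, `train`, the noise scale, the 3-sigma clipping rule, and the plain SGD loop are all assumptions made for the example.

```python
import random
import statistics


def clip_weights(weights, num_std=3.0):
    """Clamp weights to mean +/- num_std standard deviations (assumed rule)."""
    mean = statistics.fmean(weights)
    std = statistics.pstdev(weights)
    lo, hi = mean - num_std * std, mean + num_std * std
    return [min(max(w, lo), hi) for w in weights]


def noisy_forward(weights, x, noise_std, rng):
    """Dot product in which every weight is perturbed with fresh Gaussian noise."""
    w_max = max(abs(w) for w in weights) or 1.0
    return sum((w + rng.gauss(0.0, noise_std * w_max)) * v
               for w, v in zip(weights, x))


def train(data, dim, steps=2000, lr=0.05, noise_std=0.05, seed=0):
    """SGD on squared error with noise in the forward pass and clipping after
    each update. The gradient is applied to the clean weights; the injected
    noise is treated as a constant."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
    for _ in range(steps):
        x, y = rng.choice(data)
        err = noisy_forward(weights, x, noise_std, rng) - y
        weights = [w - lr * err * v for w, v in zip(weights, x)]
        # With only a handful of weights this clip seldom triggers; on a real
        # layer with thousands of weights it trims the tails of the distribution.
        weights = clip_weights(weights)
    return weights


if __name__ == "__main__":
    rng = random.Random(1)
    true_w = [0.5, -0.3, 0.8]
    data = []
    for _ in range(200):
        x = [rng.uniform(-1, 1) for _ in range(3)]
        data.append((x, sum(w * v for w, v in zip(true_w, x))))
    print(train(data, dim=3))
```

The design point is that the model only ever sees perturbed weights during training, so the solution it converges to is one that stays accurate when the hardware adds similar perturbations at inference time.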

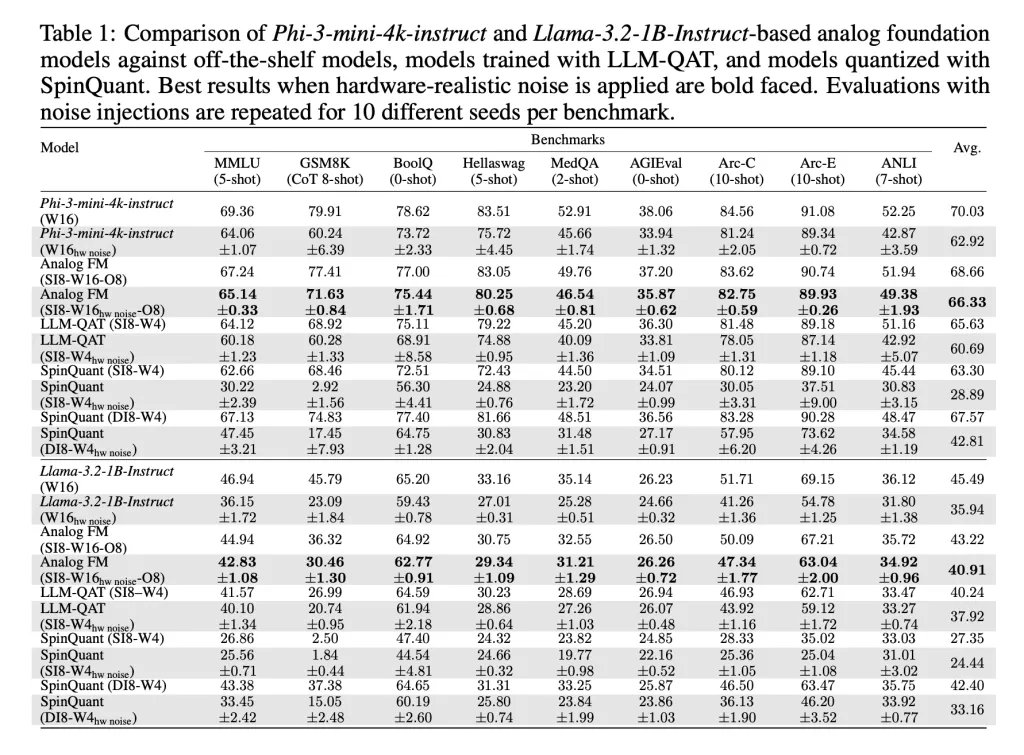

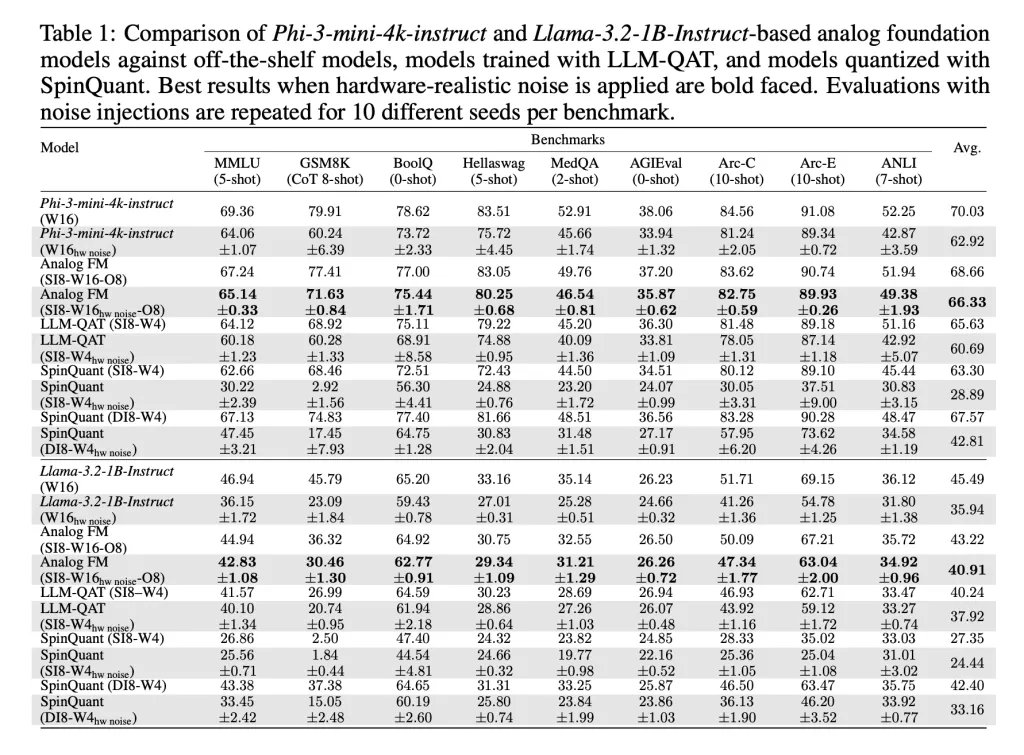

These methods, implemented with AIHWKIT-Lightning, allow models like Phi-3-mini-4k-instruct and Llama-3.2-1B-Instruct to sustain performance comparable to 4-bit quantized baselines under analog noise. In evaluations across reasoning and factual benchmarks, AFMs outperformed both quantization-aware training (QAT) and post-training quantization (SpinQuant).

Do these models work only on analog hardware?

No. An unexpected outcome is that AFMs also perform strongly on low-precision digital hardware. Because AFMs are trained to tolerate noise and clipping, they handle simple post-training round-to-nearest (RTN) quantization better than existing methods. This makes them useful not only for AIMC accelerators, but also for commodity digital inference hardware.
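For readers unfamiliar with RTN, the following is a minimal sketch of symmetric per-tensor round-to-nearest quantization. The helper names `rtn_quantize` and `dequantize` are hypothetical, and the per-tensor scaling is an assumption; the article does not specify the exact RTN configuration the researchers used.

```python
def rtn_quantize(weights, bits=4):
    """Symmetric per-tensor round-to-nearest; returns (integer codes, scale)."""
    q_max = 2 ** (bits - 1) - 1  # 7 for 4-bit codes
    w_max = max(abs(w) for w in weights)
    scale = w_max / q_max if w_max > 0 else 1.0
    # Map each weight to the nearest integer code, clamped to [-q_max, q_max].
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate real-valued weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.7, -0.32, 0.12, 0.0]
    codes, scale = rtn_quantize(weights, bits=4)
    print(codes, dequantize(codes, scale))
```

RTN needs no calibration data or retraining, which is why a model that already tolerates noise and clipping is a natural fit for it: the rounding error behaves like one more small perturbation of the weights.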

Can performance scale with more compute at inference time?

Yes. The researchers tested test-time compute scaling on the MATH-500 benchmark, generating multiple answers per query and selecting the best one via a reward model. AFMs showed better scaling behavior than QAT models, with accuracy gaps shrinking as more inference compute was allocated. This is consistent with the strengths of AIMC: high-throughput inference rather than training.
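The selection procedure described here is commonly called best-of-N sampling, and it can be sketched in a few lines. The `generate` and `reward` callables below are hypothetical stand-ins for an LLM sampler and a learned reward model; the toy solver and toy reward exist only to make the example runnable and say nothing about the paper's actual setup.

```python
import random


def best_of_n(prompt, generate, reward, n):
    """Sample n candidate answers and return the one the reward model scores highest."""
    candidates = [generate(prompt) for _ in range(n)]
    return max(candidates, key=lambda answer: reward(prompt, answer))


def make_noisy_solver(true_answer, error_rate, seed=0):
    """Toy stand-in for a noisy model: returns a wrong answer with probability error_rate."""
    rng = random.Random(seed)

    def generate(prompt):
        if rng.random() < error_rate:
            return true_answer + rng.choice([-2, -1, 1, 2])
        return true_answer

    return generate


def make_toy_reward(true_answer):
    """Toy stand-in for a reward model: higher score for answers closer to the truth."""
    def reward(prompt, answer):
        return -abs(answer - true_answer)

    return reward


if __name__ == "__main__":
    reward = make_toy_reward(42)
    for n in (1, 4, 16):
        generate = make_noisy_solver(42, error_rate=0.7, seed=0)
        print(n, best_of_n("What is 6 * 7?", generate, reward, n))
```

Because each extra sample is just another forward pass, this kind of scaling trades inference compute for accuracy, which is where a high-throughput inference accelerator has the most to offer.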

How does this affect the future of Analog In-Memory Computing (AIMC)?

The research team provides the first systematic demonstration that large LLMs can be adapted to AIMC hardware without catastrophic accuracy loss. While training AFMs is resource-heavy and reasoning tasks such as GSM8K still show accuracy gaps, the results are a milestone. The combination of energy efficiency, robustness to noise, and compatibility with digital hardware makes AFMs a promising direction for scaling foundation models beyond the limits of GPUs.

Summary

The introduction of Analog Foundation Models is a critical milestone for scaling LLMs beyond the limits of digital accelerators. By making models robust to the unpredictable noise of analog in-memory computing, the research team shows that AIMC can move from a theoretical promise to a practical platform. While training costs remain high and reasoning benchmarks still show gaps, this work charts a path toward energy-efficient, large-scale models running on compact hardware, pushing foundation models closer to edge deployment.

Check out the paper and GitHub page.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than 2 million monthly views, which shows its popularity among readers.


2025-09-21 08:12:00