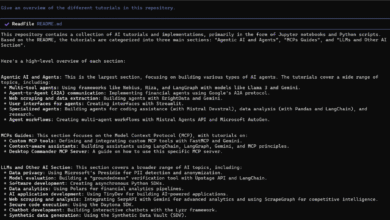

Ant Group uses domestic chips to train AI models and cut costs

Ant Group is relying on Chinese-made semiconductors to train artificial intelligence models, aiming to cut costs and reduce its dependence on restricted US technology, according to people familiar with the matter.

The Alibaba-affiliated company has used chips from domestic suppliers, including those tied to its parent, Alibaba, and to Huawei Technologies, to train large language models using the Mixture of Experts (MoE) method. The results were comparable to those produced with Nvidia H800 chips, the sources claim. While Ant continues to use Nvidia chips for some of its AI development, one of the sources said the company is increasingly turning to alternatives from AMD and Chinese chip-makers for its latest models.

The development signals Ant's deeper involvement in the growing AI race between Chinese and US technology companies, especially as companies look for cost-effective ways to train models. The experimentation with domestic hardware reflects a broader effort among Chinese firms to work around export restrictions that block access to high-end chips like Nvidia's H800, which, although not the most advanced, is still one of the more powerful GPUs available to Chinese organisations.

Ant has published a research paper describing its work, stating that its models, in some tests, performed better than those developed by Meta. Bloomberg News, which initially reported the matter, has not independently verified the company's results. If the models perform as claimed, Ant's efforts may represent a step forward in China's attempt to lower the cost of running AI applications and reduce its reliance on foreign hardware.

MoE models divide tasks into smaller sets of data that are handled by separate components, and the approach has gained attention among AI researchers and data scientists. The technique has been used by Google and by the Hangzhou-based startup DeepSeek. The MoE concept is similar to having a team of specialists, each handling part of a task, which makes the process of producing models more efficient. Ant has declined to comment on its work with respect to its hardware sources.
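To make the "team of specialists" idea concrete, here is a minimal sketch of mixture-of-experts routing in plain Python. It is an illustration only, not Ant's implementation: the function names (`moe_forward`, `make_expert`), the toy "experts" that simply scale their input, and the top-2 routing choice are all assumptions made for this example.

```python
import math
import random


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical 'expert': a stand-in function that scales its input."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route one input vector to its top_k experts and mix their outputs."""
    # One router score per expert: dot product of the input with that expert's row.
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    probs = softmax(scores)
    # Keep only the top_k experts; the remaining experts are never run.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(x)
    for i in chosen:
        gate = probs[i] / norm  # renormalised weight of this expert
        expert_out = experts[i](x)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


if __name__ == "__main__":
    random.seed(0)
    dim, num_experts = 4, 8
    experts = [make_expert(scale=i + 1) for i in range(num_experts)]
    router_weights = [
        [random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)
    ]
    y, used = moe_forward([0.5, -1.0, 2.0, 0.1], router_weights, experts)
    print(f"experts used: {used} of {num_experts}")
```

Only the selected experts run for a given input, which is why a model with a very large total parameter count can need far less computation per token than a dense model of the same size.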

Training MoE models depends on high-performance GPUs, which can be too expensive for smaller companies to acquire or use. Ant's research focused on lowering that cost barrier. The paper's title states a clear objective: scaling models "without premium GPUs". [our quotation marks]

The direction taken by Ant, and its use of MoE to reduce training costs, contrasts with Nvidia's approach. Nvidia CEO Jensen Huang has said that demand for computing power will continue to grow, even with the arrival of more efficient models such as DeepSeek's R1. His view is that companies will seek more powerful chips to drive revenue growth, rather than aiming to cut costs with cheaper alternatives. Nvidia's strategy remains focused on building GPUs with more cores, transistors, and memory.

According to the Ant Group paper, training on one trillion tokens (the basic units of data that AI models use to learn) cost about 6.35 million yuan (roughly $880,000) using conventional high-performance hardware. The company's optimised training method reduced that cost to around 5.1 million yuan by using lower-specification chips.
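The size of the saving follows directly from the two figures quoted above. The short sketch below works it out; the dollar conversion of the lower figure is an assumption, derived from the exchange rate implied by the article's own pairing of 6.35 million yuan with roughly $880,000, and is not a number reported by Ant.

```python
# Figures as reported in the article (yuan per one trillion training tokens).
BASELINE_COST_YUAN = 6_350_000   # conventional high-performance hardware
OPTIMISED_COST_YUAN = 5_100_000  # lower-specification chips
BASELINE_COST_USD = 880_000      # article's approximate conversion of the baseline


def savings(baseline, optimised):
    """Return the absolute saving and the saving as a fraction of the baseline."""
    saved = baseline - optimised
    return saved, saved / baseline


saved_yuan, saved_fraction = savings(BASELINE_COST_YUAN, OPTIMISED_COST_YUAN)

# Assumption: reuse the exchange rate implied by the article's own figures.
implied_yuan_per_usd = BASELINE_COST_YUAN / BASELINE_COST_USD
optimised_cost_usd = OPTIMISED_COST_YUAN / implied_yuan_per_usd

print(f"saving: {saved_yuan:,} yuan ({saved_fraction:.1%})")
print(f"optimised cost: about ${optimised_cost_usd:,.0f}")
```

On these numbers the saving is about 1.25 million yuan per trillion tokens, or just under 20% of the baseline cost.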

Ant said it plans to apply the models produced in this way, Ling-Plus and Ling-Lite, to industrial AI use cases such as healthcare and finance. Earlier this year, the company acquired Haodf.com, a Chinese online medical platform, to further Ant's ambition to deploy AI-based solutions in healthcare. It also operates other AI services, including a virtual assistant app called Zhixiaobao and a financial advisory platform known as Maxiaocai.

“If you find one point of attack to beat the world's best kung fu master...” said Robin Yu, chief technology officer of Beijing-based AI firm Xinghang Tech.

Ant has made its models open source. Ling-Lite has 16.8 billion parameters (settings that help determine how a model functions), while Ling-Plus has 290 billion. For comparison, the closed-source GPT-4.5 is estimated to have around 1.8 trillion parameters, according to MIT Technology Review.

Despite the progress, Ant's paper noted that training models remains challenging. Small adjustments to the hardware or the model structure during training sometimes resulted in unstable performance, including spikes in error rates.

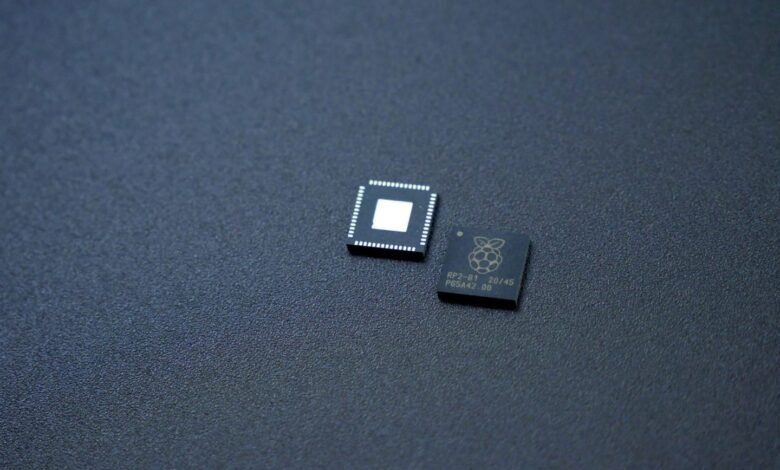

(Photo by Unsplash)

See also: DeepSeek V3-0324 leads the artificial intelligence models

2025-04-03 09:59:00