Meet LangGraph Multi-Agent Swarm: A Python Library for Creating Swarm-Style Multi-Agent Systems Using LangGraph

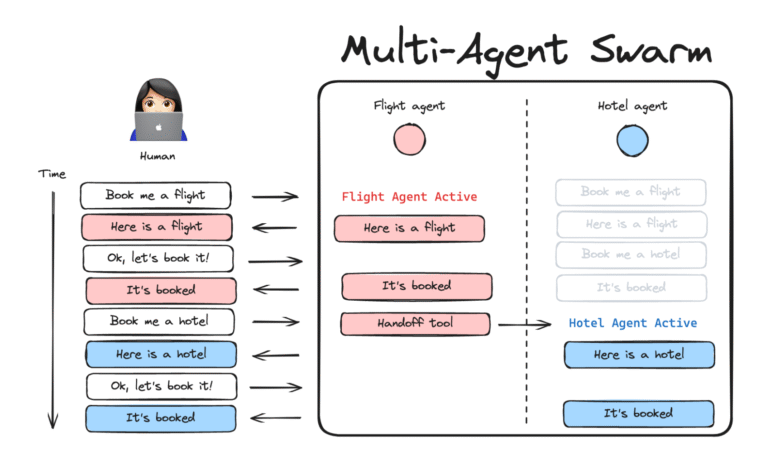

LangGraph Multi-Agent Swarm is a Python library designed to orchestrate multiple AI agents as a cohesive “swarm.” It builds on LangGraph, a framework for building robust, stateful agent workflows, to enable a specialized form of multi-agent architecture. In a swarm, agents with different specializations dynamically hand off control to one another as tasks demand, rather than a single monolithic agent attempting everything. The system tracks which agent was last active so that when a user provides the next input, the conversation resumes seamlessly with that same agent. This approach addresses the problem of building cooperative AI workflows in which the most qualified agent can handle each sub-task without losing context or continuity.

LangGraph Swarm aims to make this kind of multi-agent orchestration easier and more reliable for developers. It provides abstractions for linking individual language-model agents (each potentially with its own tools and prompts) into one integrated application. Because it is built on LangGraph, the library comes with out-of-the-box support for streaming responses, short-term and long-term memory integration, and even human-in-the-loop intervention. By leveraging LangGraph (a lower-level orchestration framework) and fitting naturally into the broader LangChain ecosystem, LangGraph Swarm lets machine learning engineers and researchers build complex AI agent systems while keeping explicit control over the flow of information and decisions.

LangGraph Swarm Architecture and Key Features

At its core, LangGraph Swarm represents multiple agents as nodes in a directed state graph: edges define handoff pathways, and a shared state tracks the “active_agent.” When an agent invokes a handoff, the library updates this field and transfers the necessary context so that the next agent continues the conversation seamlessly. This setup supports collaborative specialization, letting each agent focus on a narrow domain while offering customizable handoff tools for flexible workflows. Because the swarm is built on LangGraph, it maintains both short-term conversational memory and long-term knowledge, ensuring coherent multi-turn interactions even as control shifts between agents.
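To make the node, edge, and shared-state vocabulary concrete, here is a minimal, library-free sketch assuming a two-agent swarm named Alice and Bob. `SwarmStateSketch`, `HANDOFF_EDGES`, and `hand_off` are hypothetical names invented for illustration, not part of the langgraph_swarm API; they only model how a shared `active_agent` field changes when a handoff follows an allowed edge.

```python
from dataclasses import dataclass, field

# Hypothetical sketch, NOT the langgraph_swarm API: agents are nodes,
# permitted handoffs are directed edges, and a shared state records
# which agent is currently active.


@dataclass
class SwarmStateSketch:
    messages: list = field(default_factory=list)  # shared conversation history
    active_agent: str = "Alice"                   # agent that handles the next turn


# Directed handoff edges: agent -> agents it is allowed to hand control to
HANDOFF_EDGES = {
    "Alice": {"Bob"},
    "Bob": {"Alice"},
}


def hand_off(state: SwarmStateSketch, source: str, target: str) -> SwarmStateSketch:
    """Return a new state with `target` active, if the edge source -> target exists."""
    if state.active_agent != source:
        raise ValueError(f"{source} is not the active agent")
    if target not in HANDOFF_EDGES.get(source, set()):
        raise ValueError(f"no handoff edge from {source} to {target}")
    # Carry the full history forward so the next agent keeps the context
    return SwarmStateSketch(messages=list(state.messages), active_agent=target)


state = SwarmStateSketch(messages=[{"role": "user", "content": "Hi, Bob!"}])
state = hand_off(state, "Alice", "Bob")
print(state.active_agent)  # Bob
```

Rejecting handoffs that have no matching edge is what keeps routing explicit and predictable in this toy model.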

Agent Coordination via Handoff Tools

LangGraph Swarm’s handoff tools let one agent transfer control to another by issuing a “Command” that updates the shared state, switching the “active_agent” and passing along context such as relevant messages or a custom summary. While the default tool hands off the full conversation and inserts a notification message, developers can implement custom tools that filter context, add instructions, or rename the action to influence the LLM’s behavior. Unlike autonomous AI-routing patterns, swarm routing is explicitly defined: each handoff tool specifies which agent may take over, ensuring predictable flows. This mechanism supports collaboration patterns such as a “Travel Planner” delegating medical questions to a “Medical Advisor,” or a coordinator distributing technical and billing queries to specialized experts. An internal router directs each user message to the currently active agent until another handoff occurs.
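The sketch below contrasts a full-history handoff with a custom, context-filtering one, and adds the active-agent router. It is a plain-Python approximation under stated assumptions: `CommandSketch` merely stands in for LangGraph’s `Command`, and `default_handoff`, `filtered_handoff`, `route`, and the notice text are hypothetical, not the library’s actual implementation.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class CommandSketch:
    """Hypothetical stand-in for a LangGraph Command (not the real class)."""
    goto: str                                   # agent that takes over
    update: dict = field(default_factory=dict)  # state changes to apply


def default_handoff(messages: list, target: str) -> CommandSketch:
    """Forward the whole conversation and append a transfer notice."""
    notice = {"role": "tool", "content": f"Transferred to {target}"}
    return CommandSketch(
        goto=target,
        update={"messages": messages + [notice], "active_agent": target},
    )


def filtered_handoff(messages: list, target: str, keep_last: int, instructions: str) -> CommandSketch:
    """Custom variant: forward only the most recent messages plus extra instructions."""
    recent = messages[-keep_last:] if keep_last > 0 else []
    note = {"role": "system", "content": instructions}
    return CommandSketch(
        goto=target,
        update={"messages": recent + [note], "active_agent": target},
    )


def route(state: dict, agents: dict) -> Any:
    """Send the next user message to whichever agent is currently active."""
    return agents[state["active_agent"]]


history = [
    {"role": "user", "content": "Plan a trip to Lisbon"},
    {"role": "assistant", "content": "Sure, which dates?"},
    {"role": "user", "content": "Do I need any vaccinations?"},
]
cmd = filtered_handoff(history, "Medical Advisor", keep_last=1,
                       instructions="Answer only the health question.")
```

Here the Medical Advisor receives only the vaccination question and a focused instruction, rather than the whole travel-planning transcript; `default_handoff` would forward all three messages plus the notice.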

State Management and Memory

State and memory management are essential for preserving context as agents hand off tasks. By default, LangGraph Swarm maintains a shared state containing the conversation history and the “active_agent,” and uses a checkpointer (such as an in-memory saver or a database store) to persist this state across turns. It also supports a memory store for long-term knowledge, allowing the system to record facts or past interactions for future sessions while keeping a window of recent messages for immediate context. Together, these mechanisms ensure that the swarm never “forgets” which agent is active or what has been discussed, enabling seamless multi-turn dialogues and accumulating user preferences or critical data over time.
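As a rough illustration of per-thread persistence, the hypothetical `CheckpointSketch` below saves the latest state for each `thread_id`, so a later turn resumes with whichever agent was active. `recent_window` and `remember` sketch the short-term message window and a long-term store. All names are invented for this example; LangGraph’s real checkpointers and stores have their own interfaces.

```python
class CheckpointSketch:
    """Hypothetical checkpointer keeping the latest swarm state per thread_id.

    Illustrative only; LangGraph's InMemorySaver has a different interface.
    """

    def __init__(self) -> None:
        self._states: dict = {}

    def load(self, thread_id: str, default_agent: str) -> dict:
        # A new thread starts with an empty history and the default agent
        saved = self._states.get(thread_id, {"messages": [], "active_agent": default_agent})
        return {"messages": list(saved["messages"]), "active_agent": saved["active_agent"]}

    def save(self, thread_id: str, state: dict) -> None:
        # Copy so later mutations by the caller do not alter the checkpoint
        self._states[thread_id] = {"messages": list(state["messages"]),
                                   "active_agent": state["active_agent"]}


def recent_window(messages: list, size: int) -> list:
    """Short-term context: only the last `size` messages are sent to the model."""
    return messages[-size:] if size > 0 else []


# Hypothetical long-term store: facts per user that outlive any single thread
long_term_store: dict = {}


def remember(user_id: str, fact: str) -> None:
    long_term_store.setdefault(user_id, []).append(fact)


checkpoints = CheckpointSketch()

# Turn 1 on thread "t1": the conversation is handed off to Bob
state = checkpoints.load("t1", default_agent="Alice")
state["messages"].append({"role": "user", "content": "I'd like to speak to Bob"})
state["active_agent"] = "Bob"
checkpoints.save("t1", state)

# Turn 2 on the same thread resumes with Bob, not the default agent
resumed = checkpoints.load("t1", default_agent="Alice")
print(resumed["active_agent"])  # Bob
```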

When more granular control is needed, developers can define custom state schemas so that each agent has its own private message history. By wrapping agent calls to map the global state into agent-specific fields before invocation and merging the updates afterward, teams can tailor the degree of context sharing. This approach supports workflows ranging from fully collaborative agents to isolated reasoning modules, all while leveraging LangGraph’s robust orchestration, memory, and state-management infrastructure.
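One way to picture this projection-and-merge pattern is a wrapper around an agent function. In the hypothetical sketch below, `wrap_agent`, `toy_agent`, and the `alice_messages` key are assumptions made for illustration rather than library code: the wrapped agent sees only its private history plus the newest shared message, and only its final reply is published to the shared `messages` list.

```python
from typing import Callable

AgentFn = Callable[[dict], dict]


def wrap_agent(agent: AgentFn, private_key: str) -> AgentFn:
    """Hypothetical wrapper that gives `agent` a private message history."""

    def call(global_state: dict) -> dict:
        # Project: the agent sees its own history plus the newest shared message
        private = list(global_state.get(private_key, []))
        latest = global_state["messages"][-1:]
        result = agent({"messages": private + latest})
        # Merge: keep the full transcript privately, publish only the final reply
        return {
            private_key: result["messages"],
            "messages": global_state["messages"] + result["messages"][-1:],
        }

    return call


def toy_agent(state: dict) -> dict:
    """Stand-in for an LLM agent: emits a reasoning step, then an answer."""
    return {"messages": state["messages"] + [
        {"role": "assistant", "content": "(scratch work: 2 + 2)"},
        {"role": "assistant", "content": "The answer is 4."},
    ]}


alice_node = wrap_agent(toy_agent, private_key="alice_messages")
update = alice_node({"messages": [{"role": "user", "content": "What is 2 + 2?"}]})
```

This keeps intermediate reasoning private while the rest of the swarm still sees the answer; sharing more or less context is a matter of changing what the wrapper projects and merges.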

Customization and Extensibility

LangGraph Swarm offers extensive flexibility for custom workflows. Developers can override the default handoff tool, which passes all messages and switches the active agent, to implement specialized logic such as summarizing the context or attaching additional metadata. Custom tools simply return a LangGraph Command to update state, and agents must be configured to handle those commands through the appropriate node types and state-schema keys. Beyond handoffs, one can redefine how agents share or isolate memory using LangGraph’s typed state schemas: map the global swarm state into per-agent fields before invocation and merge the results afterward. This enables scenarios in which an agent maintains a private conversation history or uses a different communication format without exposing its internal reasoning. For full control, it is possible to bypass the high-level API and manually assemble a “StateGraph”: add each compiled agent as a node, define the transition edges, and attach the active-agent router. While most users benefit from the simplicity of “create_swarm” and “create_react_agent,” the ability to drop down to LangGraph primitives ensures that practitioners can inspect, adjust, or extend every aspect of multi-agent coordination.
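The runtime behavior of a hand-assembled swarm can be approximated without any framework: nodes in a dictionary, explicit handoff edges, and a router that always starts from the active agent. `SwarmGraphSketch` and the toy `alice` and `bob` functions are hypothetical stand-ins (deterministic rules instead of LLM calls), not LangGraph’s `StateGraph` API.

```python
from typing import Callable

# A node takes the conversation and returns (reply, handoff_target or None)
Node = Callable[[list], tuple]


class SwarmGraphSketch:
    """Hypothetical hand-assembled swarm: nodes, handoff edges, active-agent router."""

    def __init__(self, default_agent: str) -> None:
        self.nodes: dict = {}
        self.edges: dict = {}
        self.active_agent = default_agent

    def add_node(self, name: str, fn: Node, handoffs: set) -> None:
        self.nodes[name] = fn
        self.edges[name] = set(handoffs)

    def run_turn(self, messages: list, max_hops: int = 5) -> str:
        """Route to the active agent and follow handoffs until one replies."""
        for _ in range(max_hops):
            reply, target = self.nodes[self.active_agent](messages)
            if target is None:
                messages.append({"role": "assistant", "name": self.active_agent, "content": reply})
                return reply
            if target not in self.edges[self.active_agent]:
                raise ValueError(f"{self.active_agent} cannot hand off to {target}")
            self.active_agent = target  # persists, so the next turn starts here
        raise RuntimeError("too many handoffs in one turn")


def alice(messages: list) -> tuple:
    text = messages[-1]["content"]
    if "+" in text:
        a, b = text.split("+")
        return str(int(a) + int(b)), None
    return "", "Bob"  # not an addition: hand off to Bob


def bob(messages: list) -> tuple:
    if "+" in messages[-1]["content"]:
        return "", "Alice"  # math goes to Alice
    return "Arr, ahoy!", None


swarm = SwarmGraphSketch(default_agent="Bob")
swarm.add_node("Alice", alice, handoffs={"Bob"})
swarm.add_node("Bob", bob, handoffs={"Alice"})

history = [{"role": "user", "content": "2 + 3"}]
print(swarm.run_turn(history), swarm.active_agent)  # 5 Alice
```

Because `active_agent` is stored on the object rather than reset per turn, a follow-up message starts with the last agent that replied; `max_hops` guards against agents handing a turn back and forth indefinitely.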

Ecosystem Integration and Dependencies

LangGraph Swarm integrates tightly with LangChain, leveraging components such as LangSmith for evaluation, langchain_openai for model access, and LangGraph for orchestration features such as persistence and caching. Its model-agnostic design lets it coordinate agents across any LLM backend (OpenAI, Hugging Face, or others), and it is available in both Python (“pip install langgraph-swarm”) and JavaScript/TypeScript (“@langchain/langgraph-swarm”), making it suitable for web or serverless environments.

Sample Implementation

Below is a minimal setup of a two-agent swarm:

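```python
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.prebuilt import create_react_agent
from langgraph_swarm import create_handoff_tool, create_swarm

model = ChatOpenAI(model="gpt-4o")


# Tools need a name and a docstring (used as the description),
# so use a named function rather than a bare lambda
def add(a: int, b: int) -> int:
    """Add two numbers."""
    return a + b


# Agent "Alice": math expert
alice = create_react_agent(
    model,
    [add, create_handoff_tool(agent_name="Bob")],
    prompt="You are Alice, an addition specialist.",
    name="Alice",
)

# Agent "Bob": pirate persona who defers math to Alice
bob = create_react_agent(
    model,
    [create_handoff_tool(agent_name="Alice", description="Delegate math to Alice")],
    prompt="You are Bob, a playful pirate.",
    name="Bob",
)

workflow = create_swarm([alice, bob], default_active_agent="Alice")
app = workflow.compile(checkpointer=InMemorySaver())

# Each thread_id identifies one conversation whose state the checkpointer keeps
config = {"configurable": {"thread_id": "1"}}
turn_1 = app.invoke(
    {"messages": [{"role": "user", "content": "I'd like to speak to Bob"}]},
    config,
)
turn_2 = app.invoke(
    {"messages": [{"role": "user", "content": "What's 5 + 7?"}]},
    config,
)
```

Here, Alice handles addition and can hand off to Bob, while Bob responds playfully but routes math questions back to Alice. The InMemorySaver checkpointer persists the conversation state, including the active agent, across turns that share the same thread_id. Running the example requires an OpenAI API key.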

Use Cases and Applications

LangGraph Swarm democratizes advanced multi-agent collaboration by enabling a central coordinator to dynamically delegate sub-tasks to specialized agents: triaging emergencies by handing off to medical, security, or disaster-response experts; routing travel bookings among specialized agents such as a car-rental agent; dividing work among agents that direct or review one another’s actions; or splitting tasks among researcher, reporter, and fact-checking agents. Beyond these examples, the framework can power customer-support bots that route queries to departmental specialists, interactive storytelling with distinct character agents, scientific pipelines with stage-specific processors, or any scenario where dividing work among expert “swarm” members improves reliability and clarity. Meanwhile, LangGraph Swarm handles the underlying message routing, state management, and smooth transitions.

In conclusion, LangGraph Swarm marks a step forward for multi-agent AI systems. By organizing multiple specialized agents into a directed graph, it tackles tasks that a single model struggles with: each agent handles its own area of expertise and then hands off control seamlessly. This design keeps individual agents simple and interpretable while the swarm collectively handles complex workflows involving reasoning, tool use, and decision-making. Built on LangChain and LangGraph, the library taps into a mature ecosystem of LLMs, tools, memory stores, and debugging utilities. Developers retain explicit control over agent interactions and state sharing, ensuring reliability, yet still benefit from the LLM’s flexibility in deciding when to call tools or delegate to another agent.

Check out the GitHub Page. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.


2025-05-16 05:46:00