A Step-by-Step Coding Guide to Building an Iterative AI Workflow Agent Using LangGraph and Gemini

In this tutorial, we demonstrate how to build a multi-step, intelligent query-handling agent using LangGraph and Gemini 1.5 Flash. The core idea is to structure AI reasoning as a stateful workflow. An incoming query passes through a series of purposeful nodes: routing, analysis, research, response generation, and validation. Each node acts as a functional block with a well-defined role, which makes the agent analytical rather than merely reactive. Using LangGraph's StateGraph, we wire these nodes into a looping system that can re-analyze and improve its output until the response is validated as complete or a maximum iteration threshold is reached.

```
!pip install langgraph langchain-google-genai python-dotenv
```

First, the command !pip install langgraph langchain-google-genai python-dotenv installs the three Python packages needed to build the agent workflow:

- langgraph provides graph-based orchestration of AI agents.
- langchain-google-genai integrates LangChain with Google's Gemini models.
- python-dotenv loads environment variables securely from .env files.
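You can confirm the installation before moving on with a quick check like the one below. This is a sketch rather than part of the original tutorial. It assumes the import names `langgraph`, `langchain_google_genai`, and `dotenv`, since python-dotenv is imported as `dotenv`. The helper name `check_installed` is hypothetical.

```python
import importlib.util

# Import names of the three pip packages (python-dotenv is imported as `dotenv`)
REQUIRED_MODULES = ["langgraph", "langchain_google_genai", "dotenv"]


def check_installed(modules):
    """Hypothetical helper: map each top-level module name to True if it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, ok in check_installed(REQUIRED_MODULES).items():
        print(f"{name}: {'installed' if ok else 'MISSING'}")
```

`find_spec` only locates the module without importing it, so the check is cheap and has no side effects.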

```python
import os
from typing import Dict, Any
from dataclasses import dataclass

from langgraph.graph import StateGraph, END
from langchain_google_genai import ChatGoogleGenerativeAI
# Message classes live in langchain_core, which both packages above depend on;
# the older `langchain.schema` path requires the separate `langchain` package
from langchain_core.messages import HumanMessage, SystemMessage

os.environ["GOOGLE_API_KEY"] = "Use Your API Key Here"
```

We import the core modules and libraries for building the agent workflow. These include ChatGoogleGenerativeAI for interacting with Gemini models and StateGraph for managing the workflow state. The line os.environ["GOOGLE_API_KEY"] = "Use Your API Key Here" stores the API key in an environment variable, which lets the Gemini model authenticate and generate responses.
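Hardcoding the key in a notebook makes it easy to leak when you share the file. A minimal alternative is to read the key from the environment and fail early if it is missing. This assumes the key has already been exported in the shell or loaded from a .env file, for example with `load_dotenv()` from python-dotenv. The helper `get_google_api_key` is a hypothetical name used only for illustration.

```python
import os

PLACEHOLDER = "Use Your API Key Here"


def get_google_api_key(var_name: str = "GOOGLE_API_KEY") -> str:
    """Hypothetical helper: read the Gemini API key from the environment.

    Raises a clear error up front instead of letting the first model call fail.
    The tutorial's placeholder string is treated the same as a missing key.
    """
    key = os.environ.get(var_name, "").strip()
    if not key or key == PLACEHOLDER:
        raise RuntimeError(f"{var_name} is not set; export it or add it to a .env file")
    return key
```

The returned value can then be passed to `GraphAIAgent(api_key=...)` instead of a literal string.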

```python
@dataclass
class AgentState:
    """State shared across all nodes in the graph"""
    query: str = ""
    context: str = ""
    analysis: str = ""
    response: str = ""
    next_action: str = ""
    iteration: int = 0
    max_iterations: int = 3
```

This AgentState dataclass defines the shared state that persists across the nodes of the LangGraph workflow. It tracks the user's query, the retrieved context, any analysis performed, the generated response, and the recommended next action. It also includes an iteration counter and a max_iterations limit that control how many times the workflow can loop, enabling iterative reasoning and decision-making by the agent.
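Each node in this workflow returns a dict that contains only the fields it changed. LangGraph merges that partial update into the shared state and leaves every other field as it was. For fields without a reducer, the new value simply overwrites the old one.

The plain-Python sketch below imitates that merge with `dataclasses.replace` to make the idea concrete. It is a simplified illustration, not LangGraph's internal implementation, and `apply_update` is a hypothetical name. AgentState is repeated so the snippet runs on its own.

```python
from dataclasses import dataclass, replace
from typing import Any, Dict


@dataclass
class AgentState:
    """Copy of the tutorial's state so this snippet runs standalone."""
    query: str = ""
    context: str = ""
    analysis: str = ""
    response: str = ""
    next_action: str = ""
    iteration: int = 0
    max_iterations: int = 3


def apply_update(state: AgentState, update: Dict[str, Any]) -> AgentState:
    """Hypothetical helper: return a new state with only the returned fields overwritten.

    replace() raises TypeError for keys that are not fields of AgentState.
    """
    return replace(state, **update)


state = AgentState(query="Explain quantum computing")
# A router-style node returns only `context`
state = apply_update(state, {"context": "Factual question about physics"})
# An analyzer-style node returns `analysis` and `next_action`
state = apply_update(state, {"analysis": "Needs background", "next_action": "research"})
print(state.query, "|", state.context, "|", state.next_action)
# → Explain quantum computing | Factual question about physics | research
```

Because each node reports only what it changed, a node such as the researcher cannot accidentally wipe out the analysis or the iteration counter.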

```python
class GraphAIAgent:
    def __init__(self, api_key: str = None):
        if api_key:
            os.environ["GOOGLE_API_KEY"] = api_key

        # Main model for routing, research, and response generation
        self.llm = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0.7,
            convert_system_message_to_human=True
        )
        # Lower-temperature model for more consistent analysis and validation
        self.analyzer = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0.3,
            convert_system_message_to_human=True
        )
        self.graph = self._build_graph()

    def _build_graph(self):
        """Build and compile the LangGraph workflow"""
        workflow = StateGraph(AgentState)

        workflow.add_node("router", self._router_node)
        workflow.add_node("analyzer", self._analyzer_node)
        workflow.add_node("researcher", self._researcher_node)
        workflow.add_node("responder", self._responder_node)
        workflow.add_node("validator", self._validator_node)

        workflow.set_entry_point("router")
        workflow.add_edge("router", "analyzer")
        # After analysis, either gather more information or answer directly
        workflow.add_conditional_edges(
            "analyzer",
            self._decide_next_step,
            {
                "research": "researcher",
                "respond": "responder"
            }
        )
        workflow.add_edge("researcher", "responder")
        workflow.add_edge("responder", "validator")
        # After validation, either loop back for another pass or finish
        workflow.add_conditional_edges(
            "validator",
            self._should_continue,
            {
                "continue": "analyzer",
                "end": END
            }
        )
        return workflow.compile()

    def _router_node(self, state: AgentState) -> Dict[str, Any]:
        """Route and categorize the incoming query"""
        system_msg = """You are a query router. Analyze the user's query and provide context.
        Determine if this is a factual question, creative request, problem-solving task, or analysis."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"Query: {state.query}")
        ]
        response = self.llm.invoke(messages)
        return {"context": response.content}

    def _analyzer_node(self, state: AgentState) -> Dict[str, Any]:
        """Analyze the query and determine the approach"""
        system_msg = """Analyze the query and context. Determine if additional research is needed
        or if you can provide a direct response. Be thorough in your analysis.
        End with a final line that is exactly NEXT: RESEARCH or NEXT: RESPOND."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Query: {state.query}
            Context: {state.context}
            Previous Analysis: {state.analysis}
            """)
        ]
        response = self.analyzer.invoke(messages)
        analysis = response.content
        # Read only the final decision line; searching the whole text for "research"
        # would also match phrases like "no additional research is needed"
        lines = [ln for ln in analysis.strip().splitlines() if ln.strip()]
        decision = lines[-1].upper() if lines else ""
        next_action = "research" if "RESEARCH" in decision else "respond"
        return {
            "analysis": analysis,
            "next_action": next_action
        }

    def _researcher_node(self, state: AgentState) -> Dict[str, Any]:
        """Conduct additional research or information gathering"""
        system_msg = """You are a research assistant. Based on the analysis, gather relevant
        information and insights to help answer the query comprehensively."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Query: {state.query}
            Analysis: {state.analysis}
            Research focus: Provide detailed information relevant to the query.
            """)
        ]
        response = self.llm.invoke(messages)
        updated_context = f"{state.context}\n\nResearch: {response.content}"
        return {"context": updated_context}

    def _responder_node(self, state: AgentState) -> Dict[str, Any]:
        """Generate the final response"""
        system_msg = """You are a helpful AI assistant. Provide a comprehensive, accurate,
        and well-structured response based on the analysis and context provided."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Query: {state.query}
            Context: {state.context}
            Analysis: {state.analysis}
            Provide a complete and helpful response.
            """)
        ]
        response = self.llm.invoke(messages)
        return {"response": response.content}

    def _validator_node(self, state: AgentState) -> Dict[str, Any]:
        """Validate the response quality and completeness"""
        system_msg = """Evaluate if the response adequately answers the query.
        Return 'COMPLETE' if satisfactory, or 'NEEDS_IMPROVEMENT' if more work is needed."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Original Query: {state.query}
            Response: {state.response}
            Is this response complete and satisfactory?
            """)
        ]
        response = self.analyzer.invoke(messages)
        validation = response.content
        return {
            "context": f"{state.context}\n\nValidation: {validation}",
            # Count rounds here: the loop returns to the analyzer, not the router,
            # so this is where the counter must advance for max_iterations to apply
            "iteration": state.iteration + 1
        }

    def _decide_next_step(self, state: AgentState) -> str:
        """Decide whether to research or respond directly"""
        return state.next_action

    def _should_continue(self, state: AgentState) -> str:
        """Decide whether to continue iterating or end"""
        if state.iteration >= state.max_iterations:
            return "end"
        # Inspect only the latest verdict; context accumulates every earlier one
        latest = state.context.rsplit("Validation:", 1)[-1]
        # Check NEEDS_IMPROVEMENT first, since a verdict may mention both words
        if "NEEDS_IMPROVEMENT" in latest:
            return "continue"
        return "end"

    def run(self, query: str) -> str:
        """Run the agent with a query"""
        initial_state = AgentState(query=query)
        result = self.graph.invoke(initial_state)
        return result["response"]
```

The GraphAIAgent class defines the LangGraph workflow, using Gemini models to route, analyze, research, respond to, and validate user queries. Modular nodes (router, analyzer, researcher, responder, and validator) break complex tasks into steps and refine responses through controlled iterations.
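The branching in this class depends on reading decisions out of free-form model text. Two pitfalls are easy to hit. A phrase like "no further research is needed" still contains the word "research". The context string also accumulates every earlier validation verdict, so an old verdict can be mistaken for the current one.

Keeping that parsing in small pure functions makes it easy to unit-test without calling Gemini. The sketch below assumes two output conventions:

- The analysis ends with a line such as `NEXT: RESEARCH` or `NEXT: RESPOND`.
- Each verdict is appended to the context after a `Validation:` label.

Both conventions and the function names are illustrative assumptions.

```python
def decide_next_step(analysis: str) -> str:
    """Return 'research' or 'respond' from an analysis ending with a NEXT: line.

    Falls back to 'respond' when no explicit decision line is found.
    """
    for line in reversed(analysis.strip().splitlines()):
        # Tolerate markdown bold such as **NEXT: RESEARCH**
        line = line.strip().strip("*").strip().upper()
        if line.startswith("NEXT:"):
            return "research" if "RESEARCH" in line else "respond"
    return "respond"


def should_continue(context: str, iteration: int, max_iterations: int = 3) -> str:
    """Return 'continue' or 'end' using only the most recent validation verdict."""
    if iteration >= max_iterations:
        return "end"
    latest = context.rsplit("Validation:", 1)[-1]
    return "continue" if "NEEDS_IMPROVEMENT" in latest else "end"


# A naive substring check would route this to research; the parser does not
print(decide_next_step("No further research is needed.\nNEXT: RESPOND"))  # → respond
ctx = "Validation: NEEDS_IMPROVEMENT\n\nValidation: COMPLETE"
print(should_continue(ctx, iteration=1))  # → end
```

Falling back to `respond` and `end` on unparseable output keeps the graph moving forward instead of looping on a confused verdict.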

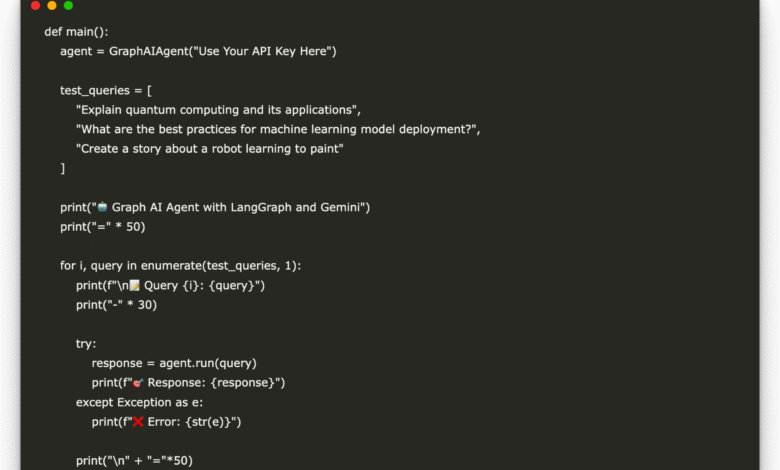

```python
def main():
    agent = GraphAIAgent("Use Your API Key Here")
    test_queries = [
        "Explain quantum computing and its applications",
        "What are the best practices for machine learning model deployment?",
        "Create a story about a robot learning to paint"
    ]

    print("🤖 Graph AI Agent with LangGraph and Gemini")
    print("=" * 50)

    for i, query in enumerate(test_queries, 1):
        print(f"\n📝 Query {i}: {query}")
        print("-" * 30)
        try:
            response = agent.run(query)
            print(f"🎯 Response: {response}")
        except Exception as e:
            print(f"❌ Error: {str(e)}")
        print("\n" + "=" * 50)


if __name__ == "__main__":
    main()
```

Finally, the main() function initializes GraphAIAgent with a Gemini API key and runs it on a set of test queries covering technical, strategic, and creative tasks. It prints each query and the AI-generated response. This shows how the LangGraph agent handles different types of input using Gemini's reasoning and generation capabilities.
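Running main() requires a valid API key and spends model calls on every pass. To see how the loop terminates without any API calls, you can trace the graph's control flow in plain Python, with stubbed validation verdicts standing in for Gemini.

This is a hypothetical dry-run harness, not LangGraph itself, and `run_workflow` is an illustrative name. It makes two simplifying assumptions:

- The research decision is fixed for the whole run.
- The iteration counter advances once per validation round.

```python
from typing import List


def run_workflow(
    validator_verdicts: List[str],
    needs_research: bool,
    max_iterations: int = 3,
) -> List[str]:
    """Trace the node order of the router -> analyzer -> ... -> validator loop.

    validator_verdicts supplies the stubbed verdict for each validation round;
    when the stubs run out, the verdict defaults to COMPLETE.
    Returns the sequence of visited node names.
    """
    trace = ["router"]
    iteration = 0
    verdicts = iter(validator_verdicts)
    while True:
        trace.append("analyzer")
        if needs_research:
            trace.append("researcher")
        trace.append("responder")
        trace.append("validator")
        iteration += 1  # one increment per validation round
        verdict = next(verdicts, "COMPLETE")
        # Stop at the iteration cap or when the verdict does not ask for more work
        if iteration >= max_iterations or "NEEDS_IMPROVEMENT" not in verdict:
            return trace


print(run_workflow(["NEEDS_IMPROVEMENT", "COMPLETE"], needs_research=False))
# → ['router', 'analyzer', 'responder', 'validator', 'analyzer', 'responder', 'validator']
```

Feeding it a long list of `NEEDS_IMPROVEMENT` verdicts shows the cap at work: the validator runs exactly `max_iterations` times. If the counter never advanced inside the loop, LangGraph would instead keep cycling until it hit its recursion limit.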

In conclusion, this agent combines LangGraph's structured state machine with Gemini's conversational intelligence. The result demonstrates an approach to AI workflow engineering that mirrors human cycles of inquiry, analysis, and verification. The tutorial provides a modular, extensible template for building advanced AI agents that handle diverse tasks autonomously, from answering complex queries to generating creative content.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost. It stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easy for a wide audience to understand. The platform draws over 2 million monthly views, illustrating its popularity among readers.

2025-06-05 21:04:00