Google DeepMind Releases Gemma 3n: A Compact, High-Efficiency Multimodal AI Model for Real-Time On-Device Use

Researchers are reimagining how models operate as demand grows for faster, smarter, and more private AI on phones, tablets, and laptops. The next generation of AI is not only lighter and faster; it is local. By embedding intelligence directly into devices, developers achieve near-instant responsiveness, reduce memory requirements, and put privacy back into users' hands. With mobile hardware advancing rapidly, the race is on to build compact, lightning-fast models that are intelligent enough to redefine everyday digital experiences.

A major concern is delivering high-quality, multimodal intelligence within the constrained environments of mobile devices. Unlike cloud-based systems that have access to extensive computational power, on-device models must run within strict RAM and processing limits. Multimodal AI, capable of interpreting text, images, audio, and video, typically requires large models that most mobile devices cannot handle efficiently. Cloud dependency also introduces latency and privacy concerns, making it essential to design models that can run locally without sacrificing performance.

Earlier models such as Gemma 3 and Gemma 3 QAT tried to bridge this gap by reducing size while maintaining performance. Designed for use on cloud or desktop GPUs, they significantly improved model efficiency. However, these models still required robust hardware and could not fully overcome the memory and responsiveness constraints of mobile platforms. Although they supported advanced functions, they often involved compromises that limited real-time use on a smartphone.

Researchers from Google and Google DeepMind introduced Gemma 3n. The architecture behind Gemma 3n has been optimized for mobile-first deployment, targeting performance across Android and Chrome platforms. It also forms the basis for the next version of Gemini Nano. The innovation is a significant leap forward: it supports multimodal AI functionality with a much lower memory footprint while maintaining real-time response capabilities. This is the first open model built on this shared infrastructure, and it is available to developers in preview, allowing immediate experimentation.

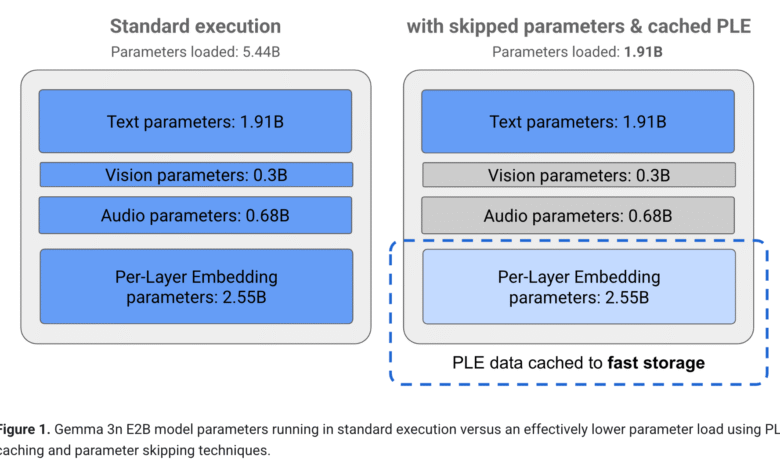

The core innovation in Gemma 3n is the application of Per-Layer Embeddings (PLE), a method that drastically reduces RAM usage. While the raw model sizes are 5 billion and 8 billion parameters, the models run with memory footprints comparable to 2 billion and 4 billion parameter models. Dynamic memory consumption is just 2 GB for the 5B model and 3 GB for the 8B model. Gemma 3n also uses a nested model configuration, in which the model with a 4B active memory footprint contains a 2B submodel trained with a technique known as MatFormer. This lets developers switch performance modes dynamically without loading separate models. Further advances include KVC (key-value cache) sharing and activation quantization, which reduce latency and increase response speed. For example, response time on mobile improved by 1.5x compared to Gemma 3 4B, with better output quality.
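The nested-submodel idea can be illustrated with a toy feed-forward block. The following is a minimal sketch, not Gemma 3n's implementation: it assumes only that a smaller submodel reuses a prefix of the larger model's hidden units, which is the general MatFormer idea. The class name `NestedFFN`, the parameter `active_hidden`, and all sizes are hypothetical.

```python
import random


def relu(value):
    return value if value > 0.0 else 0.0


class NestedFFN:
    """Toy feed-forward block whose first k hidden units form a smaller sub-model.

    Hypothetical illustration only; real models use far larger, trained weights.
    """

    def __init__(self, d_model, d_hidden, seed=0):
        rng = random.Random(seed)
        # w_in[j] holds the input weights of hidden unit j,
        # w_out[j] holds that unit's contribution to each output dimension.
        self.w_in = [[rng.uniform(-1, 1) for _ in range(d_model)]
                     for _ in range(d_hidden)]
        self.w_out = [[rng.uniform(-1, 1) for _ in range(d_model)]
                      for _ in range(d_hidden)]
        self.d_model = d_model
        self.d_hidden = d_hidden

    def forward(self, x, active_hidden=None):
        # Use every hidden unit by default, or only the first `active_hidden`.
        k = self.d_hidden if active_hidden is None else active_hidden
        if not 0 < k <= self.d_hidden:
            raise ValueError("active_hidden must be in 1..d_hidden")
        out = [0.0] * self.d_model
        for j in range(k):
            h = relu(sum(w * xi for w, xi in zip(self.w_in[j], x)))
            for i in range(self.d_model):
                out[i] += h * self.w_out[j][i]
        return out


ffn = NestedFFN(d_model=4, d_hidden=8)
x = [0.5, -1.0, 0.25, 2.0]
full_output = ffn.forward(x)                    # larger configuration
small_output = ffn.forward(x, active_hidden=4)  # nested sub-model, same weights
```

Because both configurations read from the same weight arrays, switching between them requires no second set of weights in memory, which is the property the article attributes to the nested design.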

Gemma 3n's performance metrics reinforce its suitability for mobile deployment. It excels at automatic speech recognition and translation, allowing seamless conversion of speech into translated text. On multilingual benchmarks such as WMT24++ (ChrF), it scores 50.1%, highlighting its strength in Japanese, German, Korean, Spanish, and French. Its Mix'n'Match capability allows the creation of submodels optimized for different combinations of quality and latency, giving developers further customization. The architecture supports interleaved inputs across modalities (text, audio, images, and video), allowing more natural and context-rich interactions. It also works offline, which ensures privacy and reliability even without a network connection. Use cases include visual and auditory feedback, context-aware content generation, and advanced applications.
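For readers unfamiliar with the ChrF metric behind the WMT24++ figure, the sketch below shows the basic idea: an F-score over overlapping character n-grams, reported on a 0 to 100 scale. This is a simplified illustration, assuming n-gram orders 1 to 6 and a recall weight of beta = 2; it omits the whitespace handling and other details of production scorers, so its numbers will not match official benchmark tooling. The function names are hypothetical.

```python
from collections import Counter


def char_ngrams(text, n):
    """Count every character n-gram of length n in text."""
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def chrf(hypothesis, reference, max_n=6, beta=2.0):
    """Simplified ChrF: F-beta over averaged character n-gram precision and recall."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp = char_ngrams(hypothesis, n)
        ref = char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # skip orders longer than either string
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return 100.0 * (1 + beta ** 2) * p * r / (beta ** 2 * p + r)


score = chrf("the cat sat on the mat", "the cat sat on a mat")
```

With beta = 2, recall counts more heavily than precision, so a translation that covers most of the reference's characters scores well even if it contains some extra text.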

Several key takeaways from the research on Gemma 3n:

- Built in collaboration between Google, DeepMind, Qualcomm, MediaTek, and Samsung System LSI. Designed for mobile-first deployment.

- Raw model sizes of 5B and 8B parameters, with operational memory footprints of 2 GB and 3 GB, respectively, using Per-Layer Embeddings (PLE).

- 1.5x faster response on mobile than Gemma 3 4B. Multilingual benchmark score of 50.1% on WMT24++ (ChrF).

- Accepts and understands audio, text, images, and video, enabling complex, interleaved multimodal inputs.

- Supports dynamic trade-offs using MatFormer training with nested submodels and Mix'n'Match capabilities.

- Works without an internet connection, ensuring privacy and reliability.

- Preview is available via Google AI Studio and Google AI Edge, with text and image processing capabilities.

In conclusion, this innovation provides a clear path toward making high-performing AI portable and private. By tackling RAM constraints through innovative architecture and enhancing multilingual and multimodal capabilities, the researchers offer a viable solution for bringing AI directly to everyday devices. Flexible submodel switching, offline readiness, and fast response times mark a comprehensive approach to mobile-first AI. The research balances computational efficiency, user privacy, and dynamic responsiveness. The result is a system capable of delivering real-time AI experiences without sacrificing capability or versatility, fundamentally expanding what users can expect from on-device intelligence.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has more than 2 million monthly views, which shows its popularity with readers.

2025-05-22 04:03:00