Build a Low-Footprint AI Coding Assistant with Mistral Devstral

This ultra-light Mistral Devstral tutorial is a Colab-friendly guide designed for users who face disk space constraints. Running large language models such as Mistral's can be challenging in environments with limited storage and memory, but this tutorial shows how to deploy the powerful devstral-small model anyway. Through aggressive quantization with bitsandbytes, cache management, and efficient token generation, it walks through building a lightweight, interactive, disk-conscious coding assistant. Whether you are debugging code, writing small tools, or prototyping on the go, this setup aims for maximum performance with a minimal footprint.

!pip install -q kagglehub mistral-common bitsandbytes transformers --no-cache-dir
!pip install -q accelerate torch --no-cache-dir

import shutil
import os
import gc

The tutorial begins by installing the essential lightweight packages, kagglehub, mistral-common, bitsandbytes, and transformers, with the --no-cache-dir flag so that pip keeps no download cache and disk usage stays low. It also installs accelerate and torch for efficient model loading and inference. To free up further space, any pre-existing cache or temporary directories are cleared using Python's shutil, os, and gc modules.
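Before loading anything heavy, it can help to confirm that the installs above actually succeeded. The following is a minimal sketch, not part of the original notebook: the helper name missing_packages is hypothetical, and it relies only on the standard-library importlib.util.find_spec.

```python
import importlib.util


def missing_packages(module_names):
    """Return the top-level module names that cannot be found in this environment."""
    return [
        name for name in module_names
        if importlib.util.find_spec(name) is None
    ]


# Import names (not pip names) of the packages installed above.
required = [
    "kagglehub", "mistral_common", "bitsandbytes",
    "transformers", "accelerate", "torch",
]

missing = missing_packages(required)
if missing:
    print(f"Missing modules: {missing}")
else:
    print("All required modules are importable.")
```

Note that the pip name mistral-common maps to the import name mistral_common, which is why the list uses underscores.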

def cleanup_cache():
    """Clean up unnecessary files to save disk space"""
    cache_dirs = ['/root/.cache', '/tmp/kagglehub']
    for cache_dir in cache_dirs:
        if os.path.exists(cache_dir):
            # ignore_errors=True skips files that cannot be removed
            shutil.rmtree(cache_dir, ignore_errors=True)
    # Release unreferenced Python objects as well
    gc.collect()

cleanup_cache()
print("🧹 Disk space optimized!")

To keep the disk footprint minimal throughout execution, the cleanup_cache() function is defined to remove redundant cache directories such as /root/.cache and /tmp/kagglehub. This proactive cleanup frees space before and after the key operations. Once it has been invoked, the script prints a confirmation that disk space has been optimized, reinforcing the tutorial's focus on resource efficiency.
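To see how much space a cleanup pass actually recovers, the same idea can be extended to measure a directory before deleting it. This is a sketch under stated assumptions rather than the tutorial's own code: dir_size_bytes and remove_and_report are hypothetical helper names, and the demonstration runs on a throwaway temporary directory instead of a real cache.

```python
import os
import shutil
import tempfile


def dir_size_bytes(path):
    """Sum the sizes of all regular files under path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            if os.path.isfile(file_path):
                total += os.path.getsize(file_path)
    return total


def remove_and_report(paths):
    """Delete each existing directory and return the total bytes measured beforehand."""
    freed = 0
    for path in paths:
        if os.path.isdir(path):
            freed += dir_size_bytes(path)
            shutil.rmtree(path, ignore_errors=True)
    return freed


# Demonstrate on a throwaway directory rather than a real cache.
demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, "blob.bin"), "wb") as fh:
    fh.write(b"\0" * 1024)

freed_bytes = remove_and_report([demo_dir])
print(f"Freed {freed_bytes} bytes")
```

Measuring first and deleting second keeps the report honest about what was on disk, although with ignore_errors=True a file that could not be removed would still be counted.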

import warnings
warnings.filterwarnings("ignore")

import torch
import kagglehub
from mistral_common.protocol.instruct.messages import UserMessage
from mistral_common.protocol.instruct.request import ChatCompletionRequest
from mistral_common.tokens.tokenizers.mistral import MistralTokenizer
from transformers import AutoModelForCausalLM, BitsAndBytesConfig

To keep the output free of distracting warning messages, we suppress all runtime warnings using Python's warnings module. We then import the libraries needed to interact with the model: torch for tensor computation, kagglehub for downloading the model, and transformers for loading the quantized LLM. The Mistral-specific classes UserMessage, ChatCompletionRequest, and MistralTokenizer are also imported to handle tokenization and request formatting tailored to Devstral.
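Silencing every warning globally is convenient in a demo notebook, but it can also hide messages you would want to see. As an alternative, the sketch below scopes the suppression to a single block using the standard-library warnings.catch_warnings context manager. The function noisy_step is a hypothetical stand-in for any library call that emits a warning.

```python
import warnings


def noisy_step():
    """Stand-in for a library call that emits a warning."""
    warnings.warn("this API is deprecated", DeprecationWarning)
    return "done"


# Warnings are silenced only inside this with-block.
with warnings.catch_warnings():
    warnings.simplefilter("ignore")
    result = noisy_step()

# In a separate block, warnings can be recorded instead of ignored.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    noisy_step()

print(result, len(caught))
```

The filter state is restored when each with-block exits, so the rest of the notebook keeps its normal warning behavior.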

class LightweightDevstral:
    def __init__(self):
        print("📦 Downloading model (streaming mode)...")
        self.model_path = kagglehub.model_download(
            'mistral-ai/devstral-small-2505/Transformers/devstral-small-2505/1',
            force_download=False
        )

        # 4-bit NF4 quantization with double quantization to shrink the weights
        quantization_config = BitsAndBytesConfig(
            bnb_4bit_compute_dtype=torch.float16,
            bnb_4bit_quant_type="nf4",
            bnb_4bit_use_double_quant=True,
            bnb_4bit_quant_storage=torch.uint8,
            load_in_4bit=True
        )

        print("⚡ Loading ultra-compressed model...")
        self.model = AutoModelForCausalLM.from_pretrained(
            self.model_path,
            torch_dtype=torch.float16,
            device_map="auto",
            quantization_config=quantization_config,
            low_cpu_mem_usage=True,
            trust_remote_code=True
        )

        # Load the tokenizer before the cache is cleared
        self.tokenizer = MistralTokenizer.from_file(f'{self.model_path}/tekken.json')

        cleanup_cache()
        print("✅ Lightweight assistant ready! (~2GB disk usage)")

    def generate(self, prompt, max_tokens=400):
        """Memory-efficient generation"""
        tokenized = self.tokenizer.encode_chat_completion(
            ChatCompletionRequest(messages=[UserMessage(content=prompt)])
        )

        input_ids = torch.tensor([tokenized.tokens])
        if torch.cuda.is_available():
            input_ids = input_ids.to(self.model.device)

        # Read the EOS id from the underlying tokenizer to use as the pad token
        eos_id = self.tokenizer.instruct_tokenizer.tokenizer.eos_id

        with torch.inference_mode():
            output = self.model.generate(
                input_ids=input_ids,
                max_new_tokens=max_tokens,
                temperature=0.6,
                top_p=0.85,
                do_sample=True,
                pad_token_id=eos_id,
                use_cache=True
            )[0]

        del input_ids
        if torch.cuda.is_available():
            torch.cuda.empty_cache()

        # Drop the prompt tokens and decode only the newly generated ones
        return self.tokenizer.decode(output[len(tokenized.tokens):].tolist())

print("🚀 Initializing lightweight AI assistant...")
assistant = LightweightDevstral()

We define the LightweightDevstral class, the core component of the tutorial, which handles model loading and text generation in a resource-efficient manner. It begins by downloading the devstral-small-2505 model with kagglehub, skipping the download when the files are already present. The model is then loaded with aggressive 4-bit quantization via BitsAndBytesConfig, which greatly reduces memory and disk usage while still allowing performant inference. A custom tokenizer is initialized from a local JSON file (tekken.json), and the cache is cleared immediately afterward. The generate method employs memory-safe practices such as torch.inference_mode() and torch.cuda.empty_cache() to produce responses efficiently, making this assistant suitable even for environments with tight hardware limits.
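To get a rough feel for why 4-bit loading with double quantization saves memory, the arithmetic can be sketched in a few lines. This is a back-of-the-envelope estimate, not a measurement. It assumes a block size of 64 weights per scale and 256 scales per second-level group (the figures described for NF4 double quantization in the QLoRA work), it uses a hypothetical 1-billion-parameter model rather than Devstral's actual size, and it ignores layers that stay unquantized as well as activations and the KV cache.

```python
def nf4_bits_per_param(block_size=64, double_quant=True, second_block_size=256):
    """Approximate storage cost per weight for blockwise 4-bit quantization."""
    if double_quant:
        # 8-bit quantized scales, plus one 32-bit scale per group of scales.
        overhead = 8 / block_size + 32 / (block_size * second_block_size)
    else:
        # One 32-bit scale per block of weights.
        overhead = 32 / block_size
    return 4 + overhead


def estimate_weight_bytes(n_params, **kwargs):
    """Estimated bytes needed to store n_params quantized weights."""
    return n_params * nf4_bits_per_param(**kwargs) / 8


n_params = 1_000_000_000  # hypothetical parameter count, for illustration only
fp16_bytes = n_params * 2  # 16 bits per weight
nf4_bytes = estimate_weight_bytes(n_params)

print(f"fp16 weights:       {fp16_bytes / 1e9:.2f} GB")
print(f"nf4 + double quant: {nf4_bytes / 1e9:.2f} GB")
```

Under these assumptions the quantized weights need a little over a quarter of the fp16 size, and double quantization trims the per-weight overhead of the scales from 0.5 bits to roughly 0.127 bits.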

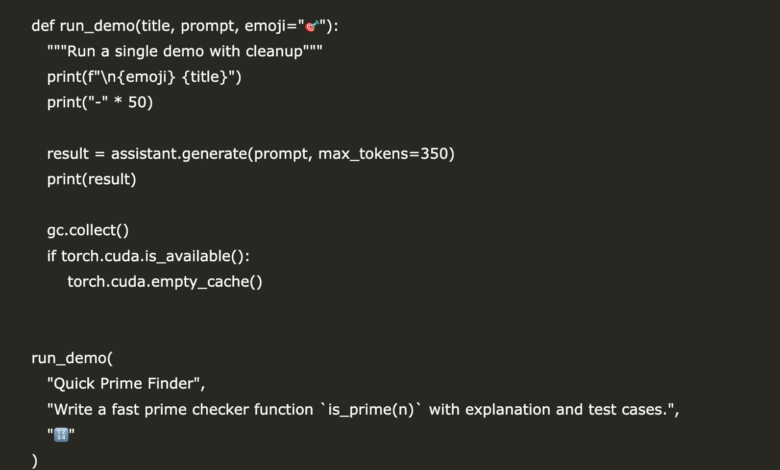

def run_demo(title, prompt, emoji="🎯"):
    """Run a single demo with cleanup"""
    print(f"\n{emoji} {title}")
    print("-" * 50)

    result = assistant.generate(prompt, max_tokens=350)
    print(result)

    gc.collect()
    if torch.cuda.is_available():
        torch.cuda.empty_cache()

run_demo(
    "Quick Prime Finder",
    "Write a fast prime checker function `is_prime(n)` with explanation and test cases.",
    "🔢"
)

run_demo(
    "Debug This Code",
    """Fix this buggy function and explain the issues:
```python
def avg_positive(numbers):
    total = sum([n for n in numbers if n > 0])
    return total / len([n for n in numbers if n > 0])
```""",
    "🐛"
)

run_demo(
    "Text Tool Creator",
    "Create a simple `TextAnalyzer` class with word count, char count, and palindrome check methods.",
    "🛠️"
)

Here we showcase the model's coding abilities through a compact demo suite built on the run_demo() function. Each demo sends a prompt to the Devstral assistant and prints the generated response, followed by memory cleanup to prevent build-up across multiple runs. The examples include writing an efficient prime-checking function, debugging a Python snippet with logical flaws, and building a small TextAnalyzer class. These demonstrations highlight the model's usefulness as a lightweight, disk-conscious coding assistant capable of generating and explaining code.
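For reference, the flaw in the avg_positive snippet from the debugging demo is that it divides by zero whenever the input contains no positive numbers, and it also builds the filtered list twice. One possible fix is sketched below. This is our own illustration, not the model's output, and returning 0.0 for the empty case is an assumption: raising an error or returning None would be equally defensible.

```python
def avg_positive(numbers):
    """Average of the strictly positive values, or 0.0 if there are none."""
    positives = [n for n in numbers if n > 0]
    if not positives:
        # Avoid ZeroDivisionError when nothing passes the filter.
        return 0.0
    return sum(positives) / len(positives)


print(avg_positive([1, -2, 3]))
print(avg_positive([-1, -5]))
print(avg_positive([]))
```

A response from the assistant can be checked against a small reference like this one to judge whether it caught the same edge case.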

def quick_coding():
    """Lightweight interactive session"""
    print("\n🎮 QUICK CODING MODE")
    print("=" * 40)
    print("Enter short coding prompts (type 'exit' to quit)")

    session_count = 0
    max_sessions = 5

    while session_count < max_sessions:
        prompt = input(f"\n[{session_count+1}/{max_sessions}] Your prompt: ")

        if prompt.lower() in ['exit', 'quit', '']:
            break

        try:
            result = assistant.generate(prompt, max_tokens=300)
            print("💡 Solution:")
            # Truncate long answers to keep the notebook output short
            print(result[:500])

            gc.collect()
            if torch.cuda.is_available():
                torch.cuda.empty_cache()

        except Exception as e:
            print(f"❌ Error: {str(e)[:100]}...")

        # Failed prompts also count toward the session limit
        session_count += 1

    print("\n✅ Session complete! Memory cleaned.")

We introduce Quick Coding Mode, a lightweight interactive interface that lets users submit short coding prompts directly to the Devstral assistant. Designed to limit memory usage, it caps the interaction at five prompts, each followed by aggressive memory cleanup to keep the session responsive in low-resource environments. The assistant replies with concise, truncated code suggestions, which makes this mode well suited to rapid prototyping, debugging, or exploring coding concepts on the fly, all without overwhelming the notebook's disk or memory capacity.
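The capped-loop pattern is easier to test when the model call and the input source are passed in as arguments. The sketch below illustrates that design with stand-ins. The names capped_session, fake_generate, and scripted are hypothetical, and no model is loaded.

```python
def capped_session(generate, read_prompt, max_turns=5, max_chars=500):
    """Run up to max_turns prompt/response rounds and return the replies."""
    replies = []
    for _turn in range(max_turns):
        prompt = read_prompt()
        if prompt.strip().lower() in {"exit", "quit", ""}:
            break
        try:
            # Truncate each reply, mirroring the 500-character cut above.
            replies.append(generate(prompt)[:max_chars])
        except Exception as exc:
            # A failed turn still counts toward the cap.
            replies.append(f"error: {exc}")
    return replies


def fake_generate(prompt):
    """Stand-in for assistant.generate; returns a canned answer."""
    return f"# solution for: {prompt}"


# Scripted prompts take the place of interactive input().
scripted = iter(["reverse a string", "sum a list", "exit", "never reached"])
replies = capped_session(fake_generate, lambda: next(scripted))
print(replies)
```

In the notebook, the same function could be called with assistant.generate and input as its two arguments, which keeps the loop logic separate from the model.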

def check_disk_usage():
    """Monitor disk usage"""
    import subprocess
    try:
        result = subprocess.run(['df', '-h', '/'], capture_output=True, text=True)
        lines = result.stdout.split('\n')
        if len(lines) > 1:
            # Second line of df output holds the figures for the root filesystem
            usage_line = lines[1].split()
            used = usage_line[2]
            available = usage_line[3]
            print(f"💾 Disk: {used} used, {available} available")
    except Exception:
        print("💾 Disk usage check unavailable")

print("\n🎉 Tutorial Complete!")
cleanup_cache()
check_disk_usage()

print("\n💡 Space-Saving Tips:")
print("• Model uses ~2GB vs original ~7GB+")
print("• Automatic cache cleanup after each use")
print("• Limited token generation to save memory")
print("• Use 'del assistant' when done to free ~2GB")
print("• Restart runtime if memory issues persist")

Finally, we provide a helpful cleanup routine and a disk usage monitor. Using the df -h command via Python's subprocess module, it displays how much disk space is used and available, confirming the lightweight footprint of the setup. After calling cleanup_cache() again to leave minimal residue, the script concludes with a set of practical space-saving tips.
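Parsing df output works on typical Linux runtimes, but the column layout can vary between systems. As a portable alternative, the standard library's shutil.disk_usage returns the same figures as integers. The sketch below uses it. The helper names format_gib and disk_summary are hypothetical, and the root path "/" is assumed to be the filesystem of interest.

```python
import shutil


def format_gib(num_bytes):
    """Render a byte count as gibibytes with one decimal place."""
    return f"{num_bytes / 2**30:.1f} GiB"


def disk_summary(path="/"):
    """Return total, used, and free bytes for the filesystem containing path."""
    usage = shutil.disk_usage(path)
    return {"total": usage.total, "used": usage.used, "free": usage.free}


summary = disk_summary()
print(f"💾 Disk: {format_gib(summary['used'])} used, "
      f"{format_gib(summary['free'])} free")
```

Because the values are plain integers, they can also be compared before and after a cleanup pass to check how much space was recovered.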

In conclusion, we can now take advantage of the capabilities of Mistral's Devstral model in space-constrained environments such as Google Colab without compromising usability or speed. The model loads in a highly compressed format, generates text efficiently, and frees memory promptly after use. With the interactive coding mode and the demo suite included, users can test their ideas quickly and smoothly.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, which shows its popularity among readers.


2025-06-25 09:48:00