Build a Powerful Multi-Tool AI Agent Using Nebius with Llama 3 and Real-Time Reasoning Tools

In this tutorial, we introduce an advanced AI agent built on the Nebius ecosystem, specifically its ChatNebius, NebiusEmbeddings, and NebiusRetriever components. The agent uses the Llama-3.3-70B-Instruct-fast model to generate high-quality responses and incorporates external functions such as Wikipedia search, contextual document retrieval, and safe mathematical calculation. By combining structured prompt design with LangChain's modular framework, this tutorial shows how to build a capable AI assistant that is easy to extend. Whether the task is a scientific question, a technology overview, or basic arithmetic, the agent showcases Nebius as a platform for building advanced AI systems.

!pip install -q langchain-nebius langchain-core langchain-community wikipedia

import os
import getpass
from typing import List, Dict, Any
import wikipedia
from datetime import datetime

We start by installing the core libraries, langchain-nebius, langchain-core, langchain-community, and wikipedia, which are essential for building a feature-rich AI assistant. We then import the necessary modules, such as os, getpass, datetime, and the typing utilities, along with the wikipedia package for accessing external data.

from langchain_core.documents import Document
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough
from langchain_core.tools import tool
from langchain_nebius import ChatNebius, NebiusEmbeddings, NebiusRetriever

# Prompt for the key only when it is not already set in the environment
if "NEBIUS_API_KEY" not in os.environ:
    os.environ["NEBIUS_API_KEY"] = getpass.getpass("Enter your Nebius API key: ")

We import the core LangChain and Nebius components that handle documents, prompt templates, output parsing, and tool integration. This sets up the key classes: ChatNebius for language modeling, NebiusEmbeddings for vector representations, and NebiusRetriever for semantic search. The user's Nebius API key is read securely with getpass and used to authenticate subsequent API calls.
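The key-handling step above can be wrapped in a small helper so that it is reusable and testable. This is only a sketch: `ensure_api_key` and its `prompt_fn` parameter are hypothetical names introduced here for illustration and are not part of langchain-nebius.

```python
import os
import getpass
from typing import Callable


def ensure_api_key(
    env_var: str = "NEBIUS_API_KEY",
    prompt_fn: Callable[[str], str] = getpass.getpass,
) -> str:
    """Return the key stored in env_var, prompting for it only if it is missing.

    ensure_api_key and prompt_fn are hypothetical names used for this sketch.
    """
    key = os.environ.get(env_var)
    if not key:
        # getpass does not echo the typed characters back to the screen.
        key = prompt_fn(f"Enter your {env_var}: ").strip()
        if not key:
            raise ValueError(f"{env_var} must not be empty")
        os.environ[env_var] = key
    return key
```

Passing the prompt function as a parameter makes it possible to exercise the helper without an interactive terminal, for example with `ensure_api_key(prompt_fn=lambda _: "my-key")`.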

class AdvancedNebiusAgent:
    """Advanced AI Agent with retrieval, reasoning, and external tool capabilities"""

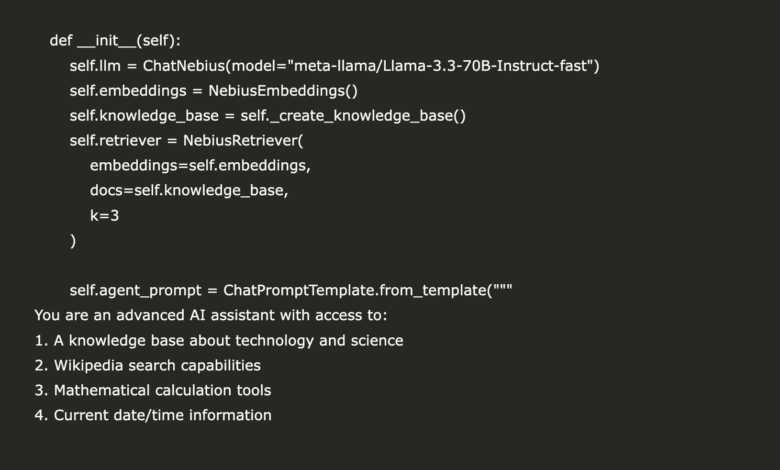

    def __init__(self):
        # Chat model, embedding model, and retriever all come from langchain-nebius
        self.llm = ChatNebius(model="meta-llama/Llama-3.3-70B-Instruct-fast")
        self.embeddings = NebiusEmbeddings()
        self.knowledge_base = self._create_knowledge_base()
        self.retriever = NebiusRetriever(
            embeddings=self.embeddings,
            docs=self.knowledge_base,
            k=3
        )

        # Prompt template filled with retrieved context, tool output, date, and the query
        self.agent_prompt = ChatPromptTemplate.from_template("""
You are an advanced AI assistant with access to:
1. A knowledge base about technology and science
2. Wikipedia search capabilities
3. Mathematical calculation tools
4. Current date/time information

Context from knowledge base:
{context}

External tool results:
{tool_results}

Current date: {current_date}

User Query: {query}

Instructions:
- Use the knowledge base context when relevant
- If you need additional information, mention what external sources would help
- Be comprehensive but concise
- Show your reasoning process
- If calculations are needed, break them down step by step

Response:
""")

    def _create_knowledge_base(self) -> List[Document]:
        """Create a comprehensive knowledge base"""
        return [
            Document(
                page_content="Artificial Intelligence (AI) is transforming industries through ML, NLP, and computer vision. Key applications include autonomous vehicles, medical diagnosis, and financial trading.",
                metadata={"topic": "AI", "category": "technology"}
            ),
            Document(
                page_content="Quantum computing uses quantum mechanical phenomena like superposition and entanglement to process information. Companies like IBM, Google, and Microsoft are leading quantum research.",
                metadata={"topic": "quantum_computing", "category": "technology"}
            ),
            Document(
                page_content="Climate change is caused by greenhouse gas emissions, primarily CO2 from fossil fuels. Renewable energy sources are crucial for mitigation.",
                metadata={"topic": "climate", "category": "environment"}
            ),
            Document(
                page_content="CRISPR-Cas9 is a revolutionary gene editing technology that allows precise DNA modifications. It has applications in treating genetic diseases and improving crops.",
                metadata={"topic": "biotechnology", "category": "science"}
            ),
            Document(
                page_content="Blockchain technology enables decentralized, secure transactions without intermediaries. Beyond cryptocurrency, it has applications in supply chain, healthcare, and voting systems.",
                metadata={"topic": "blockchain", "category": "technology"}
            ),
            Document(
                page_content="Space exploration has advanced with reusable rockets, Mars rovers, and commercial space travel. SpaceX, Blue Origin, and NASA are pioneering new missions.",
                metadata={"topic": "space", "category": "science"}
            ),
            Document(
                page_content="Renewable energy costs have dropped dramatically. Solar & wind power are now cheaper than fossil fuels in many regions, driving global energy transition.",
                metadata={"topic": "renewable_energy", "category": "environment"}
            ),
            Document(
                page_content="5G networks provide ultra-fast internet speeds and low latency, enabling IoT devices, autonomous vehicles, and augmented reality applications.",
                metadata={"topic": "5G", "category": "technology"}
            )
        ]

    # @tool wraps each function in a LangChain tool object, so these take no `self`
    @tool
    def wikipedia_search(query: str) -> str:
        """Search Wikipedia for additional information"""
        try:
            search_results = wikipedia.search(query, results=3)
            if not search_results:
                return f"No Wikipedia results found for '{query}'"
            # Use the top hit; disambiguation and lookup errors fall through to the except below
            page = wikipedia.page(search_results[0])
            summary = wikipedia.summary(search_results[0], sentences=3)
            return f"Wikipedia: {page.title}\n{summary}\nURL: {page.url}"
        except Exception as e:
            return f"Wikipedia search error: {str(e)}"

    @tool
    def calculate(expression: str) -> str:
        """Perform mathematical calculations safely"""
        try:
            # Reject anything that is not a digit, an arithmetic operator, a dot, a parenthesis, or a space
            allowed_chars = set('0123456789+-*/.() ')
            if not all(c in allowed_chars for c in expression):
                return "Error: Only basic mathematical operations allowed"
            # Evaluate with builtins disabled as an extra precaution on top of the character filter
            result = eval(expression, {"__builtins__": {}}, {})
            return f"Calculation: {expression} = {result}"
        except Exception as e:
            return f"Calculation error: {str(e)}"

    def _format_docs(self, docs: List[Document]) -> str:
        """Format retrieved documents for context"""
        if not docs:
            return "No relevant documents found in knowledge base."
        formatted = []
        for i, doc in enumerate(docs, 1):
            formatted.append(f"{i}. {doc.page_content}")
        # One numbered document per line
        return "\n".join(formatted)

    def _get_current_date(self) -> str:
        """Get current date and time"""
        return datetime.now().strftime("%Y-%m-%d %H:%M:%S")

    def process_query(self, query: str, use_wikipedia: bool = False,
                      calculate_expr: str = None) -> str:
        """Process a user query with optional external tools"""
        # Retrieve the most relevant knowledge-base documents for the query
        relevant_docs = self.retriever.invoke(query)
        context = self._format_docs(relevant_docs)

        tool_results = []

        if use_wikipedia:
            wiki_keywords = self._extract_keywords(query)
            if wiki_keywords:
                # Objects created with @tool are run through .invoke() with a dict of arguments
                wiki_result = self.wikipedia_search.invoke({"query": wiki_keywords})
                tool_results.append(f"Wikipedia Search: {wiki_result}")

        if calculate_expr:
            calc_result = self.calculate.invoke({"expression": calculate_expr})
            tool_results.append(f"Calculation: {calc_result}")

        tool_results_str = "\n".join(tool_results) if tool_results else "No external tools used"

        # Build the chain: fill the prompt variables, call the LLM, return plain text
        chain = (
            {
                "context": lambda x: context,
                "tool_results": lambda x: tool_results_str,
                "current_date": lambda x: self._get_current_date(),
                "query": RunnablePassthrough()
            }
            | self.agent_prompt
            | self.llm
            | StrOutputParser()
        )

        return chain.invoke(query)

    def _extract_keywords(self, query: str) -> str:
        """Extract key terms for Wikipedia search"""
        important_words = []
        stop_words = {'what', 'how', 'why', 'when', 'where', 'is', 'are', 'the', 'a', 'an'}
        words = query.lower().split()
        for word in words:
            # Keep words longer than three characters that are not stop words
            if word not in stop_words and len(word) > 3:
                important_words.append(word)
        # Use at most the first three keywords as the search string
        return ' '.join(important_words[:3])

    def interactive_session(self):
        """Run an interactive session with the agent"""
        print("🤖 Advanced Nebius AI Agent Ready!")
        print("Features: Knowledge retrieval, Wikipedia search, calculations")
        print("Commands: 'wiki:' for Wikipedia, 'calc:' for math")
        print("Type 'quit' to exit\n")

        while True:
            user_input = input("You: ").strip()
            if user_input.lower() == 'quit':
                print("Goodbye!")
                break

            use_wiki = False
            calc_expr = None

            # A 'wiki:' prefix enables Wikipedia search; 'calc:' passes an expression to the calculator
            if user_input.startswith('wiki:'):
                use_wiki = True
                user_input = user_input[5:].strip()
            elif user_input.startswith('calc:'):
                parts = user_input.split(':', 1)
                if len(parts) == 2:
                    calc_expr = parts[1].strip()
                    user_input = f"Calculate {calc_expr}"

            try:
                response = self.process_query(user_input, use_wiki, calc_expr)
                print(f"\n🤖 Agent: {response}\n")
            except Exception as e:
                print(f"Error: {e}\n")

The core of the implementation is the AdvancedNebiusAgent class, which orchestrates reasoning, retrieval, and tool integration. It initializes a high-performance LLM served by Nebius (meta-llama/Llama-3.3-70B-Instruct-fast), sets up a semantic retriever over embedded documents, and builds a small knowledge base covering topics such as AI, quantum computing, blockchain, and more. A dynamic prompt template guides the agent's responses by including the retrieved context, the external tool outputs, and the current date. Two built-in tools, wikipedia_search and calculate, extend the agent with access to external encyclopedic knowledge and safe arithmetic, respectively. The process_query method ties everything together: it invokes a chain that combines context, tool results, and reasoning to produce informative, multi-source answers. An optional interactive session allows real-time conversations with the agent and recognizes the special prefixes wiki: and calc: to activate external tool support.
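The calculate tool relies on a character whitelist followed by eval. That filter still lets through expressions such as 9**9**9 (built only from allowed characters), which can consume a great deal of CPU and memory. As an alternative, the sketch below walks the parsed syntax tree and permits only +, -, *, / and parentheses. Note that this swaps in a different technique from the one used in the tutorial, and `safe_calculate` is a hypothetical name introduced here.

```python
import ast
import operator

# Whitelisted operators; every other node type is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_calculate(expression: str) -> float:
    """Evaluate +, -, *, / and parentheses without calling eval().

    safe_calculate is a hypothetical helper, not part of the tutorial's agent.
    """

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant):
            # Accept plain numbers only (bool is a subclass of int, so exclude it).
            if isinstance(node.value, (int, float)) and not isinstance(node.value, bool):
                return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError("Only basic arithmetic is allowed")

    return _eval(ast.parse(expression, mode="eval"))


print(safe_calculate("20 * 1.25"))  # 25.0
```

Because exponentiation, names, and function calls are not in the whitelist, inputs such as "2 ** 10" or "__import__('os')" raise ValueError instead of being executed. A function like this could replace the eval call inside calculate if stricter validation is wanted.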

if __name__ == "__main__":
    agent = AdvancedNebiusAgent()

    demo_queries = [
        "What is artificial intelligence and how is it being used?",
        "Tell me about quantum computing companies",
        "How does climate change affect renewable energy adoption?"
    ]

    print("=== Nebius AI Agent Demo ===\n")

    # Knowledge-base retrieval only
    for i, query in enumerate(demo_queries, 1):
        print(f"Demo {i}: {query}")
        response = agent.process_query(query)
        print(f"Response: {response}\n")
        print("-" * 50)

    # Retrieval plus a Wikipedia lookup
    print("\nDemo with Wikipedia:")
    response_with_wiki = agent.process_query(
        "What are the latest developments in space exploration?",
        use_wikipedia=True
    )
    print(f"Response: {response_with_wiki}\n")

    # Retrieval plus the calculator tool
    print("Demo with calculation:")
    response_with_calc = agent.process_query(
        "If solar panel efficiency improved by 25%, what would be the new efficiency if current is 20%?",
        calculate_expr="20 * 1.25"
    )
    print(f"Response: {response_with_calc}\n")

Finally, we showcase the agent's capabilities through a set of demo queries. The script first instantiates AdvancedNebiusAgent, then loops over predefined prompts about AI, quantum computing, and climate change to demonstrate retrieval. Next, it runs a Wikipedia-enhanced query about space exploration, which supplements the knowledge base with live information. Lastly, it runs a math scenario involving solar panel efficiency to validate the calculation tool. Together, these demos show how Nebius, combined with LangChain and well-structured prompts, enables intelligent, multi-source query handling in a real-world assistant.
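To see what the two tool-assisted demos actually hand to the tools, the following offline sketch reproduces the keyword extraction and the arithmetic without calling Nebius or Wikipedia. The standalone `extract_keywords` function is a copy of the agent's `_extract_keywords` logic written for illustration; the `max_words` parameter is an added assumption that defaults to the tutorial's limit of three.

```python
STOP_WORDS = {'what', 'how', 'why', 'when', 'where', 'is', 'are', 'the', 'a', 'an'}


def extract_keywords(query: str, max_words: int = 3) -> str:
    """Standalone copy of the agent's _extract_keywords logic (illustrative)."""
    words = [
        word for word in query.lower().split()
        if word not in STOP_WORDS and len(word) > 3
    ]
    return " ".join(words[:max_words])


# Search string sent to Wikipedia for the space exploration demo
wiki_terms = extract_keywords("What are the latest developments in space exploration?")
print(wiki_terms)  # latest developments space

# Expression evaluated for the solar panel demo: a 25% relative improvement on 20%
new_efficiency = 20 * 1.25
print(new_efficiency)  # 25.0
```

The trace shows that the three-word limit drops "exploration" from the search string, so the Wikipedia lookup runs on "latest developments space". Stripping punctuation and extending the stop-word list would likely give more targeted searches.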

In conclusion, this Nebius-powered agent shows how to combine LLM-driven reasoning with structured retrieval and external tool use to build a capable, context-aware assistant. By integrating LangChain with the Nebius APIs, the agent draws on a curated knowledge base, fetches live data from Wikipedia, and handles arithmetic with safety checks. The tutorial's modular architecture, built from prompt templates, dynamic chains, and customizable inputs, gives developers a solid blueprint for building intelligent systems that go beyond static large language model (LLM) responses.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity among readers.

2025-06-27 07:30:00