Building and Optimizing Intelligent Machine Learning Pipelines with TPOT for Complete Automation and Performance Enhancement

We start this tutorial to show how to use Tpot To automate and improve automated learning pipelines in practice. By working directly at Google Colab, we ensure that the setting is lightweight, repetitive and accessible. We walk by downloading data, selecting a custom top scorer, designing the search space with advanced models such as XGBOST, and preparing health verification strategy. With our progress, we explore how the evolutionary algorithms in TPOT are looking for high -performance pipelines, providing American transparency through Pareto fronts and inspection points. verify Full codes here.

!pip -q install tpot==0.12.2 xgboost==2.0.3 scikit-learn==1.4.2 graphviz==0.20.3

import os, json, math, time, random, numpy as np, pandas as pd

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split, StratifiedKFold

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import make_scorer, f1_score, classification_report, confusion_matrix

from sklearn.pipeline import Pipeline

from tpot import TPOTClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.naive_bayes import GaussianNB

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier, ExtraTreesClassifier, GradientBoostingClassifier

from xgboost import XGBClassifier

SEED = 7

random.seed(SEED); np.random.seed(SEED); os.environ["PYTHONHASHSEED"]=str(SEED)We start installing libraries and importing all the basic units that support data processing, build models and improve pipelines. We set a fixed random seed to ensure our results remain repetitive every time we run the notebook. verify Full codes here.

X, y = load_breast_cancer(return_X_y=True, as_frame=True)

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y, random_state=SEED)

scaler = StandardScaler().fit(X_tr)

X_tr_s, X_te_s = scaler.transform(X_tr), scaler.transform(X_te)

def f1_cost_sensitive(y_true, y_pred):

return f1_score(y_true, y_pred, average="binary", pos_label=1)

cost_f1 = make_scorer(f1_cost_sensitive, greater_is_better=True)Here, we download and divide the breast cancer data set into training and testing groups while maintaining the separation balance. We unify the features of stability and then define a custom scorer based on F1, allowing us to assess pipelines with a focus on effectively capturing positive cases. verify Full codes here.

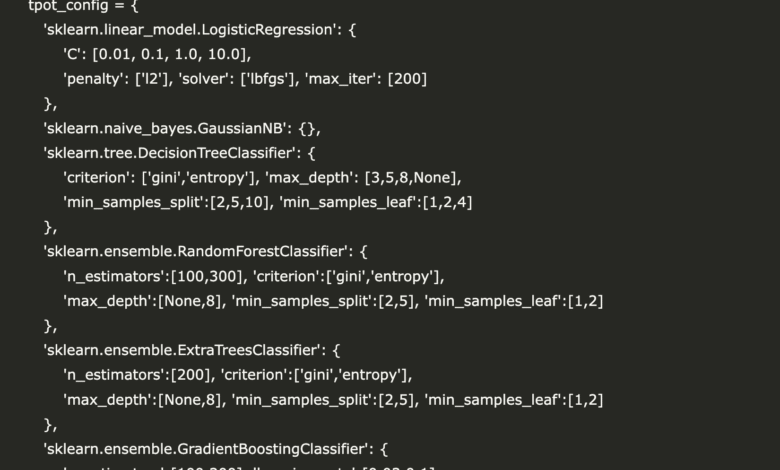

tpot_config = {

'sklearn.linear_model.LogisticRegression': {

'C': [0.01, 0.1, 1.0, 10.0],

'penalty': ['l2'], 'solver': ['lbfgs'], 'max_iter': [200]

},

'sklearn.naive_bayes.GaussianNB': {},

'sklearn.tree.DecisionTreeClassifier': {

'criterion': ['gini','entropy'], 'max_depth': [3,5,8,None],

'min_samples_split':[2,5,10], 'min_samples_leaf':[1,2,4]

},

'sklearn.ensemble.RandomForestClassifier': {

'n_estimators':[100,300], 'criterion':['gini','entropy'],

'max_depth':[None,8], 'min_samples_split':[2,5], 'min_samples_leaf':[1,2]

},

'sklearn.ensemble.ExtraTreesClassifier': {

'n_estimators':[200], 'criterion':['gini','entropy'],

'max_depth':[None,8], 'min_samples_split':[2,5], 'min_samples_leaf':[1,2]

},

'sklearn.ensemble.GradientBoostingClassifier': {

'n_estimators':[100,200], 'learning_rate':[0.03,0.1],

'max_depth':[2,3], 'subsample':[0.8,1.0]

},

'xgboost.XGBClassifier': {

'n_estimators':[200,400], 'max_depth':[3,5], 'learning_rate':[0.05,0.1],

'subsample':[0.8,1.0], 'colsample_bytree':[0.8,1.0],

'reg_lambda':[1.0,2.0], 'min_child_weight':[1,3],

'n_jobs':[0], 'tree_method':['hist'], 'eval_metric':['logloss'],

'gamma':[0,1]

}

}

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=SEED)We define a dedicated TPOT composition that combines linear models, tree -based learners, and XGBOST, using carefully selected metrics. We have also created a 5 -fold validation strategy, ensuring the test of each department nominated fairly through balanced splits for the data set. verify Full codes here.

t0 = time.time()

tpot = TPOTClassifier(

generations=5,

population_size=40,

offspring_size=40,

scoring=cost_f1,

cv=cv,

subsample=0.8,

n_jobs=-1,

config_dict=tpot_config,

verbosity=2,

random_state=SEED,

max_time_mins=10,

early_stop=3,

periodic_checkpoint_folder="tpot_ckpt",

warm_start=False

)

tpot.fit(X_tr_s, y_tr)

print(f"\n⏱️ First search took {time.time()-t0:.1f}s")

def pareto_table(tpot_obj, k=5):

rows=[]

for ind, meta in tpot_obj.pareto_front_fitted_pipelines_.items():

rows.append({

"pipeline": ind, "cv_score": meta['internal_cv_score'],

"size": len(str(meta['pipeline'])),

})

df = pd.DataFrame(rows).sort_values("cv_score", ascending=False).head(k)

return df.reset_index(drop=True)

pareto_df = pareto_table(tpot, k=5)

print("\nTop Pareto pipelines (cv):\n", pareto_df)

def eval_pipeline(pipeline, X_te, y_te, name):

y_hat = pipeline.predict(X_te)

f1 = f1_score(y_te, y_hat)

print(f"\n[{name}] F1(test) = {f1:.4f}")

print(classification_report(y_te, y_hat, digits=3))

print("\nEvaluating top pipelines on test:")

for i, (ind, meta) in enumerate(sorted(

tpot.pareto_front_fitted_pipelines_.items(),

key=lambda kv: kv[1]['internal_cv_score'], reverse=True)[:3], 1):

eval_pipeline(meta['pipeline'], X_te_s, y_te, name=f"Pareto#{i}")We launch an evolutionary search with TPOT, and CAP operating time for the practical application, and offers the checkpoint, allowing us to search for strong pipelines. Then we examine the Pareto interface to determine the best bodies, turn it into a compact schedule, and determine the leaders based on the degree of validity. Finally, we evaluate the best candidates in the suspended test to confirm the performance in the real world with F1 and the full classification report. verify Full codes here.

print("\n🔁 Warm-start for extra refinement...")

t1 = time.time()

tpot2 = TPOTClassifier(

generations=3, population_size=40, offspring_size=40,

scoring=cost_f1, cv=cv, subsample=0.8, n_jobs=-1,

config_dict=tpot_config, verbosity=2, random_state=SEED,

warm_start=True, periodic_checkpoint_folder="tpot_ckpt"

)

try:

tpot2._population = tpot._population

tpot2._pareto_front = tpot._pareto_front

except Exception:

pass

tpot2.fit(X_tr_s, y_tr)

print(f"⏱️ Warm-start extra search took {time.time()-t1:.1f}s")

best_model = tpot2.fitted_pipeline_ if hasattr(tpot2, "fitted_pipeline_") else tpot.fitted_pipeline_

eval_pipeline(best_model, X_te_s, y_te, name="BestAfterWarmStart")

export_path = "tpot_best_pipeline.py"

(tpot2 if hasattr(tpot2, "fitted_pipeline_") else tpot).export(export_path)

print(f"\n📦 Exported best pipeline to: {export_path}")

from importlib import util as _util

spec = _util.spec_from_file_location("tpot_best", export_path)

tbest = _util.module_from_spec(spec); spec.loader.exec_module(tbest)

reloaded_clf = tbest.exported_pipeline_

pipe = Pipeline([("scaler", scaler), ("model", reloaded_clf)])

pipe.fit(X_tr, y_tr)

eval_pipeline(pipe, X_te, y_te, name="ReloadedExportedPipeline")

report = {

"dataset": "sklearn breast_cancer",

"train_size": int(X_tr.shape[0]), "test_size": int(X_te.shape[0]),

"cv": "StratifiedKFold(5)",

"scorer": "custom F1 (binary)",

"search": {"gen_1": 5, "gen_2_warm": 3, "pop": 40, "subsample": 0.8},

"exported_pipeline_first_120_chars": str(reloaded_clf)[:120]+"...",

}

print("\n🧾 Model Card:\n", json.dumps(report, indent=2))We continue to search with a warm start, reuse the warm start to improve candidates and determine the best performance in our test set. We export the winning pipeline, re -download it alongside our commission to imitate publishing, and check its results. Finally, we create a compact model card to document the data set, search settings and the source of the source of the cloning.

In conclusion, we see how Tpot allows us to bypass the choice of experiment and error model and instead we rely on automatic, repetitive and interpretable improvement. We export the best pipeline, check the authenticity of the invisible data, and even re -download it for use, which confirms that the workflow is not just a pilot but ready for production. By combining cloning, flexibility, and interpretation, we end with a strong framework that we can apply with confidence in the most complex data collections and problems in the real world.

verify Full codes here. Do not hesitate to check our GitHub page for lessons, symbols and notebooks. Also, do not hesitate to follow us twitter And do not forget to join 100K+ ML Subreddit And subscribe to Our newsletter.

Asif Razzaq is the CEO of Marktechpost Media Inc .. As a pioneer and vision engineer, ASIF is committed to harnessing the potential of artificial intelligence for social goodness. His last endeavor is to launch the artificial intelligence platform, Marktechpost, which highlights its in -depth coverage of machine learning and deep learning news, which is technically sound and can be easily understood by a wide audience. The platform is proud of more than 2 million monthly views, which shows its popularity among the masses.

Don’t miss more hot News like this! AI/" target="_blank" rel="noopener">Click here to discover the latest in AI news!

2025-08-29 16:30:00