Qwen3-Coder-480B-A35B-Instruct launches and it ‘might be the best coding model yet’


The Qwen team at Chinese e-commerce giant Alibaba has done it again.

Just days after releasing, for free and under an open source license, what is now the top-performing non-reasoning large language model (LLM) in the world, full stop, even compared to proprietary AI models from well-funded US labs such as Google and OpenAI, in the form of the lengthily named Qwen3-235B-A22B-2507, this group of researchers has come out with another model.

That model is Qwen3-Coder-480B-A35B-Instruct, a new open source LLM focused on assisting with software development. It is designed to handle complex, multi-step coding workflows and can create full, functional applications in seconds or minutes.

The model is positioned to compete with proprietary offerings such as Claude Sonnet 4 on coding tasks and sets new benchmark scores among open models.

It is available on Hugging Face, GitHub, Qwen Chat, via Alibaba's Qwen API, and on a growing list of third-party coding platforms and AI tools.

Open source licensing means low cost and high optionality for enterprises

But unlike Claude and other proprietary models, Qwen3-Coder, as we'll call it for short, is available now under an open source Apache 2.0 license. That means any enterprise is free to take it without charge, download, modify, deploy and use it in commercial applications for employees or end customers without paying Alibaba or anyone else.

It also performs so well on third-party benchmarks and in anecdotal usage among AI power users for "vibe coding" (coding using natural language and without formal development processes) that at least one of them, LLM researcher Sebastian Raschka, wrote on X: "This might be the best coding model yet. General-purpose is cool, but if you want the best at coding, specialization wins. No free lunch."

Developers and enterprises interested in downloading it can find the code on the AI code sharing repository Hugging Face.

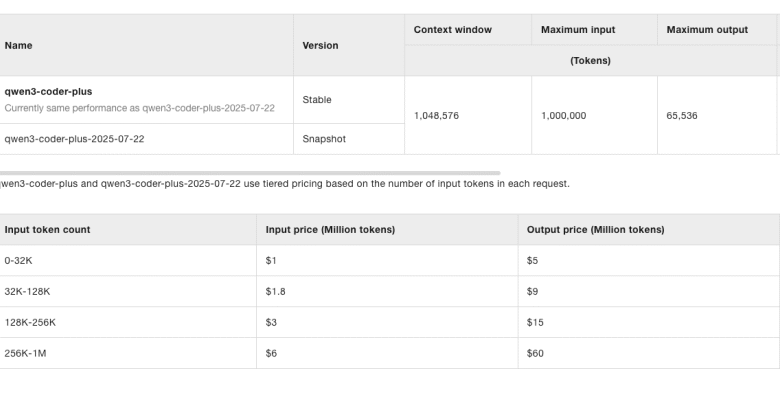

Enterprises that do not wish to host the model on their own or through third-party cloud inference providers, or lack the capacity to do so, can also use it directly through the Alibaba Cloud Qwen API, where per-million-token pricing is tiered by context length and rises to $6/$60 for the full million.

Model architecture and capabilities

According to documentation released online by the Qwen team, Qwen3-Coder is a Mixture-of-Experts (MoE) model with 480 billion total parameters, 35 billion active per query, and 8 active experts out of 160.

It supports a context length of 256K tokens natively, with extrapolation up to 1 million tokens using YaRN (Yet another RoPE extensioN, a technique used to extend a language model's context length beyond its original training limit by adjusting the Rotary Positional Embeddings (RoPE) used during attention computation).

It is designed as a causal language model, featuring 62 layers, 96 attention heads for queries, and 8 for key-value pairs. It is optimized for token-efficient, instruction-following tasks and does not produce <think> blocks in its output.
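The figures above imply a few ratios that are easier to see when computed. The following is a minimal sketch that uses only the numbers quoted in this article; the constant and function names are illustrative and do not come from Qwen's code. Sharing 8 key-value heads among 96 query heads is the layout usually called grouped-query attention.

```python
# Figures quoted in the article for Qwen3-Coder-480B-A35B-Instruct.
TOTAL_PARAMS_B = 480   # total parameters, in billions
ACTIVE_PARAMS_B = 35   # parameters active per query, in billions
TOTAL_EXPERTS = 160    # experts available in the MoE layers
ACTIVE_EXPERTS = 8     # experts active at a time
QUERY_HEADS = 96       # attention heads for queries
KV_HEADS = 8           # attention heads for key-value pairs


def architecture_ratios() -> dict:
    """Derive ratios implied by the published figures (illustrative helper)."""
    return {
        # Share of the model's parameters that is active per query.
        "active_param_fraction": ACTIVE_PARAMS_B / TOTAL_PARAMS_B,
        # Share of the experts that is active at a time.
        "active_expert_fraction": ACTIVE_EXPERTS / TOTAL_EXPERTS,
        # Number of query heads that share each key-value head.
        "query_heads_per_kv_head": QUERY_HEADS // KV_HEADS,
    }


if __name__ == "__main__":
    for name, value in architecture_ratios().items():
        print(name, value)
```

Under these numbers, roughly 7% of the parameters and 5% of the experts are active for a given query, which is why a 480-billion-parameter model can run with the per-query compute of a much smaller one.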

High performance

Qwen3-Coder has achieved leading performance among open models on several agentic evaluation suites. On SWE-bench Verified, it compares with other models as follows:

- Qwen3-Coder: 67.0% (standard), 69.6% (500-turn)

- GPT-4.1: 54.6%

- Gemini 2.5 Pro Preview: 49.0%

- Claude Sonnet 4: 70.4%

The model also scores competitively across tasks such as browser use, multi-language programming, and tool use. Visual benchmarks show progressive improvement across training iterations in categories such as code generation, SQL programming, code editing, and instruction following.

Alongside the model, Qwen has open sourced Qwen Code, a CLI tool forked from Gemini CLI. This interface supports function calling and structured prompting, making it easier to integrate Qwen3-Coder into coding workflows. Qwen Code supports Node.js environments and can be installed via npm or from source.

Qwen3-Coder also integrates with developer platforms such as:

- Claude Code

- Cline (as an OpenAI-compatible backend)

- Ollama, LM Studio, MLX-LM, llama.cpp, and KTransformers

Developers can run Qwen3-Coder locally or connect via OpenAI-compatible APIs using endpoints hosted on Alibaba Cloud.
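To make "OpenAI-compatible" concrete, here is a minimal sketch of a chat completion request built with Python's standard library only. The request shape (a POST to `/chat/completions` with a bearer token and a `messages` list) follows the OpenAI chat completions convention. The base URL, API key and model identifier are not given in this article, so they are read from environment variables whose names (`QWEN_BASE_URL`, `QWEN_API_KEY`, `QWEN_MODEL`) are assumptions made for this example; check your provider's documentation for the real values.

```python
import json
import os
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical environment variable names; nothing is sent unless all are set.
    base_url = os.environ.get("QWEN_BASE_URL")
    api_key = os.environ.get("QWEN_API_KEY")
    model = os.environ.get("QWEN_MODEL")
    if base_url and api_key and model:
        request = build_chat_request(base_url, api_key, model, "Write a function that reverses a string.")
        print(send(request))
    else:
        print("Set QWEN_BASE_URL, QWEN_API_KEY and QWEN_MODEL to send a request.")
```

Because the request follows the shared convention, the same code should work against a locally hosted server or a cloud endpoint by changing only the three settings.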

Post-training techniques: code RL and long-horizon planning

In addition to pretraining on 7.5 trillion tokens (70% code), Qwen3-Coder benefits from advanced post-training techniques:

- Code RL (reinforcement learning): High-quality learning driven by diverse, verifiable code tasks

- Long-horizon agent RL: Trains the model to plan, use tools, and adapt over multi-turn interactions

This phase simulates real-world software engineering challenges. To enable it, Qwen built a 20,000-environment system on Alibaba Cloud, providing the scale needed to evaluate and train models on complex workflows like those found in SWE-bench.

Enterprise implications: AI for engineering and DevOps workflows

For enterprises, Qwen3-Coder offers an open, highly capable alternative to closed proprietary models. With strong results in coding execution and long-context reasoning, it is especially well suited to:

- Codebase-level understanding: Ideal for AI systems that must comprehend large repositories, technical documentation, or architectural patterns

- Automated pull request (PR) workflows: Its ability to plan and adapt across turns makes it suitable for generating or reviewing pull requests

- Tool integration and orchestration: Through its native tool-calling APIs and function interface, the model can be embedded in internal tooling and CI/CD systems. This makes it especially viable for agentic workflows, that is, those in which the user sets up one or more tasks for the AI model to go off and complete autonomously, checking in only when it is finished or when questions arise.

- Data residency and cost control: As an open model, Qwen3-Coder can be deployed by enterprises on their own infrastructure, whether cloud or on-premises, avoiding vendor lock-in and managing compute usage more directly

Support for long contexts and modular deployment options across various dev environments makes Qwen3-Coder a candidate for production-grade AI pipelines at both large tech companies and smaller engineering teams.

Developer access and best practices

To use Qwen3-Coder optimally, Qwen recommends:

- Sampling settings: temperature=0.7, top_p=0.8, top_k=20, repetition_penalty=1.05

- Output length: up to 65,536 tokens

- Transformers version: 4.51.0 or later (older versions may throw errors due to qwen3_moe incompatibility)
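The recommendations above can be captured in a small configuration helper. This is a sketch rather than Qwen's own code: the function names are illustrative, and the resulting dictionary is meant to be passed as keyword arguments to a Transformers `generate` call, whose `temperature`, `top_p`, `top_k`, `repetition_penalty`, `do_sample` and `max_new_tokens` parameters match the names used here. The version check compares only the numeric major, minor and patch parts and ignores pre-release suffixes.

```python
import re

# Values taken from the recommendations listed in the article.
RECOMMENDED_SAMPLING = {
    "temperature": 0.7,
    "top_p": 0.8,
    "top_k": 20,
    "repetition_penalty": 1.05,
}
MAX_OUTPUT_TOKENS = 65_536
MIN_TRANSFORMERS = (4, 51, 0)


def parse_version(version: str) -> tuple:
    """Turn '4.51.0' or '4.52.0.dev0' into a (major, minor, patch) tuple."""
    numbers = []
    for piece in version.split(".")[:3]:
        match = re.match(r"\d+", piece)  # keep leading digits, drop suffixes like 'rc1'
        numbers.append(int(match.group()) if match else 0)
    while len(numbers) < 3:
        numbers.append(0)
    return tuple(numbers)


def supports_qwen3_moe(installed_version: str) -> bool:
    """Return True if the installed Transformers version is 4.51.0 or later."""
    return parse_version(installed_version) >= MIN_TRANSFORMERS


def generation_kwargs(max_new_tokens: int = MAX_OUTPUT_TOKENS) -> dict:
    """Combine the recommended sampling settings with an output length cap."""
    if not 0 < max_new_tokens <= MAX_OUTPUT_TOKENS:
        raise ValueError(f"max_new_tokens must be between 1 and {MAX_OUTPUT_TOKENS}")
    return {"do_sample": True, "max_new_tokens": max_new_tokens, **RECOMMENDED_SAMPLING}


if __name__ == "__main__":
    print(supports_qwen3_moe("4.50.3"))
    print(generation_kwargs(max_new_tokens=2048))
```

In practice the installed version string would come from `transformers.__version__`; it is passed in as an argument here so the sketch runs without the library installed.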

API and SDK examples are provided using OpenAI-compatible Python clients.

Developers can define custom tools and let Qwen3-Coder invoke them dynamically during conversations or code generation tasks.
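As an illustration of what defining a custom tool can look like, the sketch below uses the OpenAI-style function calling schema, which OpenAI-compatible endpoints generally accept. The `count_lines` tool, the `REGISTRY` mapping and the `run_tool_call` helper are hypothetical names invented for this example; they are not part of Qwen's SDK. The model is expected to reply with a tool call whose `arguments` field is a JSON string, which the application parses, executes, and answers with a `tool` message.

```python
import json


def count_lines(text: str) -> int:
    """Hypothetical tool: count the lines in a piece of text."""
    return len(text.splitlines())


# Tool description sent to the model alongside the chat messages.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "count_lines",
            "description": "Count the number of lines in the given text.",
            "parameters": {
                "type": "object",
                "properties": {
                    "text": {"type": "string", "description": "Text to measure."},
                },
                "required": ["text"],
            },
        },
    }
]

# Maps tool names the model may request to local Python callables.
REGISTRY = {"count_lines": count_lines}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call from a model response and build the reply message."""
    function = tool_call["function"]
    name = function["name"]
    if name not in REGISTRY:
        raise KeyError(f"Unknown tool requested: {name}")
    arguments = json.loads(function["arguments"])  # arguments arrive as a JSON string
    result = REGISTRY[name](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


if __name__ == "__main__":
    example_call = {
        "id": "call_1",
        "type": "function",
        "function": {"name": "count_lines", "arguments": json.dumps({"text": "a\nb\nc"})},
    }
    print(run_tool_call(example_call))
```

Keeping the registry separate from the schema means the model can only trigger functions the application has explicitly allowed.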

A warm early reception from AI power users

Initial responses to Qwen3-Coder-480B-A35B-Instruct have been notably positive among AI researchers, engineers and developers who have tested the model in real-world coding workflows.

In addition to Raschka's lofty praise above, Wolfram Ravenwolf, an AI engineer and evaluator at EllamindAI, shared his experience integrating the model with Claude Code on X, saying, “This is definitely the best one.”

After testing several integration proxies, Ravenwolf said he ultimately built his own using LiteLLM to ensure optimal performance, demonstrating the model's appeal to hands-on practitioners focused on toolchain customization.

Educator and AI tinkerer Kevin Nelson also weighed in on X after using the model for simulation tasks.

“Qwen 3 Coder is on another level,” he posted, noting that the model not only executed on the provided scaffolds but also embedded a message within the output of the simulation, an unexpected but welcome sign of the model's awareness of the task context.

Even Twitter co-founder and Square (now called “Block”) founder Jack Dorsey posted an X message in praise of the model, writing: “Goose + qwen3-coder = wow,” in reference to Block's open source AI agent framework Goose, which VentureBeat covered back in January 2025.

These responses suggest that Qwen3-Coder is resonating with a technically savvy user base seeking performance, adaptability, and deeper integration with existing development stacks.

Looking ahead: more sizes, more use cases

While this release focuses on the most powerful variant, Qwen3-Coder-480B-A35B-Instruct, the Qwen team indicates that additional model sizes are in development.

These will aim to offer similar capabilities at lower deployment costs, broadening access.

Future work also includes exploring self-improvement, as the team investigates whether agentic models can iteratively refine their own performance through real-world use.


2025-07-23 21:49:00