Cisco Released Cisco Time Series Model: Their First Open-Weights Foundation Model based on Decoder-only Transformer Architecture

Cisco and Splunk provided Cisco time series modela univariate time series baseline model designed for observability and security measures. It is released as an open weight checkpoint on Hugging Face under the Apache 2.0 license, and is aimed at predicting workloads without fine-tuning for a specific task. The model extends to TimesFM 2.0 with a clear multi-solution architecture that integrates coarse and fine history into a single context window.

Why observability needs a multi-solution context?

Production measures are not simple, single-scale signals. Weekly patterns, long-term growth and saturation are only visible at approximate precision. Saturation events, traffic spikes and accident dynamics are shown at 1- or 5-minute resolution. Common single-resolution time series basis models work with context windows between 512 and 4096 points, while TimesFM 2.5 spans 16,384 points. For 1-minute data, this still covers at most two weeks and often less.

This is an observability issue as data platforms often only keep historical data in aggregated form. Fine-grained specimens expire and survive for only one hour. The Cisco Time Series model is designed for this storage style. It treats the approximate date as a first-order input that improves the predictions with high accuracy. The architecture operates directly on a multi-resolution context rather than pretending that all inputs live on a single network.

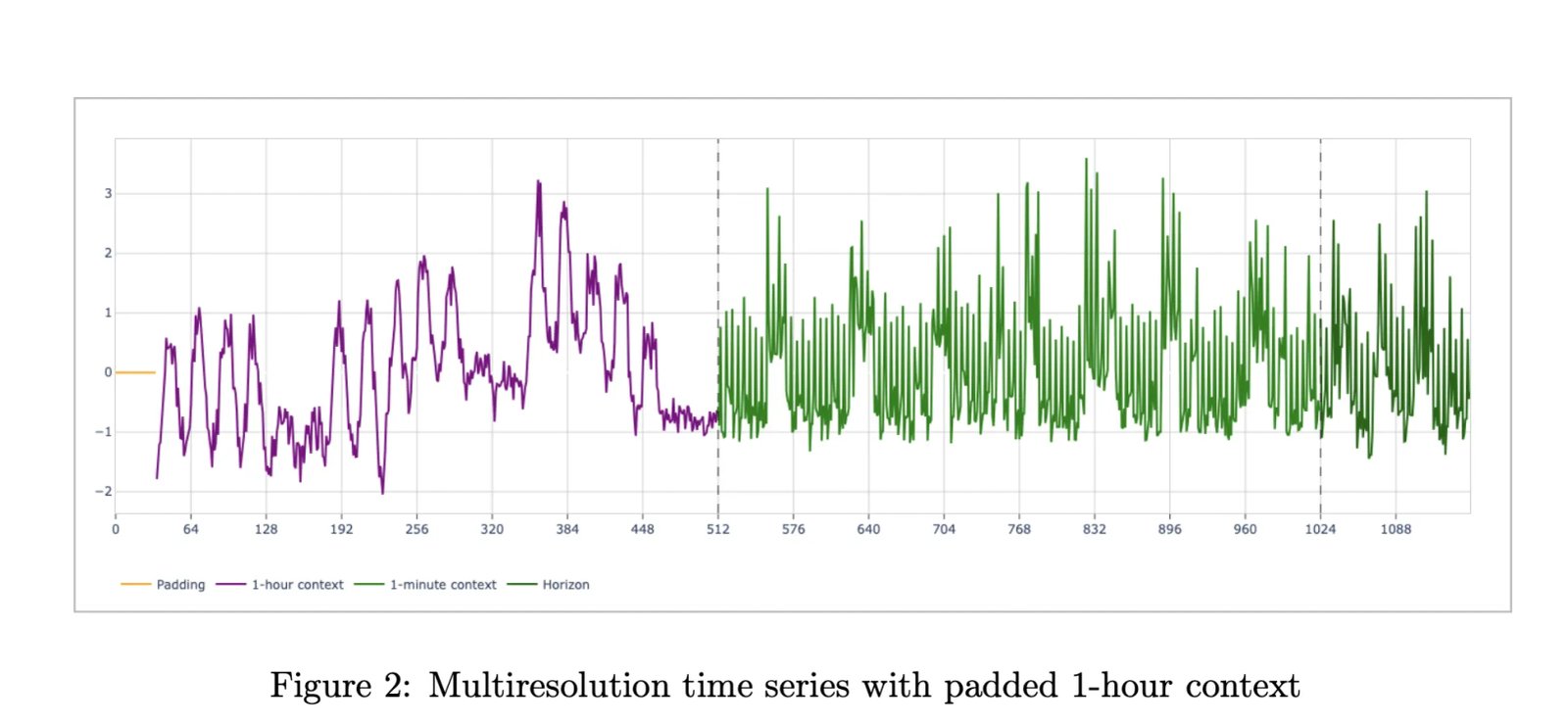

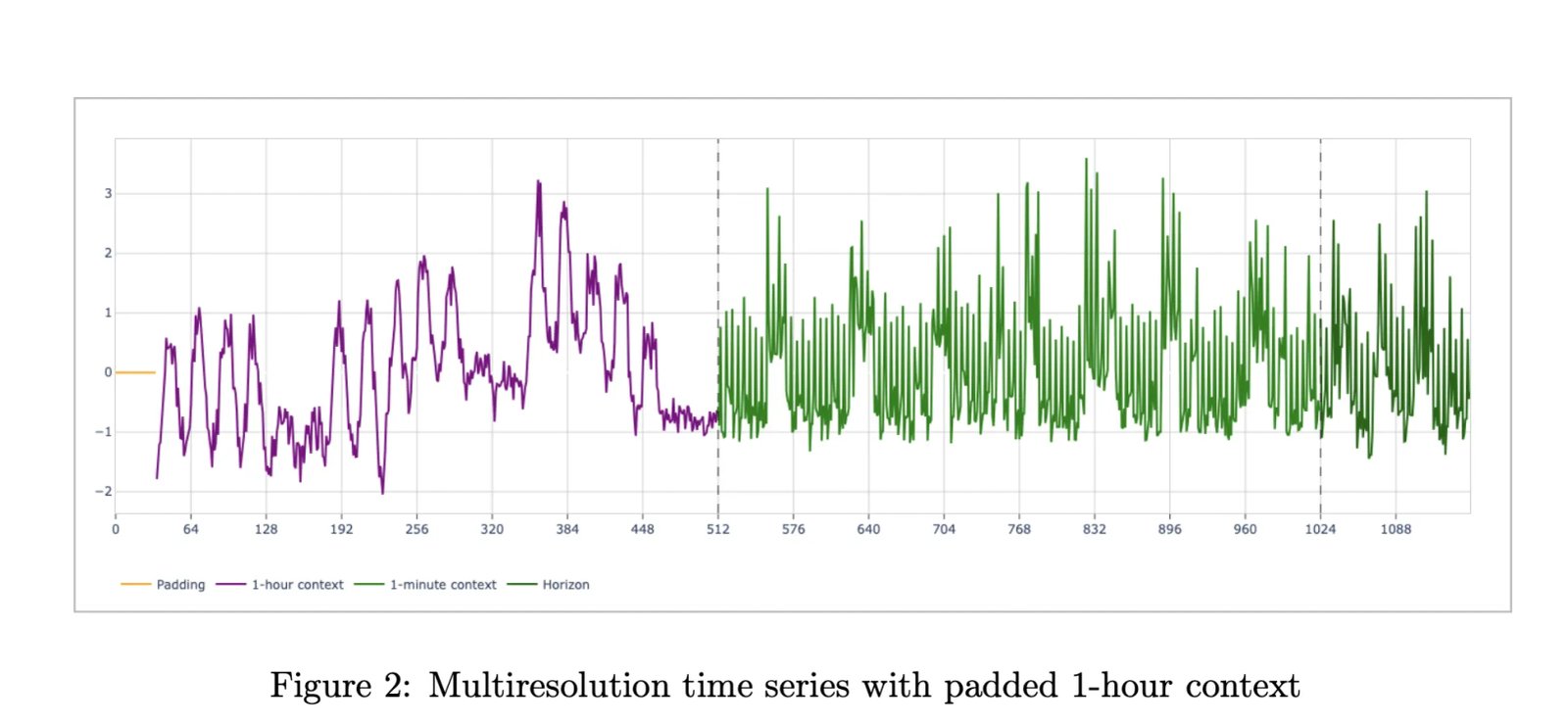

Multi-solution input and prediction target

Formally, the model consumes a pair of contexts (xCSand). The length of the coarse context (x_c) and the exact context (x_f) is 512. The spacing between (x)C) is fixed at a distance of 60 times (xand). A typical observability setting uses 512 hours of one-hour totals and 512 minutes of one-minute values. Both series end at the same expected cutoff point. The model predicts a horizon of 128 points at fine resolution, with a mean and a range of quantiles from 0.1 to 0.9.

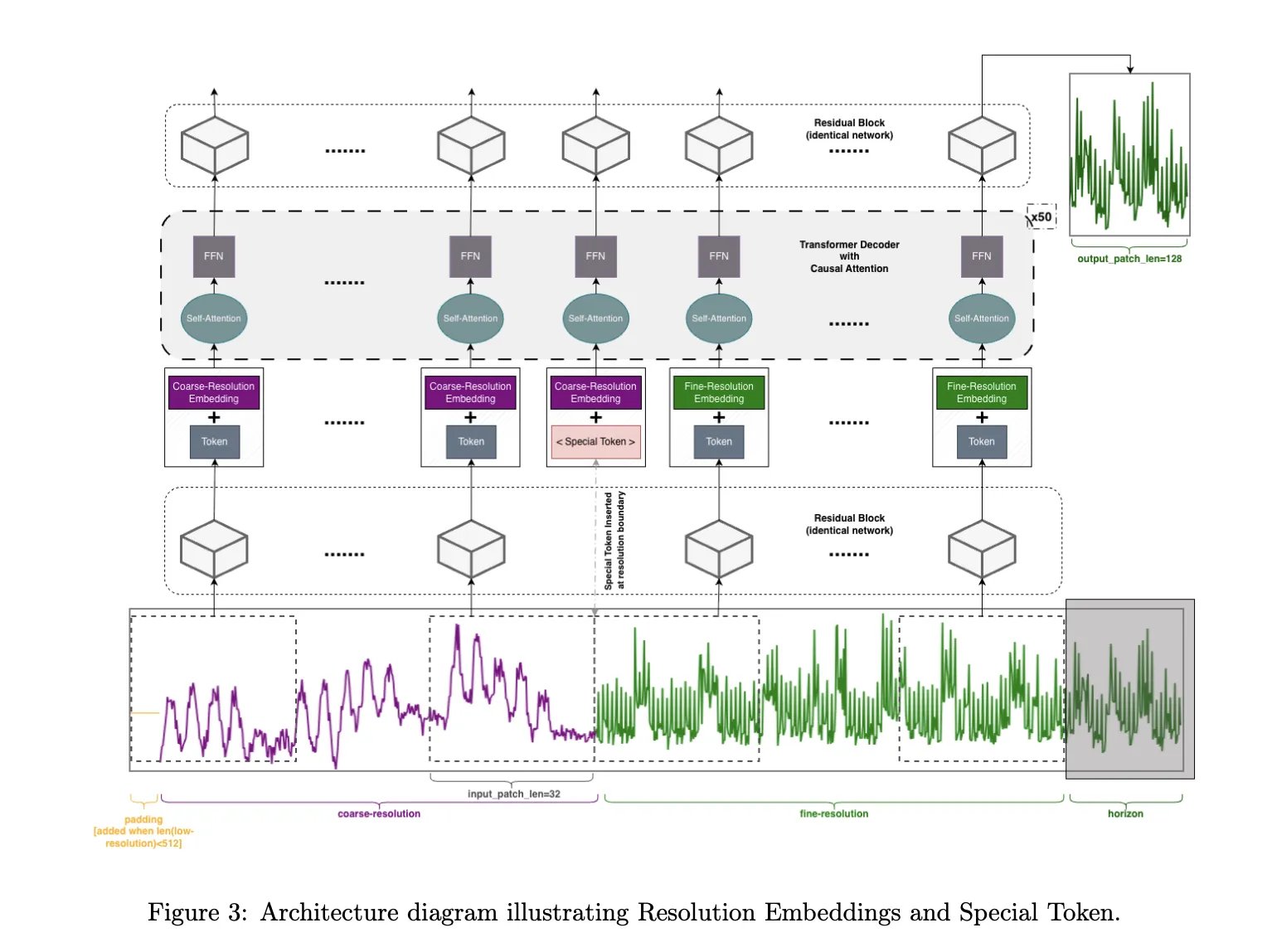

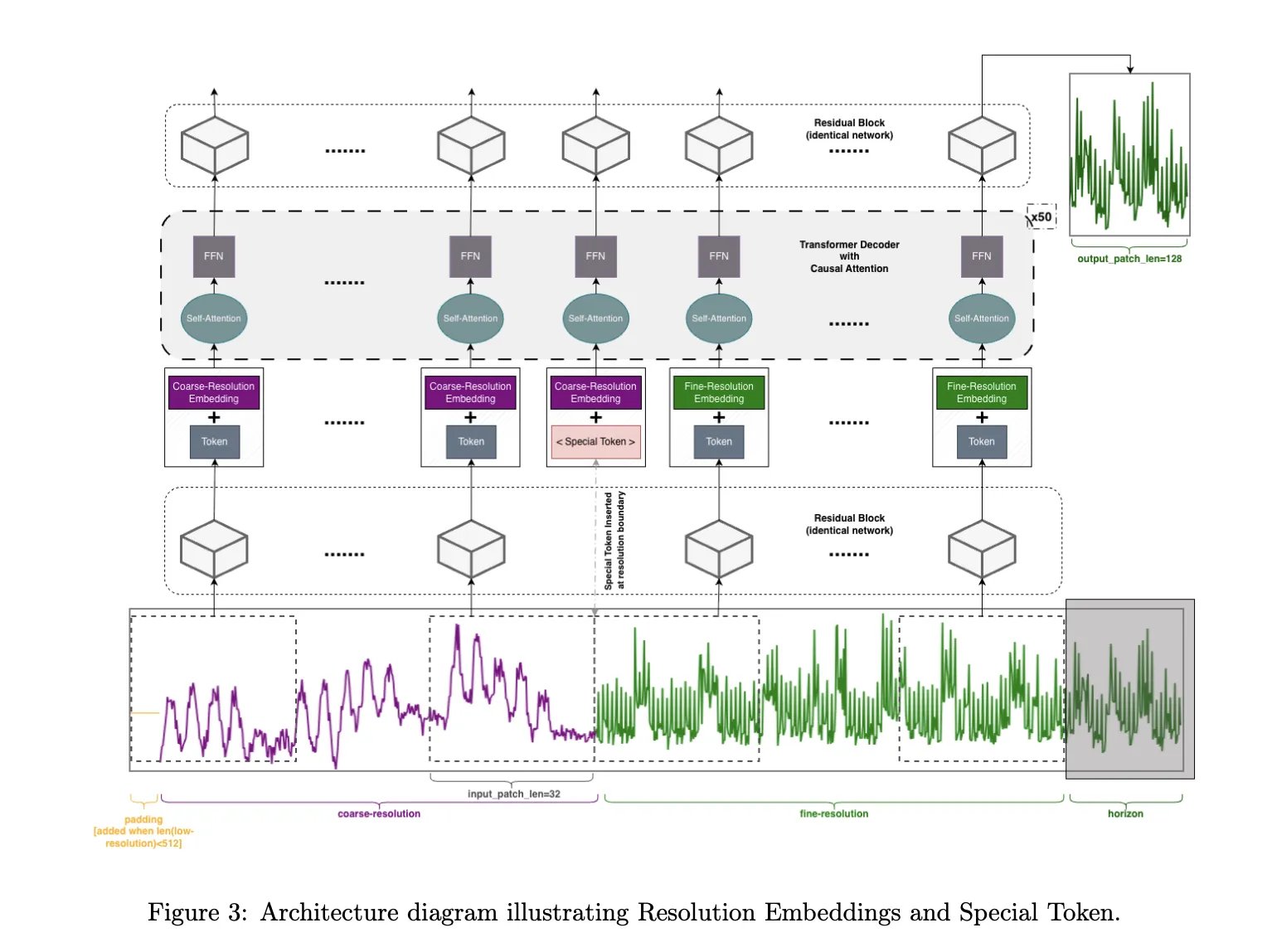

Architecture,Core TimesFM with precision embeddings

Internally, the Cisco Time Series model reuses the TimesFM patch-based decoder stack. The input is normalized, rectified into non-overlapping parts, and passed through the remaining embedding block. The core of the converter consists of only 50 decoding layers. The final remaining block assigns tokens to the horizon. The research team removes positional embeddings and instead relies on patch order, multi-precision structure, and new-resolution embedding to encode the structure.

Two additions make the multi-precision structure aware. A special token, often called ST in the report, is inserted between the coarse and fine token flows. It lives in the space of sequences and represents the boundaries between decisions. Resolution embeddings, often called RE, are added into the model space. An embedding vector is used for all coarse symbols and another for all coarse symbols. The ablation studies reported in the paper show that both components improve quality, especially in long context scenarios.

The decoding procedure is also multi-precision. The model outputs average and quantitative forecasts for the fine-resolution horizon. During long horizon decoding, the newly predicted fine points are appended to the fine context. The sums of these predictions update the approximate context. This creates an autoregressive loop in which both decisions evolve together during forecasting.

Recipe training data

The Cisco Time Series model is trained by continuous pre-training on TimesFM weights. The final model contains 500 million parameters. Training uses AdamW for biases, norms and embeddings, and Muon for hidden layers, with cosine learning rate tables. The loss combines the mean square error of the average forecast with the quantile loss on quantiles from 0.1 to 0.9. The team trains for 20 epochs and chooses the best checkpoint via validation loss.

The data set is large and skewed toward observability. The Splunk team reported about 400 million metric time series from their Splunk Observability Cloud deployments, collected at 1-minute resolution over 13 months and partially aggregated at 5-minute resolution. The research team states that the final set contains more than 300 billion unique data points, with approximately 35 percent 1-minute observability, 16.5 percent 5-minute observability, 29.5 percent GIFT Eval pre-training data, 4.5 percent Chronos datasets and 14.5 percent KernelSynth synthetic string.

Normative results on observability and gift evaluation

The research team evaluates the model on two main criteria. The first is an observability dataset derived from Splunk metrics at 1-minute and 5-minute resolution. The second is a filtered version of GIFT Eval, where datasets leaking TimesFM 2.0 training data are removed.

For 1-minute resolution observability data with 512 microsteps, the Cisco Time Series model using multi-resolution context 512 reduces the mean absolute error from 0.6265 for TimesFM 2.5 and 0.6315 for TimesFM 2.0 to 0.4788, with similar improvements in the mean absolute scaled error and ordered continuous probability score. Similar gains are shown at 5-minute resolution. Across both resolutions, the model outperforms the Chronos 2, Chronos Bolt, Toto, and AutoARIMA baselines under the benchmarks used in the paper.

In the filtered GIFT Eval benchmark, the Cisco Time Series model matches the base TimesFM 2.0 model and performs competitively with TimesFM-2.5, Chronos-2, and Toto. The main claim is not global dominance but maintaining overall prediction quality while adding a powerful advantage over long context windows and observability workloads.

Key takeaways

- The Cisco Time Series model is a basic univariate time series model that extends the backbone of the TimesFM 2.0 decoder only with a multi-solution architecture for observability and security metrics.

- The model consumes a multi-resolution context, with a coarse series and a fine series, each up to 512 steps long, where the coarse resolution is 60 times the fine resolution, and predicts 128 fine resolution steps with average and quantitative outputs.

- The Cisco Time Series Model was trained on over 300B data points, more than half of which are from observability, Splunk device data shuffling, GIFT Eval, Chronos datasets, and KernelSynth synthetic series, and contains about 0.5B of parameters.

- On observability benchmarks at 1-minute and 5-minute resolutions, the model achieves a lower error than TimesFM 2.0, Chronos, and other baselines, while maintaining competitive performance on the general-purpose GIFT Eval benchmark.

verify Paper, blog and Model card on high frequency. Feel free to check out our website GitHub page for tutorials, codes, and notebooks. Also, feel free to follow us on twitter Don’t forget to join us 100k+ mil SubReddit And subscribe to Our newsletter. I am waiting! Are you on telegram? Now you can join us on Telegram too.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of AI for social good. His most recent endeavor is the launch of the AI media platform, Marktechpost, which features in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand by a broad audience. The platform has more than 2 million views per month, which shows its popularity among the masses.

🙌 FOLLOW MARKTECHPOST: Add us as a favorite source on Google.

Don’t miss more hot News like this! Click here to discover the latest in AI news!

2025-12-07 20:39:00