Cohere Released Command A: A 111B Parameter AI Model with 256K Context Length, 23-Language Support, and 50% Cost Reduction for Enterprises

LLMs are widely used for conversational AI, content generation, and enterprise automation. However, balancing performance with computational efficiency remains a major challenge in this field. Many state-of-the-art models require extensive hardware resources, making them impractical for smaller enterprises. The demand for cost-effective AI solutions has pushed researchers to develop high-performance models with lower computational requirements.

Training and deploying AI models presents obstacles for researchers and businesses. Large-scale models require substantial computational power, making them costly to maintain. AI models must also handle multilingual tasks, ensure high instruction-following accuracy, and support enterprise applications such as data analysis, automation, and coding. Current market solutions, while effective, often demand infrastructure beyond the reach of many enterprises. The challenge lies in optimizing AI models for processing efficiency without compromising accuracy or functionality.

Several AI models currently dominate the market, including GPT-4o and DeepSeek-V3. These models excel at natural language processing and generation, but they require high-end hardware, sometimes needing up to 32 GPUs to operate effectively. While they provide advanced capabilities in text generation, multilingual support, and coding, their hardware dependencies limit accessibility. Some models also struggle with enterprise-level instruction-following accuracy and tool integration. Businesses need AI solutions that maintain competitive performance while minimizing infrastructure and deployment costs. This demand has driven efforts to optimize language models to run with minimal hardware requirements.

Researchers from Cohere introduced Command A, a high-performance AI model designed specifically for enterprises that require maximum efficiency. Unlike conventional models that require large computational resources, Command A runs on just two GPUs while maintaining competitive performance. The model has 111 billion parameters and supports a context length of 256K tokens, which makes it suitable for enterprise applications that involve processing long-form documents. Its ability to efficiently handle business-critical and multilingual tasks sets it apart from its predecessors. The model is optimized to generate high-quality text while reducing operational costs, making it a cost-effective alternative for businesses that aim to apply AI across various applications.

The underlying technology of Command A is built around an optimized transformer architecture, which includes three layers of sliding window attention, each with a window size of 4096 tokens. This mechanism enhances local context modeling, allowing the model to retain important details across extended text inputs. A fourth layer uses global attention without positional embeddings, enabling unrestricted token interactions across the entire sequence. Supervised fine-tuning and preference training further refine the model's ability to align its responses with human expectations regarding accuracy, safety, and helpfulness. Command A also supports 23 languages, which makes it one of the most versatile AI models for businesses with global operations. Its chat capabilities are preconfigured for interactive behavior, enabling smooth conversational AI applications.
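To make the difference between the two attention types concrete, here is a minimal sketch of which tokens a query position can see in each kind of layer. It is an illustration under stated assumptions, not Cohere's implementation: the function names (`layer_kind`, `can_attend`, `build_mask`) are hypothetical, causal (left-to-right) masking is assumed, the window is assumed to count the current token, and the three-local-then-one-global pattern is assumed to repeat through the stack.

```python
# Illustrative sketch only. Names, the causal-masking assumption, and the
# repeating 3:1 layer pattern are assumptions, not details confirmed by Cohere.

WINDOW_SIZE = 4096  # sliding-window size in tokens, as stated in the article


def layer_kind(layer_index: int) -> str:
    """Attention type for a layer, assuming the pattern repeats every 4 layers."""
    # Layers 0, 1, 2 use sliding-window attention; layer 3 uses global attention.
    return "global" if layer_index % 4 == 3 else "sliding_window"


def can_attend(query_pos: int, key_pos: int, kind: str,
               window: int = WINDOW_SIZE) -> bool:
    """Whether the token at query_pos may attend to the token at key_pos."""
    if key_pos > query_pos:
        return False  # causal assumption: no attention to future tokens
    if kind == "global":
        return True  # every earlier token (and the token itself) is visible
    # Sliding window: only the most recent `window` tokens, current one included.
    return query_pos - key_pos < window


def build_mask(seq_len: int, kind: str, window: int) -> list[list[int]]:
    """Dense 0/1 attention mask; rows are query positions, columns are keys."""
    return [
        [int(can_attend(q, k, kind, window)) for k in range(seq_len)]
        for q in range(seq_len)
    ]


if __name__ == "__main__":
    # A tiny window of 2 keeps the printed masks readable.
    for kind in ("sliding_window", "global"):
        print(kind)
        for row in build_mask(5, kind, window=2):
            print(" ", row)
```

In the sliding-window mask each row has at most `window` ones clustered at the diagonal, so per-token attention cost stays bounded as the input grows; the global layer is what lets information from the start of a long document reach later tokens directly.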

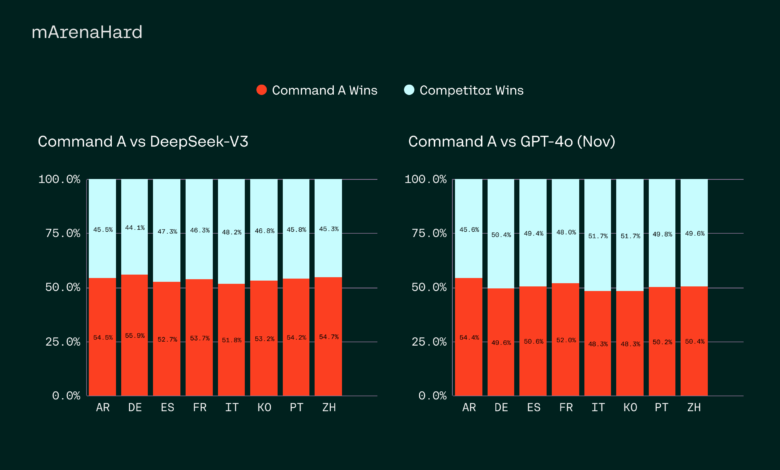

Performance evaluations indicate that Command A competes favorably with leading AI models such as GPT-4o and DeepSeek-V3 across various enterprise-focused benchmarks. The model achieves a token generation rate of 156 tokens per second, 1.75 times higher than GPT-4o and 2.4 times higher than DeepSeek-V3, making it one of the most efficient models available. In terms of cost efficiency, private deployments of Command A are up to 50% cheaper than API-based alternatives, which significantly reduces the financial burden on businesses. Command A also excels at instruction-following tasks, SQL-based queries, and retrieval-augmented generation (RAG) applications. It has shown high accuracy in real-world enterprise data evaluations and outperformed its competitors in multilingual business use cases.
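The speedup figures can be turned into rough absolute numbers with a little arithmetic. The sketch below assumes that "N times higher" means a simple ratio of generation rates; the rates it derives for GPT-4o and DeepSeek-V3 are implied by that assumption rather than reported in the article, and the 1,000-token response length is an arbitrary example.

```python
# Back-of-the-envelope arithmetic from the figures quoted in the article.
# The competitor rates are derived, not reported; treat them as estimates.

COMMAND_A_TPS = 156.0          # tokens per second, as stated
SPEEDUP_VS_GPT4O = 1.75        # Command A rate / GPT-4o rate, as stated
SPEEDUP_VS_DEEPSEEK_V3 = 2.4   # Command A rate / DeepSeek-V3 rate, as stated


def implied_rate(reference_tps: float, speedup: float) -> float:
    """Rate of the slower model, assuming speedup is a plain ratio of rates."""
    return reference_tps / speedup


def seconds_for(tokens: int, tps: float) -> float:
    """Time to generate `tokens` tokens at a steady rate of `tps`."""
    return tokens / tps


gpt4o_tps = implied_rate(COMMAND_A_TPS, SPEEDUP_VS_GPT4O)
deepseek_tps = implied_rate(COMMAND_A_TPS, SPEEDUP_VS_DEEPSEEK_V3)

if __name__ == "__main__":
    response_tokens = 1000  # hypothetical response length
    for name, tps in [
        ("Command A", COMMAND_A_TPS),
        ("GPT-4o (implied)", gpt4o_tps),
        ("DeepSeek-V3 (implied)", deepseek_tps),
    ]:
        secs = seconds_for(response_tokens, tps)
        print(f"{name}: {tps:.1f} tok/s, {secs:.1f} s per {response_tokens} tokens")
```

Under these assumptions the implied rates come out to roughly 89 tokens per second for GPT-4o and 65 for DeepSeek-V3, so a 1,000-token response would take about 6.4 seconds on Command A versus about 11.2 and 15.4 seconds respectively.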

In a direct comparison of enterprise task performance, human evaluation results show that Command A consistently outperforms its competitors in fluency, faithfulness, and response utility. The model's enterprise-ready capabilities include robust retrieval-augmented generation with verifiable citations, advanced agentic tool use, and high-level security measures to protect sensitive business data. Its multilingual capabilities go beyond simple translation, showing superior proficiency in responding accurately in region-specific dialects. For example, evaluations of Arabic dialects, including Egyptian, Saudi, Syrian, and Moroccan Arabic, revealed that Command A delivered more precise and contextually appropriate responses than leading AI models. These results underline its strong applicability in global enterprise environments, where language diversity is crucial.

Several key takeaways include:

- Command A runs on just two GPUs, which significantly reduces computational costs while maintaining high performance.

- With 111 billion parameters, the model is optimized for enterprise-scale applications that require extensive text processing.

- The model supports a 256K context length, allowing it to process longer enterprise documents more effectively than competing models.

- Command A is trained on 23 languages, ensuring high accuracy and contextual relevance for global businesses.

- It achieves 156 tokens per second, 1.75x higher than GPT-4o and 2.4x higher than DeepSeek-V3.

- The model consistently outperforms competitors in real-world enterprise evaluations, excelling in SQL, agentic, and tool-based tasks.

- Advanced RAG capabilities with verifiable citations make it well suited for enterprise information retrieval applications.

- Private deployments of Command A can be up to 50% cheaper than API-based models.

- The model includes enterprise-grade security features, ensuring safe handling of sensitive business data.

- It demonstrates high proficiency in regional dialects, making it ideal for businesses operating in linguistically diverse regions.

Check out the model on Hugging Face. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, which reflects its popularity among readers.

2025-03-16 18:05:00