Discovering novel algorithms with AlphaTensor


First extension of AlphaZero to mathematics unlocks new possibilities for research

Algorithms have helped mathematicians perform fundamental operations for thousands of years. The ancient Egyptians created an algorithm to multiply two numbers without needing a multiplication table, and the Greek mathematician Euclid described an algorithm to compute the greatest common divisor, which is still in use today.

During the Islamic Golden Age, the Persian mathematician Muhammad ibn Musa al-Khwarizmi designed new algorithms to solve linear and quadratic equations. In fact, al-Khwarizmi's name, translated into Latin as Algoritmi, led to the term algorithm. But despite how familiar algorithms are today, used throughout society from classroom algebra to cutting-edge scientific research, the process of discovering new algorithms is incredibly difficult, and an example of the remarkable reasoning abilities of the human mind.

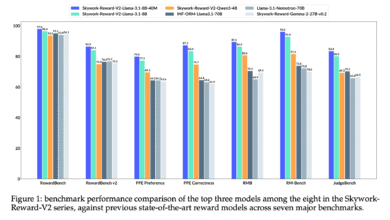

In our paper, published today in Nature, we introduce AlphaTensor, the first artificial intelligence (AI) system for discovering novel, efficient, and provably correct algorithms for fundamental tasks such as matrix multiplication. This sheds light on a 50-year-old open question in mathematics about finding the fastest way to multiply two matrices.

This paper is a stepping stone in DeepMind's mission to advance science and unlock the most fundamental problems using AI. Our system, AlphaTensor, builds on AlphaZero, an agent that has shown superhuman performance on board games such as chess, Go and shogi, and this work shows AlphaZero's journey from playing games to tackling unsolved mathematical problems for the first time.

Matrix multiplication

Matrix multiplication is one of the simplest operations in algebra, commonly taught in high school maths classes. But outside the classroom, this humble mathematical operation has enormous influence in the contemporary digital world and is ubiquitous in modern computing.

Example of the process of multiplying two 3×3 matrices.
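
To make the operation count concrete, here is a minimal Python sketch of the schoolbook algorithm that also tallies scalar multiplications. The function name `standard_matmul` is our own, chosen for illustration. For two 3×3 matrices it performs 3 × 3 × 3 = 27 multiplications, one per (row, column, inner index) triple.

```python
def standard_matmul(A, B):
    """Schoolbook product of list-of-lists matrices (hypothetical helper).

    Returns (C, mults) where mults counts the scalar multiplications used."""
    n, m = len(A), len(A[0])
    if len(B) != m:
        raise ValueError("inner dimensions must agree")
    p = len(B[0])
    C = [[0] * p for _ in range(n)]
    mults = 0
    for i in range(n):          # each row of A
        for j in range(p):      # each column of B
            for k in range(m):  # each inner index
                C[i][j] += A[i][k] * B[k][j]
                mults += 1
    return C, mults

C, count = standard_matmul([[1, 2, 3], [4, 5, 6], [7, 8, 9]],
                           [[9, 8, 7], [6, 5, 4], [3, 2, 1]])
print(count)  # 27
```

For n×n inputs the count is n³, the baseline that faster algorithms try to beat.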

This operation is used for processing images on smartphones, recognising speech commands, creating computer games, running simulations to predict the weather, compressing data and videos for sharing online, and much more. Companies around the world spend large amounts of time and money developing computing hardware to multiply matrices efficiently. So even minor improvements to the efficiency of matrix multiplication can have a widespread impact.

For centuries, mathematicians believed that the standard matrix multiplication algorithm was the best one could achieve in terms of efficiency. But in 1969, the German mathematician Volker Strassen shocked the mathematical community by showing that better algorithms exist.

The standard algorithm compared with Strassen's algorithm, which uses one fewer scalar multiplication (7 instead of 8) to multiply 2×2 matrices. Multiplications matter much more than additions for overall efficiency.

By studying very small matrices (size 2×2), he discovered an ingenious way of combining the entries of the matrices to yield a faster algorithm. Despite decades of research following Strassen's breakthrough, larger versions of this problem have remained unsolved, to the extent that it is not known how efficiently two matrices as small as 3×3 can be multiplied.
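
Strassen's 2×2 construction can be written out directly. The sketch below uses the standard textbook form of his seven products; the function and variable names are our own. Each product pairs a combination of entries of A with a combination of entries of B, in that order, so the same formulas also work when the entries are matrix blocks.

```python
def strassen_2x2(A, B):
    """Multiply 2x2 matrices with Strassen's 7 products (hypothetical helper name)."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B
    # Seven multiplications instead of eight.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    # Only additions and subtractions from here on.
    return [[m1 + m4 - m5 + m7, m3 + m5],
            [m2 + m4, m1 - m2 + m3 + m6]]

print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The price of saving one multiplication is extra additions (18 instead of 4), which is why the trick pays off mainly when entries are large blocks.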

In our paper, we explored how modern AI techniques could advance the automatic discovery of new matrix multiplication algorithms. Building on the progress of human intuition, AlphaTensor discovered algorithms that are more efficient than the state of the art for many matrix sizes. Our AI-designed algorithms outperform human-designed ones, which is a major step forward in the field of algorithmic discovery.

The process and progress of automating algorithmic discovery

First, we converted the problem of finding efficient algorithms for matrix multiplication into a single-player game. In this game, the board is a three-dimensional tensor (an array of numbers) that captures how far from correct the current algorithm is. Through a set of allowed moves, corresponding to algorithm instructions, the player attempts to modify the tensor and zero out its entries. When the player manages to do so, the result is a provably correct matrix multiplication algorithm for any pair of matrices, and its efficiency is captured by the number of steps taken to zero out the tensor.
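
A minimal NumPy sketch of this game, under conventions of our own choosing: A, B and C are flattened row-major, and the helper names `matmul_tensor`, `play_game` and `run_algorithm` are hypothetical (the paper's exact indexing may differ). The board for 2×2 matrices is a 4×4×4 tensor with a 1 wherever an entry of A times an entry of B contributes to an entry of C. Each move subtracts a rank-one tensor u ⊗ v ⊗ w, which stands for one scalar multiplication (u·vec A)(v·vec B) whose result is added into C with coefficients w. Strassen's seven factor triples, encoded below, zero out the board in seven moves.

```python
import numpy as np

# Hypothetical helpers for illustration; the flattening convention is our own.
def matmul_tensor(n):
    """Board for n x n matrix multiplication: T[a, b, c] = 1 when entry a of A
    times entry b of B contributes to entry c of C = A @ B (row-major indices)."""
    size = n * n
    T = np.zeros((size, size, size), dtype=int)
    for i in range(n):
        for j in range(n):
            for k in range(n):
                T[i * n + j, j * n + k, i * n + k] = 1
    return T

# Strassen's algorithm as 7 factor triples; vector order is [x11, x12, x21, x22].
U = np.array([[1, 0, 0, 1], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 0, 1],
              [1, 1, 0, 0], [-1, 0, 1, 0], [0, 1, 0, -1]])  # combinations of A
V = np.array([[1, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, -1], [-1, 0, 1, 0],
              [0, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]])    # combinations of B
W = np.array([[1, 0, 0, 1], [0, 0, 1, -1], [0, 1, 0, 1], [1, 0, 1, 0],
              [-1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0]])   # where each product goes in C

def play_game(board, moves):
    """Apply each move by subtracting the rank-one tensor u (x) v (x) w.
    Returns the final board and the number of moves (= scalar multiplications)."""
    state = board.copy()
    for u, v, w in moves:
        state -= np.einsum("i,j,k->ijk", u, v, w)
    return state, len(moves)

def run_algorithm(U, V, W, A, B):
    """Execute the algorithm encoded by the factors: one multiplication per row."""
    n = A.shape[0]
    m = (U @ A.reshape(-1)) * (V @ B.reshape(-1))  # the R scalar products
    return (W.T @ m).reshape(n, n)                 # linear combinations give C

final_state, num_moves = play_game(matmul_tensor(2), list(zip(U, V, W)))
print(num_moves, final_state.any())  # 7 False
```

Because the residual is exactly zero, the factors define an algorithm that is correct for every pair of inputs, not only for ones we happen to test. The schoolbook algorithm corresponds to eight moves, one unit tensor per product a_ij·b_jk.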

This game is incredibly challenging: the number of possible algorithms to consider is much greater than the number of atoms in the universe, even for small cases of matrix multiplication. Compared with the game of Go, which remained a challenge for AI for decades, the number of possible moves at each step of our game is 30 orders of magnitude larger (above 10^33 for one of the settings we consider).
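
A back-of-the-envelope sketch of where numbers of this size can come from. We assume, purely for illustration, that a move chooses three factor vectors of length n² whose entries each come from a five-value coefficient set such as {-2, -1, 0, 1, 2}; we do not claim this is the exact setting behind the quoted figure. For 4×4 matrices that gives 5^48, roughly 10^33.6 moves per step, about 31 orders of magnitude more than the at most 361 placements on an empty Go board, consistent with the figures above.

```python
import math

def log10_num_moves(n, num_coefficients):
    """log10 of the number of distinct moves u (x) v (x) w for n x n matrices,
    ASSUMING each of the 3 * n * n factor entries takes num_coefficients values."""
    return 3 * n * n * math.log10(num_coefficients)

GO_MAX_MOVES = 19 * 19  # at most 361 placements on an empty Go board

ours = log10_num_moves(4, 5)  # assumed: 4x4 matrices, entries in {-2, -1, 0, 1, 2}
gap = ours - math.log10(GO_MAX_MOVES)
print(f"about 10^{ours:.1f} moves per step, {gap:.1f} orders of magnitude more than Go")
```

Working in log10 keeps the arithmetic in ordinary floats; Python integers could hold 5**48 exactly, but the exponent is what matters for the comparison.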

Essentially, to play this game well, one needs to identify the tiniest of needles in a gigantic haystack of possibilities. To tackle the challenges of this domain, which departs significantly from traditional games, we developed multiple crucial components, including a novel neural network architecture that incorporates problem-specific inductive biases, a procedure to generate useful synthetic data, and a recipe to leverage symmetries of the problem.
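
One simple way to produce synthetic data for a game like this, sketched under our own assumptions (the coefficient set, the function name `synthetic_example` and the shapes are illustrative, not the paper's settings): sample random factor triples and sum them. The resulting tensor comes with a known solution, so the pair (tensor, factors) can serve as a demonstration of how to zero out that board. The sampled decomposition is only an upper bound on the number of moves needed; a shorter one may exist.

```python
import numpy as np

def synthetic_example(n, rank, coefficients=(-2, -1, 0, 1, 2), rng=None):
    """Hypothetical helper: sample `rank` random factor triples (u_r, v_r, w_r)
    and build the tensor they sum to, so the factors are a known solution."""
    rng = np.random.default_rng() if rng is None else rng
    size = n * n
    U, V, W = (rng.choice(coefficients, size=(rank, size)) for _ in range(3))
    board = np.einsum("ri,rj,rk->ijk", U, V, W)  # sum of rank-one tensors
    return board, (U, V, W)

board, (U, V, W) = synthetic_example(n=2, rank=5, rng=np.random.default_rng(0))
# Replaying the sampled factors as moves clears the board in `rank` steps.
residual = board - np.einsum("ri,rj,rk->ijk", U, V, W)
```

Generating examples backwards like this is cheap, which is what makes it attractive as a supplement to data the agent collects by playing.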

We then trained an AlphaTensor agent using reinforcement learning to play the game, starting without any knowledge of existing matrix multiplication algorithms. Through learning, AlphaTensor gradually improves over time, rediscovering historical fast matrix multiplication algorithms such as Strassen's, eventually surpassing the realm of human intuition and discovering algorithms faster than previously known.

Single-player game played by AlphaTensor, where the goal is to find a correct matrix multiplication algorithm. The state of the game is a cubic array of numbers (shown as grey for 0, blue for 1, and green for -1), representing the remaining work to be done.

For example, where the traditional algorithm taught in school multiplies a 4×5 by a 5×5 matrix using 100 multiplications, and human ingenuity had reduced this number to 80, AlphaTensor found algorithms that perform the same operation using just 76 multiplications.
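
The arithmetic behind these numbers, as a small sketch (the function name is ours): the schoolbook method uses one multiplication per (row, column, inner index) triple, so 4 × 5 × 5 = 100. The 80 and 76 figures are taken from the text, not computed here.

```python
def schoolbook_multiplications(n, m, p):
    """Scalar multiplications used by the schoolbook algorithm for an
    (n x m) @ (m x p) product (hypothetical helper name)."""
    return n * m * p

baseline = schoolbook_multiplications(4, 5, 5)  # 100
for label, count in [("best human-designed", 80), ("AlphaTensor", 76)]:
    print(f"{label}: {count} multiplications, "
          f"{1 - count / baseline:.0%} fewer than schoolbook")
```

A saving of a few multiplications looks small here, but it compounds when the small algorithm is applied recursively to blocks of a much larger matrix.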

Algorithm discovered by AlphaTensor using 76 multiplications, an improvement over state-of-the-art algorithms.

Beyond this example, AlphaTensor's algorithm improves on Strassen's two-level algorithm in a finite field for the first time since its discovery 50 years ago. These algorithms for multiplying small matrices can be used as primitives to multiply much larger matrices of arbitrary size.
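
To see how a small-case algorithm becomes a primitive for larger matrices, the sketch below applies Strassen's classic 2×2 formulas recursively to blocks. This is our own illustration, not an AlphaTensor-discovered algorithm, and it assumes square inputs whose size is a power of two. Two levels of recursion handle a 4×4 product with 7 × 7 = 49 multiplications instead of the schoolbook 64; the finite-field improvement mentioned above is not reproduced here.

```python
import numpy as np

def strassen(A, B):
    """Recursive Strassen for 2^k x 2^k matrices (illustrative sketch).
    Returns (A @ B, number of scalar multiplications performed)."""
    n = A.shape[0]
    if n == 1:
        return A * B, 1  # a single scalar multiplication
    h = n // 2
    a11, a12, a21, a22 = A[:h, :h], A[:h, h:], A[h:, :h], A[h:, h:]
    b11, b12, b21, b22 = B[:h, :h], B[:h, h:], B[h:, :h], B[h:, h:]
    pairs = [(a11 + a22, b11 + b22), (a21 + a22, b11), (a11, b12 - b22),
             (a22, b21 - b11), (a11 + a12, b22), (a21 - a11, b11 + b12),
             (a12 - a22, b21 + b22)]
    results = [strassen(x, y) for x, y in pairs]  # 7 recursive block products
    m1, m2, m3, m4, m5, m6, m7 = (r for r, _ in results)
    count = sum(c for _, c in results)
    C = np.block([[m1 + m4 - m5 + m7, m3 + m5],
                  [m2 + m4, m1 - m2 + m3 + m6]])
    return C, count

rng = np.random.default_rng(0)
A = rng.integers(-5, 5, size=(4, 4))
B = rng.integers(-5, 5, size=(4, 4))
C, count = strassen(A, B)  # count == 49 for 4x4
```

Recursing all the way to 1×1 is done here only to make the count exact; practical implementations stop at a cutoff size and switch to an ordinary multiply.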

Moreover, AlphaTensor also discovers a diverse set of algorithms with state-of-the-art complexity (up to thousands of matrix multiplication algorithms for each size), showing that the space of matrix multiplication algorithms is richer than previously thought.

Algorithms in this rich space have different mathematical and practical properties. Leveraging this diversity, we adapted AlphaTensor to find algorithms that are fast on specific hardware, such as the NVIDIA V100 GPU and Google TPU v2. These algorithms multiply large matrices 10-20% faster than the commonly used algorithms on the same hardware, which showcases AlphaTensor's flexibility in optimising arbitrary objectives.

AlphaTensor with an objective corresponding to the runtime of the algorithm. When a correct matrix multiplication algorithm is discovered, it is benchmarked on the target hardware, and the result is fed back to AlphaTensor so that it learns more efficient algorithms for that hardware.
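
The benchmark-and-feedback step can be illustrated with a toy sketch. It is not AlphaTensor's pipeline: there is no learning here, and the candidate set, the function names (`benchmark`, `one_level_strassen`, `pick_fastest`) and the timing method are our own assumptions. It checks each candidate for correctness, times it on the current machine, and returns the timings that a learning agent could use as its objective.

```python
import time
import numpy as np

# Hypothetical helpers for illustration only.
def benchmark(fn, A, B, repeats=5):
    """Best wall-clock time of fn(A, B) over several runs, in seconds."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(A, B)
        best = min(best, time.perf_counter() - start)
    return best

def one_level_strassen(A, B):
    """One level of Strassen's scheme on 2x2 blocks (even sizes only);
    the seven block products are delegated to NumPy's matmul."""
    h = A.shape[0] // 2
    a11, a12, a21, a22 = A[:h, :h], A[:h, h:], A[h:, :h], A[h:, h:]
    b11, b12, b21, b22 = B[:h, :h], B[:h, h:], B[h:, :h], B[h:, h:]
    m1 = (a11 + a22) @ (b11 + b22)
    m2 = (a21 + a22) @ b11
    m3 = a11 @ (b12 - b22)
    m4 = a22 @ (b21 - b11)
    m5 = (a11 + a12) @ b22
    m6 = (a21 - a11) @ (b11 + b12)
    m7 = (a12 - a22) @ (b21 + b22)
    return np.block([[m1 + m4 - m5 + m7, m3 + m5],
                     [m2 + m4, m1 - m2 + m3 + m6]])

def pick_fastest(candidates, A, B):
    """Time every candidate that reproduces A @ B; return the fastest name and all timings."""
    reference = A @ B
    timings = {name: benchmark(fn, A, B)
               for name, fn in candidates.items()
               if np.allclose(fn(A, B), reference)}  # incorrect candidates are skipped
    return min(timings, key=timings.get), timings

rng = np.random.default_rng(0)
A, B = rng.standard_normal((512, 512)), rng.standard_normal((512, 512))
best, timings = pick_fastest({"numpy": np.matmul, "strassen_1_level": one_level_strassen}, A, B)
```

Which candidate wins depends on the machine, the library build and the matrix size, which is why this kind of objective is measured on the target hardware rather than predicted from multiplication counts alone.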

Exploring the impact on future research and applications

From a mathematical standpoint, our results can guide further research in complexity theory, which aims to determine the fastest algorithms for solving computational problems. By exploring the space of possible algorithms more effectively than previous approaches, AlphaTensor helps advance our understanding of the richness of matrix multiplication algorithms. Understanding this space may unlock new results that help determine the asymptotic complexity of matrix multiplication, one of the most fundamental open problems in computer science.
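
The link between small algorithms and asymptotic complexity is a standard recursion argument, stated here as a worked derivation rather than anything specific to AlphaTensor. If an algorithm multiplies n × n matrices using R scalar multiplications without assuming that entries commute (so it can be applied to blocks), then recursing on N × N matrices gives the cost recurrence below, and hence an upper bound on the matrix multiplication exponent ω:

```latex
T(N) = R\,T\!\left(\frac{N}{n}\right) + O(N^2)
\quad\Longrightarrow\quad
T(N) = O\!\left(N^{\log_n R}\right) \text{ when } R > n^2,
\qquad\text{so}\qquad \omega \le \log_n R .
```

For Strassen's algorithm (n = 2, R = 7) this gives ω ≤ log₂ 7 ≈ 2.807, compared with log₂ 8 = 3 for the schoolbook method. Any algorithm with fewer multiplications for some fixed size tightens this kind of bound.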

Because matrix multiplication is a core component of many computational tasks, spanning computer graphics, digital communications, neural network training and scientific computing, AlphaTensor-discovered algorithms could make computations in these fields more efficient. AlphaTensor's flexibility to consider any kind of objective could also spur new applications for designing algorithms that optimise metrics such as energy usage and numerical stability, helping prevent small rounding errors from snowballing as an algorithm runs.

While we focused here on the particular problem of matrix multiplication, we hope that our paper will inspire others to use AI to guide algorithmic discovery for other fundamental computational tasks. Our research also shows that AlphaZero is a powerful algorithm that can be extended well beyond the domain of traditional games to help solve open problems in mathematics. Building on our research, we hope to spur a greater body of work, applying AI to help society solve some of the most important challenges in mathematics and across the sciences.

You can find more information in AlphaTensor's GitHub repository.

Acknowledgements

Francisco R. Ruiz, Thomas Hubert, Alexander Novikov, Alex Gaunt for feedback on the blog post. Sean Carlson, Arielle Bier, Gabriella Pearl, Katie McAtackney, Max Barnett for help with text and figures. This work was done by a team with contributions from Alhussein Fawzi, Matej Balog, Aja Huang, Thomas Hubert, Bernardino Romera-Paredes, Mohammadamin Barekatain, Francisco Ruiz, Alexander Novikov, Julian Schrittwieser, Grzegorz Swirszcz and David KoH.


2022-10-05 00:00:00