Edge AI: Navigating Hardware Constraints

As you prepare for an evening of relaxation at home, you might ask your smartphone to play your favorite song or tell your home assistant to dim the lights. These tasks feel simple because they are powered by artificial intelligence (AI) that is now integrated into our daily routines. At the heart of these smooth interactions is edge AI: AI that runs directly on devices such as smartphones, wearables, and IoT gadgets, providing immediate and intuitive responses.

Edge AI refers to deploying AI algorithms directly on devices at the “edge” of the network, rather than relying on centralized cloud data centers. This approach uses the processing capabilities of edge devices (such as laptops, smartphones, smartwatches, and home appliances) to make decisions locally.

Edge AI offers critical privacy and security advantages: by minimizing the need to transmit sensitive data over the internet, it reduces the risk of data breaches. It also speeds up data processing and decision-making, which is crucial for real-time applications such as healthcare devices, industrial automation, augmented reality, and gaming. Edge AI can even work in environments with intermittent connectivity, supporting autonomous operation with limited maintenance and reducing data transmission costs.

AI is now integrated into many devices, but bringing powerful AI capabilities to everyday devices is technically challenging. Edge devices operate under strict limits on processing power, memory, and battery life. They must still carry out complex tasks within modest hardware specifications.

For example, for a smartphone to perform sophisticated facial recognition, it must use advanced optimization algorithms to analyze images and match facial features in milliseconds. Real-time translation on earbuds requires keeping energy use low to ensure long battery life. Cloud-based AI models can rely on external servers with extensive computational power, but edge devices must make do with what is on hand. This shift to edge processing fundamentally changes how AI models are developed, optimized, and deployed.

Behind the scenes: Optimizing AI for the edge

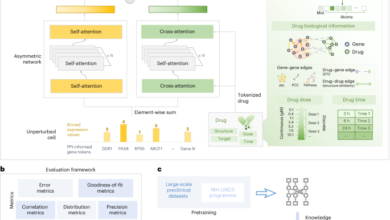

AI models that run efficiently on edge devices need their size and compute requirements reduced considerably, while still delivering similarly reliable results. This process, often called model compression, involves advanced algorithms such as neural architecture search (NAS), transfer learning, pruning, and quantization.
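To make “reduced in size” concrete, the rough sketch below estimates weight storage before and after pruning and quantization. It assumes that weights dominate the model's footprint and ignores the extra index storage a sparse (pruned) model usually needs, so the pruned figure is a lower bound. The function name and the 10-million-parameter model are hypothetical.

```python
def model_size_mb(num_params: int, bits_per_weight: int, sparsity: float = 0.0) -> float:
    """Approximate weight storage in megabytes (1 MB = 1e6 bytes).

    num_params      -- parameter count of the dense model
    bits_per_weight -- 32 for float32, 8 for int8, and so on
    sparsity        -- fraction of weights removed by pruning, in [0, 1)
    Assumption: sparse-index and metadata overhead is ignored.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    kept = num_params * (1.0 - sparsity)
    return kept * bits_per_weight / 8 / 1e6


# Hypothetical 10-million-parameter model.
dense_fp32 = model_size_mb(10_000_000, 32)                # 40.0 MB
dense_int8 = model_size_mb(10_000_000, 8)                 # 10.0 MB
pruned_int8 = model_size_mb(10_000_000, 8, sparsity=0.5)  # 5.0 MB
print(dense_fp32, dense_int8, pruned_int8)
```

Switching from 32-bit floats to 8-bit integers alone cuts storage by 4x. Halving the number of weights roughly halves it again.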

Model optimization should begin by choosing or designing a model architecture suited specifically to the device's hardware capabilities. The model is then refined to run efficiently on specific edge devices. NAS techniques use search algorithms to explore many possible AI models and find the one best suited to a particular task on the edge device. Transfer learning, in the form known as knowledge distillation, trains a much smaller model (the student) using a larger model (the teacher) that has already been trained. Pruning eliminates redundant parameters that do not significantly affect accuracy. Quantization converts models to lower-precision arithmetic to save on computation and memory.
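Three of these techniques can be sketched in a few lines of plain Python. The sketch works on flat lists of numbers rather than real tensors, and all function names are hypothetical. It uses unstructured magnitude pruning, symmetric per-tensor int8 quantization, and the common temperature-softened KL-divergence loss for student/teacher distillation. Each is one option among many. Real frameworks operate on tensors and add calibration and fine-tuning steps that are omitted here. NAS is also left out, because it needs a full training and evaluation loop.

```python
import math


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Unstructured magnitude pruning: zero out the smallest-|w| fraction of weights."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T**2
    (a common knowledge-distillation formulation)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.9, -0.05, 0.4, -0.7, 0.02, 0.3]
print(magnitude_prune(weights, sparsity=0.5))  # [0.9, 0.0, 0.4, -0.7, 0.0, 0.0]
codes, scale = quantize_int8(weights)
print(codes, dequantize(codes, scale))
print(distillation_loss([1.0, 2.0, 0.5], [1.2, 2.5, 0.1]))
```

In training, the distillation loss is typically combined with the usual loss on true labels. The student is then updated to minimize both.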

When bringing the latest AI models to devices, it is tempting to focus solely on how efficiently they perform basic calculations, specifically “multiply-accumulate” operations, or MACs. In simple terms, MAC efficiency measures how quickly a chip can do the math at the heart of AI: multiplying numbers and adding them up. Model developers can get “MAC tunnel vision,” focusing on this metric and ignoring other important factors.
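Counting MACs for a convolution layer is simple arithmetic. Each output value is a dot product over the input channels and kernel window. The sketch below compares a standard 3x3 convolution with the depthwise separable replacement used in MobileNet-style models. The layer dimensions are hypothetical, and bias terms are ignored.

```python
def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int,
                groups: int = 1) -> int:
    """Multiply-accumulate count for a 2-D convolution (bias ignored).

    Each of the h_out * w_out * c_out output values is a dot product over
    (c_in / groups) * k * k inputs, i.e. that many MACs.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return h_out * w_out * c_out * (c_in // groups) * k * k


# Hypothetical layer: 56x56 output, 64 -> 64 channels, 3x3 kernel.
standard = conv2d_macs(56, 56, 64, 64, 3)
# Depthwise separable replacement: a 3x3 depthwise conv (groups = channels)
# followed by a 1x1 pointwise conv that mixes channels.
separable = (conv2d_macs(56, 56, 64, 64, 3, groups=64)
             + conv2d_macs(56, 56, 64, 64, 1))
print(standard, separable, round(standard / separable, 1))  # 115605504 14651392 7.9
```

By this metric alone, the separable version looks almost 8x cheaper. That is exactly the kind of number that invites MAC tunnel vision.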

Some of the most popular AI models, such as MobileNet, EfficientNet, and transformers for vision applications, are designed to be extremely efficient at these calculations. In practice, though, these models do not always run well on the AI chips inside our phones or smartwatches. Real-world performance depends on more than math speed. It also depends on how quickly data can move around inside the device. If a model constantly needs to fetch data from memory, it can slow everything down, no matter how fast the calculations are.
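A common way to reason about this is the roofline model. A layer's achievable throughput is capped by either the chip's peak compute rate or its arithmetic intensity (MACs per byte moved) multiplied by memory bandwidth, whichever is lower. The sketch below applies this model to a standard convolution and a depthwise separable one. The accelerator figures (4 TMAC/s, 30 GB/s) are hypothetical, and the byte counts assume int8 tensors that each cross the memory bus exactly once, with no layer fusion.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    macs: int
    bytes_moved: int  # input activations + weights + output activations


def conv_layer(name: str, h: int, w: int, c_in: int, c_out: int, k: int,
               groups: int = 1, bytes_per_elem: int = 1) -> Layer:
    """Stride-1, 'same'-padded convolution producing an h x w x c_out output.

    Assumes each tensor crosses the memory bus exactly once (no fusion)."""
    per_output = (c_in // groups) * k * k  # MACs per output value
    macs = h * w * c_out * per_output
    weights = c_out * per_output
    activations = h * w * c_in + h * w * c_out
    return Layer(name, macs, (weights + activations) * bytes_per_elem)


def roofline_time(layer: Layer, peak_macs_per_s: float,
                  bandwidth_bytes_per_s: float) -> tuple[float, str]:
    """Estimate run time as MACs divided by the lower of the compute roof
    and the memory roof (arithmetic intensity x bandwidth)."""
    intensity = layer.macs / layer.bytes_moved  # MACs per byte
    memory_roof = intensity * bandwidth_bytes_per_s
    if memory_roof >= peak_macs_per_s:
        return layer.macs / peak_macs_per_s, "compute"
    return layer.macs / memory_roof, "memory"


PEAK_MACS = 4e12  # hypothetical accelerator: 4 TMAC/s at int8
BANDWIDTH = 30e9  # hypothetical memory bandwidth: 30 GB/s

candidates = {
    "standard 3x3": [conv_layer("conv3x3", 56, 56, 64, 64, 3)],
    "depthwise separable": [
        conv_layer("dw3x3", 56, 56, 64, 64, 3, groups=64),
        conv_layer("pw1x1", 56, 56, 64, 64, 1),
    ],
}

for label, layers in candidates.items():
    total_macs, total_s = 0, 0.0
    for layer in layers:
        seconds, bound = roofline_time(layer, PEAK_MACS, BANDWIDTH)
        total_macs += layer.macs
        total_s += seconds
        print(f"  {layer.name}: {bound}-bound, ~{seconds * 1e6:.1f} us")
    print(f"{label}: {total_macs:,} MACs, ~{total_s * 1e6:.1f} us")
```

Under these assumed figures, the separable block needs about 7.9x fewer MACs. Its estimated time is nevertheless only slightly lower (about 26.9 µs versus 28.9 µs), because both of its layers are memory-bound. Real accelerators cache data on-chip and fuse layers, so treat this as an illustration of the trade-off rather than a prediction.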

Surprisingly, older, bulkier models such as ResNet sometimes work better on today's devices. They may not be the newest or most streamlined, but the way they move data between memory and processing is much better suited to the specifications of AI processors. In real tests, these classic models have delivered better speed and accuracy on edge devices, even after being trimmed down to fit.

The lesson? The “best” AI model is not always the one with the newest design or the highest theoretical efficiency. For edge devices, what matters most is how well a model fits the hardware it actually runs on.

That hardware is also evolving rapidly. To keep pace with the demands of modern AI, device makers have begun including dedicated chips called AI accelerators in smartphones, smartwatches, wearables, and more. These accelerators are built specifically to handle the kinds of calculations and data movement that AI models require. Each year brings advances in architecture, manufacturing, and integration, helping hardware keep pace with AI trends.

The road ahead for edge AI

Deploying AI models on edge devices is further complicated by the fragmented nature of the ecosystem. Because many applications require custom models and specific hardware, there is a lack of standardization. What is needed are efficient development tools that streamline the machine learning lifecycle for edge applications. Such tools should make it easy for developers to optimize for real-world performance, power consumption, and latency.
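Measured latency on the target device is the number that ultimately matters. A minimal timing harness is therefore a natural building block for such tooling. The sketch below uses only the Python standard library. It times any zero-argument callable and reports the median, an approximate 90th percentile, and the worst-case latency. The function name and the stand-in workload are hypothetical, and power consumption is not covered, because measuring it requires platform-specific instrumentation.

```python
import statistics
import time
from typing import Callable


def benchmark(fn: Callable[[], object], warmup: int = 5,
              runs: int = 50) -> dict[str, float]:
    """Measure wall-clock latency of fn() in milliseconds.

    Warm-up calls are discarded so one-time costs (lazy initialization,
    cold caches) do not skew the results."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1e3)
    samples.sort()
    return {
        "median_ms": statistics.median(samples),
        # Simple index-based percentile; adequate for a rough report.
        "p90_ms": samples[int(0.9 * (len(samples) - 1))],
        "max_ms": samples[-1],
    }


# Hypothetical stand-in for a model's inference call.
def fake_inference() -> int:
    return sum(i * i for i in range(10_000))


print(benchmark(fake_inference))
```

Reporting tail latency alongside the median matters on edge devices. Occasional slow runs caused by thermal throttling or background work are what users actually notice.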

Collaboration between device manufacturers and AI developers is narrowing the gap between engineering and user interaction. Emerging trends focus on context awareness and adaptive learning, allowing devices to anticipate users' needs and respond to them naturally. By drawing on environmental cues and observing user habits, edge AI can deliver experiences that feel intuitive and personal. Local, personalized intelligence is set to transform our experience of technology and of the world.

2025-07-20 13:00:00