Efficient Long Sequence Decoding on Dataflow Accelerators

SnapStream: Efficient Long Sequence Decoding on Dataflow Accelerators, by Jonathan Lee and 20 other authors

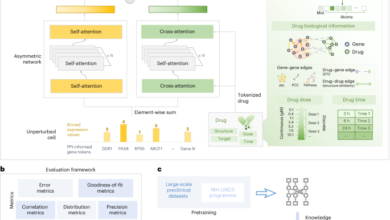

Abstract: The proliferation of 100B+ parameter large language models (LLMs) with support for context lengths of 100k+ tokens has increased demand for on-chip memory to hold large KV caches. Techniques such as StreamingLLM and SnapKV demonstrate how to control the size of the KV cache while maintaining model accuracy. However, these techniques are not commonly used in industrial deployments built on frameworks such as vLLM or SGLang. The reason is twofold: on the one hand, the static graphs and continuous batching methodology used by these frameworks make it difficult to admit modifications to the standard multi-head attention algorithm; on the other hand, the accuracy implications of these techniques for modern instruction-following and reasoning models are not well understood, obscuring the need to implement them. In this paper, we explore these accuracy implications on Llama-3.1-8B-Instruct and DeepSeek-R1, and develop SnapStream, a KV cache compression method that can be deployed at scale. We demonstrate the efficacy of SnapStream in a 16-way tensor-parallel deployment of DeepSeek-671B on SambaNova SN40L accelerators running at a context length of 128K and up to 1832 tokens per second in a real production setting. SnapStream enables $4\times$ improved on-chip memory usage and introduces minimal accuracy degradation on LongBench-v2, AIME24, and LiveCodeBench. To the best of our knowledge, this is the first implementation of sparse KV attention techniques deployed in a production inference system with static graphs and continuous batching.
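To make the memory pressure described in the abstract concrete, the following is a minimal sketch and not the SnapStream implementation. It estimates KV cache size for a standard attention layout and shows which token positions a sink-plus-recent-window policy (the general idea behind StreamingLLM) would keep. The function names, the sink and window sizes, and the 32,768-token budget are illustrative assumptions; the layer, head, and dimension values are the published Llama-3.1-8B configuration with 16-bit cache entries.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for `tokens` positions.

    The leading factor of 2 accounts for storing one key vector and one
    value vector per token, per layer, per KV head.
    """
    return 2 * tokens * layers * kv_heads * head_dim * bytes_per_elem


def sink_window_keep_indices(seq_len: int, num_sink: int, window: int) -> list[int]:
    """Positions retained by a sink-plus-recent-window eviction policy.

    Hypothetical helper: keeps the first `num_sink` tokens (attention
    sinks) and the most recent `window` tokens, dropping the middle.
    """
    if seq_len <= num_sink + window:
        return list(range(seq_len))  # nothing to evict yet
    sinks = list(range(num_sink))
    recent = list(range(seq_len - window, seq_len))
    return sinks + recent


# Llama-3.1-8B layout: 32 layers, 8 KV heads (grouped-query attention),
# head dimension 128, 2 bytes per cached element.
full = kv_cache_bytes(tokens=131072, layers=32, kv_heads=8, head_dim=128)

# Assumed budget for illustration only: cap the cache at 32,768 tokens.
capped = kv_cache_bytes(tokens=32768, layers=32, kv_heads=8, head_dim=128)

print(full / 2**30)    # GiB for an uncompressed 128K-token cache
print(full // capped)  # reduction factor under the assumed budget

# Toy example: 10 tokens, 2 sinks, window of 3.
print(sink_window_keep_indices(10, num_sink=2, window=3))
```

Because the compressed cache has a fixed upper bound on retained positions, its tensor shapes do not grow with sequence length, which is one reason a bounded policy of this kind is easier to fit into a static-graph runtime than an unbounded cache. How SnapStream actually selects and stores positions is described in the paper, not here.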

Submission history

From: Jonathan Lee

[v1] Wednesday, 5 November 2025, 00:38:31 UTC (338 KB)

[v2] Thursday, 6 November 2025, 18:27:11 UTC (339 KB)

[v3] Friday, 7 November 2025, 19:27:58 UTC (339 KB)

[v4] Friday, 14 November 2025, 19:14:59 UTC (339 KB)

[v5] Wednesday, 10 December 2025, 00:29:21 UTC (339 KB)

2025-12-11 05:00:00