EmbodiedGen: A Scalable 3D World Generator for Realistic Embodied AI Simulations

The Challenge of Scaling 3D Environments in Embodied AI

Creating scalable, realistic 3D environments is essential for training and evaluating embodied AI. However, current methods still rely on manually created 3D graphics, which are costly and lack realism, limiting scalability and generalization. Unlike the internet-scale data used to train models such as GPT and CLIP, embodied AI data is expensive, context-specific, and difficult to reuse. Reaching general-purpose intelligence in physical settings requires realistic simulation, reinforcement learning, and diverse 3D assets. While recent diffusion models and 3D generation techniques show promise, many still lack key features such as physical accuracy, watertight geometry, and correct scale, which makes them inadequate for robotic training environments.

Limitations of Current 3D Generation Techniques

3D object generation typically follows three main approaches:

- feedforward generation for fast results;
- optimization-based methods for higher quality;
- multi-view reconstruction from several images.

Recent techniques have improved realism by separating geometry and texture creation. Even so, many models still prioritize visual appearance over real-world physics. This makes them less suitable for simulation, which requires accurate geometry at the correct scale. For 3D scenes, panoramic techniques have enabled full-view rendering, but these scenes still lack interactivity. Some tools try to enrich simulation environments with generated assets. However, their quality and diversity remain limited and fall short of what complex embodied intelligence research needs.
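Watertight geometry is one of the properties that separates simulation-ready assets from purely visual ones. A mesh that is closed can be used for collision checking and volume or mass estimation, while an open mesh cannot. The following is a minimal sketch, not EmbodiedGen's code, of the common edge-based check: a triangle mesh passes when every undirected edge is shared by exactly two faces. The function name `is_watertight` is hypothetical. The test also ignores vertex positions, so it does not detect self-intersections or inconsistent winding.

```python
from collections import Counter
from typing import Iterable, Sequence

Face = Sequence[int]  # vertex indices of one triangle

def is_watertight(faces: Iterable[Face]) -> bool:
    """Return True if every undirected edge is shared by exactly two faces.

    This is a necessary condition for a closed surface; it only inspects
    connectivity, not vertex positions or face orientation.
    """
    edge_counts: Counter = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edge_counts[(min(u, v), max(u, v))] += 1  # store edges unordered
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())

# A tetrahedron is closed; dropping one face opens a hole.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_watertight(tetra))
print(is_watertight(tetra[:-1]))
```

The first call reports a closed mesh. The second reports an open one, because three edges are left with only one adjacent face.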

Introducing EmbodiedGen: Open-Source, Modular, and Simulation-Ready

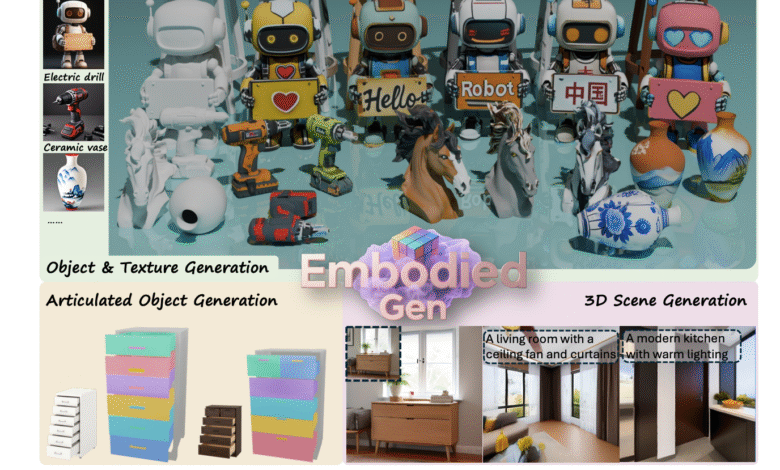

EmbodiedGen is an open-source framework developed by researchers from Horizon Robotics, the Chinese University of Hong Kong, Shanghai Qi Zhi Institute, and Tsinghua University. It is designed to generate realistic, scalable 3D assets tailored for embodied AI tasks. The platform outputs physically accurate 3D objects in URDF format, along with metadata for simulation compatibility. It has six modular components, including image-to-3D, text-to-3D, layout generation, and object rearrangement, which together enable controllable and efficient scene creation. By bridging the gap between traditional 3D graphics and robotics-ready assets, EmbodiedGen makes it easier to build interactive environments for embodied intelligence research at scale and at low cost.
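The article does not describe EmbodiedGen's URDF exporter or its metadata schema. The following is therefore a hypothetical sketch of what a minimal single-link URDF for a generated rigid object contains: a mesh for visuals and collision, a mass, and an inertia tensor. The helper names `make_urdf` and `box_inertia`, the mesh path, and the density value are all illustrative assumptions. Mass and inertia are approximated from a solid bounding box, using the standard formulas for a box of side lengths $x, y, z$: $I_{xx} = \tfrac{m}{12}(y^2+z^2)$, and similarly for the other axes.

```python
import xml.etree.ElementTree as ET

def box_inertia(mass: float, x: float, y: float, z: float) -> tuple[float, float, float]:
    """Principal moments of inertia (kg*m^2) of a solid box, dimensions in meters."""
    return (
        mass / 12.0 * (y * y + z * z),
        mass / 12.0 * (x * x + z * z),
        mass / 12.0 * (x * x + y * y),
    )

def make_urdf(name: str, mesh_file: str, size_m: tuple[float, float, float],
              density_kg_m3: float) -> str:
    """Build a single-link URDF string for a rigid object (hypothetical helper).

    Mass and inertia come from the bounding box; a real pipeline would
    integrate over the actual (watertight) mesh volume instead.
    """
    x, y, z = size_m
    mass = density_kg_m3 * x * y * z
    ixx, iyy, izz = box_inertia(mass, x, y, z)

    robot = ET.Element("robot", name=name)
    link = ET.SubElement(robot, "link", name="base_link")

    inertial = ET.SubElement(link, "inertial")
    ET.SubElement(inertial, "origin", xyz="0 0 0", rpy="0 0 0")
    ET.SubElement(inertial, "mass", value=f"{mass:.6g}")
    ET.SubElement(inertial, "inertia", ixx=f"{ixx:.6g}", ixy="0", ixz="0",
                  iyy=f"{iyy:.6g}", iyz="0", izz=f"{izz:.6g}")

    # The same mesh serves rendering and collision here; simulators often
    # prefer a simplified (e.g. convex) collision mesh.
    for tag in ("visual", "collision"):
        geometry = ET.SubElement(ET.SubElement(link, tag), "geometry")
        ET.SubElement(geometry, "mesh", filename=mesh_file)

    return ET.tostring(robot, encoding="unicode")

# Hypothetical 9 x 9 x 10 cm mug with an assumed effective density of 400 kg/m^3.
urdf = make_urdf("mug", "meshes/mug.obj", (0.09, 0.09, 0.10), density_kg_m3=400.0)
print(urdf)
```

Because everything is expressed in meters and kilograms, the resulting mass (about 0.324 kg) and inertia can be consumed directly by a physics engine. This is why correct real-world scale matters as much as visual quality.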

Key Features: Multimodal Generation for Rich 3D Content

EmbodiedGen is a versatile toolkit for creating realistic, interactive 3D environments tailored to embodied AI tasks. It combines several generation modules: converting images or text into detailed 3D objects, creating articulated objects with movable parts, and generating diverse textures to improve visual quality. It also supports full scene construction by arranging these assets in a way that respects real-world physical properties. The output is directly compatible with simulation platforms, which makes building lifelike virtual worlds easier and more affordable. As a result, researchers can simulate real-world scenarios without relying on expensive manual modeling.
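The article does not explain how EmbodiedGen's layout and rearrangement modules arrange assets. As a stand-in technique, the following sketch uses simple greedy rejection sampling to illustrate what "respecting physical properties" means at its most basic. Objects are given real-world sizes in meters. They must stay on the tabletop, must not overlap each other, and must rest on the surface rather than float or sink. The names `Placement`, `overlaps`, and `place_on_table`, the margin, and all object sizes are hypothetical.

```python
import random
from dataclasses import dataclass

@dataclass
class Placement:
    name: str
    x: float  # footprint center on the tabletop, meters
    y: float
    z: float  # center height, chosen so the object rests on the surface
    w: float  # footprint width along x, meters
    d: float  # footprint depth along y, meters

def overlaps(a: Placement, b: Placement, margin: float) -> bool:
    """Axis-aligned footprint overlap test with a clearance margin."""
    return (abs(a.x - b.x) < (a.w + b.w) / 2 + margin
            and abs(a.y - b.y) < (a.d + b.d) / 2 + margin)

def place_on_table(objects, table_w, table_d, table_h,
                   margin=0.01, tries=200, seed=0):
    """Greedy rejection sampling over a table centered at the origin.

    objects: list of (name, (width, depth, height)) in meters.
    Each object is tried at random positions until its footprint stays on
    the table and clears every object placed so far.
    """
    rng = random.Random(seed)
    placed = []
    for name, (w, d, h) in objects:
        if w > table_w or d > table_d:
            raise ValueError(f"{name!r} is larger than the table")
        for _ in range(tries):
            x = rng.uniform(-(table_w - w) / 2, (table_w - w) / 2)
            y = rng.uniform(-(table_d - d) / 2, (table_d - d) / 2)
            cand = Placement(name, x, y, table_h + h / 2, w, d)
            if not any(overlaps(cand, p, margin) for p in placed):
                placed.append(cand)
                break
        else:  # loop finished without break: every try collided
            raise RuntimeError(f"could not place {name!r} without overlap")
    return placed

objects = [("mug", (0.09, 0.09, 0.10)),
           ("bowl", (0.15, 0.15, 0.06)),
           ("box", (0.20, 0.12, 0.08))]
layout = place_on_table(objects, table_w=1.0, table_d=0.6, table_h=0.75)
for p in layout:
    print(f"{p.name}: x={p.x:.3f} y={p.y:.3f} z={p.z:.3f}")
```

A production system would also handle rotations and semantic constraints (for example, "the mug goes in front of the bowl"). It would typically settle the final scene in a physics engine; the sketch only enforces scale, support, and non-overlap.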

Simulation Integration and Real-World Physical Accuracy

EmbodiedGen is a powerful and accessible platform for generating high-quality 3D assets tailored to embodied intelligence research. Its key modules let users create assets from images or text, generate articulated and textured objects, and build realistic scenes. These assets are watertight, photorealistic, and physically accurate, which makes them well suited to simulation-based training and evaluation in robotics. The platform integrates with popular simulation environments, including OpenAI Gym, MuJoCo, Isaac Lab, and SAPIEN. Researchers can therefore simulate tasks such as navigation, object manipulation, and obstacle avoidance efficiently and at low cost.
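Simulators such as MuJoCo, Isaac Lab, and SAPIEN typically assume SI units, so geometry is read in meters. A visually convincing asset with an arbitrary scale will therefore have the wrong mass, contact behavior, and reachability. The following hypothetical sketch (not EmbodiedGen's code) shows the simplest fix: a generated mesh, here assumed to be normalized to the cube [-1, 1]^3, is uniformly rescaled to a target real-world height and shifted so it rests at z = 0. The function name `rescale_to_height` and the 10 cm target height are illustrative assumptions.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def rescale_to_height(vertices: List[Vec3], target_height_m: float) -> List[Vec3]:
    """Uniformly scale a mesh so its z-extent equals target_height_m (meters)
    and shift it so its lowest vertex sits at z = 0."""
    zs = [v[2] for v in vertices]
    z_min, z_max = min(zs), max(zs)
    if z_max <= z_min:
        raise ValueError("mesh has zero height along z")
    s = target_height_m / (z_max - z_min)  # one factor for all axes keeps proportions
    return [(x * s, y * s, (z - z_min) * s) for x, y, z in vertices]

# Corners of a unit-normalized generator output, resized to a hypothetical 10 cm mug.
unit_cube_corners = [(-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)]
print(rescale_to_height(unit_cube_corners, 0.10))
```

Using a single scale factor preserves the object's proportions. The open question in a real pipeline is where the target size comes from, for example a category prior or a size estimate produced during generation.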

RoboSplatter: 3DGS Rendering for Simulation

A standout feature is RoboSplatter, which brings 3D Gaussian Splatting (3DGS) into physical simulation. Compared with traditional graphics pipelines, RoboSplatter improves visual fidelity while reducing computational overhead. Through modules such as texture generation and real-to-sim conversion, users can edit the appearance of 3D assets or recreate real-world scenes with high realism. Overall, EmbodiedGen simplifies the creation of scalable, interactive 3D worlds, bridging the gap between real-world robotics and digital simulation. It is openly available as a user-friendly toolkit to support broader adoption and continued innovation in embodied AI research.
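The article does not describe RoboSplatter's internals. The following sketch therefore shows only the generic per-pixel compositing step from the original 3D Gaussian Splatting formulation, not RoboSplatter's implementation. Each Gaussian, already projected to screen space, contributes an opacity $\alpha_i = o_i \exp(-\tfrac{1}{2} d^\top \Sigma^{-1} d)$ at the pixel. Contributions are blended front to back as $C = \sum_i c_i \alpha_i T_i$, where the transmittance is $T_i = \prod_{j<i}(1-\alpha_j)$. Camera projection and tile-based rasterization are omitted. The class and function names are hypothetical, and the 0.99 alpha clamp mirrors the one used in the reference 3DGS rasterizer.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Splat2D:
    """A 3D Gaussian already projected to screen space."""
    depth: float                       # camera-space depth, used for sorting
    mean: Tuple[float, float]          # 2D center in pixels
    cov: Tuple[float, float, float]    # 2D covariance (a, b, c) = [[a, b], [b, c]]
    opacity: float                     # learned opacity in [0, 1]
    color: Tuple[float, float, float]  # RGB in [0, 1]

def gaussian_alpha(s: Splat2D, px: float, py: float) -> float:
    """alpha = opacity * exp(-0.5 * d^T Sigma^-1 d) at pixel (px, py), clamped to 0.99."""
    a, b, c = s.cov
    det = a * c - b * b
    dx, dy = px - s.mean[0], py - s.mean[1]
    # Inverse of [[a, b], [b, c]] is [[c, -b], [-b, a]] / det.
    maha = (c * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det
    return min(0.99, s.opacity * math.exp(-0.5 * maha))

def shade_pixel(splats: List[Splat2D], px: float, py: float,
                t_min: float = 1e-4) -> Tuple[float, float, float]:
    """Front-to-back alpha compositing: C = sum_i c_i * alpha_i * T_i."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_i: fraction of light not yet absorbed
    for s in sorted(splats, key=lambda g: g.depth):  # nearest first
        alpha = gaussian_alpha(s, px, py)
        for k in range(3):
            rgb[k] += s.color[k] * alpha * transmittance
        transmittance *= 1.0 - alpha
        if transmittance < t_min:  # pixel is effectively opaque; stop early
            break
    return (rgb[0], rgb[1], rgb[2])

# A half-transparent red splat in front of an opaque blue one, both centered on the pixel.
red = Splat2D(1.0, (10.0, 10.0), (4.0, 0.0, 4.0), 0.5, (1.0, 0.0, 0.0))
blue = Splat2D(2.0, (10.0, 10.0), (4.0, 0.0, 4.0), 1.0, (0.0, 0.0, 1.0))
print(shade_pixel([blue, red], 10.0, 10.0))
```

Although the list is passed back-to-front, sorting by depth puts the red splat first. Red contributes 0.5 to the red channel and leaves half the transmittance. Blue, with its alpha clamped to 0.99, then contributes 0.99 × 0.5 ≈ 0.495 to the blue channel.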

Why This Research Matters

This research addresses a core bottleneck in embodied AI: the lack of scalable, realistic, physics-compatible 3D environments for training and evaluation. Internet-scale data has driven progress in vision and language models, but embodied intelligence demands simulation-ready assets with accurate scale, geometry, and interactivity. Traditional 3D generation pipelines often lack these qualities. EmbodiedGen fills this gap with an open-source, modular platform that produces high-quality 3D objects and scenes compatible with major robotics simulators. Because it can turn text and images into physically plausible 3D environments, it is a foundational tool for advancing embodied AI research, digital twins, and real-to-sim learning.

Check out the Paper and Project Page. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to real-world challenges. With a keen interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.


2025-06-22 20:18:00